Windows Azure and Cloud Computing Posts for 9/9 and 9/16/2013+

Top Stories These Weeks:

- Vittorio Bertocci (@vibronet) reported Active Directory Authentication Library (ADAL) v1 for .NET – General Availability! on 9/12/2013 in the Windows Azure Access Control, Active Directory, and Identity section below.

- Dan Holme (@danholme) announced that Windows Azure became the very first cloud service provider to pass a new security compliance audit on 9/18/2013 in the Cloud Security, Compliance and Governance section below.

- Joe Giordano of the Windows Azure Storage Team posted Announcing Storage Client Library 2.1 RTM & CTP for Windows Phone on 9/6/2013 in the Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services section.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

• Updated 9/22/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

<Return to section navigation list>

Joe Giordano of the Windows Azure Storage Team posted Announcing Storage Client Library 2.1 RTM & CTP for Windows Phone on 9/6/2013:

We are pleased to announce that the storage client for .NET 2.1 has RTM’d. This release includes several notable features such as Async Task methods, IQueryable for Tables, buffer pooling support, and much more. In addition, we are releasing the CTP of the storage client for Windows Phone 8. With the existing support for Windows Runtime, clients can now leverage Windows Azure Storage via a consistent API surface across multiple Windows platforms. As usual, all of the source code is available via GitHub (see the Resources section below). You can download the latest binaries via the following NuGet packages:

- Nuget – 2.1 RTM

- Nuget – 2.1 For Windows Phone and Windows RunTime (Preview)

- Nuget – 2.1 Tables Extension library for Non-JavaScript Windows RunTime apps (Preview)

The remainder of this blog post covers some of the new features and scenarios in additional detail and provides supporting code samples. As always we appreciate your feedback, so please feel free to add comments below.

Fundamentals

For this release we focused heavily on fundamentals by dramatically expanding test coverage, and building an automated performance suite that let us benchmark performance behaviors across various high scale scenarios.

Here are a few highlights for the 2.1 release:

- Over 1000 publicly available Unit tests covering every public API

- Automated Performance testing to validate performance impacting changes

- Expanded Stress testing to ensure data correctness under massive loads

- Key performance improving features that target memory behavior and shared infrastructure (more details below)

Performance

We are always looking for ways to improve the performance of client applications by improving the storage client itself and by exposing new features that better allow clients to optimize their applications. In this release we have done both and the results are dramatic.

For example, below are the results from one of the test scenarios we execute where a single XL VM round trips 30 256MB Blobs simultaneously (7.5 GB in total). As you can see there are dramatic improvements in both latency and CPU usage compared to SDK 1.7 (CPU drops almost 40% while latency is reduced by 16.5% for uploads and 23.2% for downloads). Additionally, you may note that the actual latency improvements between 2.0.5.1 and 2.1 are only a few percentage points. This is because we have successfully removed the client from the critical path, resulting in an application that is now entirely dependent on the network. Further, while latency in this scenario improved only slightly, CPU usage has dropped another 13% on average compared to SDK 2.0.5.1.

This is just one example of the performance improvements we have made. For more on performance as well as best practices, please see the Tech Ed Presentation in the Resources section below.

Async Task Methods

Each public API now exposes an Async method that returns a task for a given operation. Additionally, these methods support pre-emptive cancellation via an overload which accepts a CancellationToken. If you are running under .NET 4.5, or using the Async Targeting Pack for .NET 4.0, you can easily leverage the async / await pattern when writing your applications against storage.

Buffer Pooling

For high scale applications, Buffer Pooling is a great strategy to allow clients to re-use existing buffers across many operations. In a managed environment such as .NET, this can dramatically reduce the number of cycles spent allocating and subsequently garbage collecting semi-long lived buffers.

To address this scenario each Service Client now exposes a BufferManager property of type IBufferManager. This property allows clients to share a given buffer pool across all objects associated with that service client instance. For example, all CloudTable objects created via CloudTableClient.GetTableReference() would make use of the associated service client's BufferManager. The IBufferManager is patterned after the BufferManager in System.ServiceModel.dll to allow desktop clients to easily leverage an existing implementation provided by the framework. (Clients running on other platforms such as Windows Runtime or Windows Phone may implement a pool against the IBufferManager interface.)
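
For platforms without System.ServiceModel, a pool along the following lines could sit behind the IBufferManager interface. This is a minimal sketch rather than anything shipped with the library: the class name SimpleBufferPool and its constructor parameters are hypothetical, and the three method signatures are copied from the adapter shown later in this post. To plug it into a service client, the class would additionally need to declare that it implements IBufferManager.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical fixed-size buffer pool. Method names mirror the IBufferManager
// members used by the adapter in this post; add ": IBufferManager" (from
// Microsoft.WindowsAzure.Storage) to use it with a service client.
public class SimpleBufferPool
{
    private readonly ConcurrentBag<byte[]> pool = new ConcurrentBag<byte[]>();
    private readonly int bufferSize;
    private readonly int maxPooledBuffers;

    public SimpleBufferPool(int bufferSize, int maxPooledBuffers)
    {
        if (bufferSize <= 0) throw new ArgumentOutOfRangeException("bufferSize");
        if (maxPooledBuffers < 0) throw new ArgumentOutOfRangeException("maxPooledBuffers");
        this.bufferSize = bufferSize;
        this.maxPooledBuffers = maxPooledBuffers;
    }

    public byte[] TakeBuffer(int requestedSize)
    {
        // Oversized requests bypass the pool and are allocated directly.
        if (requestedSize > this.bufferSize) return new byte[requestedSize];

        byte[] buffer;
        return this.pool.TryTake(out buffer) ? buffer : new byte[this.bufferSize];
    }

    public void ReturnBuffer(byte[] buffer)
    {
        if (buffer == null) throw new ArgumentNullException("buffer");

        // Keep only standard-size buffers and cap how many are retained.
        // The count check is approximate under heavy concurrency.
        if (buffer.Length == this.bufferSize && this.pool.Count < this.maxPooledBuffers)
        {
            this.pool.Add(buffer);
        }
    }

    public int GetDefaultBufferSize()
    {
        return this.bufferSize;
    }
}
```

Returned buffers are not cleared, so callers should treat the contents of a buffer obtained from TakeBuffer as undefined.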

For desktop applications to leverage the built in BufferManager provided by the System.ServiceModel.dll a simple adapter is required:

    using Microsoft.WindowsAzure.Storage;
    using System.ServiceModel.Channels;

    public class WCFBufferManagerAdapter : IBufferManager
    {
        private int defaultBufferSize = 0;

        public WCFBufferManagerAdapter(BufferManager manager, int defaultBufferSize)
        {
            this.Manager = manager;
            this.defaultBufferSize = defaultBufferSize;
        }

        public BufferManager Manager { get; internal set; }

        public void ReturnBuffer(byte[] buffer)
        {
            this.Manager.ReturnBuffer(buffer);
        }

        public byte[] TakeBuffer(int bufferSize)
        {
            return this.Manager.TakeBuffer(bufferSize);
        }

        public int GetDefaultBufferSize()
        {
            return this.defaultBufferSize;
        }
    }

With this in place my application can now specify a shared buffer pool across any resource associated with a given service client by simply setting the BufferManager property.

    BufferManager mgr = BufferManager.CreateBufferManager([MaxBufferPoolSize], [MaxBufferSize]);
    serviceClient.BufferManager = new WCFBufferManagerAdapter(mgr, [MaxBufferSize]);

Multi-Buffer Memory Stream

During the course of our performance investigations we uncovered a few performance issues with the MemoryStream class provided in the BCL (specifically regarding Async operations, dynamic length behavior, and single byte operations). To address these issues we have implemented a new Multi-Buffer memory stream which provides consistent performance even when the length of the data is unknown. If the client provides an IBufferManager, this class uses it to draw additional buffers from the buffer pool. As a result, any operation on any service that potentially buffers data (Blob Streams, Table Operations, etc.) now consumes less CPU and makes optimal use of a shared memory pool.

.NET MD5 is now default

Our performance testing highlighted a slight performance degradation when utilizing the FISMA compliant native MD5 implementation compared to the built in .NET implementation. As such, for this release the .NET MD5 is now used by default; any clients requiring FISMA compliance can re-enable the native implementation as shown below:

CloudStorageAccount.UseV1MD5 = false;

New Range Based Overloads

In 2.1, Blob upload APIs include an overload which allows clients to upload only a given range of the byte array or stream to the blob. This feature allows clients to avoid potentially pre-buffering data prior to uploading it to the storage service. Additionally, there are new download range APIs for both streams and byte arrays that allow efficient fault tolerant range downloads without the need to buffer any data on the client side.

Client Tracing

The 2.1 release implements .NET Tracing, allowing users to enable log information regarding request execution and REST requests (See below for a table of what information is logged). Additionally, Windows Azure Diagnostics provides a trace listener that can redirect client trace messages to the WADLogsTable if users wish to persist these traces to the cloud.

Logged Data

Each log line will include the following data:

- Client Request ID: Per request ID that is specified by the user in OperationContext

- Event: Free-form text

As part of each request the following data will be logged to make it easier to correlate client-side logs to server-side logs:

- Request: Request Uri

- Response: Request ID, HTTP status code

Trace Levels

Off: Nothing will be logged.

Error: If an exception cannot or will not be handled internally and will be thrown to the user, it will be logged as an error.

Warning: If an exception is caught and handled internally, it will be logged as a warning. Primary use case for this is the retry scenario, where an exception is not thrown back to the user to be able to retry. It can also happen in operations such as CreateIfNotExists, where we handle the 404 error silently.

Informational: The following info will be logged:

- Right after the user calls a method to start an operation, request details such as URI and client request ID will be logged.

- Important milestones such as Sending Request Start/End, Upload Data Start/End, Receive Response Start/End, Download Data Start/End will be logged to mark the timestamps.

- Right after the headers are received, response details such as request ID and HTTP status code will be logged.

- If an operation fails and the storage client decides to retry, the reason for that decision will be logged along with when the next retry is going to happen.

- All client-side timeouts will be logged when storage client decides to abort a pending request.

Verbose: The following info will be logged:

- String-to-sign for each request

- Any extra details specific to operations (this is up to each operation to define and use)

Enabling Tracing

A key concept is the opt-in / opt-out model that the client provides for tracing. In typical applications it is customary to enable tracing at a given verbosity for a specific class. This works fine for many client applications; however, for cloud applications that are executing at scale this approach may generate much more data than the user requires. As such we have provided the ability for clients to work in an opt-in model for tracing, which allows clients to configure listeners at a given verbosity but only log specific requests if and when they choose. Essentially this design provides the ability for users to perform “vertical” logging across layers of the stack targeted at specific requests rather than “horizontal” logging which would record all traffic seen by a specific class or layer.

To enable tracing in .NET you must add a trace source for the storage client to the app.config and set the verbosity:

    <system.diagnostics>
      <sources>
        <source name="Microsoft.WindowsAzure.Storage">
          <listeners>
            <add name="myListener"/>
          </listeners>
        </source>
      </sources>
      <switches>
        <add name="Microsoft.WindowsAzure.Storage" value="Verbose" />
      </switches>
      …

Then add a listener to record the output; in this case we will simply record it to application.log:

      <sharedListeners>
        <add name="myListener"
             type="System.Diagnostics.TextWriterTraceListener"
             initializeData="application.log"/>
      </sharedListeners>

The application is now set to log all trace messages created by the storage client up to the Verbose level. However, if a client wishes to enable logging only for specific clients or requests, they can further configure the default logging level in their application by setting OperationContext.DefaultLogLevel and then opt in any specific requests via the OperationContext object:

    // Disable Default Logging
    OperationContext.DefaultLogLevel = LogLevel.Off;

    // Configure a context to track my upload and set logging level to verbose
    OperationContext myContext = new OperationContext() { LogLevel = LogLevel.Verbose };

    // The OperationContext is the last argument; no access condition or request options are supplied here
    blobRef.UploadFromStream(stream, null /* accessCondition */, null /* options */, myContext);

With client side tracing used in conjunction with storage logging, clients can now get a complete view of their application from both the client and server perspectives.

Blob Features

Blob Streams

In the 2.1 release, we improved blob streams that are created by the OpenRead and OpenWrite APIs of CloudBlockBlob and CloudPageBlob. The write stream returned by OpenWrite can now upload much faster when the parallel upload functionality is enabled, by keeping the number of active writers at a certain level. Moreover, the return type is changed from a Stream to a new type named CloudBlobStream, which is derived from Stream. CloudBlobStream offers the following new APIs:

    public abstract ICancellableAsyncResult BeginCommit(AsyncCallback callback, object state);
    public abstract ICancellableAsyncResult BeginFlush(AsyncCallback callback, object state);
    public abstract void Commit();
    public abstract void EndCommit(IAsyncResult asyncResult);
    public abstract void EndFlush(IAsyncResult asyncResult);

Flush already exists in Stream itself, so CloudBlobStream only adds an asynchronous version. However, Commit is a completely new API that now allows the caller to commit before disposing the Stream. This allows much easier exception handling during commit and also the ability to commit asynchronously.

The read stream returned by OpenRead does not have a new type, but it now has true synchronous and asynchronous implementations. Clients can now get the stream synchronously via OpenRead or asynchronously using [Begin|End]OpenRead. Moreover, after the stream is opened, all synchronous calls such as querying the length or the Read API itself are truly synchronous, meaning that they do not call any asynchronous APIs internally.

Table Features

IgnorePropertyAttribute

When persisting POCO objects to Windows Azure Tables in some cases clients may wish to omit certain client only properties. In this release we are introducing the IgnorePropertyAttribute to allow clients an easy way to simply ignore a given property during serialization and de-serialization of an entity. The following snippet illustrates how to ignore my FirstName property of my entity via the IgnorePropertyAttribute:

    public class Customer : TableEntity
    {
        [IgnoreProperty]
        public string FirstName { get; set; }
    }

Compiled Serializers

When working with POCO types previous releases of the SDK relied on reflection to discover all applicable properties for serialization / de-serialization at runtime. This process was both repetitive and expensive computationally. In 2.1 we are introducing support for Compiled Expressions which will allow the client to dynamically generate a LINQ expression at runtime for a given type. This allows the client to do the reflection process once and then compile a Lambda at runtime which can now handle all future read and writes of a given entity type. In performance micro-benchmarks this approach is roughly 40x faster than the reflection based approach computationally.

All compiled expressions for read and write are held in static concurrent dictionaries on TableEntity. If you wish to disable this feature simply set TableEntity.DisableCompiledSerializers = true;
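
The "reflect once, then compile" idea can be illustrated with plain System.Linq.Expressions. The sketch below is not the storage client's serializer: PropertyReader<T>, its members, and the Person type are hypothetical names chosen for illustration, and it only covers reading properties, not writing them.

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;
using System.Reflection;

// Hypothetical helper: discovers the public readable properties of T once,
// compiles a getter delegate for each, and reuses those delegates afterwards.
public static class PropertyReader<T>
{
    // Built once per closed type T by the static initializer.
    private static readonly Dictionary<string, Func<T, object>> Getters = BuildGetters();

    private static Dictionary<string, Func<T, object>> BuildGetters()
    {
        var getters = new Dictionary<string, Func<T, object>>();
        foreach (PropertyInfo property in typeof(T).GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            // Skip properties without a public getter, and indexers.
            if (property.GetGetMethod() == null || property.GetIndexParameters().Length > 0) continue;

            // Builds the lambda: (T entity) => (object)entity.Property
            ParameterExpression entity = Expression.Parameter(typeof(T), "entity");
            Expression body = Expression.Convert(Expression.Property(entity, property), typeof(object));
            getters[property.Name] = Expression.Lambda<Func<T, object>>(body, entity).Compile();
        }
        return getters;
    }

    // Reads every property through the cached delegates; no reflection happens here.
    public static IDictionary<string, object> Read(T instance)
    {
        var values = new Dictionary<string, object>();
        foreach (KeyValuePair<string, Func<T, object>> getter in Getters)
        {
            values[getter.Key] = getter.Value(instance);
        }
        return values;
    }
}

// Hypothetical sample type used only to exercise the helper.
public class Person
{
    public string FirstName { get; set; }
    public int Age { get; set; }
}
```

Calling PropertyReader<Person>.Read(somePerson) pays the reflection and compilation cost on first use of the Person type only; later calls go straight through the compiled delegates, which is the effect the compiled serializers aim for.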

Serialize 3rd Party Objects

In some cases clients wish to serialize objects for which they do not control the source, for example framework objects or objects from 3rd party libraries. In previous releases clients were required to write custom serialization logic for each type they wished to serialize. In the 2.1 release we are exposing the core serialization and de-serialization logic for any CLR type. This allows clients to easily persist and read back entity objects for types that do not derive from TableEntity or implement the ITableEntity interface. This pattern can also be especially useful when exposing DTO types via a service as the client will no longer be required to maintain two entity types and marshal between them.

A general purpose adapter pattern can be used which allows clients to simply wrap an object instance in a generic adapter that handles serialization for a given type. The example below illustrates this pattern:

    using System;
    using System.Collections.Generic;
    using Microsoft.WindowsAzure.Storage;
    using Microsoft.WindowsAzure.Storage.Table;

    public class EntityAdapter<T> : ITableEntity where T : new()
    {
        public EntityAdapter()
        {
            // If you would like to work with objects that do not have a default Ctor you can use (T)Activator.CreateInstance(typeof(T));
            this.InnerObject = new T();
        }

        public EntityAdapter(T innerObject)
        {
            this.InnerObject = innerObject;
        }

        public T InnerObject { get; set; }

        /// <summary>
        /// Gets or sets the entity's partition key.
        /// </summary>
        /// <value>The partition key of the entity.</value>
        // TODO: Must implement logic to map PartitionKey to object here!
        public string PartitionKey { get; set; }

        /// <summary>
        /// Gets or sets the entity's row key.
        /// </summary>
        /// <value>The row key of the entity.</value>
        // TODO: Must implement logic to map RowKey to object here!
        public string RowKey { get; set; }

        /// <summary>
        /// Gets or sets the entity's timestamp.
        /// </summary>
        /// <value>The timestamp of the entity.</value>
        public DateTimeOffset Timestamp { get; set; }

        /// <summary>
        /// Gets or sets the entity's current ETag. Set this value to '*' in order to blindly overwrite an entity as part of an update operation.
        /// </summary>
        /// <value>The ETag of the entity.</value>
        public string ETag { get; set; }

        public virtual void ReadEntity(IDictionary<string, EntityProperty> properties, OperationContext operationContext)
        {
            TableEntity.ReadUserObject(this.InnerObject, properties, operationContext);
        }

        public virtual IDictionary<string, EntityProperty> WriteEntity(OperationContext operationContext)
        {
            return TableEntity.WriteUserObject(this.InnerObject, operationContext);
        }
    }

The following example uses the EntityAdapter pattern to insert a DTO object directly to the table via the adapter:

    table.Execute(TableOperation.Insert(new EntityAdapter<CustomerDTO>(customer)));

Further I can retrieve this entity back via:

    testTable.Execute(TableOperation.Retrieve<EntityAdapter<CustomerDTO>>(pk, rk)).Result;

Note, the Compiled Serializer functionality will be utilized for any types serialized or deserialized via TableEntity.[Read|Write]UserObject.

Table IQueryable

In 2.1 we are adding IQueryable support for the Table Service layer on desktop and phone. This will allow users to construct and execute queries via LINQ similar to WCF Data Services, however this implementation has been specifically optimized for Windows Azure Tables and NoSQL concepts. The snippet below illustrates constructing a query via the new IQueryable implementation:

    var query = from ent in currentTable.CreateQuery<CustomerEntity>()
                where ent.PartitionKey == "users" && ent.RowKey == "joe"
                select ent;

The IQueryable implementation transparently handles continuations, and has support to add RequestOptions, OperationContext, and client side EntityResolvers directly into the expression tree. Additionally, since this makes use of existing infrastructure, optimizations such as IBufferManager, Compiled Serializers, and Logging are fully supported.

Note, to support IQueryable projections the type constraint on TableQuery of ITableEntity, new() has been removed. Instead, any TableQuery objects not created via the new CloudTable.CreateQuery<T>() method will enforce this constraint at runtime.

Conceptual model

We are committed to backwards compatibility; as such, we strive to introduce as few breaking changes as possible for existing clients. Therefore, in addition to supporting the new IQueryable mode of execution, we continue to support the 2.0.x “fluent” mode of constructing queries via the Where, Select, and Take methods. However, these modes are not strictly interoperable while constructing queries as they store data in different forms.

Aside from query construction, a key difference between the two modes is that the IQueryable interface requires that the query object be able to execute itself, as compared to the previous model of executing queries via a CloudTable object. A brief summary of these two modes of execution is listed below:

Fluent Mode (2.0.x)

- Queries are created by directly calling a constructor

- Queries are executed against a CloudTable object via ExecuteQuery[Segmented] methods

- EntityResolver specified in execute overload

- Fluent methods Where, Select, and Take are provided

IQueryable Mode (2.1+)

- Queries are created by an associated table, i.e. CloudTable.CreateQuery<T>()

- Queries are executed by enumerating the results, or by Execute[Segmented] methods on TableQuery

- EntityResolver specified via LINQ extension method Resolve

- IQueryable Extension Methods provided : WithOptions, WithContext, Resolve, AsTableQuery

The table below illustrates various scenarios between the two modes:

Construct Query

- Fluent Mode: TableQuery<ComplexEntity> stringQuery = new TableQuery<ComplexEntity>()

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>()

Filter

- Fluent Mode: q.Where(TableQuery.GenerateFilterCondition("val",QueryComparisons.GreaterThanOrEqual, 50));

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>() where ent.val >= 50 select ent);

Take

- Fluent Mode: q.Take(5);

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>() select ent).Take(5);

Projection

- Fluent Mode: q.Select(new List<string>() { "A", "C" })

- IQueryable Mode: TableQuery<ProjectedEntity> query = (from ent in table.CreateQuery<ComplexEntity>() select new ProjectedEntity(){a = ent.a,b = ent.b,c = ent.c…});

Entity Resolver

- Fluent Mode: currentTable.ExecuteQuery(query, resolver)

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>() select ent).Resolve(resolver);

Execution

- Fluent Mode: currentTable.ExecuteQuery(query)

- IQueryable Mode: foreach (ProjectedPOCO ent in query), or query.AsTableQuery().Execute(options, opContext)

Execution Segmented

- Fluent Mode: TableQuerySegment<Entity> seg = currentTable.ExecuteQuerySegmented(query, continuationToken, options, opContext);

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>() select ent).AsTableQuery().ExecuteSegmented(token, options, opContext);

Request Options

- Fluent Mode: currentTable.ExecuteQuery(query, options, null)

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>() select ent).WithOptions(options); or query.AsTableQuery().Execute(options, null)

Operation Context

- Fluent Mode: currentTable.ExecuteQuery(query, null, opContext)

- IQueryable Mode: TableQuery<ComplexEntity> query = (from ent in table.CreateQuery<ComplexEntity>() select ent).WithContext(opContext); or query.AsTableQuery().Execute(null, opContext)

Complete Query

The query below illustrates many of the supported extension methods and returns an enumerable of string values corresponding to the “Name” property on the entities.

var nameResults = (from ent in currentTable.CreateQuery<POCOEntity>()

where ent.Name == "foo"

select ent)

.Take(5)

.WithOptions(new TableRequestOptions())

.WithContext(new OperationContext())

.Resolve((pk, rk, ts, props, etag) => props["Name"].StringValue);

Note the three extension methods which allow a TableRequestOptions, an OperationContext, and an EntityResolver to be associated with a given query. These extensions are available by including a using statement for the Microsoft.WindowsAzure.Storage.Table.Queryable namespace.

The extension .AsTableQuery() is also provided; however, unlike the WCF implementation this is no longer mandatory. It simply allows clients more flexibility in query execution by providing additional methods for execution such as Task, APM, and segmented execution methods.

Projection

In traditional LINQ providers projection is handled via the select new keywords, which essentially performs two separate actions. The first is to analyze any properties that are accessed and send them to the server to allow it to only return desired columns, this is considered server side projection. The second is to construct a client side action which is executed for each returned entity, essentially instantiating and populating its properties with the data returned by the server, this is considered client side projection. In the implementation released in 2.1 we have allowed clients to separate these two different types of projections by allowing them to be specified separately in the expression tree. (Note, you can still use the traditional approach via select new if you prefer.)

Server Side Projection Syntax

For a simple scenario where you only wish to filter the properties returned by the server, a convenient helper is provided. This does not provide any client side projection functionality; it simply limits the properties returned by the service. Note, by default PartitionKey, RowKey, Timestamp, and ETag are always requested to allow for subsequent updates to the resulting entity.

    IQueryable<POCOEntity> projectionResult = from ent in currentTable.CreateQuery<POCOEntity>()
                                              select TableQuery.Project(ent, "a", "b");

This has the same effect as writing the following, but with improved performance and simplicity:

    IQueryable<POCOEntity> projectionResult = from ent in currentTable.CreateQuery<POCOEntity>()
                                              select new POCOEntity()
                                              {
                                                  PartitionKey = ent.PartitionKey,
                                                  RowKey = ent.RowKey,
                                                  Timestamp = ent.Timestamp,
                                                  a = ent.a,
                                                  b = ent.b
                                              };

Client Side Projection Syntax with resolver

For scenarios where you wish to perform custom client processing during deserialization the EntityResolver is provided to allow the client to inspect the data prior to determining its type or return value. This essentially provides an open ended hook for clients to control deserialization in any way they wish. The example below performs both a server side and a client side projection, projecting into a concatenated string of the “FirstName” and “LastName” properties.

    IQueryable<string> fullNameResults = (from ent in currentTable.CreateQuery<POCOEntity>()
                                          select TableQuery.Project(ent, "FirstName", "LastName"))
                                         .Resolve((pk, rk, ts, props, etag) => props["FirstName"].StringValue + props["LastName"].StringValue);

The EntityResolver can read the data directly off of the wire which avoids the step of de-serializing the data into the base entity type and then selecting out the final result from that “throw away” intermediate object. Since EntityResolver is a delegate type any client side projection logic can be implemented here (See the NoSQL section here for a more in depth example).

Type-Safe DynamicTableEntity Query Construction

The DynamicTableEntity type allows for clients to interact with schema-less data in a simple straightforward way via a dictionary of properties. However constructing type-safe queries against schema-less data presents a challenge when working with the IQueryable interface and LINQ in general as all queries must be of a given type which contains relevant type information for its properties. So for example, let’s say I have a table that has both customers and orders in it. Now if I wish to construct a query that filters on columns across both types of data I would need to create some dummy CustomerOrder super entity which contains the union of properties between the Customer and Order entities.

This is not ideal, and this is where the DynamicTableEntity comes in. The IQueryable implementation has provided a way to check for property access via the DynamicTableEntity Properties dictionary in order to provide for type-safe query construction. This allows the user to indicate to the client the property it wishes to filter against and its type. The sample below illustrates how to create a query of type DynamicTableEntity and construct a complex filter on different properties:

    TableQuery<DynamicTableEntity> res = from ent in table.CreateQuery<DynamicTableEntity>()
                                         where ent.Properties["customerid"].StringValue == "customer_1" ||
                                               ent.Properties["orderdate"].DateTimeOffsetValue > startDate
                                         select ent;

In the example above the IQueryable was smart enough to infer that the client is filtering on the “customerid” property as a string, and the “orderdate” as a DateTimeOffset and constructed the query accordingly.

Windows Phone Known Issue

The current CTP release contains a known issue where in some cases calling HttpWebRequest.Abort() may not result in the HttpWebRequest’s callback being called. As such, it is possible when cancelling an outstanding request the callback may be lost and the operation will not return. This issue will be addressed in a future release.

Summary

We are continuously making improvements to the developer experience for Windows Azure Storage and very much value your feedback. Please feel free to leave comments and questions below.

Resources

• Alexandre Brisebois (@Brisebois) described Preventing Jobs From Running Simultaneously on Multiple Role Instances with Blob Leasing (see post below) on 9/10/2013:

There are times where we need to schedule maintenance jobs to maintain our Windows Azure Cloud Services. Usually, it requires us to design our systems with an extra Role in order to host these jobs.

Adding this extra Role (Extra Small VM) costs about $178.56 a year!

But don’t worry! There’s another way to schedule jobs. You can use Blob leasing to control the number of concurrent executions of each job. You can also use Blob leasing to help distribute the jobs over multiple Role instances.

To limit the number of concurrent executions of a job, you need to acquire a Blob lease before you start to execute the job. If the acquisition fails, the job is already running elsewhere and the Role instance must wait for the lease to be released before trying to run the job.

To distribute jobs evenly over multiple Role instances, you can execute a single job per Role instance and have each role determine which job to execute based on the Blob lease that they successfully acquire.

In a previous post I mentioned that it’s better to acquire a short lease and to keep renewing it than to acquire an indefinite lease. This also applies to job scheduling, because if a Role is taken down for maintenance, other roles must be able to execute the job.

To help me manage this complexity, I created a Job Reservation Service that uses a Blob Lease Manager in order to regulate how jobs are executed throughout my Cloud Services. The rest of this post will demonstrate how to use the Job Reservation Service. …

Alexandre continues with source code listing for his Job Reservation Service.

• Alexandre Brisebois (@Brisebois) advised Don’t be Fooled, Blob Lease Management is Tricky Business in a 9/8/2013 post:

Leasing Blobs on Windows Azure Blob Storage Service establishes a lock for write and delete operations. The lock duration can be 15 to 60 seconds, or can be infinite.

Personally, I prefer to lease a blob for a short period of time, which I renew until I decide to release the lease. To some, it might seem more convenient to lease a blob indefinitely and to release it when they’re done. But on Windows Azure, Role instances can be taken offline for a number of reasons like maintenance updates. Blobs that are indefinitely leased can eventually be leased by a process that no longer exists.

Granted, leasing a blob for a short period of time and renewing the lease costs more, but it’s essential for highly scalable Windows Azure Cloud Services. In situations where a process that had the original lease is prevented from completing its task, another Role can take over.

Renewing blob leases requires extra work, but it’s really worth it when you think about it.

To help me deal with the complexity created by the necessity of continuously renewing the Blob lease, I created a Blob Lease Manager. It uses Reactive Extensions (Rx) to renew the Blob lease on a regular schedule that is based on the number of seconds specified when the lease was originally acquired.

Interesting Information About Blob Leases

Once a lease has expired, the lease ID is maintained by the Blob service until the blob is modified or leased again. A client may attempt to renew or release their lease using their expired lease ID and know that if the operation is successful, the blob has not been changed since the lease ID was last valid.

If the client attempts to renew or release a lease with their previous lease ID and the request fails, the client then knows that the blob was modified or leased again since their lease was last active. The client must then acquire a new lease on the blob.

If a lease expires rather than being explicitly released, a client may need to wait up to one minute before a new lease can be acquired for the blob. However, the client can renew the lease with their lease ID immediately if the blob has not been modified.
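The rules about expired lease IDs are easier to follow in a toy model. The sketch below is an assumption-laden simulation, not the Blob service: `LeasedBlob` is a hypothetical class, time is passed in explicitly, and the possible one-minute wait before a new lease can be acquired is deliberately left out.

```python
import uuid


class LeasedBlob:
    """Toy model of the lease rules quoted above; not the real Blob service."""

    def __init__(self):
        self.lease_id = None   # last lease id, remembered even after it expires
        self.expires_at = 0.0
        self.duration = 0.0

    def acquire(self, now, duration):
        if self.lease_id is not None and now < self.expires_at:
            raise RuntimeError("blob already has an active lease")
        # Leasing again replaces the remembered id, so the old id stops working.
        self.lease_id = str(uuid.uuid4())
        self.duration = duration
        self.expires_at = now + duration
        return self.lease_id

    def renew(self, now, lease_id):
        # An expired id can still be renewed while the service remembers it.
        if lease_id != self.lease_id:
            raise RuntimeError("lease id no longer valid; acquire a new lease")
        self.expires_at = now + self.duration

    def write(self, now, lease_id=None):
        active = self.lease_id is not None and now < self.expires_at
        if active and lease_id != self.lease_id:
            raise RuntimeError("blob is leased by someone else")
        if not active:
            # Modifying an unleased blob makes the service forget the old id.
            self.lease_id = None


# Case 1: the lease expired at t=15 but nobody touched the blob, so renewal works.
blob = LeasedBlob()
lease = blob.acquire(now=0, duration=15)
blob.renew(now=20, lease_id=lease)
renewed_until = blob.expires_at

# Case 2: the blob was modified after expiry, so the old lease id is rejected.
other = LeasedBlob()
old_lease = other.acquire(now=0, duration=15)
other.write(now=20)
try:
    other.renew(now=21, lease_id=old_lease)
    renew_failed = False
except RuntimeError:
    renew_failed = True
```

A successful renewal in case 1 is what tells the client that the blob has not changed since its lease ID was last valid.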

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Philip Fu posted [Sample Of Sep 16th] How to store the images in SQL Azure to the All-In-One Code Framework blog on 9/16/2013:

Sample Download :

CS Version: http://code.msdn.microsoft.com/How-to-store-the-in-SQL-6c6a46b5

VB Version: http://code.msdn.microsoft.com/How-to-store-the-in-SQL-76078065

This sample demonstrates how to store images in Windows Azure SQL Database.

Sometimes developers need to store files in Windows Azure. In this sample, we introduce two ways to implement this function:

- Store the image data in SQL Azure. It's easy to search and manage the images.

- Store the image in the Blob and store the Uri of the Blob in SQL Azure. Blob storage space is cheaper. If we store the image in the Blob and store the information about the image in SQL Azure, it's also easy to manage the images.
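The difference between the two options comes down to what the database row holds. The sketch below uses Python's built-in `sqlite3` purely as a stand-in for SQL Azure; the table names, column names and blob URI are hypothetical and are not taken from the downloadable sample, which targets SQL Azure and would use a type such as `VARBINARY(MAX)` for the image column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a SQL Azure connection
conn.execute("CREATE TABLE ImagesInline (Id INTEGER PRIMARY KEY, Name TEXT, Data BLOB)")
conn.execute("CREATE TABLE ImagesByUri (Id INTEGER PRIMARY KEY, Name TEXT, BlobUri TEXT)")

image_bytes = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a complete image

# Option 1: the image bytes live in the database row itself.
conn.execute(
    "INSERT INTO ImagesInline (Name, Data) VALUES (?, ?)",
    ("logo.png", image_bytes),
)

# Option 2: the bytes live in blob storage; the row keeps only the blob's URI.
blob_uri = "https://example.blob.core.windows.net/images/logo.png"  # hypothetical
conn.execute(
    "INSERT INTO ImagesByUri (Name, BlobUri) VALUES (?, ?)",
    ("logo.png", blob_uri),
)

stored_bytes = conn.execute(
    "SELECT Data FROM ImagesInline WHERE Name = ?", ("logo.png",)
).fetchone()[0]
stored_uri = conn.execute(
    "SELECT BlobUri FROM ImagesByUri WHERE Name = ?", ("logo.png",)
).fetchone()[0]
```

Either way the image stays searchable by name through SQL; the second option just keeps the large payload out of the database.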

You can find more code samples that demonstrate the most typical programming scenarios by using Microsoft All-In-One Code Framework Sample Browser or Sample Browser Visual Studio extension. They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and automatically be notified about sample updates. If it is the first time that you hear about Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, or read the introduction on our homepage http://1code.codeplex.com/.

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

• ComponentOne offers a live demonstration of their Silverlight-based OData Explorer here:

Note: Only the Northwind OData source is active. The buttons on the bottom row let you add, remove, and edit data sources.

You can learn more about ComponentOne’s Studio for Silverlight and try other demos here.

Paul Horan described “New features for web-based access to database resources” in his OData Support in SQLAnywhere 16.0 article of 9/20/2013 for Sys-Con Media:

OData is quickly becoming the lingua franca for data exchange over the web. The OData standard defines a protocol and a language structure for issuing queries and updates to remote data sources, including (but not limited to) relational databases, file systems, content management systems, and traditional web sites. It builds upon existing Web technologies, like HTTP and RESTful web services, the Atom Publishing Protocol (AtomPub), XML, and JavaScript Object Notation (JSON).
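To make the "language structure for issuing queries" concrete, here is a small sketch that composes an OData query URI from system query options such as `$filter`, `$top` and `$format`. The service root and the `odata_query_url` helper are hypothetical; only the query option names come from the OData protocol, and nothing here is specific to SQL Anywhere.

```python
from urllib.parse import quote, urlencode


def odata_query_url(service_root, entity_set, **options):
    """Append OData system query options ($filter, $top, ...) to an entity set URL."""
    query = urlencode(
        {"$" + name: value for name, value in options.items()},
        safe="$",          # keep the leading "$" of each option readable
        quote_via=quote,   # encode spaces as %20 rather than "+"
    )
    return f"{service_root.rstrip('/')}/{entity_set}?{query}"


url = odata_query_url(
    "https://services.example.com/Northwind.svc",  # hypothetical service root
    "Customers",
    filter="Country eq 'Germany'",
    top=5,
    format="json",
)
```

Issuing an HTTP GET against such a URI is what asks the service for the first five matching customers as JSON.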

SAP and Sybase iAnywhere released SQL Anywhere version 16 in March 2013, and it had many cool new features. This blog post will cover one specific enhancement, the new support for OData access to SA databases.

Note: SA 16.0 is the follow-on release to version 12.0.1. There was no version 13, 14, or 15.

Background

SQL Anywhere actually introduced support for SOAP and REST-based web services back in their version 9.0 release! To enable web access, the server needed to be started with a new command-line switch that started an internal HTTP listener. This allowed the database server itself to function as a web server, and it could handle incoming HTTP/S requests. Inside the database, the developer would create separate SERVICE objects that could take a regular SQL query against a table, view, or stored procedure, and transform the result set into a number of formats, including XML, HTML, and JSON. Figure 1 below shows that basic architecture. ODBC/JDBC client/server connections would come into the server on a TCP/IP port, and HTTP/S connections would arrive through a separate port and be processed by the HTTP listener.

While this was a nice feature, it had the following negative aspects:

- Even though the HTTP web server component was listening on a separate port from the ODBC/JDBC connections, it still meant opening a port through the firewall and exposing the actual database server process to the open internet. Network administrators typically have a problem with opening non-standard ports through their firewall, especially to critical resources like database servers...

- The SERVICE objects were separate database objects from the underlying tables, views, and procedures that they were exposing. These would be written in a specific SQL Anywhere syntax, and would have to be maintained separately. Changes to the schema were not automatically reflected in the service objects, including artifacts like the WSDL for any SOAP services.

- The URI for accessing these services would need to include the physical database name. For example:

http://<servername>:<port>/<database name>/<service name>

This is a key piece of information that could potentially be used for malicious attacks against the database server.

SA 16.0 Architecture

SA 16 introduces a new server process for providing OData support. Its name is DBOSRV16.EXE, and it consists of two distinct components:

- The DBOSRV16.EXE HTTP server, which is the Jetty open source Java servlet container. This process runs outside the SA16 database server, and listens for incoming HTTP or HTTPS connections from web clients.

- The OData Producer Java servlet. This opens a JDBC connection to the SQL Anywhere database, and is responsible for processing the OData queries and updates and responding with either AtomPub (XML) or JSON formatted result sets. The OData producer servlet code is provided, and can be compiled and executed inside any web server capable of running Java servlets.

The best feature of this new setup is that database objects (tables and views) are automatically exposed to the OData producer. There is no longer any need to create and maintain separate SERVICE objects. In addition, the HTTP requests are not hitting the database server directly, increasing the security protection of that critical resource.

It's important to know that this does not replace the existing web services infrastructure - that all still exists in SA 16. These features are new additions to the architecture. Figure 2 below shows the revised architecture, with the DBOSRV16.EXE process managing incoming web requests.

Getting Started … Excised for brevity

Running OData Queries … Excised for brevity

Conclusion

The new OData Server process in SQL Anywhere 16 has a great many potential benefits.

- It can speed the development and prototyping phase, by allowing quick creation and modeling of OData services, without requiring heavy backend or EIS development.

- It can enhance the security of a production web services environment by eliminating the need for HTTP access directly to the database server.

- It can reduce the overall complexity of an n-tier application by eliminating the need to write middle-tier components that do nothing but transform data into JSON or XML.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, and Identity

Vittorio Bertocci (@vibronet) posted Getting Acquainted with AuthenticationResult on 9/16/2013:

Now that the cat’s out of the bag, we can finally play with it. Time & energy permitting, in the next days (weeks?) I’ll be covering some aspects of using ADAL .NET to help you get the most from AD in your applications.

In the announcement post I introduced AcquireToken, the key primitive exposed by ADAL for obtaining access tokens to be used with the protected resources you want to access. Today I’ll go a bit deeper on how to use the results of that operation.

Let’s consider a common case, in which you have a desktop app accessing a Web API protected by Windows Azure AD, associated to the domain CloudIdentity.net (could have been just as well whatever.onmicrosoft.com, I just like to use the other one because it’s shorter). The client is regularly registered in the tenant, hence we have its ID; same for the Web API, for which we have the resource ID.

Here’s the code you’d write for acquiring the token:

    AuthenticationContext ac =
        new AuthenticationContext("https://login.windows.net/cloudidentity.net");
    AuthenticationResult ar = ac.AcquireToken("https://cloudidentity.net/WindowsAzureADWebAPITest", "a4836f83-0f69-48ed-aa2b-88d0aed69652", new Uri("https://cloudidentity.net/myWebAPItestclient"));

The first couple of lines initialize the instance of AuthenticationContext which we can use for interacting with the associated AD instance.

The next line asks the Windows Azure AD tenant for a token scoped for the resource https://cloudidentity.net/WindowsAzureADWebAPITest, advertises that the request is coming from the client a4836f83-0f69-48ed-aa2b-88d0aed69652, and indicates https://cloudidentity.net/myWebAPItestclient as reply url (technicalities of the OAuth2 code grant; let’s just say that here you have to pass the same value that is stored in the client registration in Windows Azure AD).

If that’s the first time that you call AcquireToken with those parameters, ADAL will take care of popping out a dialog containing a browser, which will in turn present the user with whatever authentication experience Windows Azure AD decides to use in this case.

If the user successfully authenticates, AcquireToken returns to you a nice AuthenticationResult instance. There you will find the access token (in the AccessToken property) you need to call the web API, and let me be clear: if performing the call as is is all you want to do, you don’t need to know anything else.

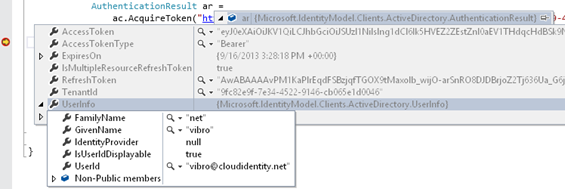

That said, you’ll discover that there’s way more stuff in AuthenticationResult: if you know what it’s for, you can use the extra info for interesting tweaks. Let’s take a look!

Here’s a view courtesy of the VS2013 debugger. Let me give you a brief description for all of those properties:

AccessToken – Those are the bits of the access token themselves. In most cases it will be a JWT, but you’re not supposed to know that! Or, to clarify: the token is meant for the resource, and for the client the token itself is supposed to be an opaque blob to be used but not inspected. Should you peek in it and take a dependency on any of its aspects in the client code, you’d end up with extremely brittle software: the characteristics of the token are the result of a negotiation between the resource and its issuer, which can change things at any time without having to inform the client. You’ve been warned.

AccessTokenType – gives indications on the intended usage. More details are beyond the scope of this post, but so as not to leave you completely dry: in this case “bearer” indicates that this token should be simply attached to requests.

ExpiresOn – this is the expiration of the access token. If you repeat the AcquireToken call with the exact same parameters before the expiration occurs, you are going to get the exact same token as cached by ADAL. If you repeat the call after the expiration, and there is an associated refresh token (see below) that is not itself expired, ADAL is going to automatically (and silently, no UI) use it to get a new access token and return it to you. Bottom line: in general, always use AcquireToken instead of storing the access token in your own code!

Note: ADAL does not offer any clock skew functionality. That expiration value is final. If there is clock skew between the issuer and the resource, you might need to do something to get things renewed sooner. More details in a future post.

IsMultipleResourceRefreshToken – this flag indicates whether the refresh token (if present in the AuthenticationResult, it won’t always be the case) is one of those magic refresh tokens that can be used for silently obtaining an access token even for resources that are different from the one for which the refresh token/access token couple was obtained in the first place. Of all the flavors of AD ADAL can talk to, this can only happen with Windows Azure AD. This is another candidate for its own blog post.

RefreshToken – This contains the bits of the refresh token. It won’t always be present: ACS never issues it, ADFS will do so only in special cases and even Windows Azure AD will do so only when the underlying OAuth flow is right (e.g. code grant).

Important: in the general case, you don’t need to use this field at all. ADAL caches the refresh token and uses it transparently, taking full advantage of all of its capabilities, every single time you call AcquireToken. We return the refresh token because there are some cases where you might want to have full control about some other aspects, but those are only advanced scenarios that should be fairly rare.

TenantId – this is the identifier of the tenant that was used for authenticating the user. You might not have known that information in advance, given that you can create a generic AuthenticationContext tied to the Common endpoint: in that case, the true tenant is established only when the end user enters his/her credentials which by necessity must belong to a specific tenant. This value makes a lot of sense for Windows Azure AD, but less for other AD flavors.

UserInfo – This is a bit of a “contamination” from OpenId Connect. I won’t go into any detail, I’ll just say that when ADAL triggers a user authentication with Windows Azure AD, as in the case described here, besides the expected bits (access token, refresh token…) you also get back an Id_token describing the user. Some info might be useful to you as generic properties (FirstName, LastName). Other properties can instead play an important role in the mechanics of how you handle authentication and manage state. More details below.

One property of UserInfo, UserId, deserves to be explored in more detail. In the Windows Azure AD case, UserId will contain the UPN of the user who authenticated to obtain the tokens back. There are variations I don’t want to talk about yet, but they’ll work like in the UPN case, hence for our explanation that makes no difference. Now, although this is a less-than-perfect identifier (it can be reassigned) it is still a pretty good moniker for tying the obtained tokens to the user they are associated to. As such, it is used as one of the components of the cache key under which ADAL stores the current result. And as such, this can play a role to gain more control in subsequent calls to AcquireToken. Let’s look at an example.

Suppose that your client application is designed to allow the user to use multiple accounts at once. A classic example would be the People app in Windows 8, where you can connect multiple accounts from different providers. Say that you want to make sure that you will perform a certain API call using a specific user, for example the same one that was used in the earlier call. You have a reference to that user, the string you found in UserInfo.UserId from the AuthenticationResult of the first call: all you need to do is specify it as a parameter in AcquireToken.

    AuthenticationResult ar =
        ac.AcquireToken("https://cloudidentity.net/AnotherResource",
                        "a4836f83-0f69-48ed-aa2b-88d0aed69652",
                        new Uri("https://cloudidentity.net/whatevs"),
                        "vibro@cloudidentity.net");

Adding the UserId as parameter will have the following effect:

- ADAL will search the cache for an entry that not only satisfies the resource and clientid constraint, but was also obtained with the same userid

- If the cache does not contain a suitable entry, ADAL will pop out the browser dialog with the user textbox pre-populated with the indicated userid

That’s pretty neat, as it gives you the necessary control for handling tokens in multi-users scenarios that can notoriously get very complicated. I’ll get back to this in the post where I’ll discuss the cache model. So much to write!
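The role of the user ID in the cache lookup can be illustrated with a small simulation. This is not ADAL's cache implementation, which the post does not show: `TokenCacheSketch` and `CachedResult` are hypothetical names, and the key layout (resource, client ID, user ID) is an assumption based only on the description above.

```python
from dataclasses import dataclass


@dataclass
class CachedResult:
    access_token: str
    user_id: str


class TokenCacheSketch:
    """Hypothetical cache keyed by (resource, client id, user id)."""

    def __init__(self):
        self._entries = {}

    def store(self, resource, client_id, result):
        self._entries[(resource, client_id, result.user_id)] = result

    def find(self, resource, client_id, user_id=None):
        """Return a cached result; when user_id is given, only that user's entry matches."""
        for (res, cid, uid), result in self._entries.items():
            if res == resource and cid == client_id and user_id in (None, uid):
                return result
        return None  # this is where the real library would show the browser dialog


cache = TokenCacheSketch()
resource = "https://cloudidentity.net/AnotherResource"
client_id = "a4836f83-0f69-48ed-aa2b-88d0aed69652"

# Two accounts have already authenticated for the same resource and client.
cache.store(resource, client_id, CachedResult("token-for-vibro", "vibro@cloudidentity.net"))
cache.store(resource, client_id, CachedResult("token-for-mario", "mario@contoso.com"))

hit = cache.find(resource, client_id, user_id="mario@contoso.com")
miss = cache.find(resource, client_id, user_id="someone@else.example")
```

Passing the user ID narrows the match to one account, which is the control the multi-user scenario needs.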

Now, technically there’s nothing preventing your application from gathering the UPN of the intended user and feeding it directly in AcquireToken: that can work, but there are limitations:

- the username textbox will be pre-populated with the right values, but the end user can always delete its content and type another username. ADAL will always use what’s returned in the UserInfo.UserId as cache key, hence you can catch this situation with a simple check on the AuthenticationResult

- different flavors of AD work differently

Neither ADFS nor ACS will return id_tokens, hence the UserInfo properties will not carry values when you work with those authority types. Well, mostly. We did do some extra steps for giving you at least some info.

For results from those authorities ADAL itself generates an identifier and stores it in the UserId field. That won’t tell you an absolute identifier for the user, but it does offer you a mechanism for enforcing that subsequent calls to AcquireToken can search the cache for the same user that came into play here. I know, it’s complicated: let me give you an example. Say that you call AcquireToken against ADFS, and you get back the usual tokens and a UserId value of 1234567. Now, from that userid value you cannot know that the user is in fact mario@contoso.com; but by using that userid value later on you can enforce that the token you are getting from the cache is associated to the same user that authenticated earlier, even if you are not certain about the actual identity of the user. It’s a bit like when you leave your jacket at the coat check. They give you a ticket with a number, and as long as you show up later with the same number you get your jacket back: at no point in that transaction does your name need to come up.

If that’s not crystal clear don’t worry too much, I’ll be getting back on this multiple times in the next posts.

That’s pretty much it! However, before closing I just want to make sure I stress that for the basic case you don’t need to know anything, and I mean anything at all, about the structure of AuthenticationResult. There is a little utility method which crafts a header for your web API flow, and that’s all you need to know to be able to call your OAuth-protected API. Just make sure you always call AcquireToken so that ADAL has the chance of handling token lifecycle & refreshing for you, and that’s all you need! Code below:

    string authHeader = ar.CreateAuthorizationHeader();
    HttpClient client = new HttpClient();
    HttpRequestMessage request = new HttpRequestMessage(HttpMethod.Get, "https://localhost:44353/api/Values");
    request.Headers.TryAddWithoutValidation("Authorization", authHeader);
    HttpResponseMessage response = await client.SendAsync(request);
    string responseString = await response.Content.ReadAsStringAsync();
    MessageBox.Show(responseString);

All so standard that I’ll deem it self-explanatory and call it a post.
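For readers outside .NET, the same request shape is shown below. This sketch assumes the header crafted by the utility method is simply the token type followed by the token, as is usual for bearer tokens; the `create_authorization_header` helper and the token value are hypothetical, and the request is only built, not sent.

```python
import urllib.request


def create_authorization_header(access_token_type, access_token):
    """Assumed header shape: '<token type> <token>', e.g. 'Bearer abc'."""
    return f"{access_token_type} {access_token}"


# Placeholder values; a real token comes from the authority and stays opaque.
auth_header = create_authorization_header("Bearer", "opaque-token-placeholder")

request = urllib.request.Request(
    "https://localhost:44353/api/Values",
    headers={"Authorization": auth_header},
    method="GET",
)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```

The client never parses the token here, in keeping with the warning above about treating it as an opaque blob.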

Next, I’ll be talking about cache. Stay tuned!

Vittorio Bertocci (@vibronet) reported Active Directory Authentication Library (ADAL) v1 for .NET – General Availability! on 9/12/2013:

After more than one year, three developer previews and a ton of feedback from customers and partners (that would be you! Thank you!!!) today we are finally announcing the general availability of the Active Directory Authentication Library (ADAL) for .NET v1.0!

You can download it directly from the NuGet gallery or get it from Visual Studio.

Through the year we produced a lot of material on ADAL, but between name changes (it started its existence as Windows Azure Authentication Library – AAL) and feature set variations it might not be super easy for you to get a good idea of what the product does. The good news is that the MSDN documentation for ADAL is on its way. Also, I am going to take this chance to pretend that I never wrote anything about ADAL and use this post to (re)introduce the library to you, so that you can be confident that what you are reading is up to date for the RTM version. I will also try to convey the why’s behind the current design, which are likely to make this a pretty long post. So, grab your favorite caffeine delivery vessel and read on!

What Is the Active Directory Authentication Library (ADAL)?

If you are into definitions, here’s one for you:

The Active Directory Authentication Library (ADAL) is a library meant to help developers to take advantage of Active Directory for enabling client apps to access protected resources.

In more concrete terms: if you have a resource (Web API or otherwise) that is secured via Active Directory, and you have a client application that needs to consume it, ADAL will help you to obtain the security token(s) the client needs to access the resource. In addition, ADAL will help you to maintain and reuse the tokens already obtained.

That’s pretty much it, actually. The rest is all about qualifying a bit better the terms I used:

Active Directory – I am using the term in its broadest sense. In practice: ADAL can work with Windows Azure AD, Windows Server AD (it requires the ADFS version in Windows Server 2012 R2) and ACS namespaces.

Protected resource – In theory this can be pretty much anything that can be remotely accessed; in practice, we expect it to be a REST service in the vast majority of cases.

“Protected” in this context means that the service expects the caller to present an access token, which must be verified as coming from the right AD before granting access.

Client app – This probably calls to mind the classic rich client applications, something with a UI built on the native visual elements of the platform it targets. ADAL can totally work with those, I’d even daresay they are the top scenario for this release, but they are not the only one: any app operating in a client role is a candidate. In practice, any application that is not a browser (code-behind of web sites, long running processes, workers, batches, etc) and needs to request a token to access a resource is a client app that can take advantage of ADAL.

Today’s release, the v1 of ADAL .NET, applies those principles to the .NET platform. However all of the above holds for ADAL in general: different platforms will have different capabilities and admit different app types, but that’s the general scope.

Now that you know what ADAL is for, let’s focus on how it operates.

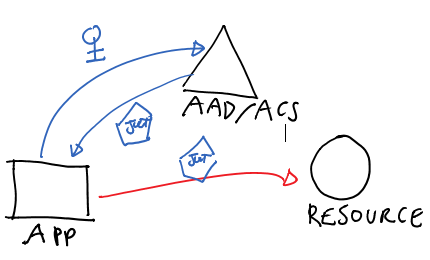

Whereas in the past our libraries surfaced all the constructs and concepts of the protocols and artifacts used in the mechanics of authentication, in ADAL we decided to focus on the scenario and the high level tasks. That allows us to eliminate, or at the very least late-bind, a lot of the complexity that with the traditional approaches would be something inescapable even for the simplest uses. Skeptical? A healthy attitude, very good. Go on, all will be revealed!

ADAL’s Main Pattern

Identity is hard. There are so many things one needs to keep track of! Token formats, what protocol to use for a given topology, which parameters work for one identity provider but not for the other, how to prevent the user from being prompted every time, how to avoid saving passwords and secrets, what to do when you need multiple authentication factors, and many more obscure details.

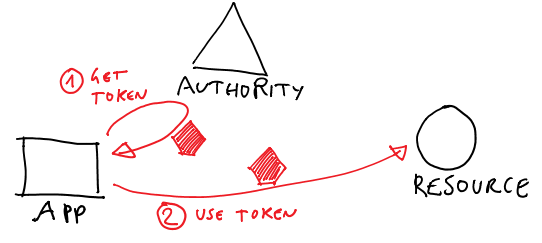

…And yet, a high level description of how things work sounds so simple:

- I have a client app

- I want to call a service, but it requires me to present a token

Hence

- I go to some kind of authority, and I do whatever is necessary to get a token for the resource

- once I have the token, I call the resource

Not hard at all, right? By virtue of its lack of details, the above describes pretty much all known client-calls-an-API scenarios: a WPF app calling a local service, a console app accessing a Web API running on Windows Azure, a web site code-behind calling the Bing Maps API, a continuous build integration worker process extracting stuff from a queue service, a web site using OAuth2 obtaining and using a token for delegated access to an API, and so on.

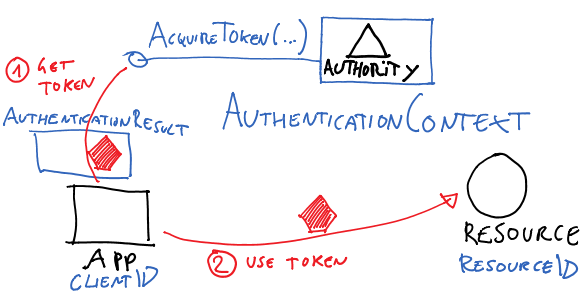

What if we could work at that level, instead of preoccupying ourselves with all sorts of details? Well, what you see diagrammed above is pretty much the ADAL object model. I mean it fairly literally.

ADAL’s main class, AuthenticationContext, represents in your app’s code the authority (Windows Azure AD tenant, Windows Server ADFS instance or ACS namespace) from which you want to get tokens.

AuthenticationContext’s main method, AcquireToken, is the primitive which allows you to perform leg #1 in the diagram. The parameters will vary depending on the concrete scenario you are dealing with (more about that later) but in general you can expect to have to qualify which app is requesting the token and for which resource. Both are passed using the identifiers with which the client app and the resource are known by the authority (all actors must have been provisioned, AD will not issue tokens for unknown entities).

Upon successful authentication, AcquireToken returns an AuthenticationResult, which contains (among other things) an access token for the target service.

Once you have a token, the ball is in your court. ADAL does not force you to use channels or special proxies, nor does it require you to use any specific protocol for accessing your target resource. You are responsible for plugging the token in the request to your resource, according to the protocol(s) it supports. In the vast majority of cases we observed during the preview that’s OAuth2.0, which just means that you have to add it in the right HTTP header of the request, but we did have somebody injecting the token in a WCF channel. It’s entirely up to you.

One last thing I’d highlight at this point is that every time you get a token from the authority ADAL adds it to a local cache. Every subsequent call to AcquireToken will examine the cache, and if a suitable token is present it will be returned right away. If a suitable token cannot be found, but there is enough information for obtaining a new one without repeating the entire authentication process (as is the case with OAuth2 refresh tokens) ADAL will do so automatically. The cache is fully queryable and can be disabled or substituted with your own implementation, but if you don’t need either you don’t even need to know it’s there: AcquireToken will use it transparently.
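The decision order described in that paragraph (cached token, then silent refresh, then full authentication) can be sketched as follows. This is a simulation of the described behavior, not ADAL's code: `Entry`, `acquire_token` and the two callbacks are hypothetical, the cache is a plain dictionary, and time is passed in explicitly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Entry:
    access_token: str
    expires_on: float
    refresh_token: Optional[str] = None


def acquire_token(cache, key, now, redeem_refresh_token, authenticate_interactively):
    """Return (entry, source), where source tells which path produced the token."""
    entry = cache.get(key)
    if entry is not None and now < entry.expires_on:
        return entry, "cache"                      # still valid: reuse as is
    if entry is not None and entry.refresh_token is not None:
        entry = redeem_refresh_token(entry.refresh_token)
        cache[key] = entry
        return entry, "refresh"                    # silent renewal, no UI
    entry = authenticate_interactively()
    cache[key] = entry
    return entry, "interactive"                    # full authentication


# Fake authority callbacks, standing in for the network round trips.
def interactive():
    return Entry("token-1", expires_on=100.0, refresh_token="refresh-1")


def redeem(refresh_token):
    return Entry("token-2", expires_on=200.0, refresh_token=refresh_token)


cache = {}
key = ("https://cloudidentity.net/WindowsAzureADWebAPITest", "client-id")  # client id is a placeholder

sources = [
    acquire_token(cache, key, 0.0, redeem, interactive)[1],    # nothing cached yet
    acquire_token(cache, key, 50.0, redeem, interactive)[1],   # before expiration
    acquire_token(cache, key, 150.0, redeem, interactive)[1],  # after expiration
]
```

Because the caller goes through one function every time, it never has to track expiry itself, which is the reason the post recommends always calling AcquireToken rather than storing tokens.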

There you have it: now you understand what ADAL does, even how to use it almost at the code level. Remarkably, all this didn’t require forcing you to grok any security protocol concept aside from a nondescript “token”. If you are still skeptical you might ask “where’s the trick? Where did all the complexity go?”. There is no trick, of course; but there are tradeoffs which are intrinsic to the problem, and I want to be transparent about those to set the right expectations. So, for the question “where did the complexity go” here are a few answers.

- It was moved from the code to the AD setup. AD, in all the flavors supported by ADAL, has the ability of maintaining descriptions of entities (client apps, web APIs, users) and the relationships which tie them together (“can client A call service B?”). ADAL fully relies on that intelligence, passing around identifiers that are actually references to what AD already knows about apps. That makes the code MUCH simpler, but in turn requires every entity you want to work with to be registered in your authority of choice. If in your company somebody else (like an administrator) does that for you, that’s a net gain for you the developer as you can leverage their work and avoid reinventing the wheel. If you are a one-man band and you wear both the hat of the developer and of the administrator, you are responsible for doing all the necessary provisioning before being able to code against the scenario. It’s still a big advantage (do it once, use it from multiple apps; maintain neater code; etc) but somebody’s got to take care of that.

- It was pruned by the pre-selection of fixed scenarios. ADAL gets tokens only from AD. To support that, it implements a number of protocols and artifacts: but those are not exposed to you for general use. ADAL’s API surface is tied to the AD-based topologies supported at this point in time, and its object model reflects that. That allows us to maintain a super simple set of primitives, but it also means that there’s little margin for customization or use beyond the intended scope.

- Dealing with finer details is delayed to the last possible moment. The high level description of the token based authentication flow is accurate for all scenarios, and an OM based on it is easy to understand for everybody. However, once the rubber hits the road it is inevitable that you’ll have to deal with some concrete aspects that are specific to the scenario you are implementing. For example: if you are writing a native client app and you want to get a token for a specific user, you’ll have to let AcquireToken know about it; conversely, if you are developing a long running process there might be no user involvement at all, but you might have to pass to AcquireToken an X.509 certificate to identify your app with AD.

How does ADAL reconcile those two abstraction levels? Simple. The act of acquiring a token is always done through the same primitive, AcquireToken. However, that primitive provides multiple overloads, specialized for the scenarios ADAL supports.

The advantage of this approach is that if you write a native client app you only need to learn about the overloads that work for you, and you can ignore the ones for worker processes. Of course the issue becomes finding the right overload for your scenario! However we count on the fact that you know which entities are used in your scenario, hence zeroing in on the overload that has the right placeholders should be pretty straightforward.

Bottom line: those scenarios have intrinsic complexity, but we did our best to factor things so that it is minimized and does not spill across scenarios.

What Scenarios/Topologies Does ADAL .NET v1 Support?

Let’s get more concrete and take a look at the typical scenarios/topologies you can implement with ADAL.

Important: although ADAL’s object model abstracts away most differences between authority types (Windows Azure AD, Windows Server AD /ADFS, ACS) such differences exist: that means that certain scenarios will be supported when the authority is Windows Azure AD but not when it is an ACS namespace, and similar. I’ll call it out in the descriptions.

Native Client – Interactive User Authentication

Windows Azure AD/ADFS

This is the case in which you are developing a native app (anything that is not a browser and that can show UI, that includes consoles) and you want to access a resource with a token obtained as the current interactive user. This is probably ADAL’s easiest flow.

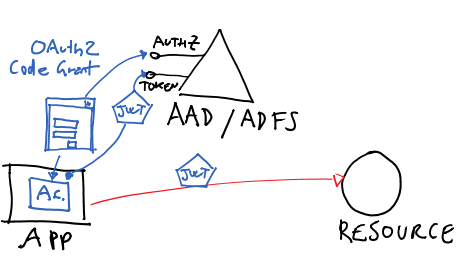

Peeking under the cover, for Windows Azure AD and for ADFS this flow is implemented using the OAuth2 code grant for public clients. Code-wise, you write something like the following:

    AuthenticationContext _authenticationContext = new AuthenticationContext("https://login.windows.net/mytenant.onmicrosoft.com");
    AuthenticationResult _authenticationResult = _authenticationContext.AcquireToken("http://myservices/service1", "a8cb2a71-da38-4cf4-9023-7799d00e09f6", new Uri("http://TodoListClient"));

The first line creates an AuthenticationContext tied to the AAD tenant “mytenant”. I would use the exact same logic for an ADFS based authority; the visible difference would be that the AuthenticationContext would be initialized with the address of the ADFS instance’s root endpoint (typically hostname+”/Adfs/”).

The second line asks mytenant to issue a token for the service with identifier http://myservices/service1, communicates that the request comes from the client with ID a8cb2a71-da38-4cf4-9023-7799d00e09f6 and passes in the return URI (used as a terminator in the flow against the authorization endpoint: an OAuth2 protocol detail).

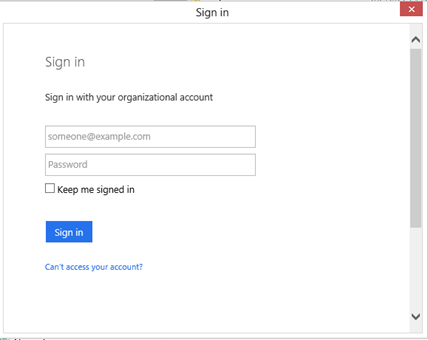

If this is the first time we execute that code, the effect of the call to AcquireToken will be to pop out a browser dialog gathering the user credentials:

ADAL handles creation of the dialog, crafting of the initial URL, navigation and every other aspect in full transparency. The dialog is really just a browser surface, and the authority decides what to send in terms of experience: here we got username & password, but specific users might get multiple auth factors, consent prompts and similar.

Upon successful authentication, the AccessToken will be in _authenticationResult. The content of _authenticationResult gets cached: subsequent calls to AcquireToken using the same parameters will yield the results from the cache until an expiration occurs.
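To make the caching behavior concrete, here is a minimal sketch of a cache keyed on the request parameters that stops returning an entry once it expires. It is an illustration only, not ADAL's cache: the types SimpleTokenCache and CachedToken and all of their members are hypothetical names introduced for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration only: this is not ADAL's cache implementation.
public sealed class CachedToken
{
    public string AccessToken { get; set; }
    public DateTimeOffset ExpiresOn { get; set; }
}

public sealed class SimpleTokenCache
{
    private readonly Dictionary<string, CachedToken> _entries =
        new Dictionary<string, CachedToken>();

    // The key combines the same parameters that were passed to the token request.
    private static string Key(string authority, string resource, string clientId)
    {
        return authority + "|" + resource + "|" + clientId;
    }

    public void Store(string authority, string resource, string clientId, CachedToken token)
    {
        _entries[Key(authority, resource, clientId)] = token;
    }

    // Returns the cached token, or null when there is no entry or it has expired.
    public CachedToken Lookup(string authority, string resource, string clientId, DateTimeOffset now)
    {
        string key = Key(authority, resource, clientId);
        CachedToken token;
        if (!_entries.TryGetValue(key, out token))
        {
            return null;
        }
        if (token.ExpiresOn <= now)
        {
            _entries.Remove(key); // expired: the caller must acquire a fresh token
            return null;
        }
        return token;
    }
}
```

A caller would consult Lookup first and go back to the authority only on a null result, which is the behavior described above for repeated calls with the same parameters.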

You can find a detailed sample showing this scenario in this code gallery entry.

ACS

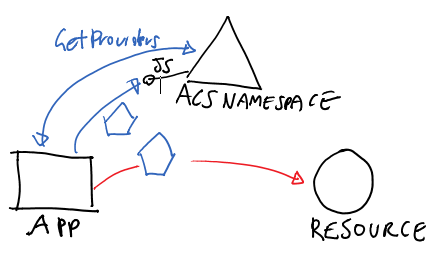

The scenario is possible also with an ACS namespace as authority; however the underlying protocols are different – ACS uses javascriptnotify for this – and the AcquireToken syntax reflects that.

Code:

    _authenticationContext = new AuthenticationContext("https://mynamespace.accesscontrol.windows.net");
    List<IdentityProviderDescriptor> idps = (List<IdentityProviderDescriptor>) _authenticationContext.GetProviders("http://myservices/service1");
    AuthenticationResult result1 = _authenticationContext.AcquireToken("http://myservices/service1", idps[0]);

The first line creates an AuthenticationContext instance tied to our ACS namespace.

The 2nd line reaches out for ACS’ info feed and extracts all of the IdPs supported by the target RP.

The 3rd line asks for a token for our RP (the resource) using the first IdP from the list. This will pop out a browser, already pointed to the IdP of choice, and use it to drive the authentication process. As for the AD case, the results will be cached.

An example can be found here.

Server to Server – Client Credentials Grant

This is the scenario in which the client application itself has its own credentials, which are exchanged for an issued token for the specified resource. Under the hood this is implemented as an OAuth2 client credentials grant, though different authority types use different OAuth2 drafts; in any case that’s fully immaterial to you given that the library takes care of picking the right style in full transparency. This topology is not available for ADFS. Also, different authority types support different credential types. Check the MSDN documentation for details.

Code:

    _authenticationContext = new AuthenticationContext("https://login.windows.net/21211b42-1e25-4046-8872-d8832a38099b");
    ClientCredential clientCred2 = new ClientCredential("2188a797-7d21-41dc-84f3-3c1720262614", "HBheXXXXXXXXXXXXXXXXXXXXXXXXXXXM=");
    AuthenticationResult _authenticationResult = _authenticationContext.AcquireToken("https://localhost:9001", clientCred2);

The flow here introduces a new concept, the credential, which needs to be initialized for the client and passed to AcquireToken. There are other credential types, such as X.509, but the differences are only syntactic.

Example here.

Confidential Client Code Grant

This scenario is the most classic OAuth2 flow. ADAL .NET expects you to obtain the code on your own, given that the exact flow would depend on the stack you are developing against (Web Forms? MVC?).

Once you have that, you can pass it to AcquireTokenByAuthorizationCode. The method name departs from the main AcquireToken because it does not fully participate in ADAL’s mainstream flow (for example, it does not save results in the cache).

We don’t have a sample for this, but we should publish one soon.
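In the meantime, the part that ADAL leaves to you (sending the browser off to obtain the code) can be sketched as follows. This is a rough sketch under stated assumptions, not an official sample: the AuthorizationRequest class is a hypothetical helper, and the "/oauth2/authorize" path and the "resource" query parameter are assumptions based on Windows Azure AD conventions that you should check against your authority's documentation.

```csharp
using System;

// Hypothetical helper, not part of ADAL.
public static class AuthorizationRequest
{
    // Builds the URL the browser is redirected to in order to obtain an authorization code.
    // Assumption: the authority exposes its authorization endpoint at "/oauth2/authorize"
    // and accepts a "resource" parameter naming the target service.
    public static string BuildUrl(string authority, string clientId, Uri redirectUri,
                                  string resource, string state)
    {
        return authority.TrimEnd('/') + "/oauth2/authorize"
            + "?response_type=code"
            + "&client_id=" + Uri.EscapeDataString(clientId)
            + "&redirect_uri=" + Uri.EscapeDataString(redirectUri.AbsoluteUri)
            + "&resource=" + Uri.EscapeDataString(resource)
            + "&state=" + Uri.EscapeDataString(state); // opaque value echoed back to detect forged responses
    }
}
```

When the authority redirects back to your redirect URI with a code query parameter, your web stack extracts it, checks that state matches what you sent, and hands the code to AcquireTokenByAuthorizationCode.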

Notable Features

The above are the main supported topologies. ADAL also has some cross-cutting features that can improve your control over a specific scenario or make it easy to target specific types of applications (e.g. apps that deal with a single user for their entire lifetime, vs apps which maintain multiple users at once).

Here I am going to list the main ones in no particular order, and give you a super-quick hint at what they are useful for; each of them is worth its own blog post, and I’ll try to diligently make that happen in the weeks ahead.

Resource Driven Discovery

Resources receiving unauthenticated or incorrectly secured requests can send back a challenge that indicates useful info such as which authority they trust, what their resource ID is, and so on. ADAL is capable of reading the format of that challenge and triggering a token acquisition on the basis of that.
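As a rough illustration of what reading such a challenge involves, the sketch below parses the value of a WWW-Authenticate header that uses the Bearer scheme into name/value pairs. It is not ADAL's parser: BearerChallenge is a hypothetical helper, and the parameter names authorization_uri and resource_id used in the usage note are assumptions about what a resource might send.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper, not part of ADAL.
public static class BearerChallenge
{
    // Matches name="value" pairs such as authorization_uri="https://...".
    private static readonly Regex Pair = new Regex("(\\w+)=\"([^\"]*)\"");

    // Parses the value of a WWW-Authenticate header carrying a Bearer challenge.
    // Returns null when the header does not use the Bearer scheme.
    public static IDictionary<string, string> Parse(string headerValue)
    {
        if (headerValue == null ||
            !headerValue.TrimStart().StartsWith("Bearer", StringComparison.OrdinalIgnoreCase))
        {
            return null;
        }

        var parameters = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (Match match in Pair.Matches(headerValue))
        {
            parameters[match.Groups[1].Value] = match.Groups[2].Value;
        }
        return parameters;
    }
}
```

Given a header value such as Bearer authorization_uri="https://login.windows.net/mytenant.onmicrosoft.com", resource_id="http://myservices/service1", a client could use the first entry to construct the authority and the second as the resource to request a token for.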

Instance discovery and validation

ADAL protects you from resources forwarding you to malicious authorities by validating the authority URL against known templates. Note: this holds for AAD, but ADFS is not currently capable of automated validation; hence, for ADFS scenarios you need to opt out of this function (at AuthenticationContext construction time).
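The idea behind this kind of validation can be sketched in a few lines. The allow-list and the AuthorityValidator class below are hypothetical, chosen only for illustration; the templates ADAL actually checks against are internal to the library.

```csharp
using System;
using System.Linq;

// Hypothetical helper, not part of ADAL.
public static class AuthorityValidator
{
    // Assumed allow-list for illustration; ADAL's real templates are internal to the library.
    private static readonly string[] TrustedHosts = { "login.windows.net" };

    // Accepts only absolute HTTPS URLs on a trusted host that include a tenant segment.
    public static bool IsTrusted(string authority)
    {
        Uri uri;
        if (!Uri.TryCreate(authority, UriKind.Absolute, out uri))
        {
            return false;
        }

        return uri.Scheme == Uri.UriSchemeHttps
            && TrustedHosts.Contains(uri.Host, StringComparer.OrdinalIgnoreCase)
            && uri.AbsolutePath.Trim('/').Length > 0;
    }
}
```

Comparing the whole host name, rather than testing whether the URL merely contains a trusted string, is what rejects look-alikes such as https://login.windows.net.evil.example/mytenant. An on-premises ADFS host cannot appear on a fixed list like this, which is consistent with the opt-out described above.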

Cache related features

ADAL comes with a default in-memory cache which spans the process, and that gets used automatically. That cache is fully queryable via LINQ, and contains far more than just tokens: when available, the cache will also contain user info such as identifiers, first and last name, and so on.

You can easily implement your own cache and plug it in: you might want to do so when you want a persistent store, enforce your own boundaries between cache stores associated to different AuthenticationContext instances, and so on.

Broad Use Refresh Tokens

AAD issues refresh tokens that can be redeemed for access tokens associated to any resource in the tenant, as opposed to just the resource they were originally obtained for. ADAL is aware of this possibility, and will actively take advantage of it when available.
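One way to picture how a library can take advantage of this is a cache lookup that falls back to a broad-use refresh token when no entry exists for the requested resource. The sketch below is only an illustration of that lookup order; RefreshTokenEntry, RefreshTokenFinder and their members are hypothetical names, not ADAL types.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical cache entry; the property names are illustrative, not ADAL's.
public sealed class RefreshTokenEntry
{
    public string Authority { get; set; }
    public string Resource { get; set; }
    public string RefreshToken { get; set; }
    public bool UsableForAnyResource { get; set; }
}

public static class RefreshTokenFinder
{
    // Prefers an entry for the exact resource; otherwise falls back to a
    // broad-use refresh token issued by the same authority for another resource.
    // Returns null when neither exists.
    public static RefreshTokenEntry Find(IEnumerable<RefreshTokenEntry> cache,
                                         string authority, string resource)
    {
        var sameAuthority = cache.Where(e => e.Authority == authority).ToList();
        return sameAuthority.FirstOrDefault(e => e.Resource == resource)
            ?? sameAuthority.FirstOrDefault(e => e.UsableForAnyResource);
    }
}
```

With a fallback like this, a user who has already signed in for one service in the tenant need not be prompted again when the app first calls a second one.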

Common endpoint

AAD offers a “common” endpoint not tied to any specific tenant; when used, this endpoint allows the end user to determine which tenant should be used according to the UPN he or she enters in the username field. ADAL supports late-binding AuthenticationContext instances, which start against common but are adjusted after the first authentication disambiguates the tenant.
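The adjustment step can be pictured as rewriting the authority once the tenant is known. The helper below is a hypothetical sketch of that idea, not ADAL's implementation; it assumes the tenant identifier has already been learned from the first authentication.

```csharp
using System;

// Hypothetical helper, not part of ADAL.
public static class CommonEndpoint
{
    // Replaces the "common" placeholder with the tenant learned from the first authentication.
    // Authorities that already name a tenant are returned unchanged.
    public static string BindToTenant(string authority, string tenantId)
    {
        var uri = new Uri(authority);
        string[] segments = uri.AbsolutePath.Trim('/').Split('/');
        if (!string.Equals(segments[0], "common", StringComparison.OrdinalIgnoreCase))
        {
            return authority;
        }

        segments[0] = tenantId;
        return uri.GetLeftPart(UriPartial.Authority) + "/" + string.Join("/", segments);
    }
}
```

For example, an app could start with https://login.windows.net/common and, after the user signs in, continue against the authority that BindToTenant returns for that user's tenant.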

Experience modifiers

ADAL offers various flags for exercising more control over how the experience takes place, such as flags for guaranteeing that the end user will be prompted no matter what’s in the cache.

Direct use of refresh tokens

For expert users, ADAL offers methods for using refresh tokens directly. Those methods do not affect the cache content.

To Learn More

ADAL is designed to help you to take advantage of Active Directory in your apps with a simple object model which does not require you to get a PhD in protocols & information security. It is one of the most obvious examples of the on-premises/cloud symmetry in Microsoft’s offering, given that the same code can be pointed to a Windows Azure AD or to an ADFS with practically no changes. I am incredibly proud of what the team has accomplished, and I feel privileged to have had the chance of working with such fine engineers on this key component.

Now that we finally hit GA, you can use ADAL .NET in production in your own apps: if you have questions, doubts or feedback feel free to hit the Contact tab and send me a note! Also: ADAL .NET is just the first release of the ADAL family. Stay tuned for news in this area.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

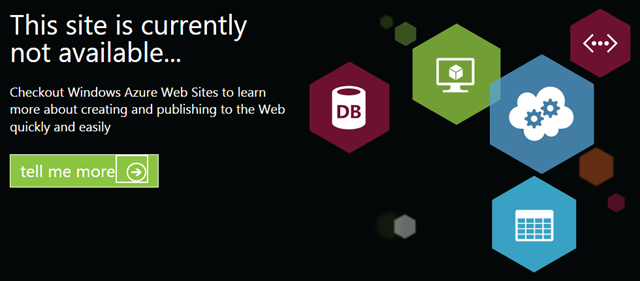

My (@rogerjenn) OakLeaf Windows Azure Website Upgraded to Standard Tier to Avoid Outages from Memory Use post of 9/20/2013 begins:

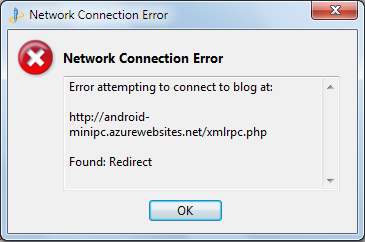

Adding new posts to my Android MiniPCs and TVBoxes Shared Windows Azure Web Site (WAWS) began suspending availability and redirecting to this Black Screen of Death about 15 minutes after each clock hour:

Note: For details about the start of this problem, see my Oakleaf Windows Azure Website Encounters Memory Usage Limit of 9/17/2013.

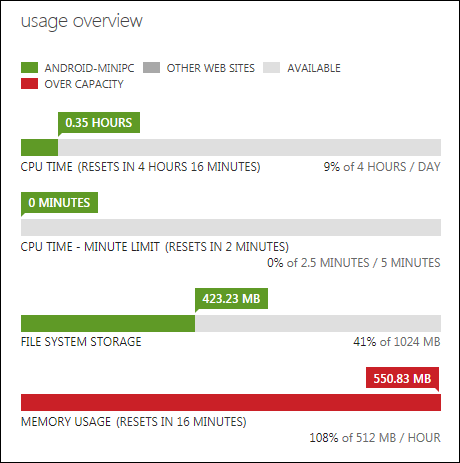

The Windows Azure Management Portal’s Usage Overview screen showed 390.53 of 512 MB/hour Memory usage one minute after being reset:

Running a Shared tier WAWS, which has a memory usage cap of 512 MB per hour, costs about US$10/month. A Small-instance Standard tier WAWS, which includes 1.75 GB of memory, costs $0.10 per hour or about $75.00 per month. Fortunately, I’m running the site under a Microsoft Partner Network Cloud Essentials subscription, which includes $100 per month of Windows Azure services. Otherwise, I would have moved the site to a free service, such as Blogger.

Note: The Windows Azure Cloud Essentials benefit is no longer available to new subscribers.

…

My (@rogerjenn) Oakleaf Windows Azure Website Encounters Memory Usage Limit post of 9/19/2013 reported:

Attempts to update a post to my Android MiniPCs and TVBoxes Shared Windows Azure Web Site (WAWS) with Windows Live Writer began throwing the following error at about 1:00 PM on 9/16/2013:

Attempts to access the site returned [the above] dreaded BSOD equivalent.

The Windows Azure Management Portal’s dashboard reported the Android mini-pc site was suspended:

Here’s the Usage Overview section of the report:

High memory usage probably results from caching this site’s larger-than-average posts with the WordPress Super Cache.

…

Patrick reported Oracle Linux image available in Windows Azure starting September 23rd in a 9/20/2013 post to the Windows Azure Technical Support Team:

We are happy to announce that on September 23rd, Oracle Linux 6.4 (Red Hat compatible kernel) will be available in Windows Azure.

The Oracle image will be an endorsed image and will have the following characteristics for support:

- The Oracle Linux images will be published as Bring-Your-Own-License, which means the customer must have a support contract with Oracle already in place.

Support for Oracle Linux images published in Windows Azure is provided by Oracle. Customers should engage Oracle support directly. For Windows Azure Platform issues, please go to http://www.windowsazure.com/en-us/support/options/.

We hope this new image in Azure will be a great success and I encourage all of you to give it a whirl and let us (and Oracle) know what you think!

Azure Support would not be possible without your constructive feedback so please tell us your thoughts!

Note: The link to the original version of this post is broken.

Mary Jo Foley (@maryjofoley) asserted “Microsoft and AT&T are working on a new service, due next year, that will connect AT&T's MPLS VPN to Windows Azure” in a summary of her Microsoft and AT&T to provide VPN-to-Windows Azure service article of 9/18/2013 for ZDNet’s All About Windows blog:

Microsoft and AT&T will be providing a way for users to connect AT&T's virtual private networking technology to Windows Azure, Microsoft's public cloud, the pair announced on September 18.

The new offering will be available some time in the first half of 2014, and will use AT&T's cloud integration technology, AT&T NetBond, to connect AT&T's Multi-Protocol Label Switching (MPLS)-based VPN offering to Azure. AT&T customers already can use NetBond to connect their own datacenters to AT&T's other cloud offerings.

"Customers of the (new Microsoft-AT&T) solution are expected to benefit from the enterprise-grade security of virtual private networking, with as much as 50 percent lower latency than the public Internet, and access to cloud resources from any site using almost any wired or wireless device," according to Microsoft's press release outlining the new partnership.