Windows Azure and Cloud Computing Posts for 7/22/2013+

Top stories this week:

- Scott Guthrie (@scottgu) blogged Windows Azure July Updates: SQL Database, Traffic Manager, AutoScale, Virtual Machines on 7/23/2013 in the Windows Azure SQL Database, Federations and Reporting, Mobile Services section.

- The SQL Server Team (@SQLServer) announced Premium Preview for Windows Azure SQL Database Now Live! on 7/23/2013 in the Windows Azure SQL Database, Federations and Reporting, Mobile Services section.

- Eric Ligman (@ericligman) announced the availability of new free eBooks about Windows Azure in the Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses section.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

‡ Updated 7/28/2013 with new articles marked ‡.

• Updated 7/24/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

‡ Andrew Brust (@andrewbrust) posted The Odd Couple: Hadoop and Data Security to his ZDNet Big on Data blog on 7/24/2013:

This guest post comes courtesy of Tony Baer’s OnStrategies blog. Tony is a principal analyst, covering Big Data, at Ovum.

By Tony Baer

Until now, security wasn’t a term that would normally be associated with Hadoop. Hadoop was originally conceived for a trusted environment, where the user base was confined to a small elite set of advanced programmers or statisticians and the data was largely weblogs. But as Hadoop crosses over to the enterprise, the concern is that many enterprises will want to store pretty sensitive data.

So what stood for Hadoop security up till now was using Kerberos to authenticate users for firing up remote clusters if the local ones were already used; accessing specific MapReduce tasks; applying coarse-grained access control to HDFS files or directories; or providing authorization to run Hive metadata tasks.

Security is a headache for even the most established enterprise systems. But getting beyond concern about Trojan Horses and other incursions, there is a laundry list of capabilities that are expected with any enterprise data store. There are the "three As" of Authorization, Access, and Authentication; there is the concern over the sanctity of the data; and there may be requirements for restricting access based on workload type and capacity utilization concerns. Clearly there are many missing pieces for Hadoop waiting to fall into place.

For starters, Hadoop is not a monolithic system, but a collection of modules that correspond to open source projects or vendor proprietary extensions. Until now the only single mechanism has been Kerberos tokens for authenticating users to different Hadoop components, but only standalone mechanisms for admitting named users (e.g. to Hive metadata stores). You could restrict user access at the file system or directory level, but for the most part such measures are only useful for preventing accidental erasures. And when it comes to monitoring the system (e.g., JobTracker, TaskTracker), you can do so from insecure Web clients.

There are more missing pieces concerning data, as nothing was built into the Apache project. There was no standard way for encrypting data, and neither was there any way for regulating who can have what kinds of privileges with which sets of data. Obviously, that matters when you transition from low level Web log data to handling names, account numbers, account balances or other personal data. And by the way, even low level machine data such as Web logs or logs from devices that detect location grow sensitive when associated with people’s identities.

There’s a mix of activity on the open source and vendor proprietary sides for addressing the void. There are some projects at incubation stage within Apache, or awaiting Apache approval, for providing LDAP/Active Directory linked gateways (Knox), data lifecycle policies (Falcon), and APIs for processor-based encryption (Rhino). There’s also an NSA-related project for adding fine-grained data security (Accumulo) based on Google BigTable constructs. And Hive Server 2 will add the LDAP/AD integration that’s currently missing.

For the near term, not surprisingly, vendors are working to fill the void. Besides employing file system or directory-level permissions, you could physically isolate specific clusters, then superimpose perimeter security. Or you could use virtualization to similar effect, although that was not what it was engineered for. Zettaset Secure Data Warehouse includes role-based access control to augment Kerberos authentication, and applies a form of virtualization with its proprietary file system swap-out for HDFS; VMware’s Project Serengeti is intended to open source the API to virtualization.

Activity monitoring, that is, tracking what happens to data over its lifecycle, is supposed to be the domain of Apache Falcon, which is still in incubation. But vendors are beating the Apache project to the punch: IBM has extended its InfoSphere Guardium data lineage tool to monitor who is interacting with which data in HDFS, HBase, Hive, and MapReduce. Cloudera has recently introduced the Navigator module to Cloudera Manager to monitor data interaction, while Revelytix has introduced Loom, a tool for tracking the lineage of data feeding into HDFS. These offerings are the first steps, but not the last word, for establishing audit trails. Clearly, such capability will be essential as Hadoop gets utilized for data and applications that are subject to regulatory compliance or that must enforce privacy protections over data.

Similarly, there are also active moves toward masking or encrypting Hadoop data. Although there is nothing built into the Hadoop platform that prevents data masking or encryption, the sheer volume of data involved has tended to keep the task selective at best. IBM has extended InfoSphere Optim to provide selective data masking for Hadoop; Dataguise and Protegrity offer similar capabilities for encryption at the HDFS file level. Intel, however, holds the trump cards here with the recent Project Rhino initiative for open sourcing APIs for implementing hardware-based encryption, stealing a page from the SSL appliance industry (it forms the basis of Intel's new, hardware-optimized Hadoop distro).

It's in this context that Cloudera has introduced Sentry, a new open source project for providing role-based authorization for creating Hive Server 2 and Impala 1.1 tables. It’s a logical step for implementing the types of data protection measures for table creation and modification that are taken for granted in the SQL world, where privileges are differentiated by role for specific actions (e.g., select views and tables, insert to tables, transform schema at server or node level).

There are several common threads to Cloudera’s announcement. It breaks important new ground for adding features that gradually raise Hadoop security to the level of the SQL database world, yet also reveals what’s missing. For instance, the initial release lacks any visual navigation of HDFS files, Hive or Impala metadata tables, and data structures; it also lacks the type of time-based authorization that is common practice with Kerberos tickets. Most importantly, it lacks direct integration with Active Directory or LDAP (it can be performed manually), and it stores permissions locally. Sentry is only version 1; much of this wish list is already on Cloudera’s roadmap.

All these are good starts, but the core issue is that Sentry, like all Hadoop security features, remains a point solution lacking any capability for integrating with common systems of record for identity and access management – or end user directories. Sure, for many of these offerings, you can perform the task manually and wind up with piecemeal integration.

Admittedly, it’s not that single sign-on is ubiquitous in the SQL world. You can take the umbrella approach of buying all your end user and data security tools from your database provider, but there remains an active market for best of breed – that’s popular for enterprises seeking to address points of pain. But most best of breed SQL data security tools incorporate at least some provision for integration with directories or broader-based identity and access management systems.

Incumbents such as IBM, Oracle, Microsoft, and SAP/Sybase will be (and in some cases already are) likely to extend their protection umbrellas to Hadoop. That places the onus on third parties – both veterans and startups – on the Hadoop side to get their acts together and align behind some security gateway framework allowing centralized administration of authentication, access, authorization, and data protection measures. Incubating projects like Apache Knox offer some promise, but again, the balkanized nature of open source projects threatens to make this a best of breed challenge.

For Hadoop to go enterprise, the burden of integration must be taken off the backs of enterprise customers. For Hadoop security, can the open source meritocracy stop talking piecemeal projects before incumbents deliver their own faits accomplis: captive open source silos to their own security umbrellas?

Mike Wood (@mikewo) wrote An Introduction to Windows Azure BLOB Storage for the Simple-Talk Newsletter and Red Gate Software published it on 7/12/2013 (missed when posted):

Windows Azure BLOB storage service can be used to store and retrieve Binary Large Objects (BLOBs), or what are more commonly known as files. In this introduction to the Windows Azure BLOB Storage service we will cover the difference between the types of BLOBs you can store, how to get files into and out of the service, how you can add metadata to your files and more.

There are many reasons why you should consider using BLOB storage. Perhaps you want to share files with clients, or off-load some of the static content from your web servers to reduce the load on them. However, if you are using Azure’s Platform as a Service (PaaS), also known as Cloud Services, you’ll most likely be very interested in BLOB storage because it provides persistent data storage. With Cloud Services you get dedicated virtual machines to run your code on without having to worry about managing those virtual machines. Unlike the hard drives found in Windows Azure Virtual Machines (the Infrastructure as a Service, or IaaS, offering from Microsoft), the hard drives used in Cloud Services instances are not persistent. Because of this, any files you want to have around long term should be put into a persistent store, and this is where BLOB storage is so useful.

Where Do We Start?

BLOB Storage, Windows Azure Tables and Windows Azure Queues make up the three Windows Azure Storage services. Azure Tables are a non-relational, key-value-pair storage mechanism and the Queue service provides basic message-queuing capabilities. All three of these services store their data within a Windows Azure Storage Account, which we will need to get started. At the time of writing, each account can hold up to 200 TB of data in any combination of Tables, Queues or BLOBs, and all three can be accessed via public HTTP or HTTPS REST based endpoints, or through a variety of client libraries that wrap the REST interface.

To get started using the BLOB service, we’ll first need to have a Windows Azure account and create a Storage Account. You can get a free trial account or, if you have an MSDN Subscription, you can sign up for your Windows Azure benefits in order to try out the examples included in this article. After you have signed up for your Azure account, you can create a storage account to store BLOBs.

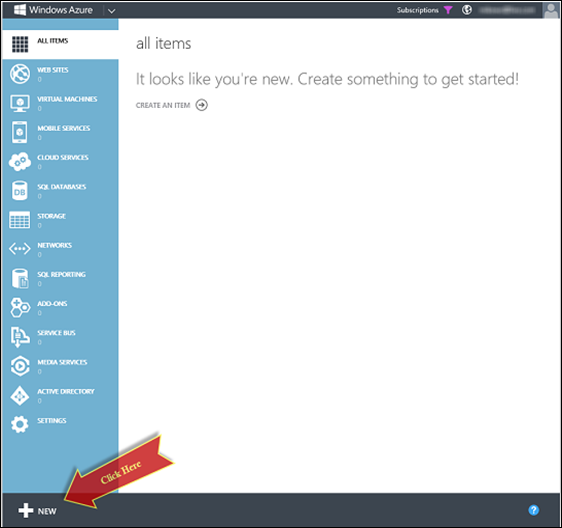

To create a storage account, log in to the Windows Azure management portal at https://manage.windowsazure.com. After you log in to the portal you can quickly create a Storage Account by clicking on the large NEW icon at the bottom left-hand corner of the portal.

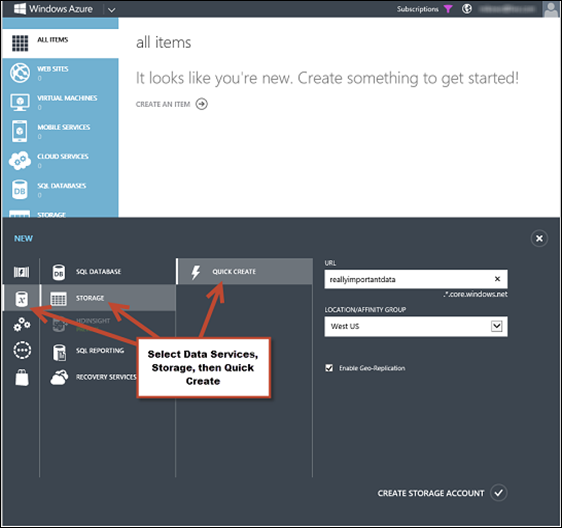

From the expanding menu select the ‘Data Services’ option, then ‘Storage’ and finally, ‘Quick Create’.

You will now need to provide a name for your storage account in the URL textbox. This name is used as part of the URL for the service endpoint and so it must be globally unique. The portal will indicate whether the name is available whenever you pause or finish typing. Next, you select a location for your storage account by selecting one of the data center locations in the dropdown. This location will be the primary storage location for your data, or more simply, your account will reside in this Data Center. If you have created ‘affinity groups’, which are friendly names for collections of services you want to run in the same location, you will also see them in your drop down list. If you have more than one Windows Azure subscription related to your login address, you may also see a dropdown list to enable you to select the Azure subscription that the account will belong to.

All storage accounts are stored in triplicate, with transactionally-consistent copies in the primary data center. In addition to that redundancy, you can also choose to have ‘Geo Replication’ enabled for the storage account. ‘Geo Replication’ means that the Windows Azure Table and BLOB data that you place into the account will not only be stored in the primary location but will also be replicated in triplicate to another data center within the same region. So, if you select ‘West US’ for your primary storage location, your account will also have a triplicate copy stored in the East US data center. This mapping is done automatically by Microsoft and you can’t control the location of your secondary replication, but it will never be outside of a region so you don’t have to worry about your West US based account somehow getting replicated to Europe or Asia as part of the Geo Replication feature. Storage accounts that have Geo Replication enabled are referred to as geo redundant storage (GRS) and cost slightly more than accounts that do not have it enabled, which are called locally redundant storage (LRS).

Once you have selected the location and provided a name, you can click the ‘Create Storage Account’ action at the bottom of the screen. The Windows Azure portal will then generate the storage account for you within a few moments. When the account is fully created, you will see a status of Online. By selecting the new storage account in the portal, you can retrieve one of the access keys we will need in order to work with the storage account.

Click on ‘Manage Access Keys’ at the bottom of the screen to display the storage account name, which you provided when you created the account, and two 512 bit storage access keys used to authenticate requests to the storage account. Whoever has these keys will have complete control over your storage account short of deleting the entire account. They would have the ability to upload BLOBs, modify table data and destroy queues. These account keys should be treated as a secret in the same way that you would guard passwords or a private encryption key. Both of these keys are active and will work to access your storage account. It is a good practice to use one of the keys for all the applications that utilize this storage account so that, if that key becomes compromised, you can use this dialog to regenerate the key you haven’t been using, then update all the apps to use that newly regenerated key and finally regenerate the compromised key. This would prevent anyone abusing the account with the compromised key.
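The rotation order described above can be sketched in Python. This is a toy in-memory model, not an Azure API: the `StorageAccountKeys` class, its method names, and the `apps` dictionary are hypothetical stand-ins for the portal's regenerate action and for your applications' configuration.

```python
import secrets


class StorageAccountKeys:
    """Toy model of a storage account's two access keys (hypothetical, not an Azure API)."""

    def __init__(self):
        self.keys = {"primary": self._new_key(), "secondary": self._new_key()}

    @staticmethod
    def _new_key():
        # 64 random bytes = 512 bits, the key size mentioned in the article.
        return secrets.token_bytes(64)

    def regenerate(self, name):
        """Replace one key; the old value stops being valid."""
        self.keys[name] = self._new_key()
        return self.keys[name]

    def is_valid(self, key):
        return key in self.keys.values()


def rotate_after_compromise(account, apps, compromised="primary"):
    """Rotate keys in the order described above; `apps` maps app name -> key in use."""
    spare = "secondary" if compromised == "primary" else "primary"
    fresh = account.regenerate(spare)   # 1. regenerate the key nobody has been using
    for app in apps:                    # 2. point every app at the fresh key
        apps[app] = fresh
    account.regenerate(compromised)     # 3. invalidate the leaked key
    return spare
```

Regenerating the unused key first means the applications never hold a key that has already been invalidated, so the switch can happen without an outage.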

Your storage account is now created and we have what we need to work with it. For now, get a copy of the Primary Access Key by clicking on the copy icon next to the text box.

What Kind of BLOB is that?

Any file type can be stored in the Windows Azure BLOB Storage service, such as image files, database files, text files, or virtual hard drive files. However, when they are uploaded to the service they are stored as either a Page BLOB or a Block BLOB depending on how you plan on using that file or the size of the file you need to work with.

Page BLOBs are optimized for random reads and writes so they are most commonly used when storing virtual hard drive files for virtual machines. In fact, the Page BLOB was introduced when the first virtual drive for Windows Azure was announced: the Windows Azure Cloud Drive (at the time they were known as Windows Azure X-Drives). Nowadays, the persisted disks used by Windows Azure Virtual Machines (Microsoft’s IaaS offering) also use the Page BLOB to store their data and Operating System drives. Each Page BLOB is made up of one or more 512-byte pages of data, up to a total size limit of 1 TB per file.

The majority of files that you upload would benefit from being stored as Block BLOBs, which are written to the storage account as a series of blocks and then committed into a single file. We can create a large file by breaking it into blocks, which can be uploaded concurrently and then committed together into a single file in one operation. This provides us with faster upload times and better throughput. The client storage libraries manage this process by uploading files of less than 64 MB in size in a single operation, and uploading larger files across multiple operations by breaking down the files and running the concurrent uploads. A Block BLOB has a maximum size of 200 GB. For this article we will be using Block BLOBs in the examples.
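As a rough illustration of the block upload flow described above, the following Python sketch plans the blocks for a file, uploads them concurrently, and then commits them in order. The 64 MB threshold comes from the article; the 4 MB block size, the block ID scheme, and the `put_blob`, `put_block` and `commit_block_list` callables are assumptions made for this sketch and are not the client library's actual API.

```python
import base64
from concurrent.futures import ThreadPoolExecutor

SINGLE_UPLOAD_LIMIT = 64 * 1024 * 1024  # threshold quoted in the article
BLOCK_SIZE = 4 * 1024 * 1024            # assumed block size for this sketch


def plan_blocks(total_size, block_size=BLOCK_SIZE):
    """Return (block_id, offset, length) tuples covering total_size bytes."""
    blocks = []
    for index, offset in enumerate(range(0, total_size, block_size)):
        # Fixed-width counters keep every encoded block ID the same length.
        block_id = base64.b64encode(f"{index:08d}".encode()).decode()
        blocks.append((block_id, offset, min(block_size, total_size - offset)))
    return blocks


def upload(data, put_blob, put_block, commit_block_list,
           single_limit=SINGLE_UPLOAD_LIMIT, block_size=BLOCK_SIZE, workers=4):
    """Upload `data` through caller-supplied (hypothetical) transport callables.

    Returns the number of upload operations used for the payload.
    """
    if len(data) < single_limit:
        put_blob(data)  # small file: a single operation
        return 1
    blocks = plan_blocks(len(data), block_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Blocks may finish in any order; the commit step fixes the final order.
        list(pool.map(lambda b: put_block(b[0], data[b[1]:b[1] + b[2]]), blocks))
    commit_block_list([block_id for block_id, _, _ in blocks])
    return len(blocks)
```

Because the commit call lists the block IDs explicitly, the uploads themselves do not need to complete in sequence, which is what makes the concurrent transfer safe.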

Upload a File Already!

With a storage account created, and our access key available, we can utilize any of the three Windows Azure storage services including BLOB storage from outside of Windows Azure. Of course, you’ll want a way of viewing your BLOB storage in the same way you look at any other file system. We’ll show you how a bit later, but if you’re developing an application, your first concern will probably be to have the means to upload files automatically from code. We will therefore now look at the code that is required to upload a file, starting with a simple console application that uploads a file using the 2.0 Windows Azure .NET client library in C#.

Using Visual Studio, create a new C# Console application.

When the standard console application is created from the template, it will not have a reference to the storage client library. We will add it using the Package Manager (NuGet).

Right-click on the project, and select ‘Manage NuGet Packages’ from the context menu.

This will load up the Package Manager UI. Select the ‘Online’ tab from the Package Manager dialog, and search for ‘Azure Storage’. As of the time of this writing version 2.0.5.1 was available. Select the Windows Azure Storage package and click ‘Install’.

If you prefer to manage your packages via the Package Manager Console, you can also type Install-Package WindowsAzure.Storage to achieve the same result. The Package Manager will add the references needed by the storage client library. Some of these references won’t be utilized when just working with BLOB storage. Below you can see that several assembly references were added, but specifically the Microsoft.WindowsAzure.Configuration and Microsoft.WindowsAzure.Storage assemblies are what we will be working with in this example.

…

Mike continues with source code and more blob details. Read his entire article here.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ Chris Risner (@chrisrisner) produced a Notifications Hubs with iOS video for Channel9 on 7/26/2013:

In this video, Chris Risner walks you through using Windows Azure's Service Bus Notification Hubs feature to enable push notifications to iOS applications. Notification Hubs give you a way to deliver broadcast style push notifications at scale with a simple API and an Objective-C client SDK. This video will also demonstrate how you can trigger your Notification Hub to deliver a push notification using Windows Azure Mobile Services.

Scott Guthrie (@scottgu) blogged Windows Azure July Updates: SQL Database, Traffic Manager, AutoScale, Virtual Machines on 7/23/2013:

This morning we released some great updates to Windows Azure. These new enhancements include:

- SQL Databases: Support for Automated SQL Export and a New Premium Tier SQL Database option

- Traffic Manager: New support for managing Windows Azure Traffic Manager in the HTML Portal

- AutoScale: Support for Windows Azure Mobile Services, AutoScale rules for Service Bus Queue Depth, Alerts on AutoScale actions

- Virtual Machines: Updates to the IaaS management experiences in the Management Portal

All of these improvements are now available to use immediately (note: some are still in preview). Below are more details about them.

SQL Databases: Support for Automated SQL Database Exports

One commonly requested feature we’ve heard has been the ability for customers to perform recurring, fully automated exports of a SQL Database to a Storage account. Starting today this is now a built-in feature of Windows Azure. You can now export transactionally consistent copies of your SQL Databases, in an automated recurring way, to a .bacpac file in a Storage account using any schedule you wish to define.

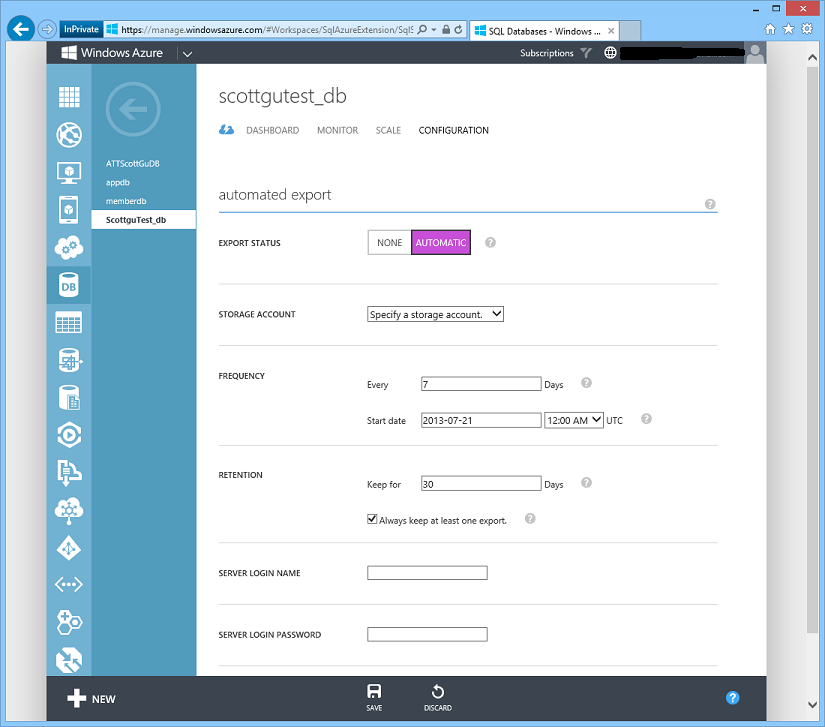

To take advantage of this feature, click on the “Configuration” tab of any SQL Database you would like to set up an automated export rule on:

Clicking the “Automatic” setting on “export status” will expand the page to include several additional configuration options that allow you to configure the database to be automatically exported to a transactionally consistent .bacpac file in a storage account of your choosing:

You can fully automate and control the time and schedule of the exports. By default, it’s set to once per week, but you may set it up to be as frequent as once per day. The start date and time allows you to define when the first export will happen. The time is in UTC, so if you want backups to happen each day at midnight US Eastern time, put 5:00 AM UTC (or 4:00 AM UTC while daylight saving time is in effect). Keep in mind that exports can take several hours depending on the size of the database, so the start time is not a guarantee about when exports will be completed.
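A quick way to work out the UTC value for a given local start time is sketched below in Python. It uses fixed UTC-5 and UTC-4 offsets for US Eastern standard and daylight time as an illustrative assumption; a production scheduler would normally rely on a time zone database instead. The function name `export_start_utc` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets for illustration only.
EST = timezone(timedelta(hours=-5), "EST")  # US Eastern standard time
EDT = timezone(timedelta(hours=-4), "EDT")  # US Eastern daylight time


def export_start_utc(local_start):
    """Convert a timezone-aware local start time to UTC."""
    return local_start.astimezone(timezone.utc)


winter = export_start_utc(datetime(2013, 12, 1, 0, 0, tzinfo=EST))
summer = export_start_utc(datetime(2013, 7, 24, 0, 0, tzinfo=EDT))
print(winter.strftime("%H:%M"), summer.strftime("%H:%M"))  # prints "05:00 04:00"
```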

Next, specify the number of days to keep each export file. You can retain multiple export files. Use the “Always keep at least one export” option to ensure that you always have at least one export file to use as a backup. This overrides the retention period, so even if you stop backups for 30 days, you’ll still have an export.
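One way to read the retention rule above is sketched here in Python. The function name and parameters are hypothetical, and the exact behavior of the service is not documented in this post; the sketch simply assumes that exports older than the retention window are removed unless doing so would leave no export at all.

```python
from datetime import datetime, timedelta


def exports_to_delete(export_times, now, retention_days, always_keep_one=True):
    """Return the export timestamps that fall outside the retention window."""
    cutoff = now - timedelta(days=retention_days)
    expired = sorted(t for t in export_times if t < cutoff)
    if always_keep_one and expired and len(expired) == len(export_times):
        # Every export has expired: spare the most recent one as a backup.
        expired = expired[:-1]
    return expired
```

With a 7-day retention period and exports that stopped 30 days ago, the newest export survives when `always_keep_one` is set, which matches the scenario the paragraph describes.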

Lastly, you’ll need to specify the server login and password for Automated Export to use. After providing the required information for your automated export, click Save, and your first automated export will be kicked off once the Start Date + Time is reached. You can check the status of your database exports (and see the date/time of the last one) in the quick glance list on the “Dashboard” tab view of your SQL Database.

Creating a new Database from an Exported One

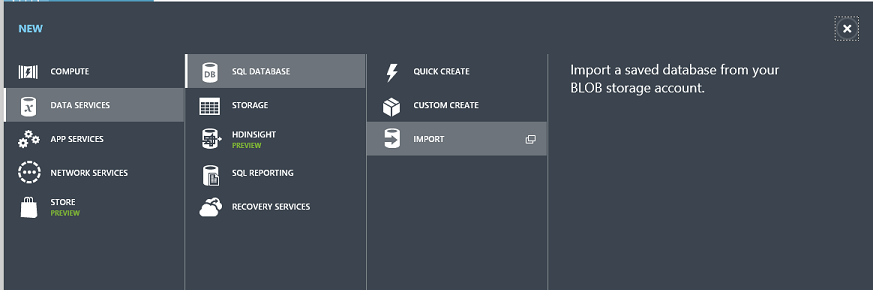

If you want to create a new SQL Database instance from an exported copy, simply choose the New->Data Services->SQL Database->Import option within the Windows Azure Management Portal:

This will then launch a dialog that allows you to select the .bacpac file for your SQL Database export from your storage account, and easily recreate the database (and name it anything you want).

Cost Impact

When an automated export is performed, Windows Azure will first do a full copy of your database to a temporary database prior to creating the .bacpac file. This is the only way to ensure that your export is transactionally consistent (this database copy is then automatically removed once the export has completed). As a result, you will be charged for this database copy on the day that you run the export. Since databases are charged by the day, if you were to export every day, you could in theory double your database costs. If you run every week then it would be much less.

If your storage account is in a different region from the SQL Database, you will be charged for network bandwidth. If your storage account is in the same region there are no bandwidth charges. You’ll then be charged the standard Windows Azure Storage rate (which is priced in GB saved) for any .bacpac files you retain in your storage account.
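The effect of export frequency on the database copy charge can be estimated with a little arithmetic. The Python sketch below assumes that each export bills exactly one extra database-day at the same daily rate, and it ignores the storage and bandwidth charges mentioned above; the function name and the `daily_rate` value are hypothetical.

```python
def monthly_cost_with_exports(daily_rate, days_in_month=30, export_interval_days=7):
    """Estimate the database cost when each export bills one extra database-day."""
    base = daily_rate * days_in_month
    # Exports run on day 0, then every export_interval_days after that.
    export_days = len(range(0, days_in_month, export_interval_days))
    return base + daily_rate * export_days


daily = monthly_cost_with_exports(2, export_interval_days=1)
weekly = monthly_cost_with_exports(2, export_interval_days=7)
print(daily, weekly)  # prints "120 70"
```

With a base cost of 60 for the month, daily exports double it to 120, while weekly exports add five extra database-days for a total of 70.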

Conditions to set up Automated Export

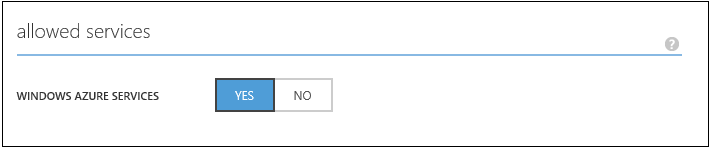

Note that in order to set up automated export, Windows Azure has to be allowed to access your database (using the server login name/password you configured in the automated export rule in the screen-shot above). To enable this, go to the “Configure” tab for your database server and make sure the switch is set to “Yes”:

SQL Databases: Announcing New Premium Tier for Windows Azure SQL Databases

Today, we’re excited to announce the preview of a new Premium Tier for Windows Azure SQL Databases that delivers more predictable performance for business-critical applications. The Premium Tier helps deliver more powerful and predictable performance for cloud applications by dedicating a fixed amount of reserved capacity for a database including its built-in secondary replicas. This capability will help you scale databases even better and with more isolation.

Reserved capacity is ideal for cloud-based applications with the following requirements:

- High Peak Load – An application that requires a lot of CPU, Memory, or IO to complete its operations. For example, if a database operation is known to consume several CPU cores for an extended period of time, it is a candidate for using a Premium database.

- Many Concurrent Requests – Some database applications service many concurrent requests. The normal Web and Business Editions in SQL Database have a limit of 180 concurrent requests. Applications requiring more connections should use a Premium database with an appropriate reservation size to handle the maximum number of needed requests.

- Predictable Latency – Some applications need to guarantee a response from the database in minimal time. If a given stored procedure is called as part of a broader customer operation, there might be a requirement to return from that call in no more than 20 milliseconds 99% of the time. This kind of application will benefit from a Premium database to make sure that dedicated computing power is available.
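To judge whether an application has a latency requirement like the one in the last bullet, you can test measured call times against the target. The Python sketch below checks the "no more than 20 milliseconds 99% of the time" example; the function name and the sample data are hypothetical.

```python
def meets_latency_target(latencies_ms, limit_ms=20.0, fraction=0.99):
    """True if at least `fraction` of the observed calls finished within limit_ms."""
    if not latencies_ms:
        raise ValueError("no latency samples supplied")
    within = sum(1 for value in latencies_ms if value <= limit_ms)
    return within / len(latencies_ms) >= fraction
```

For instance, 99 calls at 10 ms plus one at 50 ms meets the target, while two slow calls out of 100 does not.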

To help you best assess the performance needs of your application and determine if your application might need reserved capacity, our Customer Advisory Team has put together detailed guidance. Read the Guidance for Windows Azure SQL Database premium whitepaper for tips on how to continually tune your application for optimal performance and how to know if your application might need reserved capacity. Additionally, our engineers have put together a whitepaper, Managing Premium Databases, on how to set up, use and manage your new premium database once you are accepted into the Premium preview and quota is approved.

Requesting an invitation to the reserved capacity preview requires two steps:

- Visit the Preview Features page to request access to the Premium preview program. Initial acceptance requires customers with active, paid Windows Azure subscriptions and account administrator responsibility.

- Once your subscription has been activated for the preview program, request a Premium database quota from either the server dashboard or server quickstart in the SQL Databases extension of the Windows Azure Management Portal.

For a closer look at signing up for the Premium preview, please review the short tutorial page, Sign up for Premium preview for Windows Azure SQL Database. For more details on Premium for SQL Database pricing, please visit the Windows Azure SQL Database pricing page.

Traffic Manager: Integrated within the Windows Azure Management Portal

The Windows Azure Traffic Manager is the newest service we’ve added to the Windows Azure Management Portal. Windows Azure Traffic Manager allows you to control the distribution of network traffic to your Cloud Services and VMs hosted within Windows Azure. It does this by allowing you to group multiple deployments of your Cloud Services under a single public endpoint, and allows you to manage the traffic load rules to them.

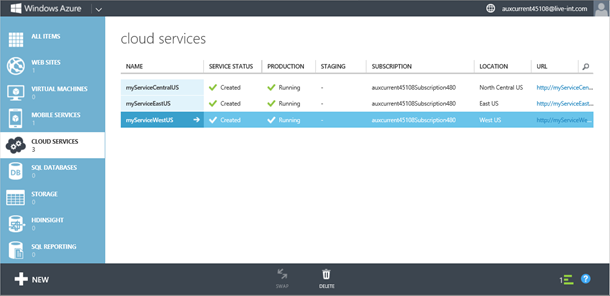

As an example of how to use this, let’s consider a scenario in which a Traffic Manager would help a Cloud Service be highly reliable and available. Let’s say that we have a Cloud Service that has been deployed across three regions: East US, West US and North Central US (using three different cloud service instances: myServiceEastUS, myServiceWestUS and myServiceCentralUS):

If we now wanted to make our Cloud Service efficient and minimize the response time for any request that is made to it, we might want to direct our network requests so that a request originating from an IP range or location goes to the deployed server with the lowest response time for that particular range or location. With Windows Azure Traffic Manager we can now easily do this.

Windows Azure Traffic Manager creates a routing table by pinging your cloud service from various locations around the world and calculates the response times. It then uses this table to redirect requests to your cloud service so that they are served with the lowest possible response times.
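The idea of a latency-based routing table can be sketched in a few lines of Python. This is only a conceptual model of the behavior described above, not Traffic Manager's implementation: the function name, the client locations and the response times are made up for illustration, while the endpoint names come from the example scenario.

```python
def build_routing_table(probes):
    """Map each client location to the endpoint with the lowest measured response time.

    probes: {location: {endpoint: response_time_ms}}
    """
    return {
        location: min(timings, key=timings.get)
        for location, timings in probes.items()
        if timings  # skip locations with no measurements
    }


# Hypothetical measurements for the three deployments in the example.
probes = {
    "Seattle": {"myServiceEastUS": 82, "myServiceWestUS": 14, "myServiceCentralUS": 48},
    "New York": {"myServiceEastUS": 11, "myServiceWestUS": 79, "myServiceCentralUS": 35},
}
table = build_routing_table(probes)
```

Here `table` maps Seattle to `myServiceWestUS` and New York to `myServiceEastUS`, so each request is sent to the deployment that responded fastest for that location.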

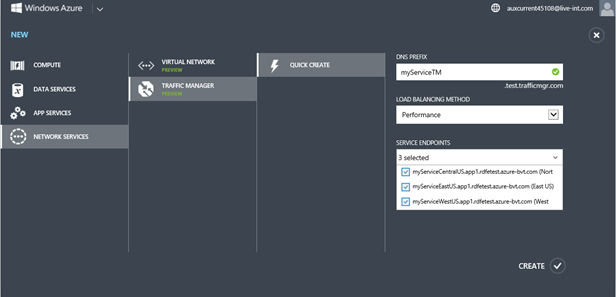

Here is how we could set this up: Create a Traffic Manager profile via NEW -> Network Services -> Traffic Manager -> Quick Create:

We’ll choose the Performance option from the “Load Balancing Method” drop down. We’ll select the three instance deployment endpoints we wish to put within the Traffic Manager (in this case our separate deployments within East US, West US and North Central US) and click the Create button:

Once we have created our Traffic Manager Profile, we can update our public facing domain www.myservice.com to resolve to our Traffic Manager DNS (in this case myservicetm.test.trafficmgr.com).

By clicking on the traffic manager profile we just created within the Windows Azure Management Portal, we can also later add additional cloud service endpoints to our traffic manager profile, change monitoring and health settings, and change other configuration settings such as DNS TTL and the Load Balancing Method.

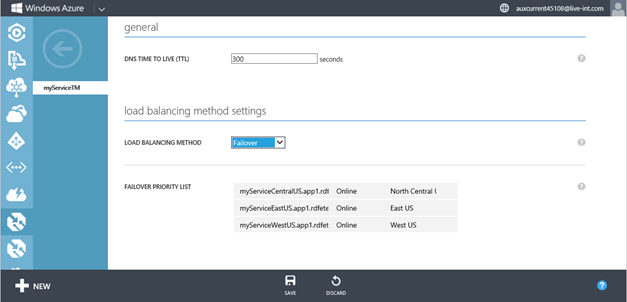

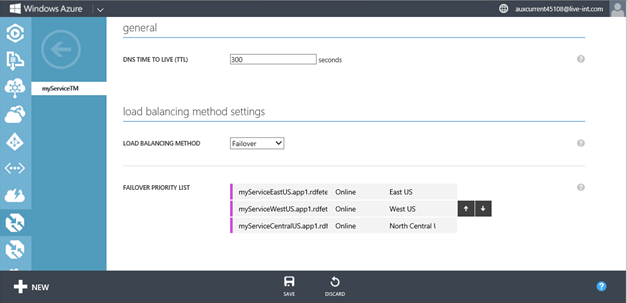

For example, let’s assume we want to later change our Load Balancing Method so that instead of being about performance it is instead optimized for failover scenarios and high availability. Let’s say we want all our requests to be served by West US, and in the event the West US instance fails, we want the East US deployment to take point, followed by the deployment in North Central US if that fails too. We can enable this by going to the Configure tab of our Traffic Manager Profile and changing the Load Balancing Method to Failover:

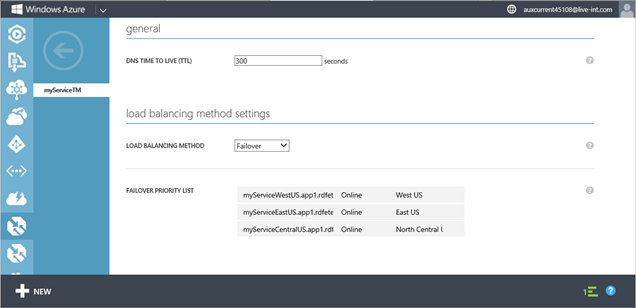

Next, we’ll change the Failover Priority List so that the deployment in West US, myServiceWestUS, is first in the list followed by myServiceEastUS and myServiceCentralUS.

Then we’ll click on Save to finalize the changes:

By changing these settings we’ve now enabled automatic failover rules for our cloud service instances and enabled multi-region reliability. The new integrated Traffic Manager experience within today’s Windows Azure Management Portal update makes configuring all of this super easy to set up.
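To make the Failover behavior concrete, here is a minimal sketch of the selection rule described above: walk the priority list in order and use the first deployment that is currently healthy. The function name and the way health is represented are assumptions for illustration only; the real health monitoring is handled by Traffic Manager.

```python
def pick_endpoint(priority_list, healthy):
    """Return the first deployment in priority order that is healthy."""
    for name in priority_list:
        if name in healthy:
            return name
    return None  # every deployment in the list is down


# Priority order configured in the walkthrough above.
priority = ["myServiceWestUS", "myServiceEastUS", "myServiceCentralUS"]

# Assume West US has failed its health check.
print(pick_endpoint(priority, {"myServiceEastUS", "myServiceCentralUS"}))
```

In this sketch, East US takes over while West US is down, and traffic returns to West US as soon as it is healthy again.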

AutoScale: Mobile Services, Service Bus, Trends and Alerts

Three weeks ago we added new automatic scaling support for Web Sites, Cloud Services and Virtual Machines.

AutoScale enables you to configure Windows Azure to automatically scale your application dynamically on your behalf (without any manual intervention required) so that you can achieve the ideal performance and cost balance. Once configured, AutoScale will regularly adjust the number of instances running in response to the load in your application. We’ve seen a huge adoption of AutoScale in the three weeks that it has been available. Today I’m excited to announce that even more AutoScale features are now available for you to use:

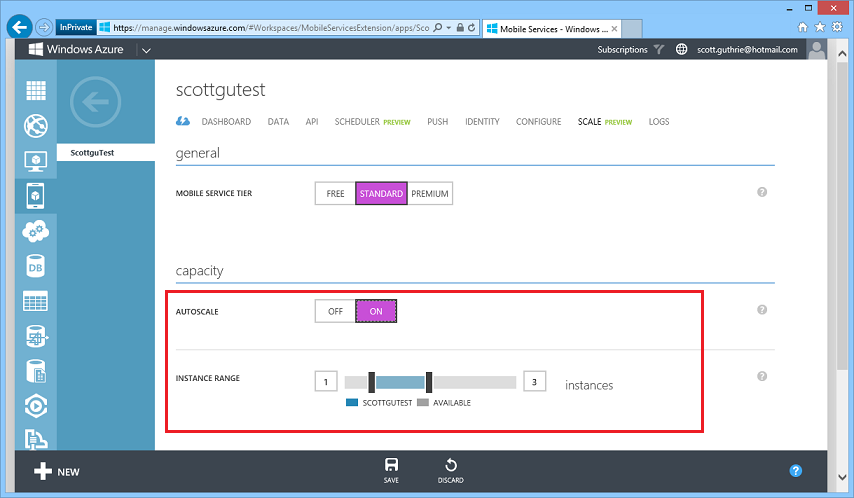

Windows Azure Mobile Services Support

AutoScale now supports automatically scaling Mobile Service backends (in addition to Web Sites, VMs and Cloud Services). This feature is available in both the Standard and Premium tiers of Mobile Services.

To enable AutoScale for your Mobile Service, simply navigate to the “Scale” tab of your Mobile Service and set AutoScale to “On”, and then configure the minimum and maximum range of scale units you wish to use:

When this feature is enabled, Windows Azure will periodically check the daily number of API calls to and from your Mobile Service and will scale up by an additional unit if you are above 90% of your API quota (until reaching the set maximum number of instances you wish to enable).

At the beginning of each day (UTC), Windows Azure will then scale back down to the configured minimum. This enables you to minimize the number of Mobile Service instances you run – and save money.
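The rule described above can be sketched roughly as follows. This is only a model of the behavior as stated in this post, not the AutoScale engine itself; the function name, its parameters, and the assumption that the quota passed in is the daily quota for the current number of units are all hypothetical.

```python
def next_unit_count(current, calls_today, daily_quota, minimum, maximum,
                    new_utc_day=False):
    """Return the number of scale units after one periodic check."""
    if new_utc_day:
        # At the start of each UTC day, drop back to the configured minimum.
        return minimum
    if calls_today > 0.9 * daily_quota:
        # Above 90% of the quota: add one unit, but never exceed the maximum.
        return min(current + 1, maximum)
    return current


print(next_unit_count(current=1, calls_today=950, daily_quota=1000,
                      minimum=1, maximum=3))
```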

Service Bus Queue Depth Rules

The initial preview of AutoScale supported the ability to dynamically scale Worker Roles and VMs based on two different load metrics:

- CPU percentage of the Worker/VM machine

- Storage queue depth (number of messages waiting to be processed in a queue)

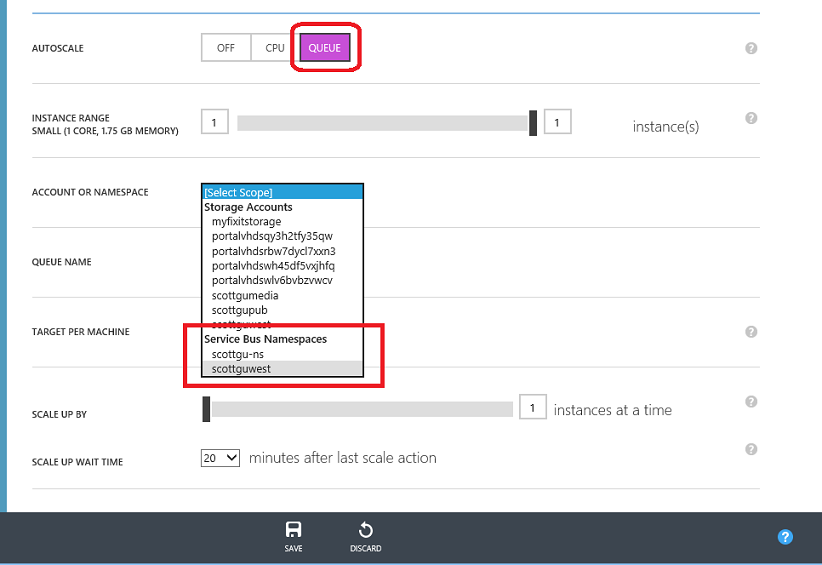

With today’s update, you can also now scale your VMs and Cloud Services based on the queue depth of a Service Bus Queue as well. This is ideal for scenarios where you want to dynamically increase or decrease the number of backend systems you are running based on the backlog of messages waiting to be processed in a queue.

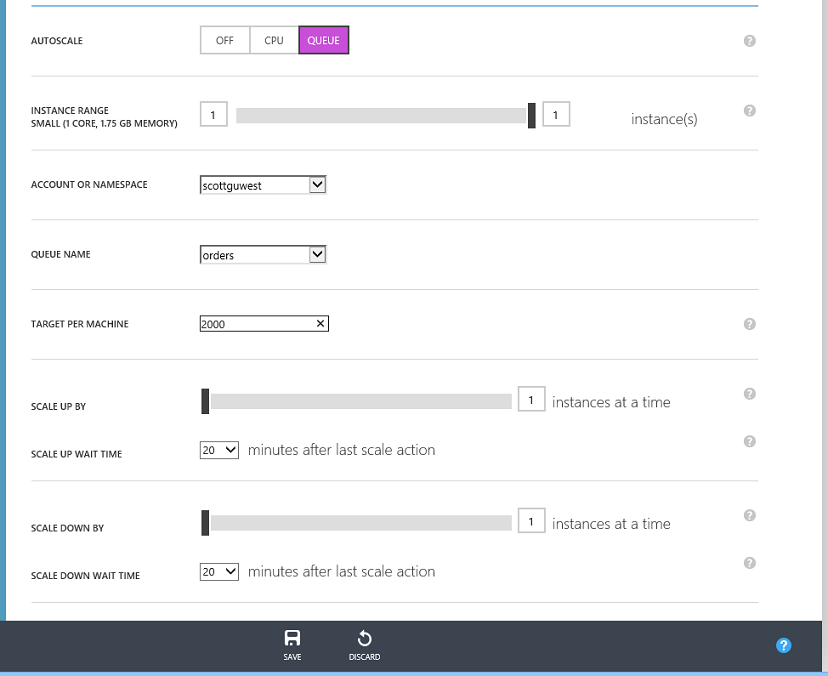

To enable this, choose the “Queue” AutoScale option within the “Scale” tab of a VM or Cloud Service. When you select “Queue” in the AutoScale section, click on the “Account / Namespace” dropdown. You will now see a list of both your Storage Accounts and Service Bus Namespaces:

Once you select a Service Bus namespace, the list of queues in that namespace will appear in the ‘Queue Name’ section. Choose the individual queue that you want AutoScale to monitor:

As with Storage Queues, scaling by Service Bus Queue depth allows you to define a “Target Per Machine”. This target should represent the number of messages that you believe each worker role can handle at a time. For example, if you have a target of 200, and 2000 messages are in the queue, AutoScale will scale until you have 10 machines. It will then dynamically scale up/down as your application load changes.
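The arithmetic behind the example can be sketched as below. This is a simplified model, not the AutoScale engine: the rounding-up behavior and the optional `minimum` and `maximum` bounds are assumptions added for illustration.

```python
import math


def target_instances(queue_depth, target_per_machine, minimum=1, maximum=None):
    """Return how many machines are needed for the current queue backlog."""
    wanted = math.ceil(queue_depth / target_per_machine)
    wanted = max(wanted, minimum)       # assumed lower bound
    if maximum is not None:
        wanted = min(wanted, maximum)   # assumed upper bound
    return wanted


# The example from the text: a target of 200 with 2000 messages queued.
print(target_instances(2000, 200))
```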

Historical Trend Monitoring

When you AutoScale by CPU, we also now show a miniature graph of your role’s CPU utilization over the past week. This can help you set appropriate targets when first configuring AutoScale, and see how AutoScale has affected CPU once it’s turned on.

Alerts

In certain rare scenarios, something may cause the AutoScale engine to fail to execute a rule. We will now inform you in the Windows Azure Management Portal if an AutoScale failure is ongoing:

If you ever see this in the Portal, we recommend monitoring the responsiveness and capacity of your service to make sure that there are currently enough compute instances deployed to meet your goals.

In addition, if the AutoScale engine fails to get metrics, such as CPU percentage, from your virtual machines or website (this can be caused by intermittent network failures or diagnostics failure on the machine), the engine may possibly take a special one-time scale-up action, if your capacity was previously determined to be too low. After this, no further scale actions will be taken until the AutoScale engine can receive metrics again.

Virtual Machines:

Today’s Windows Azure update also includes several nice enhancements to how you create and manage Virtual Machines using the Windows Azure Management Portal.

Richer Custom Create Wizard

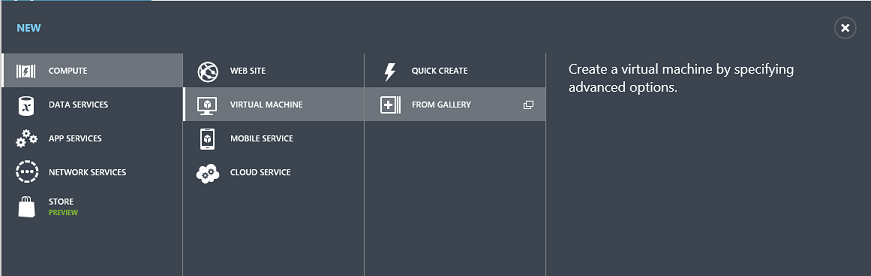

We now expose more Virtual Machine options when you create a new Virtual Machine using the “From Gallery” option in the management portal:

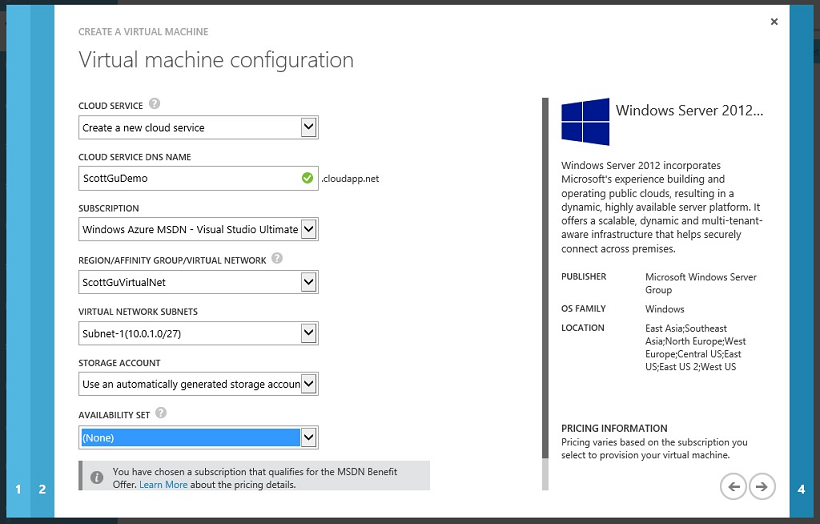

When you select a VM image from the gallery there are now two updated screens that you can use to configure additional options with it – including the ability to place it within a Cloud Service and create/manage availability sets and virtual network subnet settings:

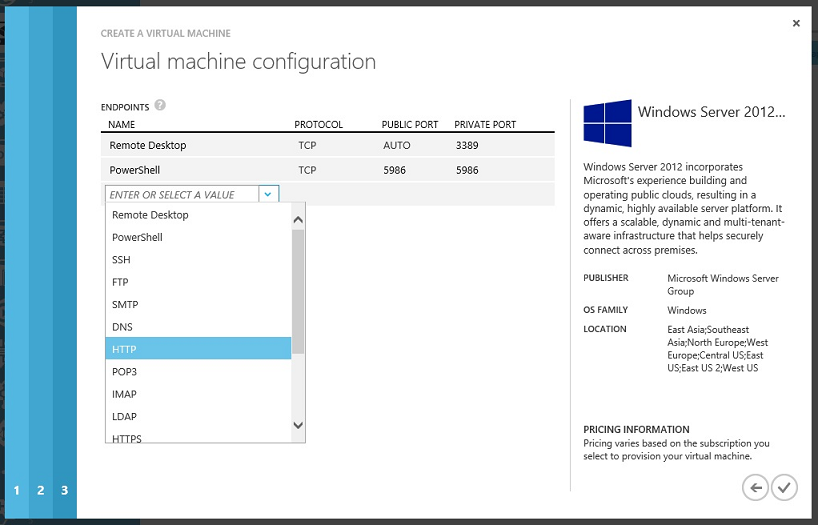

There is also a new screen that allows you to configure and manage network endpoints as part of VM creation within the wizard:

We now enable remote PowerShell by default, and make it really easy for users to configure other well-known protocol endpoints. You can select from well-known protocols from a drop-down list (the screen-shot above shows how this is done) or you can manually enter your own port mapping settings.

Exposing the Cloud Services that are Behind a Virtual Machine

Starting this month you may have noticed that we also now expose the underlying Cloud Service used to host one or more Virtual Machines grouped within a single deployment. Previously we didn’t surface the fact that there was a Cloud Service behind VMs directly in the management portal – now you’ll always be able to access the underlying cloud service if you want (which allows you to control/configure more advanced settings).

Some additional notes:

- You can now use the VM gallery to deploy a VM into an existing, empty Cloud Service. This enables the scenario where you want to customize the DNS name for the deployment before deploying any VMs into it.

- You can now more easily add multiple VMs to a cloud service container using changes we’ve made to the Create VM wizard.

- You can now use the new Traffic Manager support to enable network load traffic distribution to VMs hosted within Cloud Services.

- There are no additional charges for VMs now that the Cloud Services are exposed. They were always created; we’re simply un-hiding them going forward to enable more advanced configuration options to be surfaced.

Summary

Today’s release includes a bunch of great features that enable you to build even better cloud solutions. If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using all of the above features today. Then visit the Windows Azure Developer Center to learn more about how to build apps with it.

The SQL Server Team (@SQLServer) announced Premium Preview for Windows Azure SQL Database Now Live! on 7/23/2013:

On July 8, 2013, at the Worldwide Partner Conference (WPC) in Houston, Texas, Satya Nadella, Server and Tools President, announced a new Premium service for Windows Azure SQL Database that delivers more predictable performance for business-critical applications. This new Premium service further strengthens the data platform vision by giving you more flexibility and choice across on-premises and cloud for your business-class applications.

Today, we’re excited to announce that the limited preview is now live! SQL Database customers with account administrator rights can immediately request an invitation to this preview by visiting the Windows Azure Preview Features page.

The Premium preview for Windows Azure SQL Database will help deliver more powerful and predictable performance for cloud applications by dedicating a fixed amount of reserved capacity for a database including its built-in secondary replicas. This capability will help you raise the bar on the types of modern business applications you bring to the cloud. Reserved capacity is ideal for cloud-based applications with the following requirements:

- High Peak Load – An application that requires a lot of CPU, Memory, or IO to complete its operations. For example, if a database operation is known to consume several CPU cores for an extended period of time, it is a candidate for using a Premium database.

- Many Concurrent Requests – Some database applications service many concurrent requests. The normal Web and Business Editions in SQL Database have a limit of 180 concurrent requests. Applications requiring more connections should use a Premium database with an appropriate reservation size to handle the maximum number of needed requests.

- Predictable Latency – Some applications need to guarantee a response from the database in minimal time. If a given stored procedure is called as part of a broader customer operation, there might be a requirement to return from that call in no more than 20 milliseconds 99% of the time. This kind of application will benefit from a Premium database to make sure that computing power is available.
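To judge whether an application has the kind of latency requirement described in the last bullet, you could check measured call times against the target. The sketch below is only an illustration: the function name and the sample data are hypothetical, and the 20 millisecond / 99% figures are simply the example values from the text.

```python
def meets_latency_target(samples_ms, limit_ms=20.0, fraction=0.99):
    """Return True if at least `fraction` of calls finished within `limit_ms`."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    within = sum(1 for sample in samples_ms if sample <= limit_ms)
    return within / len(samples_ms) >= fraction


# Hypothetical measurements: 99 fast calls and one slow outlier.
samples = [5.0] * 99 + [40.0]
print(meets_latency_target(samples))
```

If a check like this regularly fails on a Web or Business edition database, that is one signal that reserved capacity may be worth evaluating.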

To help you best assess the performance needs of your application and determine if your application might need reserved capacity, our Customer Advisory Team has put together detailed guidance. Read the Guidance for Windows Azure SQL Database premium whitepaper for tips on how to continually tune your PaaS application for optimal performance and how to know if your application might need reserved capacity. Additionally, our engineers have put together a thorough resource, Managing Premium Databases, on how to set up, use and manage your new premium database once you are accepted into the Premium preview and quota is approved.

Requesting an invitation to the reserved capacity preview requires two steps:

- Visit the Preview Features page to request access to the Premium preview program. Initial acceptance requires customers have active, paid Windows Azure subscriptions and account administrator responsibility.

- Once your subscription has been activated for the preview program, request a Premium database quota from either the server dashboard or server quickstart in the SQL Databases extension of the Windows Azure Management Portal.

For a closer look at signing up for the Premium preview, please review the short tutorial page, Sign up for Premium preview for Windows Azure SQL Database.

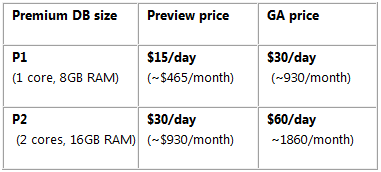

Premium databases are billed daily based on the reservation size, at 50 percent of the general availability price for the duration of the preview (see table below).

Initially, we will offer two reservation sizes: P1 and P2. P1 offers better performance predictability than the SQL Database Web and Business editions, and P2 offers roughly twice the performance of P1 and is suitable for applications with greater peaks and sustained workload demands. Premium databases are billed based on the Premium database reservation size and storage volume of the database.

Premium Database:

Storage: $0.095 per GB per month (prorated daily)

For more details on Premium for SQL Database pricing, please visit the Windows Azure SQL Database pricing page.

We’re excited to see how the new Premium service for SQL Database helps you drive even greater innovation and raise the bar on the types of modern business applications you bring to the cloud. Sign up today!

The Windows Azure SQL Database Team sent messages announcing the availability of the Premium Preview on 7/23/2013:

Click here to sign up.

Pricing details are available here.

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

Haddy El-Haggan (@Hhaggan) explained how to Start coding Service Bus Brokered Messaging using queue in a 7/21/2013 post:

In the introduction to the Service Bus, we saw that it offers two different types of messaging, Relay Messaging and Brokered Messaging, and here are the differences between them. This post and its associated application go into more depth: we now understand the main functionality used in developing an application with the Brokered Messaging API, and have seen how the code can be written with Brokered Messaging, whether with queues or with Topics and Subscriptions.

To create the Windows Azure Service Bus on the Windows Azure account you can read this blog post describing how to do it.

Before starting development, there are a few things to keep in mind. Working with Service Bus doesn’t mean that you have to build a cloud or Windows Azure application; remember that Service Bus is a messaging platform that can connect multiple applications together no matter where they are hosted.

As described in a previous blog post, Building your first Service Bus Brokered Messaging using Queue, I won’t write the same code again. I just want to clarify that this type of messaging is like the queue storage that is used to connect the WebRole and the WorkerRole in Windows Azure Cloud Services. Service Bus Brokered Messaging using queues also connects multiple roles together, although there are certainly some differences between the two; you can find them here.

Here is a complete guide for the Windows Azure Service Bus Libraries, the Microsoft.ServiceBus & the Microsoft.ServiceBus.Messaging, they are based on the .Net SDK 1.8 Version.

Here is a link for the project discussed using Microsoft Windows Azure Service Bus Brokered Messaging using queue.

Haddy El-Haggan (@Hhaggan) described Service Bus Queues Vs Queue Storage in a 7/21/2013 post:

We have seen in previous blog posts how to communicate between the Windows Azure Cloud Services roles, WebRole and WorkerRole, using Queue Storage. I also introduced the Windows Azure Service Bus and showed how to develop against it using queue messaging. Both types of queue are used to connect multiple roles: the WebRole and WorkerRole communication using queue storage explained previously, and inter-role communication using Service Bus queues.

Some people don’t consider Queue storage to be storage at all, for the simple reason that it is not durable. A message in queue storage is kept for a maximum of 7 days, whereas the time to live for Windows Azure Service Bus queues is more flexible, and you can decide its duration when creating the Service Bus itself on Windows Azure. As for message size, queue storage will not allow messages larger than 64 KB, while Service Bus queue messaging accepts messages larger than 64 KB but smaller than 256 KB. On the other hand, keep in mind that Windows Azure Queue storage lets the total size of all queue messages within the time-to-live interval exceed 5 GB, but Service Bus queues will not let you reach this size; it is preferable that the total size of all queues stay below 5 GB during the time-to-live interval of the messages.

However, queue storage is very easy to implement; you can see an example in the following link. Windows Azure Service Bus brokered messaging using queues requires more development, such as integration with a WCF service.
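As a quick illustration of the size limits quoted above, the following sketch reports which queue type could carry a message of a given size. The limits are taken from this post as stated (64 KB and 256 KB); the function name is hypothetical, and treating a message of exactly the limit as acceptable is an assumption.

```python
# Limits as quoted in the post above; exact boundary handling is assumed.
STORAGE_QUEUE_MAX_BYTES = 64 * 1024
SERVICE_BUS_MAX_BYTES = 256 * 1024


def queues_that_fit(message_bytes):
    """Return the queue types whose message size limit allows this message."""
    options = []
    if message_bytes <= STORAGE_QUEUE_MAX_BYTES:
        options.append("storage queue")
    if message_bytes <= SERVICE_BUS_MAX_BYTES:
        options.append("service bus queue")
    return options


print(queues_that_fit(100 * 1024))
```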

You can find all about the predefined functions for the queue storage in the following document. You can also find all the predefined functions for the Microsoft.ServiceBus DLL and the Microsoft.ServiceBus.Messaging DLL.

For more information about the differences, I really recommend reading the following web page.

Travis D. Brown (@travisdbrown) posted Understanding the Basics of Windows Azure Service Bus on 7/19/2013:

As we become more distributed in our everyday lives, we must change our approach and view of how we build software. Distributed environments call for distributed software solutions. According to Wikipedia, a distributed system is a software system in which components located on networked computers communicate and coordinate their actions by passing messages. The most important part of a distributed system is the ability to pass a unified set of messages. Windows Azure Service Bus allows developers to take advantage of a highly responsive and scalable message communication infrastructure through the use of their Relayed Messaging or Brokered Messaging solutions.

Relay Messaging

Relay Messaging provides the most basic messaging requirements for a distributed software solution. This includes the following:

- Traditional one-way Messaging

- Request/Response Messaging

- Peer to Peer Messaging

- Event Distribution Messaging

These capabilities allow developers to easily expose a secured service that resides on a private network to external clients without the need of making changes to your Firewall or corporate network infrastructure.

Relay Messaging does not come without limitations. One of the greatest disadvantages of relay messaging is that it requires both the Producer (sender) and Consumer (receiver) to be online. If the receiver is down and unable to respond to a message, the sender will receive an exception and the message will not be processed. Because of its remoting nature, relay messaging not only creates a dependency on the receiver but also makes all responses subject to network latency. Relay Messaging is not suitable for HTTP-style communication and is therefore not recommended for occasionally connected clients.

Brokered Messaging

Unlike Relay Messaging, Brokered Messaging allows asynchronous decoupled communication between the Producer and Consumer. The main components of the brokered messaging infrastructure that allows for asynchronous messaging are Queues, Topics, and Subscriptions.

Queues

Service Bus queues provide standard FIFO (First In, First Out) queuing. Queues bring a durable and scalable messaging solution that creates a system that is resilient to failures. When messages are added to the queue, they remain there until some single agent has processed the message. Queues allow overloaded Consumers to be scaled out and continue to process at their own pace.
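The queue behavior can be pictured with a tiny in-memory model. This is purely conceptual and does not use the Service Bus API; the `SimpleQueue` class and the message names are invented for the example.

```python
from collections import deque


class SimpleQueue:
    """In-memory stand-in for a FIFO queue; not the Service Bus API."""

    def __init__(self):
        self._messages = deque()

    def send(self, body):
        self._messages.append(body)

    def receive(self):
        # Each message is handed to exactly one receiver, oldest first.
        return self._messages.popleft() if self._messages else None


q = SimpleQueue()
for order in ("order-1", "order-2", "order-3"):
    q.send(order)

# Two competing consumers each take the next available message.
worker_a = q.receive()
worker_b = q.receive()
print(worker_a, worker_b)
```

Adding more consumers drains the backlog faster, but no message is delivered to more than one of them.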

Topics and Subscriptions

In contrast to queues, Topics and Subscriptions permit one-to-many communication which enables support for the publish/subscribe pattern. This mechanism of messaging also allows Consumers to choose to receive discrete messages that they are interested in.
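The same kind of in-memory model shows how a topic differs from a queue: every subscription gets its own copy of each published message, optionally narrowed by a filter. Again, this is a conceptual sketch and not the Service Bus API; the `Topic` class, subscription names, and message fields are hypothetical.

```python
class Topic:
    """In-memory stand-in for publish/subscribe; not the Service Bus API."""

    def __init__(self):
        self._subscriptions = {}

    def subscribe(self, name, wants=lambda message: True):
        # `wants` is a filter deciding which messages this subscription keeps.
        self._subscriptions[name] = (wants, [])

    def publish(self, message):
        for wants, inbox in self._subscriptions.values():
            if wants(message):
                inbox.append(message)

    def inbox(self, name):
        return list(self._subscriptions[name][1])


topic = Topic()
topic.subscribe("audit")                                   # receives everything
topic.subscribe("alerts", lambda m: m["temp"] > 90)        # only hot readings

topic.publish({"machine": "press-1", "temp": 72})
topic.publish({"machine": "press-2", "temp": 95})

print(len(topic.inbox("audit")), len(topic.inbox("alerts")))
```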

Common Use Cases

When should you consider using Windows Azure Service Bus? What problems could Service Bus solve? There are countless scenarios where you may find benefits in your application having the ability to communicate with other applications or processes. A few examples include an inventory transfer system or a factory monitoring system.

Inventory Transfer

In an effort to offer exceptional customer service, most retailers will allow their customers to have merchandise transferred to a store that is more conveniently located to their customers. Therefore, the store that has the merchandise must communicate this transaction to the store that will be receiving the product. This includes information such as logistical information, customer information, and inventory information. To solve this problem using Windows Azure Service Bus, the retailer would set up a relay messaging service for all retail locations that could receive a message describing the inventory transfer transaction. When the receiving store gets this notification, it will use this information to track the item and update its inventory.

Factory Monitoring

Windows Azure Service Bus could also be used to enable factory monitoring. Typically machines within a factory are constantly monitored to ensure system health and safety. Accurate monitoring of these systems is a cost saver in the manufacturing industry because it allows factory workers to take a more proactive response to potential problems. By taking advantage of Brokered Messaging, the factory robots and machines can broadcast various KPI (Key Performance Indicator) data to the server to allow subscribed agents such as monitoring software to respond to the broadcasted messages.

Summary

In summary, Windows Azure Service Bus offers a highly responsive and scalable solution for distributed systems. For basic request/response or one-way messaging such as transferring inventory within a group of retail stores, Relay Messaging will meet most system requirements. If your requirements call for a more flexible system that will support asynchrony and multiple message consumers, it is better to take advantage of the Queues and Topics that are made available in Brokered Messaging.

No significant articles today

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Workflow

Vittorio Bertocci (@vibronet) explained Securing a Web API with Windows Azure AD and Katana in a 7/23/2013 post:

During the Active Directory //BUILD/ 2013 talk, I briefly touched on how the Web APIs in my sample scenarios were secured using the new OWIN middleware offered by ASP.NET’s Katana project, as opposed to the custom code we describe in our current native app+Web API walkthrough.

I had a lot of ground to cover, hence I did not have the chance of going in any depth; however the topic is super important, hence here I’ll provide a more thorough coverage of how you can use Katana to secure access to Web API via Windows Azure AD. Here’s how it’s going to go down:

- First I’ll give you a quick introduction to Owin, Katana and why the entire thing is very relevant to your interests if you want to secure a Web API with Active Directory.

- That done, we’ll dive straight into Visual Studio and the Windows Azure AD portal to give a practical demonstration of how easy it is to secure a Web API in this brave new host-independent world.

Backgrounder

As preannounced, here’s the theory. If you already know everything about OWIN and Katana, feel free to jump straight to the Walkthrough section. And if you aren’t really interested in what goes on under the hood, you can also skip directly to the walkthrough: none of the explanations in this backgrounder are strictly necessary for you to effectively use the Katana classes to secure your API.

“OWIN” Who?

That was my reaction when, months ago, I first heard my good friend & esteemed colleague Daniel Roth telling me about this new way of processing HTTP requests in .NET; it is also the reaction I often get when people in my team hear about this for the first time.

“OWIN” stands for “Open Web Interface for .NET”: its home on the web is here. In a nutshell, it is a specification which describes a standard interface between .NET web servers and .NET applications: by decoupling the two, OWIN makes it possible to write very portable code which is independent from the host it is going to be run on.

Did it help? Hmm, I thought so. Let’s try another angle.

Today’s Web applications on .NET mainly target the ASP.NET/IIS platform, which plays both the roles of the host (underlying process management) and the server (network management and requests dispatching). Code that needs to run before your application is typically packaged following conventions that are specific to the ASP.NET/IIS platform: HttpModules, HttpHandlers, and so on. It works great, but it’s all quite monolithic. In practice, that means that if you’d like to target a different host (more and more situations call for running your Web API on a lightweight host; running on multiple platforms requires dealing with diverse hosts; etc) you end up reinventing the wheel multiple times as you have to re-implement all of the host specific logic for the target environments. On the other hand, think if you could unbundle things so that you can pick and choose components for individual functions & pipeline stages, selecting only the ones you need and substituting only the ones that are actually different across hosting platforms. And what’s best is that all those changes would take place beneath your application, which would now be far more portable. Does that sound a bit like Node.JS? Perhaps Rack? Well, that’s by design.

OWIN aims at making that unbundling possible, by defining a very simple interface between the various actors involved in request processing. Now, the guys behind the OWIN project will send me hate mail for oversimplifying, but here’s what I understand to be the core of what constitutes a server in OWIN:

- An environment dictionary, of the form IDictionary<string, object>, used for holding the request processing state: things like the request path, the headers collection, the request bits themselves, and so on

- A delegate, of the form Func<IDictionary<string, object>, Task>, which is used to model how every component appears to the others.

Basically, an app is a list of those components executed in sequence, each of those awaiting the next and passing the environment dictionary to each other. Doesn’t get simpler than this!
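To make the shape of that idea tangible, here is a small language-neutral model of such a pipeline, written in Python rather than C#. It is not Katana code and does not follow the OWIN specification’s actual key names; the dictionary keys, component names, and the `build` helper are all invented for illustration. Each component wraps the next one and receives the same environment dictionary.

```python
import asyncio


def logging_component(next_app):
    async def app(env):
        env["trace"].append("log:before")
        await next_app(env)               # hand the same dictionary onward
        env["trace"].append("log:after")
    return app


def auth_component(next_app):
    async def app(env):
        # Hypothetical check: record whether a credential header is present.
        env["user"] = "caller" if "Authorization" in env["headers"] else "anonymous"
        env["trace"].append("auth")
        await next_app(env)
    return app


async def endpoint(env):
    env["trace"].append("endpoint")
    env["status"] = 200


def build(components, terminal):
    """Chain components so the first one listed runs first."""
    app = terminal
    for component in reversed(components):
        app = component(app)
    return app


env = {"path": "/api/values", "headers": {}, "trace": []}
asyncio.run(build([logging_component, auth_component], endpoint)(env))
print(env["trace"])
```

The order in which the components are listed is the order in which they see the request, which is the property the rest of this post relies on when adding authentication middleware ahead of the Web API.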

I would encourage you to actually read through the OWIN specification. It is really short and easy to grok, especially if you are used to security protocol specifications. The above pretty much sums it up: the main extra info is section 4, where the spec describes the host’s responsibilities for bootstrapping the process, but you’ll hardly find anything surprising there.

What is Katana

Now, if you are a man (or woman) of vision you’ll be already excited about the possibilities this unlocks. If you are a bit of a blue collar like yours truly, though, your reaction might be something to the effect “right right, it’s awesome, but all of my customers/apps run on IIS today. What’s in it for them?”.

Enter the Katana Project. In a nutshell, it is a collection of NuGet packages which 1) makes it possible (and easy!) to run OWIN components on classic ASP.NET/IIS and 2) provides various useful features in form of OWIN components. Note: OWIN components are often called OWIN middleware in literature, and I’ll use the terms myself interchangeably.

I highly recommend reading the “An Overview of Project Katana” whitepaper penned by the always excellent Howard Dierking. It gives a far better introduction/positioning of OWIN than I can ever hope to deliver, and it explains in detail how Katana works. Below I’ll just give the minimal set of practical info I myself needed to understand how to use Katana for my own specific tasks, but of course there is much more that can be accomplished with it.

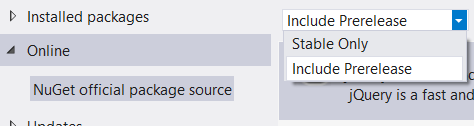

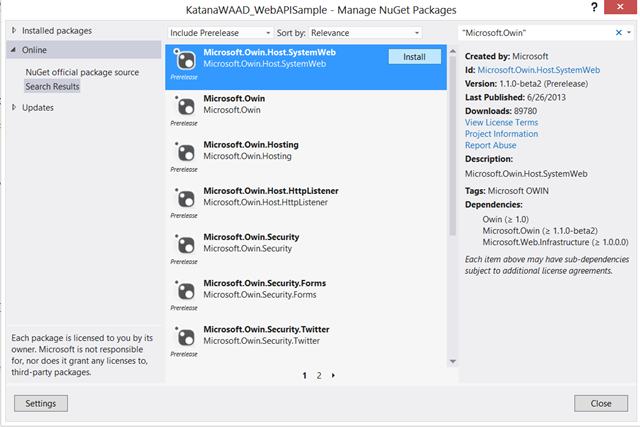

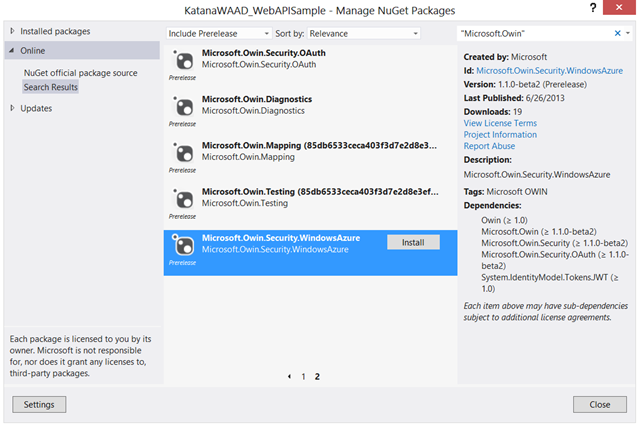

First of all: how to get the bits? Katana is a pretty large collection of NuGets, regularly published via the public feed; however as it is still in prerelease (it follows the VS 2013 rhythm) to see them from the VS UI you need to flip the default “Stable Only” dropdown to “Include Prerelease”.

A good way to zero in on the packages you want is to type “Microsoft.OWIN” in the search field.

If you feel adventurous, you can also access the nightly builds from http://www.myget.org/f/aspnetwebstacknightlyrelease/.

I’ll get back to this in the walkthrough, however: for most intents & purposes, all you need to do to enable your ASP.NET to use OWIN boils down to adding a reference to Microsoft.Owin.Host.SystemWeb. That does quite a lot of magic behind the scenes. The associated assembly is decorated so that it gets registered to execute logic at the pre application start event. At that time, it registers in the ASP.NET webserver pipeline its own HttpModule, designed to host the OWIN pipeline.

At startup time the HttpModule looks for a Startup class, and if it finds it, it executes its Configuration method. This bit is the actionable part for you: if you want to manipulate the OWIN pipeline in your project, the way you can do so is by providing the Startup class and adding your logic in Configuration. I would have never figured this out on my own, but that’s pretty much how that works in convention-based stacks… there is a bit of an initial curve to hike, but once you find out how they work things get super convenient.

Before moving to the practical part, I’ll preempt another question you, as typical reader of this blog, might have: “wait a minute, the ASP.NET pipeline is event based and that’s important for authentication tasks; this OWIN pipeline seems just defined by the order in which you provide components. How do the two gel together?”. Well, I won’t go in the details, but I’ll say enough to reassure you: the two do work together quite well. Katana allows for segments of its pipeline to be decorated by “stage markers” which can refer to classic ASP.NET events: the HttpModule in Katana will ensure that the actual execution of the individual components is interleaved as indicated with the ASP.NET events of choice.

The above constitutes the foundation of all the work you’ll be doing with OWIN. Katana builds on that foundation, by providing out of the box a number of pre-built middleware elements which perform basic functions such as authenticating users from well-known providers. Faithful to the “total composability” principle behind OWIN, Katana delivers all those functions in individual NuGet packages (of the form Microsoft.Owin.Security.<provider>) which can be included on a need-to-use basis. I am sure it comes as no surprise that Windows Azure AD is among the in-box providers.

Walkthrough

Alrighty, time to get our hands dirty. In the rest of the post I’ll walk you through the creation of a simple solution which includes a Web API secured via Katana & OWIN middleware, a test client and all of the necessary settings in Windows Azure AD to allow the necessary authentication steps to take place.

Setting Up the Web API Project

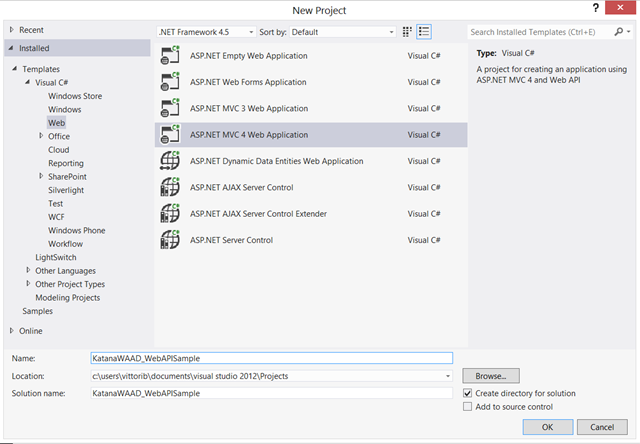

I will use Visual Studio 2012 to emphasize that this all works already with today’s tools, even though Katana and the Windows Azure AD endpoints for the code grant flow are still in preview.

Fire up Visual Studio 2012 and create a new MVC4 Web API project.

Make sure you pick the Web API template.

Wait for all the NuGets included in the project template to come down from the Internet (if your connection is not very fast you might have to be a bit patient) and once you get back control of the VS UI head to the Solution Explorer, right click on the Reference node and choose “Manage NuGet Packages”.

Make sure that the leftmost dropdown says “Include Prerelease”, then enter in the search field “Microsoft.Owin”. Look for “Microsoft.Owin.Host.SystemWeb”.

Once you find it, click Install; accept all the various licenses (only if you agree with those, of course).

Don’t close the Manage NuGet Packages dialog yet! The package you just referenced brought down all of the necessary classes for hosting the OWIN pipeline in ASP.NET, and automatically hooked it up by providing self-registration logic for its own HttpModule. Now we need to acquire the OWIN middleware that performs the function we want in our scenario: validating OAuth2 resource requests carrying bearer tokens issued by Windows Azure AD. In the same dialog, look up Microsoft.Owin.Security.WindowsAzure (you might have to go to the 2nd page of results).

Before hitting Install, take a look at the dependencies on the right pane. Among those you can find our good old JWT handler, which I am sure you are already familiar with, and various other Katana components which implement lower level functions: for example, Microsoft.Owin.Security.OAuth implements the simple “retrieve the token from the Authorization header, validate it, create a ClaimsPrincipal” scaffolding that Microsoft.Owin.Security.WindowsAzure (can I abbreviate it as M.O.S.WA?) specializes for the case in which Windows Azure AD is the issuer of the token. M.O.S.WA (carefully handcrafted by the inimitable Barry Dorrans) basically implements all of the things we describe for the Web API side validation logic in the walkthrough (automatic retrieval of the signature verification settings, keys rollover, etc.) but packages it in a super simple programming interface. In fact, let’s use it right away! After having installed M.O.S.WA, go back to the Solution Explorer. Right-click on the project, choose Add->Add Class.

Call the new class Startup.cs (it’s important to use that exact name).

The OWIN infrastructure in Katana is going to look for this class at startup time, and if it finds it, it will call its Configuration method, assuming that we placed there whatever pipeline initialization logic we want to run. Hence, we need to add the Configuration method to our newly created class and use it to add to the OWIN pipeline the middleware for processing Windows Azure AD secured calls. Here is what my code looks like after I have done so:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Web;
    using Owin;
    using Microsoft.Owin.Security.WindowsAzure;

    namespace KatanaWAAD_WebAPISample
    {
        public class Startup
        {
            // Called by the Katana host at startup to build the OWIN pipeline.
            public void Configuration(IAppBuilder app)
            {
                app.UseWindowsAzureBearerToken(new WindowsAzureJwtBearerAuthenticationOptions()
                {
                    // App ID Uri of the Web API, expected as the token audience.
                    Audience = "https://cloudidentity.net/API/KatanaWAAD_WebAPISample",
                    // Windows Azure AD tenant expected to issue the tokens.
                    Tenant = "cloudidentity.net"
                });
            }
        }
    }

Again, please refer to the Overview of Project Katana for fine details: for our purposes it should suffice to say that the convention for adding a middleware to the pipeline is to use its UseXxx method. In this case the only configuration info our middleware requires are the App ID Uri we want to use for identifying our service (that is, what we expect in the Audience of tokens issued for our service) and the Windows Azure AD tenant we expect tokens from. The middleware can retrieve all of the other info (issuer, signing keys, endpoints, etc.) from those two. Now that’s not very complicated, is it? Who said that identity is difficult?
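To make the “retrieve the token from the Authorization header, validate it” scaffolding a bit more tangible, here is a minimal sketch of what an OWIN-style middleware does, written against nothing but the application delegate shape and environment keys from the OWIN specification. It is not the Katana implementation: ToyBearerPipeline, ExtractBearerToken, RequireBearer and the "toy.Token" key are hypothetical names made up for illustration, and no actual token validation takes place.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// AppFunc is the application delegate shape defined by the OWIN specification.
using AppFunc = System.Func<System.Collections.Generic.IDictionary<string, object>, System.Threading.Tasks.Task>;

public static class ToyBearerPipeline
{
    // Hypothetical helper: pulls the token out of an "Authorization: Bearer <token>" header.
    public static string ExtractBearerToken(IDictionary<string, string[]> headers)
    {
        string[] values;
        if (!headers.TryGetValue("Authorization", out values) || values.Length == 0)
            return null;

        const string prefix = "Bearer ";
        string header = values[0];
        return header.StartsWith(prefix, StringComparison.OrdinalIgnoreCase)
            ? header.Substring(prefix.Length).Trim()
            : null;
    }

    // Hypothetical middleware: wraps the next component and rejects calls without a token.
    public static AppFunc RequireBearer(AppFunc next)
    {
        return async env =>
        {
            var headers = (IDictionary<string, string[]>)env["owin.RequestHeaders"];
            string token = ExtractBearerToken(headers);
            if (string.IsNullOrEmpty(token))
            {
                env["owin.ResponseStatusCode"] = 401;
                return; // short-circuit: the rest of the pipeline never runs
            }

            // A real middleware would verify signature, issuer and audience here.
            env["toy.Token"] = token; // made-up key, not part of the OWIN spec
            await next(env);
        };
    }

    public static void Main()
    {
        // The "application" at the end of the pipeline.
        AppFunc app = env =>
        {
            env["owin.ResponseStatusCode"] = 200;
            return Task.FromResult(0);
        };
        AppFunc pipeline = RequireBearer(app);

        var headers = new Dictionary<string, string[]>(StringComparer.OrdinalIgnoreCase);
        headers["Authorization"] = new[] { "Bearer sample-token" };
        var environment = new Dictionary<string, object> { { "owin.RequestHeaders", headers } };

        pipeline(environment).Wait();
        Console.WriteLine(environment["owin.ResponseStatusCode"]);
    }
}
```

The point of the sketch is the composition: each middleware receives the next element of the pipeline and decides whether to call it, which is the chaining that the UseXxx convention sets up on your behalf.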

Now that we have our middleware in place, let’s modify the API just a little bit to take advantage of it. For example, take the default GET:

    // Requires: using System.Security.Claims; using System.Threading;
    // GET api/values
    [Authorize]
    public IEnumerable<string> Get()
    {
        return ((ClaimsPrincipal)Thread.CurrentPrincipal).Claims.Select(c => c.Value);
        //return new string[] { "value1", "value2" };
    }

Here I simply decorated the method with [Authorize] (so that only authenticated callers can invoke it) and substituted the default hardcoded values list with all the values of the incoming claims. It won’t make much sense on the client, but it should demonstrate that the middleware successfully processed the incoming JWT token from Windows Azure AD.
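If you want to see what that projection produces without standing up the whole pipeline, the following console sketch runs the same Select over a hand-built principal. The claim types and values are invented for illustration (real tokens from Windows Azure AD carry their own set), and ClaimsDemo and its methods are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Claims;

public static class ClaimsDemo
{
    // Same projection the Get() action performs: values only, no claim types.
    public static IEnumerable<string> ClaimValues(ClaimsPrincipal principal)
    {
        return principal.Claims.Select(c => c.Value);
    }

    // A friendlier variant that keeps the claim type next to each value.
    public static IEnumerable<string> ClaimPairs(ClaimsPrincipal principal)
    {
        return principal.Claims.Select(c => c.Type + ": " + c.Value);
    }

    public static void Main()
    {
        // Made-up claims standing in for what a validated token might contain.
        var identity = new ClaimsIdentity(new[]
        {
            new Claim("aud", "https://cloudidentity.net/API/KatanaWAAD_WebAPISample"),
            new Claim("upn", "someone@example.com")
        }, "Bearer");
        var principal = new ClaimsPrincipal(identity);

        foreach (string value in ClaimValues(principal))
            Console.WriteLine(value);
        foreach (string pair in ClaimPairs(principal))
            Console.WriteLine(pair);
    }
}
```

Returning the type alongside the value, as ClaimPairs does, is an easy tweak if you would like the client output to be a bit less cryptic.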

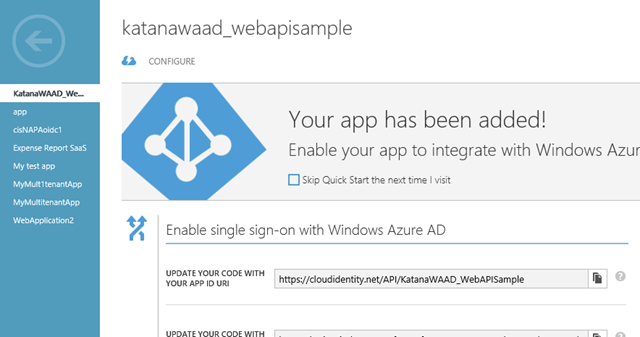

Setting Up the Web API in Windows Azure AD

Now that our Web API project is ready, it’s time to let Windows Azure AD know about it. As you have seen in our recent announcement, the Windows Azure portal now offers a very easy experience for registering Web API and configuring their relationships with native clients.

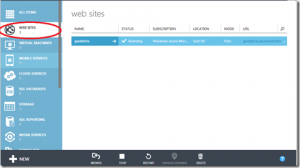

Let’s begin by navigating to the Windows Azure portal. If you have multiple subscriptions, make sure you sign in with the one corresponding to the tenant you indicated in the web API: in my case, I navigated to windows.azure.com/cloudidentity.net.

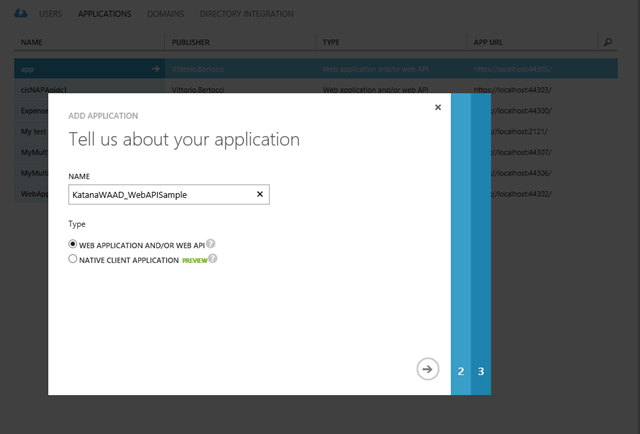

Once in, select your directory; click on the APPLICATIONS header; locate the Add button in the command bar at the bottom of the screen and click on it.

Keep the default type and enter a name: I usually reuse the VS project name to avoid losing track of things, but you can do whatever works best for you. Click on the arrow on the bottom right to advance to the next step.

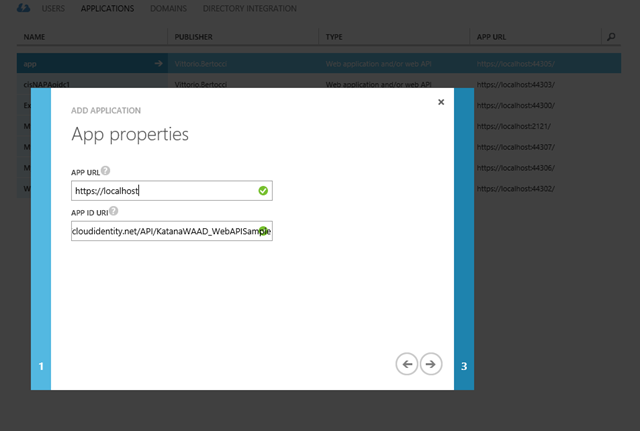

Here you are asked to enter the APP ID Uri (here you should enter the value you have chosen as Audience in the Startup.cs file in your Web API project) and the application URL.

About the latter, I’ll tell you a secret: that value is truly needed only if you are writing a Web application, which is one of the possible uses of the wizard we are going through. In that case, the URL tells Windows Azure AD where to send the token representing successful authentication. In the case of a Web API, however, the return URL will be handled by the client; hence here you can put whatever valid URL you like and it won’t have any impact on the process. It is usually a good idea to put the correct URL anyway, given that you never know how you’ll want to evolve this API in the future (perhaps you’ll want to add a web UX!), but in this case I am skipping it to avoid adding steps to the walkthrough.

Use the right arrow to move to the next screen.

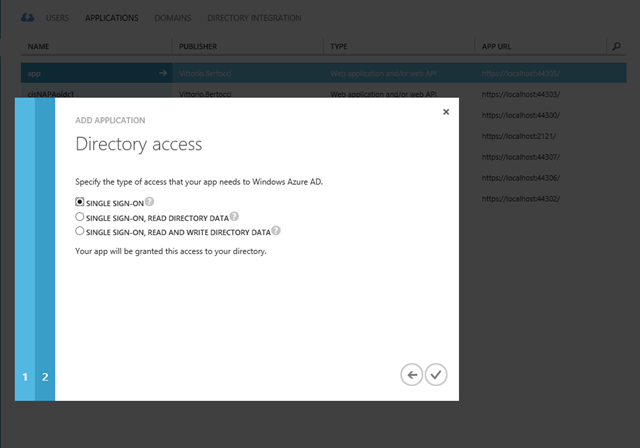

Here you can choose to grant extra powers to your Web API: if as part of the function being performed you need it to access the tenant’s Graph API, here you can ensure that the app entry representing your Web API will have the necessary privileges. Here we just want a token back, hence you can stick with the default and click the checkmark button on the lower right.

It is done! Your Web API is now listed among the apps of your Windows Azure AD tenant.

Setting Up a Test Client Project in the Portal and in Visual Studio

To exercise our Web API we need a client capable of obtaining a token from Windows Azure AD; in turn, Windows Azure AD requires such client to be registered and explicitly tied to the Web API before issuing tokens for it.

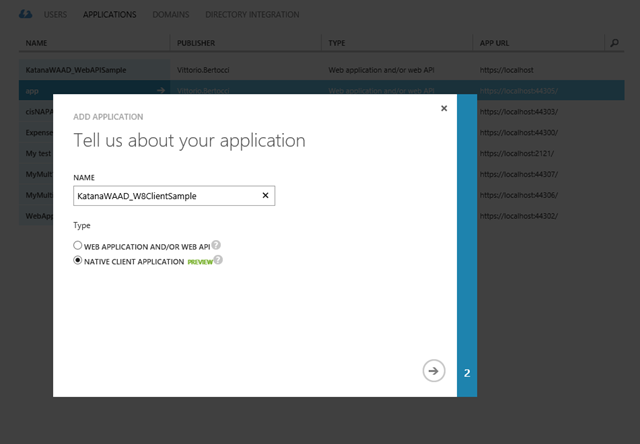

Since we are already in the portal, let’s go ahead and register our client right away: we’ll create a Visual Studio project for it right after.

Click on the big back arrow on the top left to get back to the applications list; once again, click on Add.

This time, you’ll want to select “native client application” as the application type. Move to the next screen.

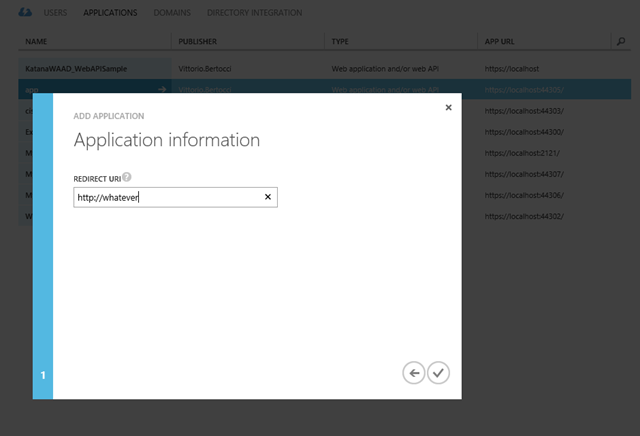

Here the wizard just asks you what URI should be used in the OAuth2 code grant flow for returning the code to the client application. If that sounds Klingon to you, don’t worry: we’ll postpone the detailed discussion of what it means to another day. Just think of this as a value that you’ll need to enter in your client that will be used at token acquisition time. Click on the checkmark button to finalize.

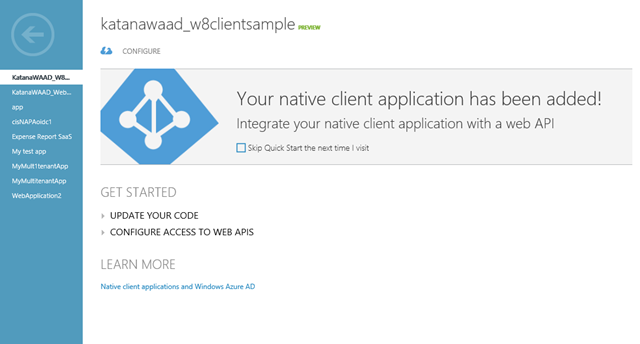

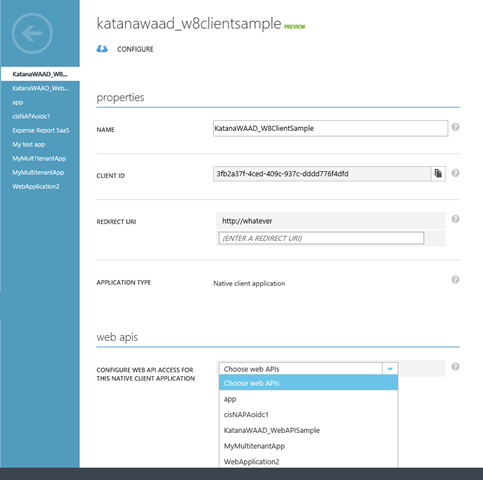

Excellent! Now Windows Azure AD has an entry for our client. We have two things left to do on the portal: ensure that this client is enabled to call our Web API, and gather the client coordinates we need to plug into our client project for obtaining access tokens. Let’s start with fixing things up between our client and our prospective service. Click on the “Configure Access to Web APIs” link in the Get Started section of the screen above, and select “configure it now”. You’ll end up on the following screen:

Select your Web API (mine is “KatanaWAAD_WebAPISample”) from the dropdown at the bottom of the page and hit Save from the command bar. Your client is now in the list of clients that can call your Web API.

Don’t close the browser (we’ll need some of the stuff on this page) and go back to Visual Studio.

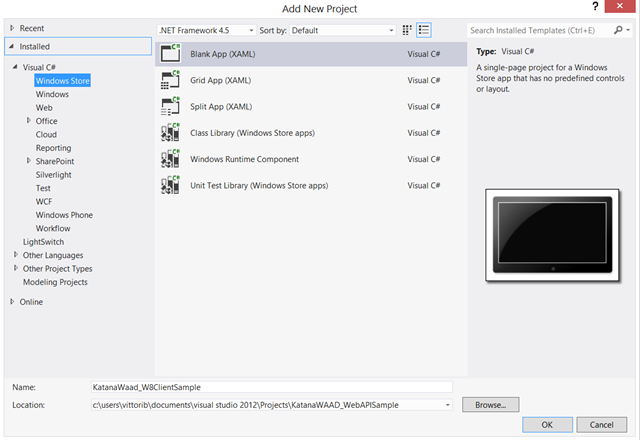

In the Solution Explorer, right click on the solution and click Add->New Project. Here I am going to create a blank Windows Store application, but you can definitely create any type of app you like (classic desktop apps are super easy to handle via AAL .NET, but even without libraries WP8 apps aren’t too hard either).

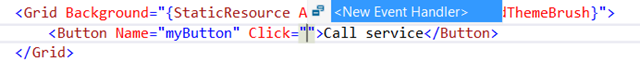

We aren’t going to do anything fancy here, we just want to see the various moving parts move. Head to MainPage.xaml, and add a button as shown below.

If you first name the button and then type the Click attribute, you’ll have the chance of having Visual Studio generate the event handler declaration for you. That’s what I did: VS generated myButton_Click for me.

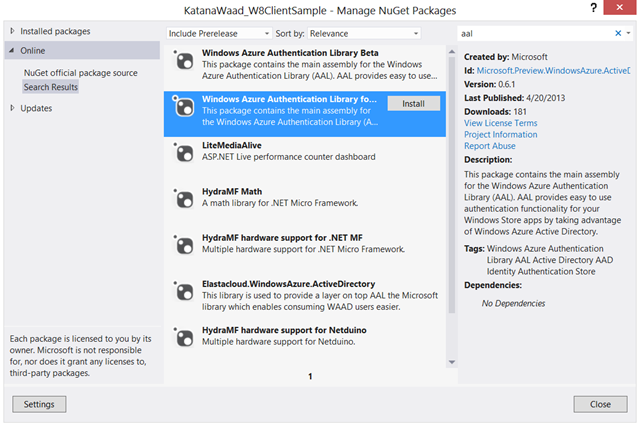

That done, I added a reference to the AAL for Windows Store package:

That done, all that’s left is to wire up the event with the code to 1) acquire the access token for the service and 2) use it to invoke the Web API. That’s largely boilerplate code, where you plug in the parameters specific to your scenario. Below you can find MainPage.xaml.cs in its entirety, so that you can see the using directives as well.

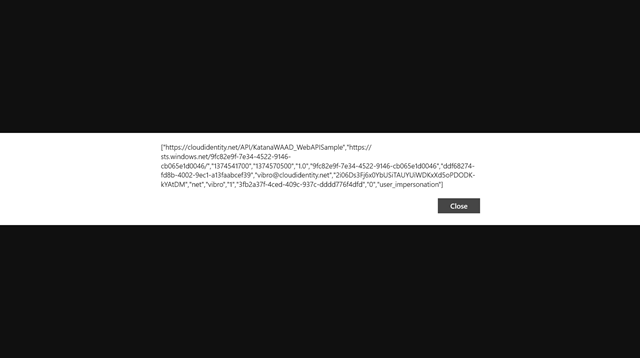

     1: using Microsoft.Preview.WindowsAzure.ActiveDirectory.Authentication;
     2: using System;
     3: using System.Net.Http;
     4: using System.Net.Http.Headers;
     5: using Windows.UI.Popups;
     6: using Windows.UI.Xaml;
     7: using Windows.UI.Xaml.Controls;
     8: using Windows.UI.Xaml.Navigation;
     9:
    10: namespace KatanaWaad_W8ClientSample
    11: {
    12:     public sealed partial class MainPage : Page
    13:     {
    14:         public MainPage()
    15:         {
    16:             this.InitializeComponent();
    17:         }
    18:
    19:         private async void myButton_Click(object sender, RoutedEventArgs e)
    20:         {
    21:             AuthenticationContext authenticationContext =
    22:                 new AuthenticationContext("https://login.windows.net/cloudidentity.net");
    23:             AuthenticationResult _authResult =
    24:                 await authenticationContext.AcquireTokenAsync(
    25:                     "https://cloudidentity.net/API/KatanaWAAD_WebAPISample",
    26:                     "3fb2a37f-4ced-409c-937c-dddd776f4dfd",
    27:                     "http://whatever",
    28:                     "vibro@cloudidentity.net", "");
    29:
    30:             string result = string.Empty;
    31:             HttpClient httpClient = new HttpClient();
    32:             httpClient.DefaultRequestHeaders.Authorization =
    33:                 new AuthenticationHeaderValue("Bearer", _authResult.AccessToken);
    34:             HttpResponseMessage response =
    35:                 await httpClient.GetAsync("http://localhost:16159/Api/Values");
    36:
    37:             if (response.IsSuccessStatusCode)
    38:             {
    39:                 result = await response.Content.ReadAsStringAsync();
    40:
    41:             }
    42:             MessageDialog md = new MessageDialog(result);
    43:             IUICommand x = await md.ShowAsync();
    44:         }
    45:     }
    46: }

Here are a few notes on the salient lines. Please refer to the AAL content for more in-depth info on what’s going on in here.

Line 21: here you create the AuthenticationContext pointing to your Windows Azure AD tenant of choice.

Lines 23-28: the call to AcquireTokenAsync specifies the resource you want a token for (you chose this identifier at Web API project development time and used it when registering the API with AD), the client ID of your application and associated return URI (as copied from the client properties page you should still have open in the browser), and some extra parameters (here I provide the user I want to be pre-populated in the authentication dialog, so that I’ll have less typing to do).

Lines 32-33 add the resulting access token to the Authorization header, as expected when using OAuth2 for accessing a resource.

Lines 34-35 perform the actual call: you might notice I am calling over HTTP. That’s really bad of me, as a bearer token should always be sent over a protected channel; I am skipping HTTPS just to keep the walkthrough short.

The rest of the code just reads the response and displays it on a dialog.
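On the plain-HTTP caveat above: outside of a quick local test, one simple way to avoid sending a bearer token in the clear is to refuse to build the request unless the target is HTTPS. The sketch below shows the idea with standard System.Net.Http types only; BearerRequests and CreateGet are hypothetical names, and the URLs and token are placeholders.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class BearerRequests
{
    // Hypothetical helper: builds a GET request carrying the token, refusing plain HTTP.
    public static HttpRequestMessage CreateGet(Uri resource, string accessToken)
    {
        if (resource.Scheme != Uri.UriSchemeHttps)
            throw new InvalidOperationException("Bearer tokens should only travel over HTTPS.");

        var request = new HttpRequestMessage(HttpMethod.Get, resource);
        // Same header the walkthrough sets on DefaultRequestHeaders, scoped to one request.
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        return request;
    }

    public static void Main()
    {
        try
        {
            CreateGet(new Uri("http://localhost:16159/Api/Values"), "placeholder-token");
        }
        catch (InvalidOperationException ex)
        {
            Console.WriteLine("Rejected: " + ex.Message);
        }

        HttpRequestMessage ok = CreateGet(new Uri("https://localhost/Api/Values"), "placeholder-token");
        Console.WriteLine(ok.Headers.Authorization);
    }
}
```

The resulting message can be handed to HttpClient.SendAsync; setting the header per request rather than on DefaultRequestHeaders also keeps the token from leaking into unrelated calls made with the same HttpClient.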

Testing the Solution

Finally ready to rock & roll! Put a breakpoint on Configuration in Startup.cs and hit F5.

Did the debugger break there? Excellent. That means that the Owin pipeline is correctly registered and is operating as expected. F10 your way to the end of Configuration, then open the Immediate window to take a look at the effects of the call to UseWindowsAzureBearerToken.

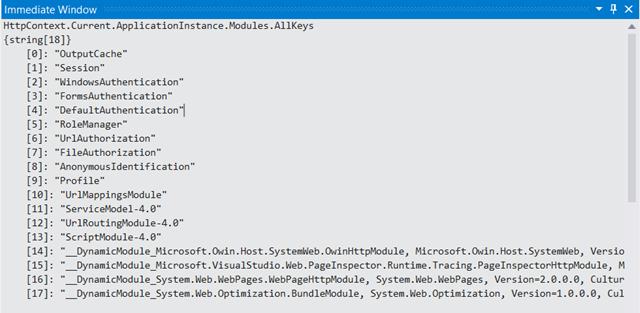

Let’s start by examining the list of HttpModules: type HttpContext.Current.ApplicationInstance.Modules.AllKeys.

As you can see in [14], the OwinHttpModule has been successfully added to the list. Very good.

What about the OWIN pipeline itself? Well, that’s a bit more complicated; but if you feel adventurous, you can use the Locals window to examine the non-public members of app (the parameter of Configuration) and there you’ll find a _middleware property, which does show that the OAuth2BearerTokenAuthenticationMiddleware is now in the collection.

Enough snooping: hit F5 to let the Web API project fully start.

Go back to Visual Studio, right click on the client project in the Solution Explorer and choose Debug->Start new instance. The minimal UI of our test client shows up:

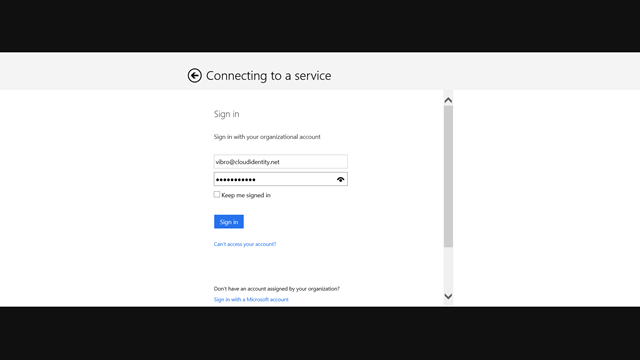

Hit the button: as expected, you’ll get the familiar WAB-based authentication experience.

Sign in with your test user.

As expected, you get back a jumbled array of values which proves that the Web API correctly processed the incoming token.
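Should the call come back as unauthorized instead, a quick thing to check is whether the audience inside the token matches the Audience configured in Startup.cs. The sketch below decodes the payload segment of a JWT in compact form so you can eyeball its claims. It is a debugging aid only: it performs no signature validation whatsoever, and JwtPeek, DecodePayload and ToBase64Url are hypothetical names. The token in Main is fabricated on the spot rather than issued by Windows Azure AD.

```csharp
using System;
using System.Text;

public static class JwtPeek
{
    // Decodes the middle (payload) segment of a compact JWT into its raw JSON text.
    // No signature check happens here: never use this to make trust decisions.
    public static string DecodePayload(string jwt)
    {
        string[] parts = jwt.Split('.');
        if (parts.Length < 2)
            throw new ArgumentException("Not a JWT in compact form.", "jwt");

        // Convert base64url back to regular base64 and restore the padding.
        string s = parts[1].Replace('-', '+').Replace('_', '/');
        switch (s.Length % 4)
        {
            case 2: s += "=="; break;
            case 3: s += "="; break;
        }
        return Encoding.UTF8.GetString(Convert.FromBase64String(s));
    }

    // Helper used only to fabricate a sample token for the demo below.
    public static string ToBase64Url(string text)
    {
        return Convert.ToBase64String(Encoding.UTF8.GetBytes(text))
            .TrimEnd('=').Replace('+', '-').Replace('/', '_');
    }

    public static void Main()
    {
        string payload = "{\"aud\":\"https://cloudidentity.net/API/KatanaWAAD_WebAPISample\"}";
        // Header, payload and an empty signature segment: a fake, unsigned token.
        string fakeJwt = ToBase64Url("{\"alg\":\"none\"}") + "." + ToBase64Url(payload) + ".";

        Console.WriteLine(DecodePayload(fakeJwt));
    }
}
```

In the walkthrough’s client you could pass _authResult.AccessToken to DecodePayload while debugging and compare the aud value you see with the App ID Uri you registered.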

Wrap

Everything you have read about in this post is possible today: and although it is still in preview, it should give you a pretty good idea of what the experience at release time is going to be. Given how much simpler everything is, if you plan to secure Web API with organizational accounts I would recommend you start experimenting with this ASAP.

What’s next? For starters, more flexible middleware. Barry is working on middleware that will work with ADFS vNext, and on middleware that will dispense with all of the metadata automation and allow you to specify every parameter manually (for those custom cases where you need more fine grained control). Then, expect the steps described in this post (at least the ones about the service) to be automated by ASP.NET VS2013 templates at some point in the future, similarly to what you have seen for Web UX template types. It’s an exciting time for developing business API. Stay tuned!

Dan Plastina described Azure RMS pricing and availability in a 7/16/2013 post to the Active Directory Rights Management Services blog (missed when published):

The RMS team is driving hard towards our preview. I'll blog about that in the coming days but, in the meantime, I wanted to share with you the pricing information for the Azure RMS offer. A more formal sales channel document will be released in the near future as well.

- RMS can be purchased directly via the Office 365 portal as a user subscription license.

- Subscription covers the use of any RMS enlightened application (e.g. Office, Office 365, Foxit PDF Reader, Secude RMS for SAP offer)

- $2/user/month.

- Consumption of rights protected content is free. A license is required to protect content.

- Azure RMS can be purchased as part of Office 365 suite offerings

- It is included in E3/E4 and A3/A4 SKUs

- It is available as an add on to many other Office 365 SKUs.

Available Fall 2013

- Azure RMS can be purchased standalone for use with the Azure RMS Connector or third party RMS enlightened applications.

- Azure RMS will be available via the Microsoft Enterprise Volume License programs (EA/EAS/EES)

- Azure RMS subscription will include the rights to use AD RMS on-premise

- Enterprise CAL (ECAL) customers can add on the Azure RMS service