Windows Azure and Cloud Computing Posts for 4/14/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 4/17/2012 with new articles marked • by Jim O’Neil, Kristian Nese, Microsoft TechNet, David Linthicum, Chris Czarnecki, Brian Swan, Wely Lau, Mark Stafford, Manu Cohen-Yashar, Himanshu Singh, Michael Simons

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Wade Wegner (@WadeWegner) discussed a Return Empty Set Instead of ResourceNotFound from Table Storage issue in a 4/14/2012 post:

This past week I’ve been working on a little project – amazing how less email equates to more time for other endeavors – and I was surprised when I received a DataServiceQueryException when querying table storage in the local storage emulator. I was querying based on partition and row keys and, if no data matched the statement, I received an HTTP 404: Resource Not Found exception.

I was initially puzzled. Shouldn’t I receive an empty set or null instead?

Of course, I had forgotten that this is by design. The DataServiceContext will throw a DataServiceQueryException if there’s no data to return. To receive an empty set it’s necessary to set the IgnoreResourceNotFoundException property to true.

Here’s a simplified version of the code:

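```csharp
using System.Linq;
using Microsoft.WindowsAzure;               // CloudStorageAccount (SDK 1.x)
using Microsoft.WindowsAzure.StorageClient; // table client, AsTableServiceQuery()

string connectionString = "UseDevelopmentStorage=true";
var context = CloudStorageAccount.Parse(connectionString)
    .CreateCloudTableClient()
    .GetDataServiceContext();

// Return an empty result instead of throwing DataServiceQueryException (HTTP 404)
// when no entity matches the PartitionKey/RowKey filter.
context.IgnoreResourceNotFoundException = true;

var results = context.CreateQuery<TableEntity>("tableName")
    .Where(e => e.PartitionKey == partitionKey && e.RowKey == rowKey)
    .AsTableServiceQuery();

var key = results.FirstOrDefault(); // null when nothing matches
```

Problem solved. No DataServiceQueryException!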

Something to keep in mind when working with the Windows Azure table storage service. I almost didn't blog about it, but decided that it was worth a few minutes' effort. It's probably something to add to your Windows Azure development checklist (you have one, right?).
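The difference between the two behaviors can be modeled in a few lines. This is a minimal Python sketch, not the storage client's API: a dict keyed by (PartitionKey, RowKey) stands in for the table, and the hypothetical `ResourceNotFound` exception stands in for the 404 that surfaces as a DataServiceQueryException.

```python
class ResourceNotFound(Exception):
    """Hypothetical stand-in for the HTTP 404 the table service returns."""


def point_query(table, partition_key, row_key, ignore_resource_not_found=False):
    """Look up a single entity by (PartitionKey, RowKey) in a dict-backed table.

    By default a miss raises ResourceNotFound, mirroring the default behavior
    described above; with ignore_resource_not_found=True a miss returns an
    empty list, mirroring IgnoreResourceNotFoundException = true.
    """
    entity = table.get((partition_key, row_key))
    if entity is None:
        if ignore_resource_not_found:
            return []
        raise ResourceNotFound(f"404: ({partition_key!r}, {row_key!r}) not found")
    return [entity]


def first_or_default(results):
    """Python analogue of LINQ FirstOrDefault(): the first item, or None."""
    return results[0] if results else None


table = {("users", "42"): {"Name": "Ada"}}
print(first_or_default(point_query(table, "users", "42", True)))  # {'Name': 'Ada'}
print(first_or_default(point_query(table, "users", "99", True)))  # None
```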

M Sheik Uduman Ali (@udooz) analyzed Synchronous, Async and Parallel Programming Performance in Windows Azure in a 4/5/2012 post (missed when published):

This post discusses the performance benefits of using the .NET TPL effectively for I/O-bound operations.

Intent

When an Azure application needs a non-synchronous programming pattern (asynchronous and/or parallel), the choice of pattern should depend on the VM size chosen for the app and on the type of operation that particular part of the app performs.

Detail

.NET provides the TPL (Task Parallel Library) to make non-synchronous programming much easier. The asynchronous API lets you perform I/O-bound and compute-bound operations asynchronously, so the main thread can carry on with the remaining work without waiting for those operations to complete. Refer to http://snip.udooz.net/Hbmib2 for details. The parallel API lets you make effective use of the multicore processors on your machine for data-intensive or task-intensive operations. Refer to http://snip.udooz.net/HTLrVv for details.

When writing Azure applications, we may need to interact with many external resources such as blobs, queues and tables. So it is natural to think of asynchronous or parallel programming patterns when the number of I/O operations is high. In these cases, we should be careful when choosing between asynchronous and parallel. The extra-small instance provides a shared CPU core, the small instance provides a single core, and medium or larger instances provide multiple cores. Hence, the asynchronous pattern would be the better option for extra-small and small instances. For problems that are highly parallel in nature, the application should be placed on a medium or larger instance and use the parallel pattern.

To confirm the above statement, I built a small proof of concept with heavy I/O. The program reads a large number of blobs from Azure blob storage to get the data it needs to solve a problem. I took a small portion of the Enron Email dataset from http://www.cs.cmu.edu/~enron/, which contains email messages for various Enron users in their respective Inbox folders, as shown in figure 1 and figure 2.

The above figure shows the “inbox” for the user “benson-r”. Each user has roughly 200 or more email messages. A message contains the following content:

```
Message-ID: <21651803.1075842014433.JavaMail.evans@thyme>
Date: Tue, 5 Feb 2002 11:06:50 -0800 (PST)
From: robert.stalford@enron.com
To: jay.webb@enron.com
Subject: online power option change request
Cc: andy.zipper@enron.com
Mime-Version: 1.0
Content-Type: text/plain; charset=us-ascii
Content-Transfer-Encoding: 7bit
======= OTHER HEADERS=======

Jay,
It was .....
====== remaining message body ======
```

The program determines how many times each sender has emailed this user. The email messages reside in a blob container under the appropriate blob directory, so the pseudocode is something like:

```
for every user
    get the blob sub-directory for the user from the blob container
    create new dictionary   // key: sender email ID, value: count
    for every blob in the sub-directory
        get blob content
        parse the “From” value from the message
        if the “From” value already exists in the dictionary
            increment its value by 1
        else
            add the “From” value as key with value 1
    write the result
```

I applied the “sync”, “async” and “parallel” patterns, along with plain Task.Factory.StartNew and Task.Factory.StartNew + ContinueWith, to the “fetching and parsing email messages” logic (the chattiest I/O).
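For reference, the inner loop of the pseudocode (parse the From header, then increment or insert a count) can be sketched in Python, assuming the message bodies are already available as strings rather than fetched from blobs. The regular expression is the same one the C# code uses; the sample `inbox` data is made up for illustration.

```python
import re
from collections import Counter

# Same pattern as the C# code: the word "From", any non-word characters
# (e.g. ": "), then an email address captured in group 1.
FROM_PATTERN = re.compile(r"From\W*(\w[-.\w]*@[-a-z0-9]+(\.[-a-z0-9]+)*)")


def count_senders(messages):
    """Return a Counter mapping sender address -> number of messages."""
    counts = Counter()
    for text in messages:
        match = FROM_PATTERN.search(text)
        if match:  # skip messages without a parsable From header
            counts[match.group(1)] += 1  # "increment, or add with value 1"
    return counts


inbox = [
    "Message-ID: <1.JavaMail.evans@thyme>\nFrom: robert.stalford@enron.com\nTo: jay.webb@enron.com",
    "From: robert.stalford@enron.com\nTo: jay.webb@enron.com",
    "From: andy.zipper@enron.com\nTo: jay.webb@enron.com",
]
print(count_senders(inbox).most_common())
# [('robert.stalford@enron.com', 2), ('andy.zipper@enron.com', 1)]
```

Counter collapses the "if exists, increment; else add with 1" branch into a single `+= 1`, because missing keys read as 0.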

The Code

The normal procedural flow is shown below:

```csharp
// rootContainer is a CloudBlobDirectory that represents the "maildir" container
var mailerInbox = rootContainer.GetSubdirectory(mailerFolder + "/inbox");
foreach (var blob in mailerInbox.ListBlobs())
{
    // skip subfolders, if any
    if (blob is CloudBlobDirectory) continue;
    var email = mailerInbox.GetBlobReference(blob.Uri.ToString()).DownloadText();

    // parse the From field; Success is false when no From header is found
    var match = Regex.Match(email, @"From\W*(\w[-.\w]*@[-a-z0-9]+(\.[-a-z0-9]+)*)");
    if (match.Success)
    {
        var key = match.Groups[1].Value;
        // estimate is a Dictionary<string, int>: sender email ID -> count
        if (estimate.ContainsKey(key))
            estimate[key]++; // increment in place (x = x++ would leave x unchanged)
        else
            estimate.Add(key, 1);
    }
}

var sb = new StringBuilder();
foreach (var kv in estimate)
{
    sb.AppendFormat("{0}: {1}\n", kv.Key, kv.Value);
}

// write the result to a blob
var result = mailerInbox.GetBlobReference("result_normal_" + attempt);
result.UploadText(sb.ToString());
```

The parallel version is shown below:

```csharp
var mailerInbox = rootContainer.GetSubdirectory(mailerFolder + "/inbox");
Parallel.ForEach(mailerInbox.ListBlobs(), blob =>
{
    if (!(blob is CloudBlobDirectory))
    {
        var email = mailerInbox.GetBlobReference(blob.Uri.ToString()).DownloadText();
        var match = Regex.Match(email, @"From\W*(\w[-.\w]*@[-a-z0-9]+(\.[-a-z0-9]+)*)");
        if (match.Success)
        {
            var key = match.Groups[1].Value;
            // cestimate is a ConcurrentDictionary<string, int>; the update
            // delegate must return the new value, hence v + 1 (v++ returns v)
            cestimate.AddOrUpdate(key, 1, (k, v) => v + 1);
        }
    }
});
// the result-writing part follows here, similar to the normal version
```

The asynchronous version is:

```csharp
var mailerInbox = rootContainer.GetSubdirectory(mailerFolder + "/inbox");
var tasks = new Queue<Task>();
foreach (var blob in mailerInbox.ListBlobs())
{
    if (blob is CloudBlobDirectory) continue;

    // blobStorage is a wrapper for the Azure Blob storage REST API
    var webRequest = blobStorage.GetWebRequest(blob.Uri.ToString());
    tasks.Enqueue(Task.Factory.FromAsync(
            webRequest.BeginGetResponse, webRequest.EndGetResponse, null)
        .ContinueWith(t =>
        {
            using (var response = t.Result)
            using (var reader = new StreamReader(response.GetResponseStream()))
            {
                var emailMsg = reader.ReadToEnd();
                // regex is a Regex instance built from the same From pattern
                var match = regex.Match(emailMsg);
                if (match.Success)
                {
                    var key = match.Groups[1].Value;
                    cestimate.AddOrUpdate(key, 1, (k, v) => v + 1);
                }
            }
        }));
}
Task.WaitAll(tasks.ToArray());
```

The major difference in the “fetching and parsing” part is that, instead of the managed API, I used the REST API through a wrapper so that I could access the blobs asynchronously. In addition to the above, I used plain TPL tasks in two different ways. In the first, each task performs the whole “fetching and parsing” step, as shown below:

```csharp
foreach (var blob in mailerInbox.ListBlobs())
{
    if (blob is CloudBlobDirectory) continue;
    string blobUri = blob.Uri.ToString(); // a fresh variable per iteration for the lambda
    tasks.Enqueue(Task.Factory.StartNew(() =>
    {
        var email = mailerInbox.GetBlobReference(blobUri).DownloadText();
        var match = Regex.Match(email, @"From\W*(\w[-.\w]*@[-a-z0-9]+(\.[-a-z0-9]+)*)");
        if (match.Success)
        {
            var key = match.Groups[1].Value;
            cestimate.AddOrUpdate(key, 1, (k, v) => v + 1);
        }
    }));
}
Task.WaitAll(tasks.ToArray());
```

In the second, I used ContinueWith to separate the download from the parsing, as shown below:

```csharp
foreach (var blob in mailerInbox.ListBlobs())
{
    if (blob is CloudBlobDirectory) continue;
    string blobUri = blob.Uri.ToString();
    tasks.Enqueue(Task.Factory.StartNew(() =>
    {
        return mailerInbox.GetBlobReference(blobUri).DownloadText();
    })
    .ContinueWith(t =>
    {
        var match = regex.Match(t.Result);
        if (match.Success)
        {
            var key = match.Groups[1].Value;
            cestimate.AddOrUpdate(key, 1, (k, v) => v + 1);
        }
    }, TaskContinuationOptions.OnlyOnRanToCompletion));
}
Task.WaitAll(tasks.ToArray());
```

Results

I hosted the worker role and the storage account in the “Southeast Asia” region. On every VM size, I made 6 runs and discarded the result of the first run. I configured the ServicePointManager to allow 12 concurrent connections for all the tests, and did not change this value for the medium and large instances. All results are in milliseconds.
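The asynchronous pattern with a capped number of connections can be sketched outside .NET as well. This is a Python analogue using asyncio, not the author's FromAsync code: `fetch_blob` is a hypothetical stand-in whose `asyncio.sleep` simulates network latency, and an `asyncio.Semaphore` of 12 plays the role of the 12-connection limit.

```python
import asyncio

MAX_CONNECTIONS = 12  # mirrors the 12 concurrent connections used in the tests


async def fetch_blob(name, limiter, stats):
    """Hypothetical asynchronous download; sleep stands in for the HTTP GET."""
    async with limiter:  # at most MAX_CONNECTIONS downloads in flight
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.01)
        stats["in_flight"] -= 1
        return f"contents of {name}"


async def fetch_all(names, max_connections=MAX_CONNECTIONS):
    """Start every download at once; the semaphore throttles them.

    Returns the bodies in input order plus the peak observed concurrency.
    """
    limiter = asyncio.Semaphore(max_connections)
    stats = {"in_flight": 0, "peak": 0}
    bodies = await asyncio.gather(*(fetch_blob(n, limiter, stats) for n in names))
    return bodies, stats["peak"]
```

Usage: `bodies, peak = asyncio.run(fetch_all([f"msg{i}" for i in range(100)]))` returns 100 bodies with `peak` never exceeding 12. The single thread waits on many requests at once instead of dedicating a thread to each, which is one plausible reason the asynchronous variant does well even on single-core instances.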

Extra Small

…

Small

…

Medium

…

Large

…

Surprisingly, irrespective of the VM size, when an operation is I/O-bound, the asynchronous pattern outperforms all the other approaches, followed by the parallel pattern.

Final Words

Hence, the “asynchronous” approach wins for I/O-bound operations (also shown as a diagram here).

I'll follow up with one more test that covers the areas where the parallel approach shines. In addition, when you have less I/O and want smooth multithreading, plain Task and Task + ContinueWith may help you.
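One design point in the parallel and task-based variants is that every worker updates a single shared ConcurrentDictionary. A different technique, sketched here in Python under the assumption that the message bodies are already downloaded, has workers only parse and return the sender, and tallies once in the calling thread. This is a swapped-in approach for illustration, not what the benchmark measured; in CPython, threads mainly help with I/O-bound work because of the GIL.

```python
import re
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

FROM_PATTERN = re.compile(r"From\W*(\w[-.\w]*@[-a-z0-9]+(\.[-a-z0-9]+)*)")


def sender_of(text):
    """Return the first From address in a message, or None."""
    match = FROM_PATTERN.search(text)
    return match.group(1) if match else None


def count_senders_parallel(messages, workers=4):
    """Parse messages on a thread pool, then tally in the calling thread.

    Workers only return values, so no shared dictionary is mutated
    concurrently; the single-threaded Counter build needs no locks.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        senders = pool.map(sender_of, messages)
        return Counter(s for s in senders if s is not None)
```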

What do you think? Share your thoughts!

Many thanks to Steve Marx and Nuno for validating my approach and the results; their feedback improved my overall testing strategy.

The source code is available at http://udooz.net/file-drive/doc_download/23-mailanalyzerasyncpoc.html

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

• Manu Cohen-Yashar (@ManuKahn) recommended that you Scale Data Applications with Sql Azure in a 4/17/2012 post:

When designing a data application, the bottleneck traditionally lies within the database. A traditional on-premises database does not scale very well. It is difficult to split a database across a cluster of machines, so the database usually runs on the most powerful machine available, which tends to be expensive.

To solve the problem, some data processing is moved from its natural environment (i.e., the DB) to the business tier, which scales much better. The business tier is easy to replicate, so it can help relieve the database's performance bottleneck.

In Sql Azure things are different. Sql Azure is NOT simply SQL Server 2008 machines that happen to be in the cloud. Sql Azure has a different architecture: it runs on a grid of servers split into service and platform layers. The platform layer runs the SQL engine, and the service layer is responsible for connections and provisioning.

"SQL Azure subscribers access the actual databases, which are stored on multiple machines in the data center, through the logical server. The SQL Azure Gateway service acts as a proxy, forwarding the Tabular Data Stream (TDS) requests to the logical server. It also acts as a security boundary providing login validation, enforcing your firewall and protecting the instances of SQL Server behind the gateway against denial-of-service attacks. The Gateway is composed of multiple computers, each of which accepts connections from clients, validates the connection information and then passes on the TDS to the appropriate physical server, based on the database name specified in the connection"

An important aspect of Sql Azure is that it is a shared infrastructure. You do not own a specific server. This is why Sql Azure billing is based only on the amount of storage you use and does not take the database's CPU consumption into account.

Another thing to know about Sql Azure is the fact that your databases are replicated across 3 different servers. Large databases can be split horizontally using Sql Azure Sharding.

"Sharding is an application pattern for improving the scalability and throughput of large-scale data solutions. To “shard” an application is the process of breaking an application’s logical database into smaller chunks of data, and distributing the chunks of data across multiple physical databases to achieve application scalability"
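The routing step at the heart of sharding can be sketched in a few lines. This minimal Python example assumes hash-based placement over a fixed list of hypothetical shard database names; it illustrates the idea only. Modulo hashing moves most keys when the shard count changes, which is why range-based schemes (the approach SQL Azure Federations take) are often preferred for growing data.

```python
import hashlib

# Hypothetical shard databases; in SQL Azure each would be a separate database.
SHARDS = ["customers_shard0", "customers_shard1", "customers_shard2", "customers_shard3"]


def shard_for(customer_id: str, shards=SHARDS) -> str:
    """Pick the shard database that holds a customer's rows.

    MD5 is used only for an even, stable distribution (not for security);
    unlike Python's salted built-in hash(), it gives the same answer in
    every process, so all application instances route identically.
    """
    digest = hashlib.md5(customer_id.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]
```

The application would then open a connection to the database returned by `shard_for` before running the query.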

Sql Azure, in contrast to on-premises databases, scales very well.

SQL Azure combined with database sharding techniques provides for virtually unlimited scalability of data for an application.

And a final word about billing: the only compute power customers are charged for in Windows Azure is that of the running roles. Sql Azure CPU is free!

So the conclusion is that in cloud applications you might want to consider moving data processing back to the database, using Sql Azure Sharding, and saving money.

You might check out Manu’s four-part Azure ServiceBus Topic using REST API – Part 4 series (missed when published).

<Return to section navigation list>

MarketPlace DataMarket, Social Analytics, Big Data and OData

• Mark Stafford (@markdstafford) of the OData.org team reported the availability of JSON Light Sample Payloads on 4/16/2012:

We recently put together a document that contains some of our thinking about JSON light as well as a whole slew of sample payloads. We would love to hear any feedback you have on the format; you can comment in the accompanying OneNote.

We will be accepting public feedback on JSON light until May 4, 2012. We will also provide a preview release of the JSON light bits via NuGet for interested parties.

- JSON light at-a-glance: https://skydrive.live.com/redir.aspx?cid=0d23ed2816deea7b&resid=D23ED2816DEEA7B!966&parid=D23ED2816DEEA7B!106&authkey=!AAYCE2RUJccjhHk

- Discussion: https://skydrive.live.com/redir.aspx?cid=0d23ed2816deea7b&page=view&resid=D23ED2816DEEA7B!967&parid=D23ED2816DEEA7B!106&authkey=!ABdrv3heWfQFY4Y

Interesting use of Windows Live SkyDrive for enclosures, but the URLs are a bit long. The most recent SkyDrive update offers a built-in URL shortener.

Glenn Gailey (@ggailey777) explained Upgrading WCF Data Services Projects to WCF Data Services 5.0 in a 4/16/2012 post:

Now that WCF Data Services 5.0 has been shipped with support for OData v3, it’s time to start updating existing applications to take advantage of some of the new features of OData v3. For a complete list of new OData v3 features that are supported in WCF Data Services 5.0, see the post WCF Data Services 5.0 RTM Release.

Note: If you do not need any of the new OData v3 functionalities, you don’t really need to upgrade to WCF Data Services 5.0. If you do upgrade, you can still take advantage of the OData v2 behaviors in WCF Data Services 5.0. For more information, see the section OData Protocol Versions in Data Service Versioning (WCF Data Services).

Side-by-Side Installation

This release installs side-by-side with the previous versions of WCF Data Services that are in the .NET Framework. This is achieved by renaming the WCF Data Services assemblies from System.Data.Services.*.dll to Microsoft.Data.Services.*.dll, which makes it easier to target the version of WCF Data Services without having to use multi-targeting in Visual Studio (trust me, this is a good thing). This new out-of-band version of WCF Data Services is installed in the program files directory rather than with the reference assemblies. This means that you can find the new assemblies in the following installation path on an x64 computer:

%programfiles(x86)%\Microsoft WCF Data Services\5.0\bin

As you would expect, the WCF Data Services client and server libraries are in the .NETFramework subdirectory and the Silverlight client assemblies are in the SL directory (plus, the Silverlight assemblies have SL appended to the file name).

Common Upgrade Tasks

There are two things that you need to do before you can upgrade a Visual Studio project to use WCF Data Services 5.0 libraries.

- Install the new 5.0 release, which you can get from the Download Center page WCF Data Services 5.0 for OData v3.

- Remove any existing references to assemblies whose names start with System.Data.Services.

At this point, you can upgrade either data service or client projects.

Upgrading a Data Service Project

Use the following procedure to upgrade an existing WCF Data Services instance to 5.0 and use OData v3 support:

- Remove references to System.Data.Services.dll and System.Data.Services.Client.dll.

- Add a new reference to Microsoft.Data.Services.dll and Microsoft.Data.Services.Client.dll assemblies (found in the installation location described above).

- Change the Factory attribute in the .svc file markup to:

  Factory="System.Data.Services.DataServiceHostFactory, Microsoft.Data.Services, Version=5.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"

- Change the value of DataServiceBehavior.MaxProtocolVersion to DataServiceProtocolVersion.V3.

Upgrading an OData Client Project

Really, the only way that I have found to be able to correctly upgrade an existing client application to use the WCF Data Services 5.0 version of the client libraries is as follows:

- Remove the existing references to System.Data.Services.Client.dll.

- Delete the existing service references to OData services.

- Re-add the service reference using the Add Service Reference dialog in Visual Studio.

Heads-up for JSON-based Applications

OData v3 introduces a change in the Accept header values used to request a JSON response from the data service. Technically, this change doesn't break OData v2 clients, because it requires that the data service use OData v3. However, you may start to get HTTP 415 responses after you upgrade the client or service to OData v3 and request that the data service use the OData v3 protocol with the old application/json value. For a good, detailed explanation of the nuances of this, see the post What happened to application/json in WCF DS 5.0?

As you may recall from my earlier post Getting JSON Out of WCF Data Services, you have to do some extra work to enable the data service to handle the $format=json query option. Remember that when upgrading such a data service to support OData v3, you must also change the JSON Accept header value inserted by your code to application/json;odata=verbose.
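On the wire, the change is just the Accept header value. A minimal Python sketch that builds (but does not send) such a request, assuming a hypothetical service root and entity set name; `MaxDataServiceVersion` is the standard OData header a client uses to say which protocol version it can accept.

```python
import urllib.request

# Hypothetical service root; replace with your own data service.
SERVICE_ROOT = "http://example.com/Northwind.svc"

# With OData v3, request the verbose JSON format explicitly; the old plain
# application/json value may now be answered with HTTP 415.
ODATA_V3_VERBOSE_JSON = "application/json;odata=verbose"


def build_json_request(entity_set: str) -> urllib.request.Request:
    """Build a GET request for an entity set that asks for verbose JSON."""
    return urllib.request.Request(
        f"{SERVICE_ROOT}/{entity_set}",
        headers={
            "Accept": ODATA_V3_VERBOSE_JSON,
            "MaxDataServiceVersion": "3.0",
        },
    )
```

Sending it with `urllib.request.urlopen(build_json_request("Customers"))` would require a reachable service.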

Tony Baer (@TonyBaer) stood in on 4/16/2012 for Andrew Brust (@andrewbrust) as the author of Fast Data hits the Big Data fast lane for ZDNet’s Big Data blog:

Of the 3 “V’s” of Big Data – volume, variety, velocity (we’d add “Value” as the 4th V) – velocity has been the unsung ‘V.’ With the spotlight on Hadoop, the popular image of Big Data is large petabyte data stores of unstructured data (which are the first two V’s). While Big Data has been thought of as large stores of data at rest, it can also be about data in motion.

“Fast Data” refers to processes that require lower latencies than would otherwise be possible with optimized disk-based storage. Fast Data is not a single technology, but a spectrum of approaches that process data that might or might not be stored. It could encompass event processing, in-memory databases, or hybrid data stores that optimize cache with disk.

Fast Data is nothing new, but because of the cost of memory, it was traditionally restricted to a handful of extremely high-value use cases. For instance:

- Wall Street firms routinely analyze live market feeds, and in many cases, run sophisticated complex event processing (CEP) programs on event streams (often in real time) to make operational decisions.

- Telcos have handled such data in optimizing network operations while leading logistics firms have used CEP to optimize their transport networks.

- In-memory databases, used as a faster alternative to disk, have similarly been around for well over a decade, having been employed for program stock trading, telecommunications equipment, airline schedulers, and large destination online retail (e.g., Amazon).
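The core idea behind complex event processing, evaluating a condition continuously over a moving window of events rather than over data at rest, can be shown with a toy example. This Python sketch assumes a time-ordered stream of (timestamp, price) ticks and hypothetical window and threshold values; it is not how any particular CEP engine works.

```python
from collections import deque


def detect_spikes(ticks, window_seconds=5.0, threshold=0.02):
    """Yield (timestamp, price) whenever the price differs by more than
    `threshold` (a fraction) from the oldest tick still inside the window.

    `ticks` is an iterable of (timestamp_seconds, price) in time order.
    """
    window = deque()
    for ts, price in ticks:
        window.append((ts, price))
        # Evict events that have fallen out of the time window.
        while ts - window[0][0] > window_seconds:
            window.popleft()
        oldest_price = window[0][1]
        if abs(price - oldest_price) / oldest_price > threshold:
            yield ts, price
```

For example, the ticks `[(0, 100.0), (1, 100.5), (2, 101.0), (3, 103.0), (10, 103.1)]` produce a single alert at `(3, 103.0)`: a 3% move within five seconds.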

Hybrid in-memory and disk have also become commonplace, especially amongst data warehousing systems (e.g., Teradata, Kognitio), and more recently among the emergent class of advanced SQL analytic platforms (e.g., Greenplum, Teradata Aster, IBM Netezza, HP Vertica, ParAccel) that employ smart caching in conjunction with a number of other bells and whistles to juice SQL performance and scaling (e.g., flatter indexes, extensive use of various data compression schemes, columnar table structures, etc.). Many of these systems are in turn packaged as appliances that come with specially tuned, high-performance backplanes and direct attached disk.

Finally, caching is hardly unknown to the database world. Hot spots of data that are frequently accessed are often placed in cache, as are snapshots of database configurations that are often stored to support restore processes, and so on.
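Placing hot data in cache usually means keeping the most recently used items in memory and evicting the least recently used one when space runs out. A minimal Python sketch of that LRU policy, assuming a hypothetical `load` function stands in for the slow disk read made on a miss:

```python
from collections import OrderedDict


class LRUCache:
    """Keep the `capacity` most recently used items in memory.

    `load` is a hypothetical slow lookup (e.g. a disk read) that is
    invoked only on a cache miss.
    """

    def __init__(self, capacity, load):
        self.capacity = capacity
        self.load = load
        self.items = OrderedDict()  # ordered from least to most recently used
        self.hits = self.misses = 0

    def get(self, key):
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.items[key]
        self.misses += 1
        value = self.load(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used item
        return value
```

With capacity 2, the access sequence 1, 2, 1, 3, 2 yields one hit and four misses: reading 3 evicts 2, so the final read of 2 goes back to "disk".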

So what’s changed?

The usual factors: the same data explosion that created the urgency for Big Data is also generating demand for making the data instantly actionable. Bandwidth, commodity hardware and, of course, declining memory prices, are further forcing the issue: Fast Data is no longer limited to specialized, premium use cases for enterprises with infinite budgets.

Not surprisingly, pure in-memory databases are now going mainstream: Oracle and SAP are choosing in-memory as one of the next places where they are establishing competitive stakes: SAP HANA vs. Oracle Exalytics. Both Oracle and SAP for now are targeting analytic processing, including OLAP (by raising the size limits on OLAP cubes) and more complex, multi-stage analytic problems that traditionally would have required batch runs (such as multivariate pricing) or would not have been run at all (too complex, too much delay). More to the point, SAP is counting on HANA as a major pillar of its stretch goal to become the #2 database player by 2015, which means expanding HANA’s target to include next generation enterprise transactional applications with embedded analytics.

Potential use cases for Fast Data could encompass:

- A homeland security agency monitoring the borders requiring the ability to parse, decipher, and act on complex occurrences in real time to prevent suspicious people from entering the country

- Capital markets trading firms requiring real-time analytics and sophisticated event processing to conduct algorithmic or high-frequency trades

- Entities managing smart infrastructure which must digest torrents of sensory data to make real-time decisions that optimize use of transportation or public utility infrastructure

- B2B consumer products firms monitoring social networks may require real-time response to understand sudden swings in customer sentiment

For such organizations, Fast Data is no longer a luxury, but a necessity.

More specialized use cases are similarly emerging now that the core in-memory technology is becoming more affordable. YarcData, a startup from venerable HPC player Cray Computer, is targeting graph data, which represents data with many-to-many relationships. Graph computing is extremely process-intensive, and as such, has traditionally been run in batch when involving Internet-size sets of data. YarcData adopts a classic hybrid approach that pipelines computations in memory while persisting data to disk. YarcData is the tip of the iceberg – we expect to see more specialized applications that utilize hybrid caching that combine speed with scale.

But don’t forget, memory’s not the new disk

The movement – or tiering – of data to faster or slower media is also nothing new. What is new is that data in memory may no longer be such a transient thing, and if memory is relied upon for in situ processing of data in motion or rapid processing of data at rest, memory cannot simply be treated as the new disk. Excluding specialized forms of memory such as ROM, memory is by nature volatile: there goes your power… and there goes your data. Not surprisingly, in-memory systems such as HANA still replicate to disk to reduce volatility. For conventional disk data stores that increasingly leverage memory, Storage Switzerland’s George Crump makes the case that caching practices must become smarter to avoid misses (where data gets mistakenly swapped out). There are also balance of system considerations: memory may be fast, but is its speed well matched with that of the processor? Solid state may overcome I/O issues associated with disk, but may still be vulnerable to coupling issues if processors get bottlenecked or MapReduce jobs are not optimized.

Declining memory prices are putting Fast Data in the fast lane to mainstream. But although the technology is now becoming affordable, we’re still early in the learning curve for how to design for it.

Clemens Vasters (@clemensv) asked “REST API” or “HTTP API”? in a 4/13/2012 post:

I just wrote this email on a private mailing list and thought it may make sense to share it. The context of the discussion was overuse of the term “REST” in a document discussing an HTTP API:

REST is a set of architectural principles. REST describes how state flows and describes the shape of relationships between the parties in a distributed system. HTTP is a protocol with a variety of stacks supporting it, and the REST principles were born out of developing HTTP. There could, in theory, be a broad variety of protocols that also embody REST architecture, but there are, in fact, very few (if any) that aren’t just variations of HTTP.

“The client sends …”, “The server receives …”, “The server provides an interface for …” are all statements about implementation and, thus, HTTP. It commonly starts making sense to talk about REST specifically when debating whether a system is actually following the principles according to the 5.3.3 “Data View” section in [1], since you generally get everything up to that point in Fielding’s dissertation for free with HTTP.

[1] http://www.ics.uci.edu/~fielding/pubs/dissertation/rest_arch_style.htm

Bottom line: HTTP APIs are HTTP APIs. REST is about how things hang together. The terms aren’t interchangeable. In most technical discussions about interfaces or methods or URIs and most other implementation details, HTTP API is the right term.

<Return to section navigation list>

Windows Azure Service Bus, Access Control, Identity and Workflow

Jim O’Neil (@jimoneil) announced on 4/16/2012 that he will appear on Mike Benkovich’s Windows Azure Soup to Nuts: Service Bus (April 17) Webcast:

My colleague, Mike Benkovich, has been running a weekly webcast called “Cloud Computing: Soup to Nuts”, covering various parts of the Windows Azure platform for the developer audience. It’s a great way to get your ‘head in the clouds’ and make use of the 90-day Windows Azure Free Trial or your MSDN benefits (you did know you get free time in the cloud with MSDN, right?).

Tomorrow, Tuesday April 17th, I’ll be the guest presenter for the ninth segment of his series: “Getting Started with the Service Bus” from 2 – 3 p.m. ET.

No man is an island, and no cloud application stands alone! Now that you've conquered the core services of web roles, worker roles, storage, and Microsoft SQL Azure, it's time to learn how to bridge applications within the cloud and between the cloud and on premises. This is where the Service Bus comes in—providing connectivity for Windows Communication Foundation and other endpoints even behind firewalls. With both relay and brokered messaging capabilities, you can provide application-to-application communication as well as durable, asynchronous publication/subscription semantics. Come to this webcast ready to participate from your own computer to see how this technology all comes together in real time.

Did you catch that last part of the abstract? I’m going to need some help with the demos, and that’s where you come in. Intrigued? Register for the webcast!

To catch up on the rest of Mike’s series, check out his Soup to Nuts page on BenkoTips!


<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Wely Lau (@wely_live) described Moving applications to the cloud: Part 3 – The recommended solution in a 4/17/2012 post to the ACloudyPlace blog:

We illustrated Idelma’s case study in the last article. This article continues from where we left off, looking at how a partner, Ovissia, would provide a recommended solution. Just as a reminder, Idelma had some specific requirements for migration including: cost-effectiveness, no functional changes for the user, and the proprietary CRM system stays on-premise.

Having analyzed the challenges Idelma faces and the requirements it mentioned, Ovissia’s presales architect Brandon gets back to Idelma with the answers. In fact, some of the migration techniques are referenced from the first post in this series.

Cloud Architecture

Figure 1 - TicketOnline Cloud Architecture

Above is the recommended cloud architecture diagram when moving TicketOnline to the cloud. As can be seen from the figure, some portions of the system will remain similar to the on-premise architecture, while others shift towards the cloud-centric architecture.

Let’s take a look at each component in more detail.

1. Migrating a SQL Server 2005 database to SQL Azure

SQL Azure is a cloud-based database service built on SQL Server technologies. In fact, at the moment, the SQL Server version most similar to SQL Azure is SQL Server 2008.

There are several ways to migrate SQL Server to SQL Azure. One of the simplest ways is to use “Generate and Publish Scripts” wizard from SQL Server 2008 R2 / 2012 Management Studio.

Figure 2 – SQL Server Management Studio 2008 R2 (Generate Scripts Wizard)

Another option is to use a third-party tool such as the SQL Azure Migration Wizard.

After the database has been successfully migrated to SQL Azure, connecting to it from the application is as straightforward as changing the connection string.
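For reference, a SQL Azure connection string typically points at the server's *.database.windows.net name on TCP port 1433, uses SQL authentication with the login in user@server form, and enables encryption. A minimal Python helper that assembles such an ADO.NET-style string, assuming hypothetical server, database and login names (it does not escape values, so a password containing ";" would need quoting):

```python
def sql_azure_connection_string(server, database, login, password):
    """Build an ADO.NET-style SQL Azure connection string.

    Assumes `server` is the short server name (the part before
    .database.windows.net) and that SQL authentication is used.
    """
    parts = {
        "Server": f"tcp:{server}.database.windows.net,1433",
        "Database": database,
        "User ID": f"{login}@{server}",
        "Password": password,
        "Trusted_Connection": "False",
        "Encrypt": "True",
    }
    return ";".join(f"{k}={v}" for k, v in parts.items()) + ";"
```

The resulting string would replace the on-premise value in the application's configuration file.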

2. Relaying on-premise WCF Service using Windows Azure Service Bus

One of Idelma’s requirements states that the CRM web service must remain on-premise and also must be consumed securely. To satisfy this, Ovissia recommends using Windows Azure Service Bus, which provides messaging and relay capabilities across multiple network hierarchies. The relay capability, secured by the Access Control Service, enables a hybrid scenario in which the TicketOnline web application can securely connect back to the on-premise CRM service.

2.1 Converting ASMX to WCF

Despite its powerful capabilities, the Service Bus relay requires a WCF service rather than an ASMX web service, so the current ASMX web service must be converted to WCF. The MSDN Library provides a walkthrough on migrating ASMX web services to WCF.

3. Converting to Web Role

Windows Azure Web Role is used to host web applications running on Windows Azure, which makes it the ideal component for hosting the TicketOnline web application. Hosting on a Windows Azure Web Role requires an ASP.NET web application project, not an ASP.NET website project. Please refer to this documentation for the difference between the two. The MSDN Library also provides a detailed walkthrough on converting a website to a web application in Visual Studio.

Once the website has been converted to a web application project, it is one step away from being a Web Role. In fact, there are only three differences between the two, as shown in the following figure.

Figure 3 – Differences between Web Role VS ASP.NET Web Application (Windows Azure Platform Training Kit – BuildingASPNETApps.pptx, slide 7)

4. Converting Windows Service Batch Job to Windows Azure Worker Role

Running a Windows Service on Windows Azure can be challenging, because Windows Services are not supported out of the box. The recommended approach is to convert the Windows Service to a Windows Azure Worker Role. You may refer to section 3 of the first article in this series for further explanation.

5. Conventional File System to Windows Azure Blob Storage

Idelma uses a conventional file server to store documents and images. When moving the application to Windows Azure, the ideal option is to store them in Windows Azure Storage, particularly Blob Storage. Not only is it cost-effective, but Windows Azure Storage also provides highly available and scalable storage services.

However, migrating from a conventional file system to Blob storage requires some effort:

- First, the API – the way the application accesses Blob Storage. For a .NET application, Windows Azure provides a Storage Client Library for .NET which enables .NET developers to access Windows Azure Storage easily.

- Second, migrating existing files – this can be done through explorer tools such as Cloud Xplorer or Cloud Storage Studio.
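When copying existing files, each file needs a blob name. Blob storage has a flat namespace, so a common convention is to keep the folder structure as "/"-separated prefixes (virtual directories). The Python sketch below shows only that mapping; the D:\FileShare root and the function name are hypothetical, and the actual upload would be done with the Storage Client Library or one of the tools above.

```python
from pathlib import PureWindowsPath


def blob_name_for(local_path: str, root: str) -> str:
    """Map a file under `root` on the file server to a blob name.

    Blob storage is flat; "/" inside the name acts as a virtual directory.
    Raises ValueError if `local_path` is not under `root`.
    """
    relative = PureWindowsPath(local_path).relative_to(PureWindowsPath(root))
    return relative.as_posix()  # backslashes become "/" separators


print(blob_name_for(r"D:\FileShare\images\2012\logo.png", r"D:\FileShare"))
```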

6. Configuration changes

Today, many applications (including TicketOnline) store settings such as application configuration and connection strings in .config files (app.config / web.config). Storing those settings in a .config file has a drawback in the cloud: the .config file is stored on each individual virtual machine (VM) as part of the deployed package, so applying any change to the settings requires a redeployment.

In the cloud, the recommended solution is to store the settings in an accessible, centralized store such as a database. If you only need key-value settings, however, ServiceConfiguration.cscfg is a good choice: changing settings in ServiceConfiguration.cscfg does not require a redeployment, and each VM always gets the latest settings.
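To make the file's shape concrete, the Python sketch below reads a key-value setting out of a ServiceConfiguration.cscfg document. It only illustrates the structure (settings are scoped per role under ConfigurationSettings); inside a deployed role you would call RoleEnvironment.GetConfigurationSettingValue instead of parsing the file. The role name WebRole1, the setting value, and the helper name are hypothetical.

```python
import xml.etree.ElementTree as ET

# Schema namespace used by ServiceConfiguration.cscfg files of this era.
CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def read_setting(cscfg_xml: str, role: str, name: str) -> str:
    """Return the value of setting `name` for `role` in a .cscfg document."""
    ns = {"sc": CSCFG_NS}
    root = ET.fromstring(cscfg_xml)
    for role_el in root.findall("sc:Role", ns):
        if role_el.get("name") != role:
            continue  # settings are scoped per role
        for setting in role_el.findall("sc:ConfigurationSettings/sc:Setting", ns):
            if setting.get("name") == name:
                return setting.get("value")
    raise KeyError(f"setting {name!r} not found for role {role!r}")


# A trimmed-down, hypothetical ServiceConfiguration.cscfg for TicketOnline.
SAMPLE_CSCFG = """<ServiceConfiguration serviceName="TicketOnline"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="2" />
    <ConfigurationSettings>
      <Setting name="name" value="hello" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

print(read_setting(SAMPLE_CSCFG, "WebRole1", "name"))
```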

Only a little work is needed to move a setting from a .config file to ServiceConfiguration.cscfg. The following snippet shows the difference between the two.

string settingFromConfigFiles = ConfigurationManager.AppSettings["name"]; // getting setting from .config files
string settingFromAzureConfig = RoleEnvironment.GetConfigurationSettingValue("name"); // getting setting from ServiceConfiguration.cscfg

7. Sending Email on Windows Azure

The current architecture shows that email is sent through an on-premise SMTP server. If there is a requirement to keep sending email through on-premise SMTP, we could either use a similar Service Bus relay technique or use Windows Azure Connect to group the cloud VMs and the on-premise SMTP server together.

Another option is to use a third-party SMTP provider. Microsoft recently partnered with SendGrid to offer Windows Azure subscribers 25,000 free emails per month, a value-added service at no extra charge.

8. Logging in the Cloud

Currently, TicketOnline stores its logs in a database. Although this works with a SQL Azure database, it may not be the most cost-effective option, as SQL Azure charges approximately $10 per GB per month. Over time, the log will keep growing and might result in high running costs for the customer.
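A rough calculation shows why. The Python sketch below assumes, hypothetically, that the logs add a fixed amount of data each month, are never purged, and are billed at a flat rate equal to the approximate $10 per GB per month quoted above. Real SQL Azure pricing was tiered by database size, so treat the result as an order-of-magnitude estimate.

```python
def cumulative_log_cost(gb_added_per_month: float, months: int,
                        price_per_gb_month: float = 10.0) -> float:
    """Total cost of keeping all logs in the database for `months` months.

    Simplification: the database grows linearly and each month is billed
    at its size at the end of that month, at a flat per-GB price.
    """
    total = 0.0
    for month in range(1, months + 1):
        size_gb = gb_added_per_month * month   # logs are never purged
        total += size_gb * price_per_gb_month  # that month's bill
    return total


print(cumulative_log_cost(1.0, 12))  # 10 * (1 + 2 + ... + 12) = 780.0
```

With 1 GB of new log data per month, the database reaches 12 GB by month 12, that month's bill alone is about $120, and the first year totals roughly $780, with the monthly cost still rising.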

Remember, a workable solution is not enough; the solution should be cost-effective as well.

Windows Azure Storage is another option for storing diagnostic data and logs. In fact, Windows Azure Diagnostics makes Windows Azure Storage the best option for storing logs and diagnostic information. More details on Windows Azure Diagnostics can be found in section 4 of the first article in this series.

Conclusion

To conclude, this article provides a recommended solution to the challenges that Idelma faces. You can see the difference between the on-premise and cloud architectures. This article also explains the various components of the proposed solution.

Of course, this is not the only available solution as there may be other variations. There is no one-size-fits-all solution and there are always trade-offs among solutions. Finally, I hope this series on Moving Applications to the Cloud brings you some insight, especially for those who are considering moving applications to the cloud.

Full disclosure: I’m a paid contributor to Red Gate Software’s ACloudyPlace blog.

• Brian Swan (@brian_swan) posted Azure Real World: Managing and Monitoring Drupal Sites on Windows Azure on 4/17/2012:

A few weeks ago, I co-authored an article (with my colleague Rama Ramani) about how the Screen Actors Guild Awards website migrated its Drupal deployment from LAMP to Windows Azure: Azure Real World: Migrating a Drupal Site from LAMP to Windows Azure. Since then, Rama and another colleague, Jason Roth, have been working on writing up how the SAG Awards website was managed and monitored in Windows Azure. The article below is the fruit of their work…a very interesting/educational read.

Overview

Drupal is an open source content management system that runs on PHP. Windows Azure offers a flexible platform for hosting, managing, and scaling Drupal deployments. This paper focuses on an approach to hosting Drupal sites on Windows Azure, based on lessons learned from a BPD Customer Programs Design Win engagement with the Screen Actors Guild Awards Drupal website. This paper covers guidelines and best practices for managing an existing Drupal web site in Windows Azure. For more information on how to migrate Drupal applications to Windows Azure, see Azure Real World: Migrating a Drupal Site from LAMP to Windows Azure.

The target audience for this paper is Drupal administrators who have some exposure to Windows Azure. More detailed pointers to Windows Azure content are provided throughout the paper as links.

Drupal Application Architecture on Windows Azure

Before reviewing the management and monitoring guidelines, it is important to understand the architecture of a typical Drupal deployment on Windows Azure. First, the following diagram displays the basic architecture of Drupal running on Windows and IIS7.

In the Windows Server scenario, you could have one or more machines hosting the web site in a farm. Those machines would either persist the site content to the file system or point to other network shares.

For Windows Azure, the basic architecture is the same, but there are some differences. In Windows Azure the site is hosted on a web role. A web role instance is hosted on a Windows Server 2008 virtual machine within the Windows Azure datacenter. As with the web farm, you can have multiple instances running the site, but there is no persistence guarantee for data on the local file system. Because of this, much of the shared site content should be stored in Windows Azure Blob storage, which makes that content highly available and durable. Usually, a large portion of the site serves static content, which lends itself well to caching. Caching can be applied at several levels: browser caching, the CDN to cache content at edge nodes closer to browser clients, caching in Azure to reduce the load on the backend, and so on. Finally, the database can be located in SQL Azure. The following diagram shows these differences.

For monitoring and management, we will look at Drupal on Windows Azure from three perspectives:

- Availability: Ensure the web site does not go down and that all tiers are set up correctly. Apply best practices to ensure that the site is deployed across data centers, and perform backup operations regularly.

- Scalability: Correctly handle changes in user load. Understand the performance characteristics of the site.

- Manageability: Correctly handle updates. Make code and site changes with no downtime when possible.

Although some management tasks span one or more of these categories, it is still helpful to discuss Drupal management on Windows Azure within these focus areas.

Availability

One main goal is that the Drupal site remains running and accessible to all end-users. This involves monitoring both the site and the SQL Azure database that the site depends on. In this section, we will briefly look at monitoring and backup tasks. Other crossover areas that affect availability will be discussed in the next section on scalability.

Monitoring

With any application, monitoring plays an important role with managing availability. Monitoring data can reveal whether users are successfully using the site or whether computing resources are meeting the demand. Other data reveals error counts and possibly points to issues in a specific tier of the deployment.

There are several monitoring tools that can be used.

- The Windows Azure Management Portal.

- Windows Azure diagnostic data.

- Custom monitoring scripts.

- System Center Operations Manager.

- Third party tools such as Azure Diagnostics Manager and Azure Storage Explorer.

The Windows Azure Management Portal can be used to ensure that your deployments are successful and running. You can also use the portal to manage features such as Remote Desktop so that you can directly connect to machines that are running the Drupal site.

Windows Azure diagnostics allows you to collect performance counters and logs from the web role instances that are running the Drupal site. Although there are many options for configuring diagnostics in Azure, the best solution with Drupal is to use a diagnostics configuration file. The following configuration file demonstrates some basic performance counters that can monitor resources such as memory, processor utilization, and network bandwidth.

<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"
                                configurationChangePollInterval="PT1M"
                                overallQuotaInMB="4096">
  <DiagnosticInfrastructureLogs bufferQuotaInMB="10"
                                scheduledTransferLogLevelFilter="Error"
                                scheduledTransferPeriod="PT1M" />
  <Logs bufferQuotaInMB="0"
        scheduledTransferLogLevelFilter="Verbose"
        scheduledTransferPeriod="PT1M" />
  <Directories bufferQuotaInMB="0" scheduledTransferPeriod="PT5M">
    <!-- These three elements specify the special directories that are set up for the log types -->
    <CrashDumps container="wad-crash-dumps" directoryQuotaInMB="256" />
    <FailedRequestLogs container="wad-frq" directoryQuotaInMB="256" />
    <IISLogs container="wad-iis" directoryQuotaInMB="256" />
  </Directories>
  <PerformanceCounters bufferQuotaInMB="0" scheduledTransferPeriod="PT1M">
    <!-- The counter specifier is in the same format as the imperative diagnostics configuration API -->
    <PerformanceCounterConfiguration counterSpecifier="\Memory\Available MBytes" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Network Interface(*)\Bytes Total/sec" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Processor(*)\% Processor Time" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\System\Processor Queue Length" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\Current Connections" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\Bytes Total/sec" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\Connection Attempts/sec" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\Get Requests/sec" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\Files Sent/sec" sampleRate="PT10M" />
  </PerformanceCounters>
  <WindowsEventLog bufferQuotaInMB="0"
                   scheduledTransferLogLevelFilter="Verbose"
                   scheduledTransferPeriod="PT5M">
    <!-- The event log name is in the same format as the imperative diagnostics configuration API -->
    <DataSource name="System!*" />
  </WindowsEventLog>
</DiagnosticMonitorConfiguration>

For more information about setting up diagnostic configuration files, see How to Use the Windows Azure Diagnostics Configuration File. This information is stored locally on each role instance and then transferred to Windows Azure storage per a defined schedule or on-demand. See Getting Started with Storing and Viewing Diagnostic Data in Windows Azure Storage. Various monitoring tools, such as Azure Diagnostics Manager, help you to more easily analyze diagnostic data.
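Sample rates and transfer periods in this file are ISO 8601 durations such as PT1M or PT10M. As a small sanity check, the Python sketch below lists each configured counter with its sample rate in seconds, which makes it easy to estimate how much data each instance will produce. The helper names are hypothetical, and the duration parser deliberately handles only the hour/minute/second forms used here.

```python
import re
import xml.etree.ElementTree as ET

WAD_NS = "http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"


def parse_duration(value: str) -> int:
    """Convert a simple ISO 8601 time duration (PT10M, PT1H30M, PT45S) to seconds."""
    match = re.fullmatch(r"PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?", value)
    if not match or not any(match.groups()):
        raise ValueError(f"unsupported duration: {value!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def counter_sample_rates(config_xml: str) -> dict:
    """Map each configured performance counter to its sample rate in seconds."""
    ns = {"wad": WAD_NS}
    root = ET.fromstring(config_xml)
    path = "wad:PerformanceCounters/wad:PerformanceCounterConfiguration"
    return {pc.get("counterSpecifier"): parse_duration(pc.get("sampleRate"))
            for pc in root.findall(path, ns)}


# Excerpt of the configuration file (raw string keeps the backslashes intact).
SAMPLE_CONFIG = r"""<DiagnosticMonitorConfiguration
    xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"
    configurationChangePollInterval="PT1M" overallQuotaInMB="4096">
  <PerformanceCounters bufferQuotaInMB="0" scheduledTransferPeriod="PT1M">
    <PerformanceCounterConfiguration counterSpecifier="\Memory\Available MBytes" sampleRate="PT10M" />
    <PerformanceCounterConfiguration counterSpecifier="\Processor(*)\% Processor Time" sampleRate="PT10M" />
  </PerformanceCounters>
</DiagnosticMonitorConfiguration>"""

for counter, seconds in counter_sample_rates(SAMPLE_CONFIG).items():
    # A 600-second sample rate yields 86400 / 600 = 144 samples per instance per day.
    print(f"{counter}: every {seconds}s, {86400 // seconds} samples/day per instance")
```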

Monitoring the performance of the machines hosting the Drupal site is only part of the story. In order to plan properly for both availability and scalability, you should also monitor site traffic, including user load patterns and trends. Standard and custom diagnostic data could contribute to this, but there are also third-party tools that monitor web traffic. For example, if you know that spikes occur in your application during certain days of the week, you could make changes to the application to handle the additional load and increase the availability of the Drupal solution.
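As one example of spotting such patterns, the IIS logs that the configuration above transfers to the wad-iis container can be aggregated by weekday. The Python sketch below assumes W3C extended log lines whose #Fields directive includes a date column in YYYY-MM-DD form (as IIS writes by default); it does not download anything from storage, and the function name is hypothetical.

```python
from collections import Counter
from datetime import date

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def requests_per_weekday(log_lines):
    """Count requests per weekday in IIS W3C extended log lines."""
    counts = Counter()
    date_index = 0  # IIS puts `date` first by default
    for line in log_lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            # Locate the `date` column from the directive rather than assuming it.
            date_index = line.split()[1:].index("date")
            continue
        if line.startswith("#"):
            continue  # other directives (#Software, #Date, ...)
        day = date.fromisoformat(line.split()[date_index])
        counts[WEEKDAYS[day.weekday()]] += 1
    return counts


sample = [
    "#Software: Microsoft Internet Information Services 7.5",
    "#Fields: date time cs-method cs-uri-stem",
    "2012-04-16 10:00:00 GET /",
    "2012-04-16 10:00:05 GET /node/1",
    "2012-04-17 09:00:00 GET /",
]
print(requests_per_weekday(sample))
```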

Backup Tasks

To remain highly available, it is important to back up your data as a defense-in-depth strategy for disaster recovery. This is true even though SQL Azure and Windows Azure Storage both implement redundancy to prevent data loss. One obvious reason is that these services cannot prevent administrator error if data is accidentally deleted or incorrectly changed.

SQL Azure does not currently have a formal backup technology, although there are many third-party tools and solutions that provide this capability. Usually the database size for a Drupal site is relatively small. In the case of SAG Awards, it was only ~100-150 MB. So performing an entire backup using any strategy was relatively fast. If your database is much larger, you might have to test various backup strategies to find the one that works best.

Apart from third-party SQL Azure backup solutions, there are several strategies for obtaining a backup of your data:

- Use the Drush tool and the portabledb-export command.

- Periodically copy the database using the CREATE DATABASE Transact-SQL command.

- Use Data-tier applications (DAC) to assist with backup and restore of the database.
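As an illustration of the database-copy option, the Python sketch below builds a dated CREATE DATABASE ... AS COPY OF statement for a copy on the same server. The naming scheme (database name plus date) and the drupal database name are hypothetical. The statement is run while connected to the master database, and the copy completes asynchronously after the statement returns.

```python
from datetime import date


def copy_database_statement(source_db: str, on: date) -> str:
    """Build T-SQL that copies `source_db` to a dated database on the same server."""
    target = f"{source_db}_{on:%Y%m%d}"  # e.g. drupal_20120417
    return f"CREATE DATABASE [{target}] AS COPY OF [{source_db}];"


print(copy_database_statement("drupal", date(2012, 4, 17)))
```

Each copy is a billable database in its own right, so a scheme like this also needs a step that drops old copies.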

SQL Azure backup and data security techniques are described in more detail in the topic, Business Continuity in SQL Azure.

Note that bandwidth costs accrue with any backup operation that transfers information outside of the Windows Azure datacenter. To reduce costs, you can copy the database to a database within the same datacenter. Or you can export the data-tier applications to blob storage in the same datacenter.

Another potential backup task involves the files in Blob storage. If you keep a master copy of all media files uploaded to Blob storage, then you already have an on-premises backup of those files. However, if multiple administrators are loading files into Blob storage for use on the Drupal site, it is a good idea to enumerate the storage account and to download any new files to a central location. The following PHP script demonstrates how this can be done by backing up all files in Blob storage after a specified modification date.

<?php
/**
 * Backup the blob storage to a local folder
 *
 * PHP version 5.3
 *
 * @category  Blob Backup
 * @package   Windows Azure
 * @author    Bibin Kurian
 * @copyright 2011 Microsoft Corp. (http://www.microsoft.com)
 * @license   New BSD license, (http://www.opensource.org/licenses/bsd-license.php)
 * @version   SVN: 1.0
 * @link
 */

/** Microsoft_WindowsAzure_Storage_Blob */
require_once 'Microsoft/WindowsAzure/Storage/Blob.php';

// Windows Azure BLOB storage account name
define("AZURE_STORAGE_ACCOUNT", "YOUR_ACCOUNTNAME");
// Windows Azure BLOB storage container name
define("AZURE_STORAGE_CONTAINER", "YOUR_STORAGECONTAINERNAME");
// Windows Azure BLOB storage secret key
define("AZURE_STORAGE_KEY", "YOUR_SECRETKEY");
// backup folder
define("STORAGE_BACKUP_DIR", "YOUR_LOCALDRIVE");
// backup from date
define("DEFAULT_BACKUP_FROM_DATE", strtotime("Mon, 19 Dec 2011 22:00:00 GMT"));
// backup to date
define("DEFAULT_BACKUP_TO_DATE", time());
// directory separator
define("DS", "\\");

// start backup logic
// current datetime to create the backup folder
$now = date("F j, Y, g.i A");
$fullBackupDirPath = STORAGE_BACKUP_DIR . DS . $now . DS . AZURE_STORAGE_CONTAINER;
mkdir($fullBackupDirPath, 0755, true);
// For the directory creations, point the current directory to the user-specified directory
chdir($fullBackupDirPath);

// BLOB object
$blobObj = new Microsoft_WindowsAzure_Storage_Blob('blob.core.windows.net', AZURE_STORAGE_ACCOUNT, AZURE_STORAGE_KEY);
//$blobObj->setProxy(true, 'YOUR_PROXY_IF_NEEDED', 80);
$blobs = (array)$blobObj->listBlobs(AZURE_STORAGE_CONTAINER, '', '', 35000);
backupBlobs($blobs, $blobObj);

function backupBlobs($blobs, $blobObj) {
    foreach ($blobs as $blob) {
        // only back up blobs modified within the configured date range
        if (strtotime($blob->lastmodified) >= DEFAULT_BACKUP_FROM_DATE &&
            strtotime($blob->lastmodified) <= DEFAULT_BACKUP_TO_DATE) {
            $path = pathinfo($blob->name);
            // skip '$$$.$$$' placeholder blobs
            if ($path['basename'] != '$$$.$$$') {
                $dir = $path['dirname'];
                $oldDir = getcwd();
                if (handleDirectory($dir)) {
                    chdir($dir);
                    $blobObj->getBlob(AZURE_STORAGE_CONTAINER, $blob->name, $path['basename']);
                    chdir($oldDir);
                }
            }
        }
    }
}

function handleDirectory($dir) {
    if (!checkDirExists($dir)) {
        return mkdir($dir, 0755, true);
    }
    return true;
}

function checkDirExists($dir) {
    if (file_exists($dir) && is_dir($dir)) {
        return true;
    }
    return false;
}
?>

This script has a dependency on the Windows Azure SDK for PHP. Also note there are several parameters that you must modify such as the storage account, secret, and backup location. As with SQL Azure, bandwidth and transaction charges apply to a backup script like this.

Scalability

Drupal sites on Windows Azure can scale as load increases through the typical strategies of scale-up, scale-out, and caching. The following sections describe the specifics of how these strategies are implemented in Windows Azure.

Typically you make scalability decisions based on monitoring and capacity planning. Monitoring can be done in staging during testing or in production with real-time load. Capacity planning factors in projections for changes in user demand.

Scale Up

When you configure your web role prior to deployment, you have the option of specifying the Virtual Machine (VM) size, such as Small or ExtraLarge. Each size tier adds additional memory, processing power, and network bandwidth to each instance of your web role. For cost efficiency and smaller units of scale, you can test your application under expected load to find the smallest virtual machine size that meets your requirements.

The workload of most popular Drupal websites can be separated into a small set of Drupal admins making content changes and a large user base performing a mostly read-only workload. End users can be allowed to make ‘writes’, such as uploading blogs or posting in forums, but those changes are not ‘content changes’. Drupal admins are set up to operate without caching, so their writes go directly to SQL Azure or the corresponding backend database. This workload performs well with Large or ExtraLarge VM sizes. Also note that the VM size determines all hardware resources (memory, processing power, and network bandwidth), so if many content-rich pages stream content, the VM size requirements are higher.

To make changes to the Virtual Machine size setting, you must change the vmsize attribute of the WebRole element in the service definition file, ServiceDefinition.csdef. A virtual machine size change requires existing applications to be redeployed.
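If the package is built by a script, the attribute can be changed programmatically before redeploying. The Python sketch below is a hypothetical helper that sets vmsize on a named WebRole, assuming the 2008/10 ServiceDefinition schema namespace, the ExtraSmall through ExtraLarge size names, and a trimmed sample definition with a role named WebRole1.

```python
import xml.etree.ElementTree as ET

CSDEF_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"
VM_SIZES = {"ExtraSmall", "Small", "Medium", "Large", "ExtraLarge"}


def set_web_role_vmsize(csdef_xml: str, role_name: str, vmsize: str) -> str:
    """Return the service definition with the named WebRole's vmsize changed."""
    if vmsize not in VM_SIZES:
        raise ValueError(f"unknown VM size: {vmsize!r}")
    ET.register_namespace("", CSDEF_NS)  # keep the default namespace on output
    root = ET.fromstring(csdef_xml)
    for role in root.findall(f"{{{CSDEF_NS}}}WebRole"):
        if role.get("name") == role_name:
            role.set("vmsize", vmsize)
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"no WebRole named {role_name!r}")


# Hypothetical, trimmed-down ServiceDefinition.csdef.
SAMPLE_CSDEF = """<ServiceDefinition name="DrupalOnAzure"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="WebRole1" vmsize="Small" />
</ServiceDefinition>"""

print(set_web_role_vmsize(SAMPLE_CSDEF, "WebRole1", "Large"))
```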

Scale Out

In addition to the size of each web role instance, you can increase or decrease the number of instances that are running the Drupal site. This spreads the web requests across more servers, enabling the site to handle more users. To change the number of running instances of your web role, see How to Scale Applications by Increasing or Decreasing the Number of Role Instances.
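To turn monitoring and capacity-planning data into an instance count, a simple estimate divides the projected peak load by the throughput one instance sustained during load testing in staging, plus some headroom. The Python sketch below does that arithmetic; the function name and the 25% headroom default are assumptions, and the minimum of two reflects the Windows Azure compute SLA, which applies only to roles running two or more instances.

```python
import math


def instances_needed(peak_requests_per_sec: float,
                     requests_per_sec_per_instance: float,
                     headroom: float = 0.25,
                     minimum: int = 2) -> int:
    """Estimate how many web role instances to run for a projected peak load."""
    if requests_per_sec_per_instance <= 0:
        raise ValueError("per-instance capacity must be positive")
    # Add headroom on top of the peak, then round up to whole instances.
    required = peak_requests_per_sec * (1 + headroom) / requests_per_sec_per_instance
    return max(minimum, math.ceil(required))


print(instances_needed(400, 100))  # 400 * 1.25 / 100 = 5 instances
```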

Note that some configuration changes can cause your existing web role instances to recycle. You can instead choose to apply the configuration change and keep the instances running by handling the RoleEnvironment.Changing event. For more information, see How to Use the RoleEnvironment.Changing Event.

A common question for any Windows Azure solution is whether there is some type of built-in automatic scaling. Windows Azure does not currently provide an auto-scaling service. However, it is possible to create a custom solution that scales Azure services using the Service Management API. For an example of this approach, see An Auto-Scaling Module for PHP Applications in Windows Azure.
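A custom auto-scaling module generally has two parts: a decision rule driven by monitoring data, and a call to the Service Management API that applies the new instance count. The Python sketch below covers only a hypothetical decision rule based on average CPU; the thresholds and bounds are assumptions to tune for your site, and applying the result is left to the Service Management API.

```python
def scaling_decision(avg_cpu_percent: float, current: int,
                     scale_out_above: float = 75.0,
                     scale_in_below: float = 25.0,
                     minimum: int = 2, maximum: int = 10) -> int:
    """Return the target instance count under a simple threshold rule."""
    if avg_cpu_percent > scale_out_above:
        target = current + 1   # sustained high load: add an instance
    elif avg_cpu_percent < scale_in_below:
        target = current - 1   # mostly idle: remove an instance to save cost
    else:
        target = current       # within the band: leave it alone
    # Never drop below the SLA minimum or exceed the budgeted maximum.
    return max(minimum, min(maximum, target))


print(scaling_decision(90.0, 3))  # 4
```

Changing the count by one step per evaluation, and evaluating only every few minutes, avoids oscillating when load hovers near a threshold.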

Caching

Caching is an important strategy for scaling Drupal applications on Windows Azure. One reason for this is that SQL Azure implements throttling mechanisms to regulate the load on any one database in the cloud. Code that uses SQL Azure should have robust error handling and retry logic to account for this. For more information, see Error Messages (SQL Azure Database). Because of the potential for load-related throttling as well as for general performance improvement, it is strongly recommended to use caching.
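The retry logic mentioned above usually follows an exponential-backoff pattern. The Drupal site itself is PHP, so the Python sketch below only illustrates the pattern: TransientError is a hypothetical stand-in for whatever your data access layer raises on throttling or a dropped connection (the Error Messages topic lists the relevant codes, such as 40501 for "service is currently busy"), and the attempt count and delays are assumptions.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical stand-in for a throttling or dropped-connection error."""


def with_retries(operation, attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call `operation`, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Wait 0.5s, 1s, 2s, ... plus random jitter so that many
            # instances do not retry against the database in lockstep.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))


# Usage (run_query is hypothetical):
# rows = with_retries(lambda: run_query("SELECT nid, title FROM node"))
```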

Although Windows Azure provides a Caching service, this service does not currently have interoperability with PHP. Because of this, the best solution for caching in Drupal is to use a module that uses an open-source caching technology, such as Memcached.

Outside of a specific Drupal module, you can also configure Memcached to work in PHP for Windows Azure. For more information, see Running Memcached on Windows Azure for PHP. For an example of getting Memcached working in Windows Azure with a plugin, see Windows Azure Memcached plugin.
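Whichever route is taken, caching layers of this kind typically follow the cache-aside pattern: read from the cache, fall back to the database on a miss, then populate the cache with an expiry. The Python sketch below illustrates that pattern only; TtlCache is a hypothetical in-process stand-in for a Memcached client, and load_from_db and the 300-second TTL are assumptions.

```python
import time


class TtlCache:
    """In-process stand-in for a Memcached client: get/set with expiry."""

    def __init__(self, clock=time.monotonic):
        self._items = {}
        self._clock = clock

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired entries behave like misses
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._items[key] = (value, self._clock() + ttl_seconds)


def get_page(cache, node_id, load_from_db, ttl_seconds=300):
    """Cache-aside read: try the cache, fall back to the database, then populate."""
    key = f"node:{node_id}"
    page = cache.get(key)
    if page is None:
        page = load_from_db(node_id)  # only misses reach SQL Azure
        cache.set(key, page, ttl_seconds)
    return page
```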

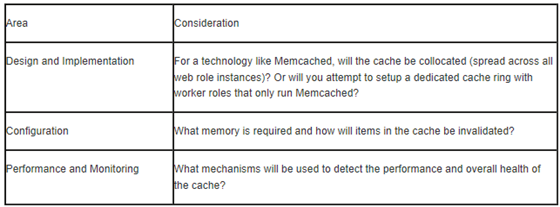

In a future paper, we hope to cover this architecture in more detail. For now, here are several design and management considerations related to caching.

For ease of use and cost savings, collocation of the cache across the web role instances of the Drupal site works best. However, this assumes that there is available reserve memory on each instance to apply toward caching. It is possible to increase the virtual machine size setting to increase the amount of available memory on each machine. It is also possible to add additional web role instances to add to the overall memory of the cache while at the same time improving the ability of the web site to respond to load. It is possible to create a dedicated cache cluster in the cloud, but the steps for this are beyond the scope of this paper.
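The trade-off between a larger VM size and more instances can be checked with simple arithmetic. The Python sketch below uses the published 2012 memory sizes (ExtraSmall 768 MB through ExtraLarge 14 GB); the fraction of each instance's memory reserved for the cache is an assumption you would tune after measuring the site's own working set, and the function name is hypothetical.

```python
# Memory per VM size in GB, as published for Windows Azure compute in 2012.
VM_MEMORY_GB = {"ExtraSmall": 0.75, "Small": 1.75, "Medium": 3.5,
                "Large": 7.0, "ExtraLarge": 14.0}


def collocated_cache_gb(vmsize: str, instances: int, cache_fraction: float) -> float:
    """Total cache memory when each instance reserves `cache_fraction` of its RAM."""
    if not 0 < cache_fraction < 1:
        raise ValueError("cache_fraction must be between 0 and 1")
    return VM_MEMORY_GB[vmsize] * cache_fraction * instances


# Two ways to reach the same cache size: more instances or a bigger VM size.
print(collocated_cache_gb("Medium", 4, 0.25))  # 3.5 * 0.25 * 4 = 3.5
print(collocated_cache_gb("Large", 2, 0.25))   # 7.0 * 0.25 * 2 = 3.5
```

The two configurations give the same cache capacity, but four Medium instances also spread web requests across more servers, while two Large instances offer more memory per request-handling process.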

For Windows Azure Blob storage, there is also a caching feature built into the service called the Content Delivery Network (CDN). CDN provides high-bandwidth access to files in Blob storage by caching copies of the files in edge nodes around the world. Even within a single geographic region, you could see performance improvements as there are many more edge nodes than Windows Azure datacenters. For more information, see Delivering High-Bandwidth Content with the Windows Azure CDN.
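Once a CDN endpoint is enabled for the storage account, publicly accessible blobs can also be served from an endpoint host of the form az&lt;number&gt;.vo.msecnd.net using the same container and blob path. The Python sketch below only illustrates that URL mapping; the account name sagawards, the endpoint az12345.vo.msecnd.net, and the function name are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def to_cdn_url(blob_url: str, account: str, cdn_host: str) -> str:
    """Rewrite a Blob storage URL so it is served from the CDN endpoint instead.

    URLs that do not point at the given storage account are returned unchanged.
    """
    parts = urlsplit(blob_url)
    if parts.netloc.lower() != f"{account}.blob.core.windows.net":
        return blob_url
    # The CDN endpoint exposes the same /container/blob path as the storage account.
    return urlunsplit((parts.scheme, cdn_host, parts.path, parts.query, parts.fragment))


print(to_cdn_url("http://sagawards.blob.core.windows.net/media/logo.png",
                 "sagawards", "az12345.vo.msecnd.net"))
```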

Manageability

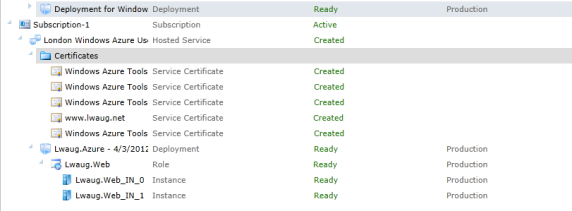

It is important to note that each hosted service has a Staging environment and a Production environment. This helps you manage deployments, because you can load and test an application in staging before performing a VIP swap with production.

From a manageability standpoint, Drupal has an advantage on Windows Azure in the way that site content is stored. Because the data necessary to serve pages is stored in the database and blob storage, there is no need to redeploy the application to change the content of the site.

Another best practice is to use a storage account for diagnostic data that is separate from the one used by the application itself. This can improve performance and also separates the cost of diagnostic monitoring from the cost of running the application.

As mentioned previously, there are several tools that can assist with managing Windows Azure applications. The following list summarizes a few of these choices.

- Windows Azure Management Portal: The web interface of the Windows Azure Management Portal shows deployments, instance counts and properties, and supports many common management and monitoring tasks.

- Azure Diagnostics Manager: A Red Gate Software product that provides advanced monitoring and management of diagnostic data. This tool can be very useful for analyzing the performance of the Drupal site to make appropriate scaling decisions.

- Azure Storage Explorer: A tool created by Neudesic for viewing Windows Azure storage accounts. This can be useful for viewing both diagnostic data and the files in Blob storage.

Additional Drupal Resources

The following list contains additional resources for managing your Drupal deployment on Windows Azure:

- Install Drupal for Windows

- Azure Real World: Migrating a Drupal Site from LAMP to Windows Azure

- Drupal on Windows Azure

- Getting Started with Drupal 7 Administration

- Drupal Statistics

- Windows Azure Traffic Manager (WATM)

- System Center Operations Manager MP for Azure applications

- System Center Operations Manager MP for SQL Azure

The Windows Azure Team (@WindowsAzure) announced the availability of the Windows Azure Media Services Preview on 4/16/2012:

Build workflows for the creation, management, and distribution of media with Windows Azure Media Services. Media Services offer the flexibility, scalability, and reliability of a cloud platform to handle high quality media experiences for a global audience. Some common uses of Media Services include:

End to End Workflows

Build comprehensive media workflows entirely in the cloud. From uploading media to distributing content, Media Services provide a range of pre-built, ready-to-use, first- and third-party services that can be combined to meet your specific needs. Capabilities include upload, storage, encoding, format conversion, content protection, and delivery.

Hybrid Workflows

Easily integrate Media Services with tools and processes you already use. For example, encode content on-site, then upload it to Media Services for transcoding into multiple formats and deliver it through a third-party CDN. Media Services can be called individually via standard REST APIs for easy integration with external applications and services.

Cloud Support for Windows, Xbox, iOS, and Android

Creating, managing and delivering media across multiple devices has never been easier. Media Services provide everything you need to deliver content to a variety of devices, from Xbox and Windows PCs, to MacOS, iOS and Android.

Capabilities and Benefits of Media Services

Media Services includes cloud-based versions of many existing technologies from the Microsoft Media Platform and our media partners. Whether enhancing existing solutions or creating new workflows, you can easily combine and manage Media Services to create custom workflows that fit every need:

Ingest: Upload your media assets to Media Services storage using standard HTTP transfers or built-in third-party agents for faster UDP transfers with added security.

Encoding: Work with a range of standard codecs and formats, including industry-leading Smooth Streaming, HTTP Live Streaming, MPEG-DASH and Flash. You can choose the Windows Azure Media Encoder or a built-in third-party encoder.

Format Conversion: Convert entire libraries or individual streams with total control over input and output.

Content Protection: Easily encrypt live or on-demand video and audio with standard MPEG Common Encryption and Microsoft PlayReady, the industry’s most accepted DRM for premium content. Add watermarking to your media for an extra layer of protection.

On-Demand Streaming: Seamlessly deliver content via Windows Azure CDN or a third-party delivery network. Automatically scale to deliver high quality video experiences around the globe.

Live Streaming: Easily create and publish live streaming channels, encoding with Media Services or pushing from an external feed. Take advantage of built-in features like server-side DVR and instant replay.

For more information on these services and how to build solutions around them, visit the Windows Azure Developer Center.

Pricing and Metering for Media Services

The upcoming preview of Windows Azure Media Services will be available at no cost (charges for associated Windows Azure features like Storage, Egress, and CDN may apply). To sign up for the preview, click here.

The announcement coincided with the second day of the National Association of Broadcasters (NAB) show in Las Vegas, NV. You can read more technical details about the Windows Azure Media Services Preview for developers here.

Mary Jo Foley (@maryjofoley) also reported in her Microsoft delivers preview of its Windows Azure-hosted media services platform post of 4/16/2012 to ZDNet’s All About Microsoft blog:

… Microsoft officials also announced at NAB that the company will be working with Akamai and Deltatre to deliver high definition streaming video of the London 2012 Olympic Games this summer across multiple countries.

Scott Guthrie (@scottgu) rang in with Announcing Windows Azure Media Services on 4/16/2012:

I'm excited to share news about a great new cloud capability we are announcing today - Windows Azure Media Services.

Windows Azure Media Services

Windows Azure Media Services is a cloud-based PaaS solution that enables you to efficiently build and deliver media solutions to customers. It offers a bunch of ready-to-use services that enable the fast ingestion, encoding, format-conversion, storage, content protection, and streaming (both live and on-demand) of video. It also integrates and exposes services provided by industry leading partners – enabling an incredibly deep media stack of functionality that you can leverage.

You can use Windows Azure Media Services to deliver solutions to any device or client - including HTML5, Silverlight, Flash, Windows 8, iPads, iPhones, Android, Xbox, and Windows Phone devices. Windows Azure Media Services supports a wide variety of streaming formats - including Smooth Streaming, HTTP Live Streaming (HLS), and Flash Media Streaming.

One of the unique aspects of Windows Azure Media Services is that all of its features are exposed using a consistent HTTP REST API. This is true both for the media services we've built, as well as the partner delivered media services that are enabled through it. This makes it incredibly easy to automate media workflows and integrate the combined set of services within your applications and media solutions. Like the rest of Windows Azure, you only pay for what you use with Windows Azure Media Services – making it a very cost effective way to deliver great solutions.

…

Windows Azure Media Services uses the same award-winning media backend that has been used to power some of the largest live sporting events ever broadcast on the web, including the 2010 Winter Olympics, 2010 FIFA World Cup, 2011 Wimbledon Championships, and 2012 NFL Super Bowl. Using Windows Azure Media Services you'll now be able to quickly stand up and automate media cloud solutions of your own that are capable of delivering amazing solutions to an equally large audience.

Learn More

We are introducing Windows Azure Media Services at the 2012 National Association of Broadcasters (NAB) Show this week, and attendees can stop by the Microsoft booth there to meet the team and see live demonstrations of it in action.

You can also visit windowsazure.com/media to learn more about the specific features it supports, and visit the windowsazure.com media dev center to learn more about how to develop against it. You can sign-up to try out the preview of Windows Azure Media Services by sending email to mediaservices@microsoft.com (along with details of the scenario you'd like to use it for).

We are really excited about the capabilities Windows Azure Media Services provides, and are looking forward to watching the solutions that will soon be built on it.

Benjamin Guinebertière (@benjguin) described How to request/buy a certificate and use it in Windows Azure in a 4/14/2012 bilingual post. From the English version:

Some domain registrars may let you request an SSL certificate for your domain. It is also possible to buy a certificate from a certificate authority. This post shows a way to request such a real or production certificate (not a test certificate) and use it in Windows Azure.

In this example I use the Gandi registrar. With each domain they offer an SSL certificate, so let’s see how to request it and use it in Windows Azure. The main steps are:

- create a request from within IIS

- send the request to Gandi

- confirm the request in a bunch of e-mail and Web interfaces

- retrieve the request response and put it into IIS

- export the certificate from the IIS machine as a .pfx file

- upload the .pfx file to Windows Azure portal

- use the certificate in a simple sample Windows Azure App.

Create a request from within IIS

In this sample, the domain I registered with www.gandi.net was “appartement-a-vendre-courbevoie.fr”, and we’ll create a certificate for myapp.appartement-a-vendre-courbevoie.fr so that we can expose an SSL application at https://myapp.appartement-a-vendre-courbevoie.fr.

We’ll first create a certificate request from within IIS. IIS is used as a tool that generates a key pair and a certificate request: the private key stays on the local machine, and only the request (which contains the public key) is sent to the certificate authority, which signs the certificate.

Start IIS Manager and go to the Server Certificates feature.

Create a certificate request

On the next screen, the most important thing is that the Common Name exactly matches the host name the certificate will be used with (here, myapp.appartement-a-vendre-courbevoie.fr).

This generates a certificate request that looks like this
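The request IIS produces is a standard PKCS#10 certificate signing request. As a minimal sketch of what that step produces (not how IIS does it internally), .NET's own CertificateRequest class (.NET Framework 4.7.2+ / .NET Core 2.0+) can build an equivalent request in C#. The CsrSketch class and the SHA-256 choice are assumptions for illustration; the point is that the private key never leaves the machine, while the request carries only the subject and the public key.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;
using System.Text;

public static class CsrSketch
{
    // Hypothetical helper: builds a PEM-encoded PKCS#10 request for commonName.
    // The private key stays in 'key'; the request holds only the subject and public key.
    public static string CreateCsrPem(string commonName, RSA key)
    {
        var request = new CertificateRequest(
            "CN=" + commonName,          // must match the host name exactly
            key,
            HashAlgorithmName.SHA256,    // assumption: SHA-256 for the request signature
            RSASignaturePadding.Pkcs1);

        // DER-encoded PKCS#10, signed with the private key as proof of possession
        byte[] der = request.CreateSigningRequest();
        string b64 = Convert.ToBase64String(der);

        // Wrap the Base64 body at 64 characters per line, PEM style
        var pem = new StringBuilder("-----BEGIN CERTIFICATE REQUEST-----\n");
        for (int i = 0; i < b64.Length; i += 64)
            pem.Append(b64, i, Math.Min(64, b64.Length - i)).Append('\n');
        pem.Append("-----END CERTIFICATE REQUEST-----\n");
        return pem.ToString();
    }
}
```

For example, `CsrSketch.CreateCsrPem("myapp.appartement-a-vendre-courbevoie.fr", RSA.Create(2048))` returns text that can be pasted into a certificate authority's request form.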

Send the request to Gandi

Before you can request the certificate, Gandi requires you to have an e-mail address of the form admin@<yourdomain>. Then you can request the certificate. Here are the steps.

Confirm the request

Here are the steps to confirm the request

Retrieve the request response

Let’s now retrieve the result

Export the certificate

Let’s now export the certificate from the local machine to a .pfx file.

Upload the .pfx file to Windows Azure portal

Let’s send the .pfx file to Windows Azure

Use the certificate in a simple sample Windows Azure App.

NB: In some configurations, I’ve found it necessary to have the certificate stored at the current user level, not only at the local machine level. Let’s first copy the certificate from the local machine store to the current user store.

Let’s now use the certificate in a Visual Studio 2010 project and deploy it to Windows Azure.

Let’s deploy to a bunch of extra small machines to show that Windows Azure automatically deploys the certificate to each instance. Note that the SSL channel terminates on each VM in the web farm, as I showed in this previous post.

By the way, using 6 extra small machines is the same price as 1 small machine.

(…)

(…)

To access the app through the domain name that matches the certificate, a CNAME record must be added to the DNS so that myapp.appartement-a-vendre-courbevoie.fr points to sslapp.cloudapp.net.

Here is the result

Richard Conway (@azurecoder) described Automating the generation of service certificates in Windows Azure on 4/13/2012:

I was prompted to write this after seeing some of the implementations for generating service certificates online. Some of the explanations were poor, so I thought I’d plug the gap. First let us cover some definitions. We interact with our subscription through a management certificate.

The management certificate needs to be uploaded to the subscription through the portal. This is the only step that we can’t automate, and the reason is obvious: everybody has probably spotted the chicken-and-egg problem here already. Anyway, Microsoft have provided a .publishsettings file and URI which ease the pain of automating this process, because the fabric will instamagically update your subscription when you use your Live ID to log in and download a .publishsettings file. Et voilà, you have management access.

A service certificate is something different, though. Service certificates are bound to an individual hosted service and don’t entail management of anything. They actually allow you to perform any operation which involves a certificate for that particular hosted service. Under the covers, the certificate is added to the Personal store on each of the role instances within that service.

Service certificates are immensely important for two essential functions: SSL and Remote Desktop.

Management Portal Showing Service Certificates

SSL is intrinsic to the role instance since it is part of IIS which is present on each of the web roles. Remote Desktop requires a plugin but equally uses the service certificate for authentication purposes.

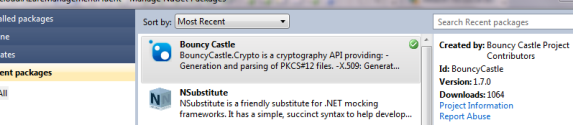

I wanted to highlight one great way of generating service certificates. There are several ways to do this, including makecert, PowerShell and a test app Microsoft provide called CertificateGenerator (essentially a COM Callable Wrapper), but we’ll focus on a single one. This way uses Bouncy Castle, a great library which is available through NuGet. Simply type:

Bouncy Castle from Nuget

> Install-Package BouncyCastle

at the Package Manager Console prompt and it is installed.

Let’s start by determining all of our using statements:

using System;
using System.Security.Cryptography.X509Certificates;
using Org.BouncyCastle.Asn1.X509;
using Org.BouncyCastle.Crypto;
using Org.BouncyCastle.Crypto.Generators;
using Org.BouncyCastle.Crypto.Prng;
using Org.BouncyCastle.Math;
using Org.BouncyCastle.Security;
using Org.BouncyCastle.X509;

And then our method signature:

public static X509Certificate2 Create(string name, DateTime start, DateTime end, string userPassword, bool addtoStore = false)

In order to create our certificate, as a minimum we need a name, a validity period and, as we are protecting a private key, a private key password (more on this later!). Additionally, we may want to add the certificate to a local certificate store, which the System.Security.Cryptography.X509Certificates namespace allows us to do fairly easily.

We always start any asymmetric cryptographic operation with a public/private key pair. To generate the keys we can use the following:

// generate a key pair using RSA
var generator = new RsaKeyPairGenerator();
// keys have to be a minimum of 2048 bits for Azure
generator.Init(new KeyGenerationParameters(new SecureRandom(new CryptoApiRandomGenerator()), 2048));
var cerKp = generator.GenerateKeyPair();

Two properties that every X.509 v3 certificate has are a serial number and a subject name (along with an issuer name). The format of these names is standardised, so we use terms such as “Common Name” (CN) or “Organisational Unit” (OU) to describe the party the certificate represents and the authority that is vouching for it.

To create a subject name we use X509Name, as below, and for the serial number, which is a unique reference to our certificate, we generate a large random prime:

// create the CN using the name passed in and create a unique serial number for the cert
var certName = new X509Name("CN=" + name);
var serialNo = BigInteger.ProbablePrime(120, new Random());

After doing this we can create an X509V3CertificateGenerator object, which will encapsulate and create the certificate for us:

// start the generator and set CN/DN, serial number and validity period
var x509Generator = new X509V3CertificateGenerator();
x509Generator.SetSerialNumber(serialNo);
x509Generator.SetSubjectDN(certName);
x509Generator.SetIssuerDN(certName);
x509Generator.SetNotBefore(start);
x509Generator.SetNotAfter(end);

Once we’ve set the basic and essential properties we can focus on what the cert actually does:

// key usage: key encipherment; extended key usage: server authentication (marked critical)
var keyUsage = new KeyUsage(KeyUsage.KeyEncipherment);
x509Generator.AddExtension(X509Extensions.KeyUsage, false, keyUsage.ToAsn1Object());
var extendedKeyUsage = new ExtendedKeyUsage(new[] {KeyPurposeID.IdKPServerAuth});
x509Generator.AddExtension(X509Extensions.ExtendedKeyUsage, true, extendedKeyUsage.ToAsn1Object());

Two properties of the certificate, Key Usage and Extended Key Usage, tell us all about its purpose in life: its raison d’être (it’s getting to that time of night where I think I can actually speak French!).

In this case the certificate we create will need to be able to do two things.

- Prove to a client that the server is who it claims to be (server authentication), and

- Encrypt a key during a key exchange process

X509 Certificate with KU/EKU properties

Both of these are common to SSL (TLS).
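To check these two purposes on an existing certificate, here is a minimal sketch using .NET's own X509KeyUsageExtension and X509EnhancedKeyUsageExtension classes rather than Bouncy Castle. The KeyUsageCheck class and IsSslServerCertificate method are hypothetical names; 1.3.6.1.5.5.7.3.1 is the standard OID for the server authentication extended key usage.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

public static class KeyUsageCheck
{
    // OID of the "Server Authentication" extended key usage (id-kp-serverAuth)
    const string ServerAuthOid = "1.3.6.1.5.5.7.3.1";

    // True when Key Usage allows key encipherment and Extended Key Usage
    // includes server authentication; false if either extension is absent.
    public static bool IsSslServerCertificate(X509Certificate2 cert)
    {
        var ku = cert.Extensions.OfType<X509KeyUsageExtension>().FirstOrDefault();
        var eku = cert.Extensions.OfType<X509EnhancedKeyUsageExtension>().FirstOrDefault();
        if (ku == null || eku == null) return false;

        bool canEncipherKeys = (ku.KeyUsages & X509KeyUsageFlags.KeyEncipherment) != 0;
        bool isServerAuth = eku.EnhancedKeyUsages.Cast<Oid>().Any(o => o.Value == ServerAuthOid);
        return canEncipherKeys && isServerAuth;
    }
}
```

Running it against the certificate generated in this post should return true; a certificate issued without these extensions returns false.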

The rest is fairly straightforward. We can set a signature algorithm; here it is SHA-1 with RSA, and the signature is the integrity check that proves the cert hasn’t been tampered with. The thumbprint is a separate thing: it is a SHA-1 hash computed over the whole encoded certificate, whatever the signature algorithm, and it’s important to be aware that Azure will only support SHA-1 as the thumbprint algorithm.

// sign with SHA-1 and RSA
x509Generator.SetSignatureAlgorithm("sha1WithRSA");
// set the public key and sign the certificate with the private key
x509Generator.SetPublicKey(cerKp.Public);
Org.BouncyCastle.X509.X509Certificate certificate = x509Generator.Generate(cerKp.Private);

When this is done we will want to use the certificate for common tasks, which generally means ending up with our familiar X509Certificate2, exposed by the System.Security.Cryptography.X509Certificates namespace and used in all common crypto tasks. The means to do this are fairly easy and provided by Bouncy Castle.

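// attach the private key to the .NET certificate, then export both as PKCS#12 bytes
// (ToX509Certificate on its own converts only the public certificate, so exporting it alone
// would omit the private key; the PrivateKey setter is available on .NET Framework)
var dotNetCert = new X509Certificate2(DotNetUtilities.ToX509Certificate(certificate));
dotNetCert.PrivateKey = DotNetUtilities.ToRSA((Org.BouncyCastle.Crypto.Parameters.RsaPrivateCrtKeyParameters)cerKp.Private);
byte[] certStream = dotNetCert.Export(X509ContentType.Pkcs12, userPassword);

Also note the use of PKCS#12 (one of the Public-Key Cryptography Standards), which packages the certificate together with its private key and uses a form of password-based encryption (PBE) to ensure that the private key can only be accessed with the password. We can then just use our password and treat the X509Certificate2 class as a container for our cert and its private key: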

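// Exportable/PersistKeySet keep the private key usable after the cert is added to a store
var cert = new X509Certificate2(certStream, userPassword, X509KeyStorageFlags.Exportable | X509KeyStorageFlags.PersistKeySet);

Adding the certificate to the store is fairly easy. You would first start by opening the store you want to engage: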

/// <summary>
/// Returns the My LocalMachine store
/// </summary>
private static X509Store ReturnStore()
{
    // opening LocalMachine\My for writing normally requires administrator rights
    var store = new X509Store(StoreName.My, StoreLocation.LocalMachine);
    store.Open(OpenFlags.OpenExistingOnly | OpenFlags.ReadWrite);
    return store;
}

After that, all it takes is a call to Add with your X509Certificate2 object, then closing the store to release the handle.
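That open, add, close sequence might look like the minimal sketch below. The StoreHelper class is hypothetical; it takes the store location as a parameter (defaulting to LocalMachine, as in the post) because writing to LocalMachine\My normally needs administrator rights, and it omits OpenExistingOnly so the store is created if it does not exist yet.

```csharp
using System;
using System.Security.Cryptography.X509Certificates;

public static class StoreHelper
{
    // Hypothetical helper: adds a certificate to the My store at the given
    // location and always closes the store afterwards to release the handle.
    public static void AddToStore(X509Certificate2 cert, StoreLocation location = StoreLocation.LocalMachine)
    {
        var store = new X509Store(StoreName.My, location);
        store.Open(OpenFlags.ReadWrite);
        try
        {
            store.Add(cert);
        }
        finally
        {
            store.Close();
        }
    }
}
```

Inside the post's Create method this would amount to something like `if (addtoStore) StoreHelper.AddToStore(cert);`.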

One thing to note is that this certificate is self-signed. This doesn’t have to be the case; I could easily build a PKI here using this simple technique. Of course the code would look slightly different (maybe we’ll cover this in a follow-up post), as would the issuer name.
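For comparison, newer versions of .NET (Framework 4.7.2+, .NET Core 2.0+) can produce a similar self-signed server certificate without Bouncy Castle, through the built-in CertificateRequest class. This is a swapped-in technique, not the post's code: the SelfSignedSketch name is hypothetical, and it signs with SHA-256 rather than SHA-1 (pass HashAlgorithmName.SHA1 to match the post). Replacing CreateSelfSigned with request.Create(issuerCert, start, end, serialNumber) is the step toward the small PKI mentioned above.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

public static class SelfSignedSketch
{
    // Hypothetical helper: a self-signed SSL server certificate built with .NET's
    // CertificateRequest instead of Bouncy Castle. DateTime arguments convert
    // implicitly to DateTimeOffset, so callers can pass DateTime values as in the post.
    public static X509Certificate2 Create(string name, DateTimeOffset start, DateTimeOffset end, string userPassword)
    {
        // 2048-bit RSA key, the minimum the post gives for Azure
        using (RSA rsa = RSA.Create(2048))
        {
            // Assumption: SHA-256 signature; use HashAlgorithmName.SHA1 to match the post
            var request = new CertificateRequest(
                "CN=" + name, rsa, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);

            // Same purposes as the Bouncy Castle version: key encipherment (non-critical)
            // and server authentication (critical)
            request.CertificateExtensions.Add(
                new X509KeyUsageExtension(X509KeyUsageFlags.KeyEncipherment, false));
            request.CertificateExtensions.Add(
                new X509EnhancedKeyUsageExtension(
                    new OidCollection { new Oid("1.3.6.1.5.5.7.3.1") }, // id-kp-serverAuth
                    true));

            // Subject and issuer are the same name; CreateSelfSigned picks the serial number itself
            using (X509Certificate2 ephemeral = request.CreateSelfSigned(start, end))
            {
                // Round-trip through password-protected PKCS#12 so the returned
                // certificate carries an exportable private key
                byte[] pfx = ephemeral.Export(X509ContentType.Pfx, userPassword);
                return new X509Certificate2(pfx, userPassword, X509KeyStorageFlags.Exportable);
            }
        }
    }
}
```

Usage mirrors the post's signature: `SelfSignedSketch.Create("myapp.example.com", DateTime.UtcNow, DateTime.UtcNow.AddYears(1), "p@ssw0rd")`. Whichever signature algorithm you pick, `cert.Thumbprint` is the SHA-1 hash of the DER-encoded certificate (`cert.RawData`), which is the value Azure identifies the certificate by.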

I thought I’d write this post to offer readers another way to generate certificates. Six years ago, when I was CTO of a startup that produced e-passport software, I would get immersed in the underlying details of these standards. Most of the time we would use OpenSSL, which is an absolute gem of a library, but Bouncy Castle comes a pretty close second in terms of functionality and upkeep. Have a play and enjoy!

The next generation of the Azure Fluent Management library uses the above code to automate the setup of SSL and Remote Desktop for a web role. There has been a lot of refactoring on this recently to help us streamline deployments, and we hope to release it in the coming week.


Visual Studio LightSwitch and Entity Framework 4.1+

• Michael Simons posted Filtering Data using Entity Set Filters (Michael Simons) to the Visual Studio LightSwitch blog on 4/17/2012:

It is common for line-of-business applications to have scenarios that require row-level security (RLS). For example, suppose a LightSwitch developer creates an application that stores Employee information, such as name and address, along with a relationship to EmployeeBenefit information, such as salary and number of vacation days. All users of the application should be able to read all of the Employee information, but a typical user should only be able to view their own EmployeeBenefit information. In this article, I will show how the entity set filter functionality that was added to LightSwitch in Visual Studio 11 can be used to solve this problem.

Visual Studio LightSwitch (LightSwitch V1)