Windows Azure and Cloud Computing Posts for 2/9/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 2/12/2012 with new articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services, Big Data

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services, Big Data

• Roope Astala posted “Cloud Numerics” Example: Analyzing Demographics Data from Windows Azure Marketplace to the Microsoft Codename “Cloud Numerics” blog on 2/7/2012 (missed when published):

Imagine your sales business is ‘booming’ in cities X, Y, and Z, and you are looking to expand. Given that demographics provide regional indicators of sales potential, how do you find other cities with similar demographics? How do you sift through large sets of data to identify new cities and new expansion opportunities?

In this application example, we use “Cloud Numerics” to analyze demographics data such as average household size and median age between different postal (ZIP) codes in the United States.

This blog post demonstrates the following process for analyzing demographics data:

- We go through the steps of subscribing to the dataset in the Windows Azure Marketplace.

- We create a Cloud Numerics C# application for reading in data and computing correlation between different ZIP codes. We partition the application into two projects: one for reading in the data, and another one for performing the computation.

- Finally, we step through the writing of results to Windows Azure blob storage.
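To make the "correlation between different ZIP codes" step concrete, here is a minimal sketch in plain JavaScript. It is not the “Cloud Numerics” API and not Roope's code: the function names `pearson` and `correlationMatrix`, the ZIP labels, and the demographic values are all hypothetical, and the sketch assumes each ZIP code is described by a short vector of numeric indicators.

```javascript
// Pearson correlation between two equal-length numeric vectors.
// Returns NaN if either vector has zero variance.
function pearson(x, y) {
  if (x.length !== y.length || x.length < 2) {
    throw new Error("vectors must have the same length (at least 2)");
  }
  const n = x.length;
  const meanX = x.reduce((a, b) => a + b, 0) / n;
  const meanY = y.reduce((a, b) => a + b, 0) / n;
  let sxy = 0, sxx = 0, syy = 0;
  for (let i = 0; i < n; i++) {
    const dx = x[i] - meanX;
    const dy = y[i] - meanY;
    sxy += dx * dy;
    sxx += dx * dx;
    syy += dy * dy;
  }
  return sxy / Math.sqrt(sxx * syy);
}

// Pairwise correlations between every pair of profiles.
function correlationMatrix(profiles) {
  const keys = Object.keys(profiles);
  const matrix = {};
  for (const a of keys) {
    matrix[a] = {};
    for (const b of keys) {
      matrix[a][b] = pearson(profiles[a], profiles[b]);
    }
  }
  return matrix;
}

// Hypothetical, made-up indicator vectors (not Marketplace data).
const profiles = {
  zipA: [2.1, 34.0, 0.45, 1.2],
  zipB: [2.3, 36.5, 0.50, 1.4],
  zipC: [3.4, 29.0, 0.80, 0.6]
};
console.log(correlationMatrix(profiles));
```

In a real run the indicators would need to be put on comparable scales first, and the matrix is far too large for one machine, which is why the post distributes the computation across compute nodes.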

Before You Begin

- Create a Windows Live ID for accessing Windows Azure Marketplace

- Create a Windows Azure subscription for deploying and running the application (if you do not have one already)

- Install “Cloud Numerics” on your local computer where you develop your C# applications

- Run the example application as detailed in the “Cloud Numerics” Lab Getting Started wiki page to validate your installation

Because of the memory usage, do not attempt to run this application on your local development machine. Additionally, you will need to run it on at least two compute nodes on your Windows Azure cluster.

Note!

You specify how many compute nodes are allocated when you use the Cloud Numerics Deployment Utility to configure your Windows Azure cluster. For details, see this section in the Getting Started guide. …

Roope continues with the following step-by-step sections:

- Step 1: Subscribe to the Demographics Dataset at Windows Azure Marketplace

- Step 2: Set Up the Cloud Numerics Project

- Step 3: Add Service Reference to Dataset

- Step 4: Create a Serial Reader for Windows Azure Marketplace Data

- Step 5: Parallelize Reader Using IParallelReader Interface

- Step 6: Add Method for Getting Mapping from Rows to Geographies

- Step 7: Compute Correlation Matrix Using Distributed Data

- Step 8: Select Interesting Subset of Cities and Write the Correlations into a Blob

- Step 9: Putting it All Together

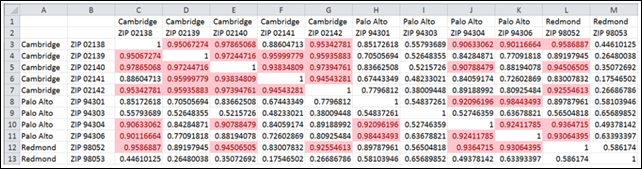

The resulting worksheet appears as follows:

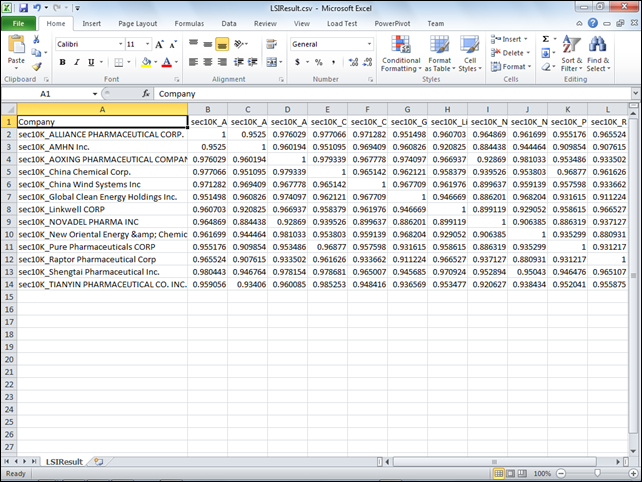

Compare the above with the data pattern from the worksheet created by the Latent Semantic Indexing sample in my Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters post of 1/28/2012:

Roope also includes copyable source code for his Demographics Program.cs and DemographicsReader Class1.cs classes at the end of his post.

• Yanpei Chen, Archana Ganapathi, Rean Griffith, and Randy Katz recently posted The Case for Evaluating MapReduce Performance Using Workload Suites to GitHub. From the Abstract and Introduction:

Abstract

MapReduce systems face enormous challenges due to increasing growth, diversity, and consolidation of the data and computation involved. Provisioning, configuring, and managing large-scale MapReduce clusters require realistic, workload-specific performance insights that existing MapReduce benchmarks are ill-equipped to supply.

In this paper, we build the case for going beyond benchmarks for MapReduce performance evaluations. We analyze and compare two production MapReduce traces to develop a vocabulary for describing MapReduce workloads. We show that existing benchmarks fail to capture rich workload characteristics observed in traces, and propose a framework to synthesize and execute representative workloads. We demonstrate that performance evaluations using realistic workloads give cluster operators new ways to identify workload-specific resource bottlenecks, and workload-specific choice of MapReduce task schedulers.

We expect that once available, workload suites would allow cluster operators to accomplish previously challenging tasks beyond what we can now imagine, thus serving as a useful tool to help design and manage MapReduce systems.

Introduction

MapReduce is a popular paradigm for performing parallel computations on large data. Initially developed by large Internet enterprises, MapReduce has been adopted by diverse organizations for business critical analysis, such as click stream analysis, image processing, Monte-Carlo simulations, and others [1]. Open-source platforms such as Hadoop have accelerated MapReduce adoption.

While the computation paradigm is conceptually simple, the logistics of provisioning and managing a MapReduce cluster are complex. Overcoming the challenges involved requires understanding the intricacies of the anticipated workload. Better knowledge about the workload enables better cluster provisioning and management. For example, one must decide how many and what types of machines to provision for the cluster. This decision is the most difficult for a new deployment that lacks any knowledge about workload-cluster interactions, but needs to be revisited periodically as production workloads continue to evolve. Second, MapReduce configuration parameters must be fine-tuned to the specific deployment, and adjusted according to added or decommissioned resources from the cluster, as well as added or deprecated jobs in the workload. Third, one must implement an appropriate workload management mechanism, which includes but is not limited to job scheduling, admission control, and load throttling.

Workload can be defined by a variety of characteristics, including computation semantics (e.g., source code), data characteristics (e.g., computation input/output), and the realtime job arrival patterns. Existing MapReduce benchmarks, such as Gridmix [2], [3], Pigmix [4], and Hive Benchmark [5], test MapReduce clusters with a small set of “representative” computations, sized to stress the cluster with large datasets.

While we agree this is the correct initial strategy for evaluating MapReduce performance, we believe recent technology trends warrant an advance beyond benchmarks in our understanding of workloads. We observe three such trends:

- Job diversity: MapReduce clusters handle an increasingly diverse mix of computations and data types [1]. The optimal workload management policy for one kind of computation and data type may conflict with that for another. No single set of “representative” jobs is actually representative of the full range of MapReduce use cases.

- Cluster consolidation: The economies of scale in constructing large clusters make it desirable to consolidate many MapReduce workloads onto a single cluster [6], [7]. Cluster provisioning and management mechanisms must account for the non-linear superposition of different workloads. The benchmark approach of high-intensity, short duration measurements can no longer capture the variations in workload superposition over time.

- Computation volume: The computations and data size handled by MapReduce clusters increase exponentially [8], [9] due to new use cases and the desire to perpetually archive all data. This means that a small misunderstanding of workload characteristics can lead to large penalties.

Given these trends, it is no longer sufficient to use benchmarks for cluster provisioning and management decisions. In this paper, we build the case for doing MapReduce performance evaluations using a collection of workloads, i.e., workload suites. To this effect, our contributions are as follows:

- Compare two production MapReduce traces to both highlight the diversity of MapReduce use cases and develop a way to describe MapReduce workloads.

- Examine several MapReduce benchmarks and identify their shortcomings in light of the observed trace behavior.

- Describe a methodology to synthesize representative workloads by sampling MapReduce cluster traces, and then execute the synthetic workloads with low performance overhead using existing MapReduce infrastructure.

- Demonstrate that using workload suites gives cluster operators new capabilities by executing a particular workload to identify workload-specific provisioning bottlenecks and inform the choice of MapReduce schedulers.

We believe MapReduce cluster operators can use the workload suites to accomplish a variety of previously challenging tasks, beyond just the two new capabilities demonstrated here. For example, operators can anticipate the workload growth in different data or computational dimensions, provision the added resources just in time, instead of over-provisioning with wasteful extra capacity. Operators can also select highly specific configurations optimized for different kinds of jobs within a workload, instead of having uniform configurations optimized for a “common case” that may not exist. Operators can also anticipate the impact of consolidating different workloads onto the same cluster. Using the workload description vocabulary we introduce, operators can systematically quantify the superposition of different workloads across many workload characteristics. In short, once workload suites become available, we expect cluster operators to use them to accomplish innovative tasks beyond what we can now imagine.

In the rest of the paper, we build the case for using workload suites by looking at production traces (Section II) and examining why benchmarks cannot reproduce the observed behavior (Section III). We detail our proposed workload synthesis and execution framework (Section IV), demonstrate that it executes representative workloads with low overhead, and gives cluster operators new capabilities (Section V). Lastly, we discuss opportunities and challenges for future work (Section VI). …

Project documentation and repository downloads are available here.

It appears to me that this technique would be useful for use with Apache Hadoop on Windows Azure clusters, as well as MapReduce operations in Microsoft Codename “Cloud Numerics” and Microsoft Research’s Excel Cloud Data Analytics and Project “Daytona.”

Three of the authors are from the University of California at Berkeley’s Electrical Engineering (my alma mater) and Computer Science department (fychen2, rean, randyg @eecs.berkeley.edu) and one is from Splunk, Inc. (aganapathi@splunk.com).

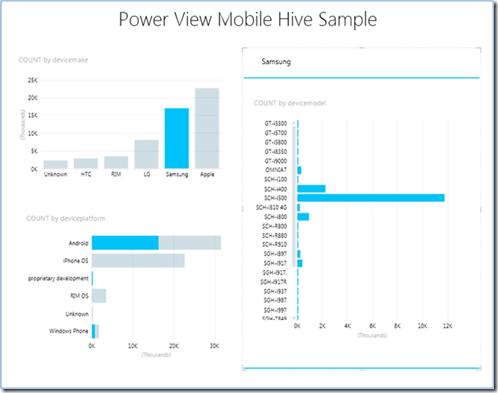

Denny Lee (@dennylee) described Connecting Power View to Hadoop on Azure–An #awesomesauce way to view Big Data in the Cloud in a 2/10/2012 post:

The post Connecting PowerPivot to Hadoop on Azure – Self Service BI to Big Data in the Cloud provided the step-by-step details on how to connect PowerPivot to your Hadoop on Azure cluster. And while this is really powerful, one of the great features of SQL Server 2012 is Power View (formerly known as Project Crescent). With Power View, the SQL Server BI stack extends the concept of Self Service BI (PowerPivot) to Self Service Reporting.

Above is a screenshot of the Power View Mobile Hive Sample that is built on top of the PowerPivot workbook created in the Connecting PowerPivot to Hadoop on Azure blog post. Switching to a different medium, the steps to create a Power View report with a Hadoop on Azure source are shown in the YouTube video below.

Power View Report to Hadoop on Azure

Avkash Chauhan (@avkashchauhan) asked How many copies of your blob is stored in Windows Azure Blob Storage? in a 2/9/2012 post:

I was recently asked how secure content stored in Windows Azure Storage is and how many copies of it exist, so I decided to write this article.

Technically there are 6 copies of your blob content, distributed across two separate data centers within the same continent, as described below:

When you copy a blob to Azure Storage, the blob is replicated into 2 more copies in the same data center. At any given time you access only the primary copy; the other 2 copies are for recovery purposes. So you have 3 copies of your blob data in the specific data center that you selected for your Azure Storage account. After geo-replication, you have 3 more copies of the same blob in another data center within the same continent. This gives you 6 copies of your data in Windows Azure, which may lead you to believe that your data is safe. However, if you delete a blob and ask the Windows Azure Support team to get it back, that is not possible. Sync and replication happen so fast that once a blob is removed from the primary location, it is instantly gone from the other location as well. So it is your responsibility to handle your blob content carefully.

When you create, update, or delete data to your storage account, the transaction is fully replicated on three different storage nodes across three fault domains and upgrade domains inside the primary location, then success is returned back to the client. Then, in the background, the primary location asynchronously replicates the recently committed transaction to the secondary location. That transaction is then made durable by fully replicating it across three different storage nodes in different fault and upgrade domains at the secondary location. Because the updates are asynchronously geo-replicated, there is no change in existing performance for your storage account.
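The commit flow described above can be pictured with a toy model. This is only an illustrative sketch, not how Windows Azure Storage is implemented: the names `createAccount`, `commit`, `drainGeoQueue`, and `countCopies` are hypothetical, each `Map` stands in for one storage node, and the asynchronous geo-replication is simulated by draining a queue by hand.

```javascript
// Toy model: three replicas per location, plus a queue of committed
// transactions that still have to be geo-replicated.
function createAccount() {
  return {
    primary: [new Map(), new Map(), new Map()],
    secondary: [new Map(), new Map(), new Map()],
    geoQueue: []
  };
}

// Apply one transaction ({ op, name, content }) to every node in a location.
function applyTo(nodes, tx) {
  for (const node of nodes) {
    if (tx.op === "delete") {
      node.delete(tx.name);
    } else {
      node.set(tx.name, tx.content);
    }
  }
}

// Synchronous part: all three primary replicas are updated before
// success is returned; geo-replication is only queued.
function commit(account, tx) {
  applyTo(account.primary, tx);
  account.geoQueue.push(tx);
  return "success";
}

// Stand-in for the background geo-replication to the secondary location.
function drainGeoQueue(account) {
  while (account.geoQueue.length > 0) {
    applyTo(account.secondary, account.geoQueue.shift());
  }
}

function countCopies(account, name) {
  return account.primary
    .concat(account.secondary)
    .filter((node) => node.has(name)).length;
}

const account = createAccount();
commit(account, { op: "put", name: "photo.jpg", content: "bytes" });
console.log(countCopies(account, "photo.jpg")); // 3
drainGeoQueue(account);
console.log(countCopies(account, "photo.jpg")); // 6
commit(account, { op: "delete", name: "photo.jpg" });
drainGeoQueue(account);
console.log(countCopies(account, "photo.jpg")); // 0
```

The last three lines mirror Avkash's warning: a delete is replicated like any other transaction, so the six copies protect against hardware failure, not against an accidental delete.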

Learn more at: http://blogs.msdn.com/b/windowsazurestorage/archive/2011/09/15/introducing-geo-replication-for-windows-azure-storage.aspx

Benjamin Guinebertière (@benjguin) described Analyzing 1 TB of IIS logs with Hadoop Map/Reduce on Azure with JavaScript | Analyse d’1 To de journaux IIS avec Hadoop Map/Reduce en JavaScript in a 2/9/2012 post. From the English version:

As described in a previous post, Microsoft has ported Apache Hadoop to Windows Azure (this will also be available on Windows Server). This is available as a private community technology preview for now.

This does not use Cygwin. One of the contributions Microsoft will propose in return to the open source community is the possibility to use JavaScript.

One of the goals of Hadoop is to work on large amounts of unstructured data. In this sample, we’ll use JavaScript code to parse IIS logs and get information from authenticated sessions.

The Internet Information Services (IIS) logs come from a Web Farm. It may be a web farm on premises or a Web Role on Windows Azure. The logs are copied and consolidated to Windows Azure blob storage. We get a little more than 1 TB of those. Here is how this looks from Windows Azure Storage Explorer:

and from the interactive JavaScript console:

1191124656300 Bytes = 1.083321564 TB
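The figure above only works out if a terabyte is taken as 1024^4 bytes. A quick sketch of that arithmetic (the function name `bytesToBinaryTB` is made up for illustration and is not part of the Hadoop on Azure JavaScript console):

```javascript
// Convert a byte count to binary terabytes (1 TB taken as 1024^4 bytes).
function bytesToBinaryTB(bytes) {
  return bytes / Math.pow(1024, 4);
}

const totalBytes = 1191124656300; // size reported for the consolidated logs
console.log(bytesToBinaryTB(totalBytes)); // a little over 1.08
console.log(totalBytes / 1e12);           // decimal terabytes, about 1.19
```

Either way, the data set is "a little more than 1 TB".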

Here is what the log files look like:

#Software: Microsoft Internet Information Services 7.5

#Version: 1.0

#Date: 2012-01-06 09:09:05

#Fields: date time s-sitename s-computername s-ip cs-method cs-uri-stem cs-uri-query s-port cs-username c-ip cs-version cs(User-Agent) cs(Cookie) cs(Referer) cs-host sc-status sc-substatus sc-win32-status sc-bytes cs-bytes time-taken

2012-01-06 09:09:05 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-francaise - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 5734 321 3343

2012-01-06 09:09:12 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-francaise/huitres - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) - http://site.supersimple.fr/cuisine-francaise site.supersimple.fr 200 0 0 4922 346 890

2012-01-06 09:09:19 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-japonaise - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/cuisine-francaise/huitres site.supersimple.fr 200 0 0 3491 544 906

2012-01-06 09:09:22 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-japonaise/assortiment-de-makis - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/cuisine-japonaise site.supersimple.fr 200 0 0 3198 557 671

2012-01-06 09:09:27 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /blog - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/cuisine-japonaise/assortiment-de-makis site.supersimple.fr 200 0 0 3972 544 2406

2012-01-06 09:09:30 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /blog/marmiton - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/blog site.supersimple.fr 200 0 0 5214 519 718

2012-01-06 09:09:49 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /ustensiles - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/blog/marmiton site.supersimple.fr 200 0 0 6897 525 2859

2012-01-06 09:22:13 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Users/Account/LogOn ReturnUrl=%2Fustensiles 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/ustensiles site.supersimple.fr 200 0 0 3818 555 1203

2012-01-06 09:22:26 W3SVC1273337584 RD00155D360166 10.211.146.27 POST /Users/Account/LogOn ReturnUrl=%2Fustensiles 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8= http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2Fustensiles site.supersimple.fr 302 0 0 729 961 703

2012-01-06 09:22:27 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /ustensiles - 80 Test0001 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8=;+.ASPXAUTH=D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54 http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2Fustensiles site.supersimple.fr 200 0 0 7136 849 1249

2012-01-06 09:22:30 W3SVC1273337584 RD00155D360166 10.211.146.27 GET / - 80 Test0001 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8=;+.ASPXAUTH=D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54 http://site.supersimple.fr/ustensiles site.supersimple.fr 200 0 0 3926 788 1031

2012-01-06 09:22:57 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-francaise - 80 Test0001 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8=;+.ASPXAUTH=D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54 http://site.supersimple.fr/ site.supersimple.fr 200 0 0 5973 795 1093

2012-01-06 09:23:00 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-francaise/gateau-au-chocolat-et-aux-framboises - 80 Test0001 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) __RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8=;+.ASPXAUTH=D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54 http://site.supersimple.fr/cuisine-francaise site.supersimple.fr 200 0 0 8869 849 749

2012-01-06 09:30:50 W3SVC1273337584 RD00155D360166 10.211.146.27 GET / - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - - site.supersimple.fr 200 0 0 3687 364 1281

2012-01-06 09:30:50 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Modules/Orchard.Localization/Styles/orchard-localization-base.css - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 1148 422 749

2012-01-06 09:30:50 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Themes/Classic/Styles/Site.css - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 15298 387 843

2012-01-06 09:30:51 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Themes/Classic/Styles/moduleOverrides.css - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 557 398 1468

2012-01-06 09:30:51 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Core/Shapes/scripts/html5.js - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 1804 370 1015

2012-01-06 09:30:53 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Themes/Classic/Content/current.png - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 387 376 656

2012-01-06 09:30:57 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /modules/orchard.themes/Content/orchard.ico - 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - - site.supersimple.fr 200 0 0 1399 346 468

2012-01-06 09:31:54 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /Users/Account/LogOn ReturnUrl=%2F 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 4018 435 718

2012-01-06 09:32:14 W3SVC1273337584 RD00155D360166 10.211.146.27 POST /Users/Account/LogOn ReturnUrl=%2F 80 - 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 __RequestVerificationToken_Lw__=BpgGSfFnDr9KB5oclPotYchfIFzjWXjJ5qHrtRcXoZmLRjG8pL9fw5CtMAN3Arckjm0ZfLtUsuBUGDNRztQPPWmlGLb6tfzSmELzdYbEg5RktsGNkxBr9+eyU342Lf8wSw2YFxqiUX7X8WlXwt0DQITMg2o= http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2F site.supersimple.fr 302 0 0 709 1083 812

2012-01-06 09:32:14 W3SVC1273337584 RD00155D360166 10.211.146.27 GET / - 80 Test0001 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 __RequestVerificationToken_Lw__=BpgGSfFnDr9KB5oclPotYchfIFzjWXjJ5qHrtRcXoZmLRjG8pL9fw5CtMAN3Arckjm0ZfLtUsuBUGDNRztQPPWmlGLb6tfzSmELzdYbEg5RktsGNkxBr9+eyU342Lf8wSw2YFxqiUX7X8WlXwt0DQITMg2o=;+.ASPXAUTH=94C70A59F9DA0E7294DCAAAEF9A0C52FA585B56A7FC4E01AF24437C84327D3E862548C2C0A5B71DD073443F000CE5767AF9009FFDCDE5F3EE184C3D73CF4BA4C7B8650461A448467FBAB87E311209F4DFB83B19335C9002E5EC5423E145165F64F226AC7F47C19B6035025ABDEDB4A7CAB4FF63A8C22FEED3C6002E6A99920FA8249D3B9 http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2F site.supersimple.fr 200 0 0 3926 935 906

2012-01-06 09:33:22 W3SVC1273337584 RD00155D360166 10.211.146.27 GET / - 80 Test0001 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 __RequestVerificationToken_Lw__=BpgGSfFnDr9KB5oclPotYchfIFzjWXjJ5qHrtRcXoZmLRjG8pL9fw5CtMAN3Arckjm0ZfLtUsuBUGDNRztQPPWmlGLb6tfzSmELzdYbEg5RktsGNkxBr9+eyU342Lf8wSw2YFxqiUX7X8WlXwt0DQITMg2o=;+.ASPXAUTH=94C70A59F9DA0E7294DCAAAEF9A0C52FA585B56A7FC4E01AF24437C84327D3E862548C2C0A5B71DD073443F000CE5767AF9009FFDCDE5F3EE184C3D73CF4BA4C7B8650461A448467FBAB87E311209F4DFB83B19335C9002E5EC5423E145165F64F226AC7F47C19B6035025ABDEDB4A7CAB4FF63A8C22FEED3C6002E6A99920FA8249D3B9 http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2F site.supersimple.fr 200 0 0 3926 935 1156

By loading this sample in Excel, one can see that a session ID can be found in the .ASPXAUTH cookie, which is one of the cookies available in the IIS log fields.
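The same inspection can be done in code instead of Excel. The following is a minimal sketch, assuming space-separated fields in the order given by the `#Fields:` header shown earlier; the helper names (`parseFieldNames`, `parseLogLine`, `extractAspxAuth`) and the shortened sample line with its cookie value `DEADBEEF` are hypothetical and are not taken from Benjamin's script.

```javascript
const FIELDS_HEADER =
  "#Fields: date time s-sitename s-computername s-ip cs-method cs-uri-stem " +
  "cs-uri-query s-port cs-username c-ip cs-version cs(User-Agent) cs(Cookie) " +
  "cs(Referer) cs-host sc-status sc-substatus sc-win32-status sc-bytes " +
  "cs-bytes time-taken";

// Turn the "#Fields:" directive into an array of field names.
function parseFieldNames(headerLine) {
  return headerLine.replace(/^#Fields:\s*/, "").trim().split(/\s+/);
}

// Map one log line to { fieldName: value }; returns null for directives,
// empty lines, and lines whose field count does not match the header.
function parseLogLine(fieldNames, line) {
  if (!line || line.charAt(0) === "#") {
    return null;
  }
  const values = line.split(" ");
  if (values.length !== fieldNames.length) {
    return null;
  }
  const record = {};
  fieldNames.forEach((name, i) => {
    record[name] = values[i];
  });
  return record;
}

// Pull the .ASPXAUTH value out of the cs(Cookie) field, or null if absent.
function extractAspxAuth(cookieField) {
  if (!cookieField || cookieField === "-") {
    return null;
  }
  for (const part of cookieField.split(";")) {
    const j = part.indexOf(".ASPXAUTH=");
    if (j >= 0) {
      return part.substring(j + ".ASPXAUTH=".length);
    }
  }
  return null;
}

// Shortened, made-up line in the same layout as the real logs.
const sampleLine =
  "2012-01-06 09:22:27 W3SVC1 WEB01 10.0.0.1 GET /ustensiles - 80 Test0001 " +
  "192.0.2.1 HTTP/1.1 Mozilla/5.0 token=abc;+.ASPXAUTH=DEADBEEF " +
  "http://example.test/ example.test 200 0 0 7136 849 1249";

const fieldNames = parseFieldNames(FIELDS_HEADER);
const record = parseLogLine(fieldNames, sampleLine);
console.log(record["cs-username"], extractAspxAuth(record["cs(Cookie)"]));
```

Looking fields up by name rather than by position keeps the code readable, at the cost of building one object per line; for a terabyte of logs, positional access may be the more economical choice.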

At the end of the processing, the goal is to produce the following results in two flat-file structures.

Session headers give a summary of what happened in the session. The fields are a dummy row ID, session ID, username, start date/time, end date/time, and number of visited URLs.

Session details give the list of URLs that were visited in a session. The fields are a dummy row ID, session ID, hit time, and URL.

134211969 19251ab2b91cb3158e21c0c74f597a9872ed257d test2272g5x467 2012-01-28 20:06:08 2012-01-28 20:32:33 11

134213036 19251cd8a444c6642bbedc1ba5d848f26ad3c789 test1268gAx168 2012-02-02 20:01:47 2012-02-02 20:25:22 13

134213561 19252827f25750af10aaf89a9de3fc35ad15d97e test1987g4x214 2012-01-27 01:00:46 2012-01-27 01:06:26 5

134214566 19252bb73667cc04e5de2a6eebe5e8ba7cc77c4a test3333g4x681 2012-01-27 20:00:03 2012-01-27 20:03:23 12

134214866 19252bf03e7d962a41fde46127810339c587b0ae test1480hFx690 2012-01-27 18:18:51 2012-01-27 18:32:51 3

134215841 19253a4d1496dfea6e264ba7839d07ebd0a9662e test2467g6x109 2012-01-29 18:02:19 2012-01-29 18:13:10 11

134216451 19253b3c19f8a0f46fd44e6f979f3e8bedda7881 test3119hLx29 2012-02-02 18:04:17 2012-02-02 18:21:31 7

134216974 19253ff8924893dd72f6453568084e53985a8817 test2382g9x8 2012-02-01 01:07:55 2012-02-01 01:26:17 5

134217496 1925418002459ad897ed41b156f0e3eab78caa13 test3854g4x823 2012-01-27 02:06:38 2012-01-27 02:27:54 5

134216699 19253ff8924893dd72f6453568084e53985a8817 01:07:55 /Core/Shapes/scripts/html5.js

134216781 19253ff8924893dd72f6453568084e53985a8817 01:41:01 /Modules/Orchard.Localization/Styles/orchard-localization-base.css

134216900 19253ff8924893dd72f6453568084e53985a8817 01:25:02 /Users/Account/LogOff

134217072 1925418002459ad897ed41b156f0e3eab78caa13 02:08:01 /Modules/Orchard.Localization/Styles/orchard-localization-base.css

134217191 1925418002459ad897ed41b156f0e3eab78caa13 02:27:54 /Users/Account/LogOff

134217265 1925418002459ad897ed41b156f0e3eab78caa13 02:06:38 /

134217319 1925418002459ad897ed41b156f0e3eab78caa13 02:26:14 /Themes/Classic/Styles/moduleOverrides.css

134217414 1925418002459ad897ed41b156f0e3eab78caa13 02:17:08 /Core/Shapes/scripts/html5.js

134217596 1925420f22e51f948314b2a6fa0c53fe4d002455 19:11:29 /blog

134217654 1925420f22e51f948314b2a6fa0c53fe4d002455 19:00:21 /cuisine-francaise/barbecue

Note that the two structures could be joined through the sessionid later on, with HIVE for instance, but this is beyond the scope of this post. Also note that the sessionid is not the exact value of the .ASPXAUTH cookie but a SHA1 hash of it, so that it is shorter, in order to optimize network traffic and produce a smaller result.
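The session IDs in these listings are 40 hexadecimal characters long, which is the length of a SHA-1 digest. As a rough illustration of that shortening step, here is a sketch that uses Node.js's built-in `crypto` module. That is an assumption made for the example: the Hadoop on Azure JavaScript environment is not Node.js, and the helper name `sessionKey` is hypothetical.

```javascript
const nodeCrypto = require("crypto");

// Reduce an arbitrarily long cookie value to a 40-character hex key.
function sessionKey(aspxAuthValue) {
  return nodeCrypto
    .createHash("sha1")
    .update(aspxAuthValue, "utf8")
    .digest("hex");
}

// Made-up stand-in for a long .ASPXAUTH value.
const longCookie = "D5796612E924B604".repeat(16);
const key = sessionKey(longCookie);
console.log(longCookie.length, "->", key.length); // 256 -> 40
```

A shorter key means less data moved between the map and reduce phases, since the key is written once per log line.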

Here the code I used to do that. I may write another blog post later on to comment further that code.iislogsAnalysis.js:

iislogsAnalysis.js:

/*
IIS logs fields
0 date 2012-01-06
1 time 09:09:05
2 s-sitename W3SVC1273337584
3 s-computername RD00155D360166
4 s-ip 10.211.146.27
5 cs-method GET
6 cs-uri-stem /cuisine-francaise
7 cs-uri-query -
8 s-port 80
9 cs-username -
10 c-ip 94.245.127.11
11 cs-version HTTP/1.1
12 cs(User-Agent) Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0)
13 cs(Cookie) -
14 cs(Referer) http://site.supersimple.fr/
15 cs-host site.supersimple.fr
16 sc-status 200
17 sc-substatus 0
18 sc-win32-status 0
19 sc-bytes 5734
20 cs-bytes 321
21 time-taken 3343

sample lines
2012-01-06 09:09:05 W3SVC1273337584 RD00155D360166 10.211.146.27 GET /cuisine-francaise - 80 - 94.245.127.11 HTTP/1.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) - http://site.supersimple.fr/ site.supersimple.fr 200 0 0 5734 321 3343
2012-01-06 09:32:14 W3SVC1273337584 RD00155D360166 10.211.146.27 GET / - 80 Test0001 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 __RequestVerificationToken_Lw__=BpgGSfFnDr9KB5oclPotYchfIFzjWXjJ5qHrtRcXoZmLRjG8pL9fw5CtMAN3Arckjm0ZfLtUsuBUGDNRztQPPWmlGLb6tfzSmELzdYbEg5RktsGNkxBr9+eyU342Lf8wSw2YFxqiUX7X8WlXwt0DQITMg2o=;+.ASPXAUTH=94C70A59F9DA0E7294DCAAAEF9A0C52FA585B56A7FC4E01AF24437C84327D3E862548C2C0A5B71DD073443F000CE5767AF9009FFDCDE5F3EE184C3D73CF4BA4C7B8650461A448467FBAB87E311209F4DFB83B19335C9002E5EC5423E145165F64F226AC7F47C19B6035025ABDEDB4A7CAB4FF63A8C22FEED3C6002E6A99920FA8249D3B9 http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2F site.supersimple.fr 200 0 0 3926 935 906
2012-01-06 09:33:22 W3SVC1273337584 RD00155D360166 10.211.146.27 GET / - 80 Test0001 94.245.127.13 HTTP/1.1 Mozilla/5.0+(Windows+NT+6.1;+WOW64)+AppleWebKit/535.7+(KHTML,+like+Gecko)+Chrome/16.0.912.63+Safari/535.7 __RequestVerificationToken_Lw__=BpgGSfFnDr9KB5oclPotYchfIFzjWXjJ5qHrtRcXoZmLRjG8pL9fw5CtMAN3Arckjm0ZfLtUsuBUGDNRztQPPWmlGLb6tfzSmELzdYbEg5RktsGNkxBr9+eyU342Lf8wSw2YFxqiUX7X8WlXwt0DQITMg2o=;+.ASPXAUTH=94C70A59F9DA0E7294DCAAAEF9A0C52FA585B56A7FC4E01AF24437C84327D3E862548C2C0A5B71DD073443F000CE5767AF9009FFDCDE5F3EE184C3D73CF4BA4C7B8650461A448467FBAB87E311209F4DFB83B19335C9002E5EC5423E145165F64F226AC7F47C19B6035025ABDEDB4A7CAB4FF63A8C22FEED3C6002E6A99920FA8249D3B9 http://site.supersimple.fr/Users/Account/LogOn?ReturnUrl=%2F site.supersimple.fr 200 0 0 3926 935 1156
*/

/*
A cookie with authentication looks like this
__RequestVerificationToken_Lw__=KLZ1dz1Aa4o2UdwJVwr0JhzSwmmSHmID9i/gutMvQkZWX9Q4QDktFHHiBhF8mSd6Cg5oIEeUpy/KNF7VLRFkrqN28raL8PfNuv0IfuKXxgl5s+uZpcvfGE6Olfsu7uNLg2bWwLZkrqXjv9cpRGaiXelmaM8=;+.ASPXAUTH=D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54
The interesting part is
ASPXAUTH=D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54
the session ID is
D5796612E924B60496C115914CC8F93239E99EEF4B3D6ED74BDD5C8C38D8C115D3021AB7F3B06E563EDE612BFBCBBE756803C85DECFACCA080E890C5DA6B4CA00A51792D812C93101F648505133C9E2C10779FA3E5AC19EE5E2B7E130C72C18F6309AEB736ABD06C87A7D636976A20534833E20160EC04B6B6617B378845AE627979EE54
*/

/*
the goal is to have this kind of file at the end:
fffffff0a929d9fbbbbb0b4ffa744842f9188e01 D 20:07:53 /blog
fffffff0a929d9fbbbbb0b4ffa744842f9188e01 H test2573g2x403 2012-01-25 20:07:53 2012-01-25 20:33:43 7
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:09:41 /Users/Account/LogO
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:26:12 /blog/marmiton
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:16:58 /cuisine-francaise/barbecue
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:10:00 /blog/marmiton
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:11:24 /
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:27:50 /cuisine-japonaise/assortiment-de-makis
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:29:31 /cuisine-francaise/fondue-au-fromage
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:05:19 /cuisine-japonaise
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:31:32 /cuisine-francaise/dinde
fffffff7e3dbde467fb4a004c31b41e5fdb49116 D 18:04:41 /cuisine-francaise/fondue-au-fromage
fffffff7e3dbde467fb4a004c31b41e5fdb49116 H test3698g4x509 2012-01-27 18:04:41 2012-01-27 18:31:32 10
*/

var map = function (key, value, context) {
    var f; // fields
    var i;
    var s, sessionID, sessionData;
    var generated;

    if (value === null || value === "") {
        return;
    }
    if (value.charAt(0) === "#") {
        // comment line of the IIS log
        return;
    }

    f = value.split(" ");
    if (f[9] === null || f[9] === "" || f[9] === "-") {
        // username is anonymous, skip the log line
        return;
    }

    s = extractSessionFromCookies(f[13]);
    if (!s) {
        return;
    }

    sessionID = Sha1.hash(s); // hashing yields a shorter key
    // M record: username, date, time, URL
    generated = "M " + f[9] + " " + f[0] + " " + f[1] + " " + f[6];
    context.write(sessionID, generated);

    function extractSessionFromCookies(cookies) {
        var i, j, sessionID;
        var cookieParts = cookies.split(";");
        for (i = 0; i < cookieParts.length; i++) {
            j = cookieParts[i].indexOf("ASPXAUTH=");
            if (j >= 0) {
                sessionID = cookieParts[i].substring(j + "ASPXAUTH=".length);
                break;
            }
        }
        return sessionID;
    }
};

var reduce = function (key, values, context) {
    var generated;
    var minDate = null;
    var maxDate = null;
    var username = null;
    var currentDate, currentMinDate, currentMaxDate;
    var nbUrls = 0;
    var f;
    var currentValue;
    var firstChar;

    while (values.hasNext()) {
        currentValue = values.next();
        firstChar = currentValue.substring(0, 1);
        if (firstChar == "M") {
            // record emitted by map
            f = currentValue.split(" ");
            if (username === null) {
                username = f[1];
            }
            currentDate = f[2] + " " + f[3];
            if (minDate === null) {
                minDate = currentDate;
                maxDate = currentDate;
            } else {
                if (currentDate < minDate) {
                    minDate = currentDate;
                } else if (currentDate > maxDate) {
                    // values are not guaranteed to arrive in time order
                    maxDate = currentDate;
                }
            }
            context.write(key, "D " + f[3] + " " + f[4]); // D stands for details
            nbUrls++;
        } else if (firstChar == "H") {
            // header already produced by a combiner pass: merge it
            f = currentValue.split(" ");
            if (username === null) {
                username = f[1];
            }
            currentMinDate = f[2] + " " + f[3];
            currentMaxDate = f[4] + " " + f[5];
            if (minDate === null) {
                minDate = currentMinDate;
                maxDate = currentMaxDate;
            } else {
                if (currentMinDate < minDate) {
                    minDate = currentMinDate;
                }
                if (currentMaxDate > maxDate) {
                    maxDate = currentMaxDate;
                }
            }
            nbUrls += parseInt(f[6], 10);
        } else if (firstChar == "D") {
            // detail already produced by a combiner pass: pass it through
            context.write(key, currentValue);
        } else {
            // unexpected record type: flag it with an X prefix
            context.write(key, "X" + firstChar + " " + currentValue);
        }
    }

    generated = "H " + username + " " + minDate + " " + maxDate + " " + nbUrls.toString(); // H stands for Header
    context.write(key, generated);
};

var main = function (factory) {
    var job = factory.createJob("iisLogAnalysis", "map", "reduce");
    job.setCombiner("reduce");
    job.setNumReduceTasks(64);
    job.waitForCompletion(true);
};

//V120120c

/* - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - */
/* SHA-1 implementation in JavaScript | (c) Chris Veness 2002-2010 | www.movable-type.co.uk */
/* - see http://csrc.nist.gov/groups/ST/toolkit/secure_hashing.html */
/* http://csrc.nist.gov/groups/ST/toolkit/examples.html */
/* - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - */

var Sha1 = {}; // Sha1 namespace

/**
 * Generates SHA-1 hash of string
 *
 * @param {String} msg String to be hashed
 * @param {Boolean} [utf8encode=true] Encode msg as UTF-8 before generating hash
 * @returns {String} Hash of msg as hex character string
 */
Sha1.hash = function (msg, utf8encode) {
    utf8encode = (typeof utf8encode == 'undefined') ? true : utf8encode;

    // convert string to UTF-8, as SHA only deals with byte-streams
    if (utf8encode) msg = Utf8.encode(msg);

    // constants [§4.2.1]
    var K = [0x5a827999, 0x6ed9eba1, 0x8f1bbcdc, 0xca62c1d6];

    // PREPROCESSING

    msg += String.fromCharCode(0x80); // add trailing '1' bit (+ 0's padding) to string [§5.1.1]

    // convert string msg into 512-bit/16-integer blocks arrays of ints [§5.2.1]
    var l = msg.length / 4 + 2; // length (in 32-bit integers) of msg + '1' + appended length
    var N = Math.ceil(l / 16); // number of 16-integer-blocks required to hold 'l' ints
    var M = new Array(N);

    for (var i = 0; i < N; i++) {
        M[i] = new Array(16);
        for (var j = 0; j < 16; j++) { // encode 4 chars per integer, big-endian encoding
            M[i][j] = (msg.charCodeAt(i * 64 + j * 4) << 24) | (msg.charCodeAt(i * 64 + j * 4 + 1) << 16) |
                (msg.charCodeAt(i * 64 + j * 4 + 2) << 8) | (msg.charCodeAt(i * 64 + j * 4 + 3));
        } // note running off the end of msg is ok 'cos bitwise ops on NaN return 0
    }
    // add length (in bits) into final pair of 32-bit integers (big-endian) [§5.1.1]
    // note: most significant word would be (len-1)*8 >>> 32, but since JS converts
    // bitwise-op args to 32 bits, we need to simulate this by arithmetic operators
    M[N - 1][14] = ((msg.length - 1) * 8) / Math.pow(2, 32);
    M[N - 1][14] = Math.floor(M[N - 1][14]);
    M[N - 1][15] = ((msg.length - 1) * 8) & 0xffffffff;

    // set initial hash value [§5.3.1]
    var H0 = 0x67452301;
    var H1 = 0xefcdab89;
    var H2 = 0x98badcfe;
    var H3 = 0x10325476;
    var H4 = 0xc3d2e1f0;

    // HASH COMPUTATION [§6.1.2]

    var W = new Array(80);
    var a, b, c, d, e;
    for (var i = 0; i < N; i++) {

        // 1 - prepare message schedule 'W'
        for (var t = 0; t < 16; t++) W[t] = M[i][t];
        for (var t = 16; t < 80; t++) W[t] = Sha1.ROTL(W[t - 3] ^ W[t - 8] ^ W[t - 14] ^ W[t - 16], 1);

        // 2 - initialise five working variables a, b, c, d, e with previous hash value
        a = H0; b = H1; c = H2; d = H3; e = H4;

        // 3 - main loop
        for (var t = 0; t < 80; t++) {
            var s = Math.floor(t / 20); // seq for blocks of 'f' functions and 'K' constants
            var T = (Sha1.ROTL(a, 5) + Sha1.f(s, b, c, d) + e + K[s] + W[t]) & 0xffffffff;
            e = d;
            d = c;
            c = Sha1.ROTL(b, 30);
            b = a;
            a = T;
        }

        // 4 - compute the new intermediate hash value
        H0 = (H0 + a) & 0xffffffff; // note 'addition modulo 2^32'
        H1 = (H1 + b) & 0xffffffff;
        H2 = (H2 + c) & 0xffffffff;
        H3 = (H3 + d) & 0xffffffff;
        H4 = (H4 + e) & 0xffffffff;
    }

    return Sha1.toHexStr(H0) + Sha1.toHexStr(H1) +
        Sha1.toHexStr(H2) + Sha1.toHexStr(H3) + Sha1.toHexStr(H4);
};

//
// function 'f' [§4.1.1]
//
Sha1.f = function (s, x, y, z) {
    switch (s) {
        case 0: return (x & y) ^ (~x & z); // Ch()
        case 1: return x ^ y ^ z; // Parity()
        case 2: return (x & y) ^ (x & z) ^ (y & z); // Maj()
        case 3: return x ^ y ^ z; // Parity()
    }
};

//
// rotate left (circular left shift) value x by n positions [§3.2.5]
//
Sha1.ROTL = function (x, n) {
    return (x << n) | (x >>> (32 - n));
};

//
// hexadecimal representation of a number
// (note toString(16) is implementation-dependent, and
// in IE returns signed numbers when used on full words)
//
Sha1.toHexStr = function (n) {
    var s = "", v;
    for (var i = 7; i >= 0; i--) {
        v = (n >>> (i * 4)) & 0xf;
        s += v.toString(16);
    }
    return s;
};

/* - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - */
/* Utf8 class: encode / decode between multi-byte Unicode characters and UTF-8 multiple */
/* single-byte character encoding (c) Chris Veness 2002-2010 */
/* - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - */

var Utf8 = {}; // Utf8 namespace

/**
 * Encode multi-byte Unicode string into utf-8 multiple single-byte characters
 * (BMP / basic multilingual plane only)
 *
 * Chars in range U+0080 - U+07FF are encoded in 2 chars, U+0800 - U+FFFF in 3 chars
 *
 * @param {String} strUni Unicode string to be encoded as UTF-8
 * @returns {String} encoded string
 */
Utf8.encode = function (strUni) {
    // use regular expressions & String.replace callback function for better efficiency
    // than procedural approaches
    var strUtf = strUni.replace(
        /[\u0080-\u07ff]/g, // U+0080 - U+07FF => 2 bytes 110yyyyy, 10zzzzzz
        function (c) {
            var cc = c.charCodeAt(0);
            return String.fromCharCode(0xc0 | cc >> 6, 0x80 | cc & 0x3f);
        }
    );
    strUtf = strUtf.replace(
        /[\u0800-\uffff]/g, // U+0800 - U+FFFF => 3 bytes 1110xxxx, 10yyyyyy, 10zzzzzz
        function (c) {
            var cc = c.charCodeAt(0);
            return String.fromCharCode(0xe0 | cc >> 12, 0x80 | cc >> 6 & 0x3F, 0x80 | cc & 0x3f);
        }
    );
    return strUtf;
};

/**
 * Decode utf-8 encoded string back into multi-byte Unicode characters
 *
 * @param {String} strUtf UTF-8 string to be decoded back to Unicode
 * @returns {String} decoded string
 */
Utf8.decode = function (strUtf) {
    // note: decode 3-byte chars first as decoded 2-byte strings could appear to be 3-byte char!
    var strUni = strUtf.replace(
        /[\u00e0-\u00ef][\u0080-\u00bf][\u0080-\u00bf]/g, // 3-byte chars
        function (c) { // (note parentheses for precedence)
            var cc = ((c.charCodeAt(0) & 0x0f) << 12) | ((c.charCodeAt(1) & 0x3f) << 6) | (c.charCodeAt(2) & 0x3f);
            return String.fromCharCode(cc);
        }
    );
    strUni = strUni.replace(
        /[\u00c0-\u00df][\u0080-\u00bf]/g, // 2-byte chars
        function (c) { // (note parentheses for precedence)
            var cc = (c.charCodeAt(0) & 0x1f) << 6 | c.charCodeAt(1) & 0x3f;
            return String.fromCharCode(cc);
        }
    );
    return strUni;
};

/* - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - */

This code will produce an intermediary flat file structure that looks like this (headers are after details):

00000e399c3e94f8f919314762998b784d178bd4 D 02:14:32 /Core/Shapes/scripts/html5.js

00000e399c3e94f8f919314762998b784d178bd4 D 02:00:54 /Users/Account/LogOff

00000e399c3e94f8f919314762998b784d178bd4 D 02:09:39 /Modules/Orchard.Localization/Styles/orchard-localization-base.css

00000e399c3e94f8f919314762998b784d178bd4 D 02:13:24 /Themes/Classic/Styles/moduleOverrides.css

00000e399c3e94f8f919314762998b784d178bd4 D 02:12:37 /

00000e399c3e94f8f919314762998b784d178bd4 H test3059g2x50 2012-01-25 02:00:54 2012-01-25 02:12:37 5

00000e7fd498e90cf3f10b5158e1ccf6ff3b8153 D 00:26:22 /Users/Account/LogOff

00000e7fd498e90cf3f10b5158e1ccf6ff3b8153 D 00:24:12 /

00000e7fd498e90cf3f10b5158e1ccf6ff3b8153 H test0118g5x29 2012-01-28 00:24:12 2012-01-28 00:26:22 2

Then, two more jobs extract only the headers and only the details, respectively. Here they are.

iisLogsAnalysisToH.js

// V120120a
var map = function (key, value, context) {
    var generated;
    var minDate;
    var maxDate;
    var username;
    var nbUrls;
    var l, f;
    var firstChar;
    var sessionID;

    if (!value) {
        return;
    }

    // each input line is: sessionID <tab> record
    l = value.split("\t");
    if (l.length < 2) {
        return;
    }
    sessionID = l[0];
    firstChar = l[1].substring(0, 1);
    if (firstChar != "H") {
        // keep header records only
        return;
    }

    f = l[1].split(" ");
    username = f[1];
    minDate = f[2] + " " + f[3];
    maxDate = f[4] + " " + f[5];
    nbUrls = f[6];
    generated = sessionID + "\t" + username + "\t" + minDate + "\t" + maxDate + "\t" + nbUrls;
    context.write(key, generated);
};

var main = function (factory) {
    var job = factory.createJob("iisLogAnalysisToH", "map", "");
    job.setNumReduceTasks(0); // map-only job
    job.waitForCompletion(true);
};

and iisLogsAnalysisToD.js:

// V120120a
var map = function (key, value, context) {
    var generated;
    var hitTime;
    var Url;
    var l, f;
    var firstChar;
    var sessionID;

    if (!value) {
        return;
    }

    // each input line is: sessionID <tab> record
    l = value.split("\t");
    if (l.length < 2) {
        return;
    }
    sessionID = l[0];
    firstChar = l[1].substring(0, 1);
    if (firstChar != "D") {
        // keep detail records only
        return;
    }

    f = l[1].split(" ");
    hitTime = f[1];
    Url = f[2];
    generated = sessionID + "\t" + hitTime + "\t" + Url;
    context.write(key, generated);
};

var main = function (factory) {
    var job = factory.createJob("iisLogAnalysisToD", "map", "");
    job.setNumReduceTasks(0); // map-only job
    job.waitForCompletion(true);
};

Before executing the code, one needs to provision a cluster in order to have processing power. With Windows Azure, here is how this can be done:

In order to copy the data from blob storage to the Hadoop distributed file system (HDFS), one way is to connect through Remote Desktop to the head node and issue a distcp command. Before that, one needs to configure Windows Azure Storage (ASV) in the console.

distcp automatically generates a map-only job that copies data from one location to another in a distributed way. This job can be tracked from the standard Hadoop console:

JavaScript code must be uploaded to HDFS before being executed:

Then the JavaScript code can be executed:

This code runs within a few hours on a 1x8CPU+32x2CPU cluster.
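Because a full run takes hours, it can help to exercise the map logic on a few lines before submitting a job. The following is only a sketch under stated assumptions: `makeContext` is a hypothetical stand-in for the `context` object that the Hadoop JavaScript layer passes to `map` (only `write` is mimicked), `headerMap` repeats the header-extraction logic of iisLogsAnalysisToH.js, and the sample lines assume a tab between the key and the record, as the scripts' `split("\t")` implies.

```javascript
// Hypothetical stand-in for the context object supplied by the Hadoop
// JavaScript layer; only the write(key, value) call is mimicked.
function makeContext() {
    var written = [];
    return {
        write: function (key, value) { written.push([key, value]); },
        written: written
    };
}

// Same logic as the map function of iisLogsAnalysisToH.js: keep "H" records only
var headerMap = function (key, value, context) {
    if (!value) { return; }
    var l = value.split("\t");
    if (l.length < 2) { return; }
    if (l[1].charAt(0) !== "H") { return; }
    var f = l[1].split(" ");
    var fields = [l[0], f[1], f[2] + " " + f[3], f[4] + " " + f[5], f[6]];
    context.write(key, fields.join("\t"));
};

// Two records taken from the intermediary sample output (tab separator assumed)
var sampleLines = [
    "00000e7fd498e90cf3f10b5158e1ccf6ff3b8153\tD 00:24:12 /",
    "00000e7fd498e90cf3f10b5158e1ccf6ff3b8153\tH test0118g5x29 2012-01-28 00:24:12 2012-01-28 00:26:22 2"
];

var ctx = makeContext();
sampleLines.forEach(function (line, offset) { headerMap(offset, line, ctx); });
console.log(ctx.written); // only the header record is emitted
```

Checking a handful of records this way catches field-index mistakes cheaply; it says nothing about cluster behavior such as combiner passes or task counts.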

Once it is finished, the two remaining scripts can be run in parallel (or not):

Then, one gets the result in HDFS folders that can be copied back to Windows Azure blobs through distcp, or exposed as HIVE tables and retrieved through SSIS in SQL Server or SQL Azure thanks to the ODBC driver for HIVE. This may be explained in a future blog post.

Here are just the HIVE commands to view the files as tables:

CREATE EXTERNAL TABLE iisLogsHeader (rowID STRING, sessionID STRING, username STRING, startDateTime STRING, endDateTime STRING, nbUrls INT)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'

STORED AS TEXTFILE

LOCATION '/user/cornac/iislogsH'

Michelle Hart reported NEW! Wiki launched for Apache Hadoop on Windows Azure on 2/8/2012:

Although Apache Hadoop on Windows Azure is currently only available via CTP, you can get a jumpstart learning all about it by visiting the Apache Hadoop on Windows wiki. This wiki also covers Apache Hadoop on Windows Server.

The wiki contains overview information about Apache Hadoop, as well as information about the Hadoop offerings on Windows and related Microsoft technologies, including Windows Azure. It also provides links to more detailed technical content from various sources and in various formats: How-to topics, Code Samples, Videos, and more.

Avkash Chauhan (@avkashchauhan) described the Internals of Hadoop Pig Operators as MapReduce Job in a 2/8/2012 post:

I was recently asked to show that Pig scripts are actually MapReduce jobs, so to explain it in a very simple way I created the following example:

- Read a text file using Pig Script

- Dump the content of the file

As you can see below, a MapReduce job was initiated when the “dump” command was used:

c:\apps\dist>pig

2012-02-09 05:19:12,777 [main] INFO org.apache.pig.Main - Logging error messages to: c:\apps\dist\pig_1328764752777.log

2012-02-09 05:19:13,198 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: hdfs://10.114.226.34:9000

2012-02-09 05:19:13,652 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to map-reduce job tracker at: 10.114.226.34:9010

grunt> raw = load 'avkashwordfile.txt';

grunt> dump raw;

2012-02-09 05:19:46,542 [main] INFO org.apache.pig.tools.pigstats.ScriptState - Pig features used in the script: UNKNOWN

2012-02-09 05:19:46,542 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - pig.usenewlogicalplan is set to true. New logical plan will be used.

2012-02-09 05:19:46,761 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - (Name: raw: Store(hdfs://10.114.226.34:9000/tmp/temp-1709215369/tmp275450578:org.apache.pig.impl.io.InterStorage) - scope-1 Operator Key: scope-1)

2012-02-09 05:19:46,776 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MRCompiler - File concatenation threshold: 100 optimistic? false

2012-02-09 05:19:46,823 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MultiQueryOptimizer - MR plan size before optimization: 1

2012-02-09 05:19:46,823 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MultiQueryOptimizer - MR plan size after optimization: 1

2012-02-09 05:19:46,995 [main] INFO org.apache.pig.tools.pigstats.ScriptState - Pig script settings are added to the job

2012-02-09 05:19:47,026 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - mapred.job.reduce.markreset.buffer.percent is not set, set to default 0.3

2012-02-09 05:19:48,308 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Setting up single store job

2012-02-09 05:19:48,339 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 1 map-reduce job(s) waiting for submission.

2012-02-09 05:19:48,839 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 0% complete

2012-02-09 05:19:48,870 [Thread-6] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1

2012-02-09 05:19:48,870 [Thread-6] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1

2012-02-09 05:19:48,886 [Thread-6] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths (combined) to process : 1

2012-02-09 05:19:51,183 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - HadoopJobId: job_201202082253_0006

2012-02-09 05:19:51,183 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - More information at: http://10.114.226.34:50030/jobdetails.jsp?jobid=job_201202082253_0006

2012-02-09 05:20:15,198 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 50% complete

2012-02-09 05:20:16,198 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 50% complete

2012-02-09 05:20:21,198 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 50% complete

2012-02-09 05:20:30,932 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 100% complete

2012-02-09 05:20:30,932 [main] INFO org.apache.pig.tools.pigstats.PigStats - Script Statistics:

HadoopVersion PigVersion UserId StartedAt FinishedAt Features

0.20.203.1-SNAPSHOT 0.8.1-SNAPSHOT avkash 2012-02-09 05:19:46 2012-02-09 05:20:30 UNKNOWN

Success!

Job Stats (time in seconds):

JobId Maps Reduces MaxMapTime MinMapTIme AvgMapTime MaxReduceTime MinReduceTime AvgReduceTime Alias Feature Outputs

job_201202082253_0006 1 0 12 12 12 0 0 0 raw MAP_ONLY hdfs://10.114.226.34:9000/tmp/temp-1709215369/tmp275450578,

Input(s):

Successfully read 15 records (482 bytes) from: "hdfs://10.114.226.34:9000/user/avkash/avkashwordfile.txt"

Output(s):

Successfully stored 15 records (183 bytes) in: "hdfs://10.114.226.34:9000/tmp/temp-1709215369/tmp275450578"

Counters:

Total records written : 15

Total bytes written : 183

Spillable Memory Manager spill count : 0

Total bags proactively spilled: 0

Total records proactively spilled: 0

Job DAG:

job_201202082253_0006

2012-02-09 05:20:30,948 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Success!

2012-02-09 05:20:30,979 [main] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1

2012-02-09 05:20:30,979 [main] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1

(avkash)

(amit)

(akhil)

(avkash)

(hello)

(world)

(hello)

(state)

(avkash)

(akhil)

(world)

(state)

(world)

(state)

(hello)

grunt>


SQL Azure Database, Federations and Reporting

Sahil Malik (@sahilmalik) described Two Azure Success Stories in One Day in a 2/9/2012 post to his WinSmarts blog:

I’m doing a training on Azure, and right here in the class we had two success stories.

- There are a couple of people in the class who run third-party software for the banking industry. They are considering moving to Azure, and one of the biggest, most important pieces to move is their database. In a matter of minutes, we were able to move their entire database, including data, to a SQL Azure database. We did so by using http://sqlazuremw.codeplex.com/.

- We have another very interesting group of individuals in the class whose aim is to build a mobile application on the Android platform that is able to bring in data from on-premise applications easily and securely. With very little effort we were able to author a service running in the cloud and bring the data into an Android emulator. This took only about 20-30 minutes to do. Tomorrow, I will help them enhance this to use Azure ServiceBus so we can securely pull the data from an on-premise application and have a more or less fully functional application.

Very exciting to say the least!

Cihan Biyikoglu (@cihangirb) explained Connection Pool Fragmentation: Use Federations and you won’t need to learn about these nasty problems that come with sharding! in a 2/8/2012 post:

Sharding has been around for a while and I have seen quite a few systems that utilize SQL Azure with 100s of databases and 100s of compute nodes, and I have tweeted and written about them in the past. Like this case with Flavorus:

Ticketing Company Scales to Sell 150,000 Tickets in 10 Seconds by Moving to Cloud Computing Solution

James has certainly been a great partner to work with and he is an amazingly talented guy who can do magic to pull some amazing results together in a few weeks. You need to read the case study for full details, but basically he got Jetstream to scale to sell 150,000 tickets in roughly 10 seconds with 550 SQL Azure databases and 750 compute nodes. And he did that in about 3 weeks' time, testing included!

These types of systems are now common, but the scale problems at these levels certainly get complex. One of those that hits many customers is the problem with connection multiplexing, or simply connection pool fragmentation. Bear with me, this one takes a paragraph to explain:

Imagine a sharded system with M shards and N middle tier servers, each handling a maximum of C concurrent requests. The number of connections you need to establish is M*N*C. That is because every middle tier server may have to establish up to C connections to every shard, given that requests arrive randomly distributed across the entire site. Now imagine the formula with some numbers; I’ll be conservative and say 50 shards, 75 middle tier servers and 10 concurrent connections. Here is what you end up with:

M * N * C = 50 * 75 * 10 = 37,500 connections

Over 37K… That is a lot of connections! Here are some other numbers;

- Each middle tier server ends up with M connection pools with C connections in each in the worst case. That is 500 connections from each middle tier machine.

- Also, every shard maintains 750 connections from the middle tier servers in the worst case. That is a lot of connections to maintain as well. A large number of connections is bad because it can cause you to become a victim of throttling … not a good thing …

Another fact of life is that life isn’t perfectly distributed so what happens most times is these 500 connections in the middle tier server don’t get used enough to stay alive. SQL Azure terminates them after a while of being idle. You end up with many of these idle connections dead in the pool. That means the next time you hit this dead connection to a shard from this app server, you have to reestablish a cold connection from the client all the way to the db with a full login and whole bunch of other handshakes that cost you orders of magnitude more in latency.

This issue can be referred to as connection pool fragmentation… Connection Pooling documentation also makes references to the issue: http://msdn.microsoft.com/en-us/library/8xx3tyca.aspx and look for fragmentation. Push M or N or C higher and things get much worse.

Federations cure this for apps. With federations the connection string points to the root database. Since we ‘fool’ the middle tier into connecting to the root in the connection string, M=1 and the total number of connections from all middle tier servers to SQL Azure is only N*C = 750. Compared to over 37K, that is a huge improvement!

Not only that, but each middle tier server has 1 connection pool to maintain with only C=10 connections… Much better than 500. Member connections can get more complicated because of pooling in the gateway tier, but it is much, much better than 750 connections per shard as well. So this makes the problem go away! This is the magic of the USE FEDERATION statement. No more connection pool fragmentation.
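The arithmetic in this comparison can be reproduced with a few lines of JavaScript. This is only an illustrative sketch: `connectionCounts` is a hypothetical helper, not part of any SQL Azure API, and it computes the worst-case figures quoted above from M, N and C.

```javascript
// M = databases named in connection strings, N = middle tier servers,
// C = concurrent connections per server (worst case throughout)
function connectionCounts(m, n, c) {
    return {
        total: m * n * c,     // all connections across the system
        perServer: m * c,     // M pools of C connections on each middle tier server
        perDatabase: n * c    // connections each target database sees from N servers
    };
}

// Plain sharding: every server may connect to each of the 50 shards
var sharded = connectionCounts(50, 75, 10);

// Federations: the connection string targets the root database only, so M = 1
var federated = connectionCounts(1, 75, 10);

console.log(sharded);   // { total: 37500, perServer: 500, perDatabase: 750 }
console.log(federated); // { total: 750, perServer: 10, perDatabase: 750 }
```

The only input that changes between the two calls is M, which is why the per-server pool count drops from 500 to 10 while the total drops by the same factor of 50.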

Another great benefit of USE FEDERATION is that it gives you the ability to maintain your connection object and keep rewiring your connection from the gateway to the db nodes in SQL Azure without a disconnect. With sharding, since we don’t support the USE statement yet, the only way to connect to another shard is to disconnect and reconnect. With USE FEDERATION you can simply keep doing more USE FEDERATIONs and never have to close your connection!

If you made it this far, thanks for reading all this… If you use federations, you can forget what you read. Just remember that you didn’t have to learn more about connection pool fragmentation or the frequent disconnects and reconnects you have to face with sharding.

Herve Roggero (@hroggero) described Ways To Load Data In SQL Azure in a 2/7/2012 post:

This blog provides links to a presentation and a sample application that shows how to load data in SQL Azure using different techniques and tools. The presentation compares the following techniques: INSERT, BCP, INSERT BULK, SQLBulkCopy and a few tools like SSIS and Enzo Data Copy.

The presentation contains load tests performed from a local machine with 4 CPUs, using 8 threads (leveraging the Task Parallel Library), to a SQL Azure database using the code provided below. The test loads 40,000 records. Note, however, that the test was not conducted in a highly controlled environment, so results may vary. Still, enough differences were found to show a trend and demonstrate the speed of the SQLBulkCopy API versus other programmatic techniques.

You can download the entire presentation deck here: presentation (PDF)

Enzo Data Copy and SSIS

The presentation deck also shows that the Enzo Data Copy wizard loads data efficiently with large tables. However, it performs more slowly for very small databases. Enzo Data Copy is fast with larger databases because of its internal chunking algorithm and highly tuned parallel insert operations tailored for SQL Azure. In addition, Enzo Data Copy is designed to retry failed operations that could be the result of network connection issues or throttling; this resilience to connection issues ensures that large databases are more likely to be transferred successfully the first time. The Enzo Data Copy tool can be found here: Enzo Data Copy
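The general idea of chunking a load and retrying a failed chunk can be sketched as follows. This is not Enzo Data Copy's algorithm, which the post does not describe; `chunk`, `loadInChunks` and the `sendChunk` callback are hypothetical names, and the example runs synchronously with a simulated throttling error instead of a real database call.

```javascript
// Split rows into fixed-size chunks
function chunk(rows, size) {
    var chunks = [];
    for (var i = 0; i < rows.length; i += size) {
        chunks.push(rows.slice(i, i + size));
    }
    return chunks;
}

// Send each chunk, retrying a failed chunk up to maxAttempts times.
// sendChunk is a hypothetical callback that throws on a transient error.
// Returns the total number of send attempts made.
function loadInChunks(rows, size, sendChunk, maxAttempts) {
    var attemptsUsed = 0;
    chunk(rows, size).forEach(function (part) {
        for (var attempt = 1; ; attempt++) {
            attemptsUsed++;
            try {
                sendChunk(part);
                break; // chunk loaded, move on to the next one
            } catch (err) {
                if (attempt >= maxAttempts) { throw err; }
                // a real loader would wait (back off) here before retrying
            }
        }
    });
    return attemptsUsed;
}

// Usage: simulate one throttling error on the second send
var calls = 0;
var loaded = [];
function flakySend(part) {
    calls++;
    if (calls === 2) { throw new Error("simulated throttling"); }
    loaded = loaded.concat(part);
}

var attempts = loadInChunks([1, 2, 3, 4, 5], 2, flakySend, 3);
console.log(attempts, loaded); // 4 attempts for 3 chunks; all 5 rows loaded once
```

Retrying at chunk granularity means a transient failure costs one chunk's worth of work rather than the whole transfer, which is the property the paragraph above attributes to the tool's resilience.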

In this test, with SSIS left with its default configuration, SSIS was 25% slower than the Enzo Data Copy Wizard with 2,000,000 records to transfer. The SSIS Package created was very basic; the UseBulkInsertWhenPossible property was set to true which controls the use of the INSERT BULK command. Note that a more advanced SSIS developer will probably achieve better results by tuning the SSIS load; the comparison is not meant to conclude that SSIS is slower than the Enzo Data Copy utility; rather it is meant to show that the utility compares with SSIS in load times with larger data sets. Also note that the utility is designed to be a SQL Server Migration tool; not a full ETL product.

Note about the source code

Note that the source code is provided as-is for learning purposes. In order to use the source code you will need to change the connection string to a SQL Azure database, create a SQL Azure database if you don’t already have one, and create the necessary database objects (the T-SQL commands to run can be found in the code).

The code is designed to give you control over how many threads you want to use and the technique to load the data. For example, this command loads 40,000 records (1000 x 40) using 8 threads in batches of 1000: ExecuteWithSqlBulkCopy(1000, 40, 8); While this command loads 40,000 records (500 x 80) using 4 threads in batches of 500: ExecuteWithPROC(500, 80, 4);
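The relationship between the three arguments can be made explicit with a small calculation. The sketch below assumes, from the two examples above, that the arguments are batch size, number of batches and thread count, in that order; `planLoad` is a hypothetical helper, and the round-robin assignment of batches to threads is an assumption for illustration, not a description of how the downloadable code schedules work.

```javascript
// batchSize, batchCount and threads mirror the three arguments of the calls
// quoted above, as interpreted from the text; the helper itself is hypothetical.
function planLoad(batchSize, batchCount, threads) {
    var batchesPerThread = [];
    for (var t = 0; t < threads; t++) {
        batchesPerThread.push(0);
    }
    // assumed round-robin assignment of batches to threads
    for (var b = 0; b < batchCount; b++) {
        batchesPerThread[b % threads]++;
    }
    return {
        totalRecords: batchSize * batchCount,
        batchesPerThread: batchesPerThread
    };
}

var bulkCopyPlan = planLoad(1000, 40, 8); // ExecuteWithSqlBulkCopy(1000, 40, 8)
var procPlan = planLoad(500, 80, 4);      // ExecuteWithPROC(500, 80, 4)

console.log(bulkCopyPlan.totalRecords, bulkCopyPlan.batchesPerThread); // 40000, 5 batches on each of 8 threads
console.log(procPlan.totalRecords, procPlan.batchesPerThread);         // 40000, 20 batches on each of 4 threads
```

Both calls load the same 40,000 records; what differs is how many round trips each thread makes and how large each one is.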

Here is the link to the source code: source code

Here is a sample output of the code:

On the whole, George Huey’s SQL Azure Migration Wizard and SQL Azure Federations Data Migration Wizard are the best bet for uploading data with BCP from SQL Server to SQL Azure. See my Loading Big Data into Federated SQL Azure Tables with the SQL Azure Federation Data Migration Wizard v1.2 article of 1/17/2012 for more details.

Herve Roggero (@hroggero) explained How To Copy A Schema Container To Another SQL Azure Database in a 2/6/2012 post (missed when posted):

This article is written to assist SQL Azure customers in copying a SCHEMA container from one SQL Azure database to another. Schema separation (or compressed shard) is a technique used by applications that hold multiple customer “databases” inside the same physical database, but separated logically by a SCHEMA container. At times it may be necessary to copy a given SCHEMA container from one database to another. Copying a SCHEMA container from one database to another can be very difficult because you need to extract and import only the data contained in the tables found in that schema.

If your SQL Azure database has multiple schema containers in it, and you would like to copy the objects under one of those schemas to another database, you can do so by following the steps below. The article uses the Enzo Backup tool (http://bluesyntax.net/backup.aspx), designed to help in achieving this complex task.

Example Overview

Let’s assume you have a database with multiple SCHEMA containers and you would like to copy one of the schema containers to another database. A SCHEMA container holds tables, foreign keys, default constraints, indexes and more. In the screenshot below the selected database has 5 SCHEMA containers: the DBO schema and 4 custom SCHEMA containers. The tool allows you to copy the objects from any schema into another database.

Backing Up Your SCHEMA Container

The first step is to back up your schema. Enzo Backup gives you the options needed to back up a single SCHEMA container at a time.

- If you have registered your databases previously with the backup tool, select the database from the list of databases on the left pane, right-click on the SCHEMA container (i.e. your logical database) and select Backup to Blob (or file). Otherwise, click Backup –> To Blob Device from the menu, enter the server credentials, and specify the name of the SCHEMA to back up on the Advanced screen.

- Type the name of your backup device

- Optionally select a cloud agent if you are saving to a Blob on the Advanced tab (note: a cloud agent must be deployed separately)

- Click Start

Restoring Your SCHEMA Container

Once the backup is complete, you can restore the backup device to the database server of your choice. Note that you could restore to a local SQL Server database or to another SQL Azure database server.

- Click on Backups on the left pane to view your backup devices and find your device

- Right-click on your backup device (file or blob)

- Enter the credentials of the server you are restoring to and the name of the database

- If the database does not exist, select the Create If… option

- Optionally, if you are using a Blob device and restoring to a SQL Azure database, check the Use Cloud Agent (note: a cloud agent must be deployed separately)

- Click Start

If for some reason the database you are restoring to is not empty, you may see a warning indicating that the database has existing objects. Click “Yes” to continue. When the operation is complete, you can inspect your database to verify the presence of your logical database.

Considerations

- You can restore additional SCHEMA containers on an existing database. You would simply need to repeat the above steps for each SCHEMA container.

- If your intent was to “move” the SCHEMA container, you will need to clean up the original database. Once your SCHEMA container has been copied, and you have verified all your data is present in the destination database, you will need to manually drop all the objects in the source database before dropping the SCHEMA container.

- This tool does not support backing up SQL Server databases. However, you can restore a backup device created with Enzo Backup on a local SQL Server database if desired.
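
The manual cleanup described in the second consideration has to happen in dependency order: foreign keys first, then tables, then the SCHEMA container itself. The following Python sketch is one way to generate such a script. It is not part of Enzo Backup; the function name build_schema_cleanup and the sample object names are hypothetical, and it covers only tables and foreign keys (views, procedures and other objects in the schema would need their own DROP statements).

```python
def quote(name):
    """Bracket-quote a T-SQL identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_schema_cleanup(schema, foreign_keys, tables):
    """Return T-SQL statements that empty and then drop a SCHEMA container.

    foreign_keys: list of (table_name, constraint_name) pairs in the schema.
    tables: list of table names in the schema.
    """
    statements = []
    # 1. Drop foreign keys first so the tables can be dropped in any order.
    for table, constraint in foreign_keys:
        statements.append(
            f"ALTER TABLE {quote(schema)}.{quote(table)} "
            f"DROP CONSTRAINT {quote(constraint)};"
        )
    # 2. Drop the tables themselves.
    for table in tables:
        statements.append(f"DROP TABLE {quote(schema)}.{quote(table)};")
    # 3. Only an empty schema can be dropped, so this goes last.
    statements.append(f"DROP SCHEMA {quote(schema)};")
    return statements


# Hypothetical schema and object names, for illustration only.
for statement in build_schema_cleanup(
    "customer1",
    foreign_keys=[("orders", "FK_orders_customers")],
    tables=["orders", "customers"],
):
    print(statement)
```

Review the generated statements before running them against the source database, and run them only after the copy has been verified in the destination.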

<Return to section navigation list>

Marketplace DataMarket, Social Analytics and OData

Doug Mahugh (@dmahugh) described Open Source OData Tools for MySQL and PHP Developers in a 2/9/2012 post to the Interoperability@Microsoft blog:

To enable more interoperability scenarios, Microsoft has released today two open source tools that provide support for the Open Data Protocol (OData) for PHP and MySQL developers working on any platform.

The growing popularity of OData is creating new opportunities for developers working with a wide variety of platforms and languages. An ever increasing number of data sources are being exposed as OData producers, and a variety of OData consumers can be used to query these data sources via OData’s simple REST API.

In this post, we’ll take a look at the latest releases of two open source tools that help PHP developers implement OData producer support quickly and easily on Windows and Linux platforms:

- The OData Producer Library for PHP, an open source server library that helps PHP developers expose data sources for querying via OData. (This is essentially a PHP port of certain aspects of the OData functionality found in System.Data.Services.)

- The OData Connector for MySQL, an open source command-line tool that generates an implementation of the OData Producer Library for PHP from a specified MySQL database.

These tools are written in platform-agnostic PHP, with no dependencies on .NET.

OData Producer Library for PHP

Last September, my colleague Claudio Caldato announced the first release of the OData Producer Library for PHP, an open-source cross-platform PHP library available on Codeplex. This library has evolved in response to community feedback, and the latest build (Version 1.1) includes performance optimizations, finer-grained control of data query behavior, and comprehensive documentation.

OData can be used with any data source described by an Entity Data Model (EDM). The structure of relational databases, XML files, spreadsheets, and many other data sources can be mapped to an EDM, and that mapping takes the form of a set of metadata to describe the entities, associations and properties of the data source. The details of EDM are beyond the scope of this blog, but if you’re curious here’s a simple example of how EDM can be used to build a conceptual model of a data source.

The OData Producer Library for PHP is essentially an open source reference implementation of OData-relevant parts of the .NET framework’s System.Data.Services namespace, allowing developers on non-.NET platforms to more easily build OData providers. To use it, you define your data source through the IDataServiceMetadataProvider (IDSMP) interface, and then you can define an associated implementation of the IDataServiceQueryProvider (IDSQP) interface to retrieve data for OData queries. If your data source contains binary objects, you can also implement the optional IDataServiceStreamProvider interface to handle streaming of blobs such as media files.

Once you’ve deployed your implementation, the flow of processing an OData client request is as follows:

- The OData server receives the submitted request, which includes the URI to the target resource and may also include $filter, $orderby, $expand and $skiptoken clauses to be applied to the target resource.

- The OData server parses and validates the headers associated with the request.

- The OData server parses the URI to resource, parses the query options to check their syntax, and verifies that the current service configuration allows access to the specified resource.

- Once all of the above steps are completed, the OData Producer for PHP library code is ready to process the request via your custom IDataServiceQueryProvider and return the results to the client.
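
To make the flow above more concrete, here is a minimal Python sketch of the URI-handling steps: split the request URI into a resource path and query options, check the options, and verify that the requested resource is visible. It is an illustration only, not the library's code. The function name parse_odata_request, the visible_entity_sets parameter and the sample URI are assumptions, header validation is left out, and only the four query options named above are recognized.

```python
from urllib.parse import parse_qs, urlsplit

# Query options named in the text; a real service would accept more.
KNOWN_OPTIONS = {"$filter", "$orderby", "$expand", "$skiptoken"}


def parse_odata_request(uri, visible_entity_sets):
    """Split an OData request URI into (entity_set, query_options)."""
    parts = urlsplit(uri)

    # The last path segment is treated as the target resource.
    segments = [s for s in parts.path.split("/") if s]
    if not segments:
        raise ValueError("request URI has no resource path")
    entity_set = segments[-1]

    # Check the query options: known names only, each given once.
    options = {}
    for name, values in parse_qs(parts.query, keep_blank_values=True).items():
        if name.startswith("$") and name not in KNOWN_OPTIONS:
            raise ValueError(f"unsupported query option: {name}")
        if len(values) != 1:
            raise ValueError(f"query option given more than once: {name}")
        options[name] = values[0]

    # Verify that the service configuration exposes the resource.
    if entity_set not in visible_entity_sets:
        raise PermissionError(f"access to {entity_set} is not allowed")
    return entity_set, options


# Hypothetical service URI, for illustration only.
entity_set, options = parse_odata_request(
    "http://localhost/service.svc/Products?$filter=Price%20gt%2010&$orderby=Name",
    visible_entity_sets={"Products", "Categories"},
)
print(entity_set, options)
```

Only after these checks pass would the request be handed to the query provider.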

These processing steps are the same in .NET as they are in the OData Producer Library for PHP, but in the .NET implementation a LINQ query is generated from the parsed request. PHP doesn’t have support for LINQ, so the producer provides hooks which can be used to generate the PHP expression by default from the parsed expression tree. For example, in the case of a MySQL data source, a MySQL query expression would be generated.
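
As a rough illustration of that last point, the Python sketch below walks a tiny expression tree, such as one a parser might build from $filter=Price gt 10 and Category eq 'Books', and emits a MySQL WHERE expression. The node classes and the to_mysql function are hypothetical and are not the library's expression classes. Constants are emitted as %s placeholders (the style used by common Python MySQL drivers) rather than inlined into the SQL text.

```python
from dataclasses import dataclass


@dataclass
class Property:
    name: str


@dataclass
class Constant:
    value: object


@dataclass
class Binary:
    op: str  # an OData operator such as "gt", "eq" or "and"
    left: object
    right: object


# OData comparison and logical operators mapped to SQL operators.
SQL_OPERATORS = {
    "eq": "=", "ne": "<>", "gt": ">", "ge": ">=",
    "lt": "<", "le": "<=", "and": "AND", "or": "OR",
}


def to_mysql(node, params):
    """Translate an expression tree to SQL, collecting constants in params."""
    if isinstance(node, Property):
        # Backtick-quote the column name, escaping embedded backticks.
        return "`" + node.name.replace("`", "``") + "`"
    if isinstance(node, Constant):
        params.append(node.value)
        return "%s"
    if isinstance(node, Binary):
        left = to_mysql(node.left, params)
        right = to_mysql(node.right, params)
        return f"({left} {SQL_OPERATORS[node.op]} {right})"
    raise TypeError(f"unsupported node: {node!r}")


# Tree for: Price gt 10 and Category eq 'Books'
tree = Binary(
    "and",
    Binary("gt", Property("Price"), Constant(10)),
    Binary("eq", Property("Category"), Constant("Books")),
)
params = []
where_clause = to_mysql(tree, params)
print(where_clause, params)
```

Keeping the constants in a separate parameter list means the values taken from the request URI never become part of the SQL text.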

The net result is that PHP developers can offer the same querying functionality on Linux and other platforms as a .NET developer can offer through System.Data.Services. Here are a few other details worth noting:

- In C#/.NET, the System.Linq.Expressions namespace contains classes for building expression trees, and the OData Producer Library for PHP has its own classes for this purpose.

- The IDSQP interface in the OData Producer Library for PHP differs slightly from .NET’s IDSQP interface (due to the lack of support for LINQ in PHP).

- System.Data.Services uses WCF to host the OData provider service, whereas the OData Producer Library for PHP uses a web server (IIS or Apache) and urlrewrite to host the service.

- The design of Writer (to serialize the returned query results) is the same for both .NET and PHP, allowing serialization of either .NET objects or PHP objects as Atom/JSON.

For a deeper look at some of the technical details, check out Anu Chandy’s blog post on the OData Producer Library for PHP or see the OData Producer for PHP documentation available on Codeplex.

OData Connector for MySQL

The OData Producer for PHP can be used to expose any type of data source via OData, and one of the most popular data sources for PHP developers is MySQL. A new code generator tool, the open source OData Connector for MySQL, is now available to help PHP developers implement OData producer support for MySQL databases quickly and simply.

The OData Connector for MySQL generates code to implement the interfaces necessary to create an OData feed for a MySQL database. The syntax for using the connector is simple and straightforward:

php MySQLConnector.php /db=mysqldb_name /srvc=odata_service_name /u=db_user_name /pw=db_password /h=db_host_name

The MySQLConnector generates an EDMX file containing metadata that describes the data source, and then prompts the user for whether to continue with code generation or stop to allow manual editing of the metadata before the code generation step.

EDMX is the Entity Data Model XML format, and an EDMX file contains a conceptual model, a storage model, and the mapping between those models. In order to generate an EDMX from a MySQL database, the OData Connector for MySQL needs to be able to do database schema introspection, and it does this through the Doctrine DBAL (Database Abstraction Layer). You don’t need to understand the details of EDMX in order to use the OData Connector for MySQL, but if you’re curious see the .edmx File Overview article on MSDN.

If you’re familiar with EDMX and wish to have very fine-grained control of the exposed OData feeds, you can edit the metadata as shown in the diagram, but this step is not necessary. You can also set access rights for specific entities in the DataService::InitializeService method after the code has been generated, as described below.

If you stopped the process to edit the EDMX, one additional command is needed to complete the generation of code for the interfaces used by the OData Producer Library for PHP:

php MySQLConnector.php /srvc=odata_service_name

Note that the generated code will expose all of the tables in the MySQL database as OData feeds. In a typical production scenario, however, you would probably want to fine-tune the interface code to remove entities that aren’t appropriate for OData feeds. The simplest way to do this is to use the DataServiceConfiguration object in the DataService::InitializeService method to set the access rights to NONE for any entities that should not be exposed. For example, you may be creating an OData provider for a CMS, and you don’t want to allow OData queries against the table of users, or tables that are only used for internal purposes within your CMS.
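
The idea of hiding entities by setting their access rights to NONE can be sketched in a few lines of Python. This is only a model of the concept, not the PHP library's DataServiceConfiguration API: the class and method names are hypothetical, and the sketch assumes that every entity set is readable unless overridden, mirroring the statement that the generated code exposes all tables.

```python
from enum import Enum


class Rights(Enum):
    NONE = "none"          # entity set is hidden from OData clients
    READ_ALL = "read_all"  # entity set can be queried


class ServiceConfig:
    """Per-entity-set access rights with a service-wide default."""

    def __init__(self, default=Rights.READ_ALL):
        self._default = default
        self._overrides = {}

    def set_entity_set_access(self, name, rights):
        self._overrides[name] = rights

    def is_exposed(self, name):
        return self._overrides.get(name, self._default) is not Rights.NONE

    def exposed(self, entity_sets):
        """Return only the entity sets that clients may query."""
        return [name for name in entity_sets if self.is_exposed(name)]


# Hypothetical CMS tables: hide the users table, keep the rest.
config = ServiceConfig()
config.set_entity_set_access("users", Rights.NONE)
print(config.exposed(["posts", "users", "comments"]))
```

In the CMS example from the text, the users table and any internal tables would get the NONE override while content tables keep the default.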

For more detailed information about working with the OData Connector for MySQL, refer to the user guide available on the project site on Codeplex.

These tools are open-source (BSD license), so you can download them and start using them immediately at no cost, on Linux, Windows, or any PHP platform. Our team will continue to work to enable more OData scenarios, and we’re always interested in your thoughts. What other tools would you like to see available for working with OData?

<Return to section navigation list>

Windows Azure Access Control, Service Bus and Workflow

• Edo van Asseldonk (@EdoVanAsseldonk) posted Azure webrole federated by ACS on 2/9/2012:

Problem

If you deploy a Web Role secured with ACS, and you have two or more instances of your Web Role, you probably have seen this error message: Key not valid for use in specified state

The problem is that the cookie created when you log in with ACS is encrypted with the machine key by default. If the cookie is created by one of the instances, it cannot be read by the other instances because their machine keys are different.
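
The failure can be reproduced in miniature with a few lines of Python. This is a sketch under stated assumptions rather than what WIF or ACS actually do: the cookie is protected with an HMAC signature from the standard library instead of real encryption, and the function names and keys are hypothetical. It shows that a cookie protected with one instance's key is rejected by an instance holding a different key, and accepted everywhere when the instances share a key.

```python
import hashlib
import hmac
import os

TAG_LENGTH = 32  # size of a SHA-256 digest in bytes


def protect(key, payload):
    """Prefix the payload with an HMAC tag computed with the given key."""
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return tag + payload


def unprotect(key, cookie):
    """Return the payload if the tag matches the key, else raise ValueError."""
    tag, payload = cookie[:TAG_LENGTH], cookie[TAG_LENGTH:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("key not valid for this cookie")
    return payload


# Two instances, each with its own machine key (the default situation).
key_instance_1 = os.urandom(32)
key_instance_2 = os.urandom(32)

cookie = protect(key_instance_1, b"user=alice")
print(unprotect(key_instance_1, cookie))  # the issuing instance can read it
try:
    unprotect(key_instance_2, cookie)     # the other instance cannot
except ValueError as error:
    print("instance 2:", error)

# With one key known to all instances, any instance can read the cookie.
shared_key = os.urandom(32)
cookie = protect(shared_key, b"user=alice")
print(unprotect(shared_key, cookie))
```

Because the load balancer can send consecutive requests to different instances, the error appears intermittently rather than on every request.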

Solution

There is a solution to this: encrypt the cookie using a certificate that is known to all instances.

How to

I'll show you how a self-signed certificate can be used to encrypt the ACS cookie.

Create a certificate

First, on your dev machine open IIS and create a self-signed certificate. This certificate can be used on your Azure development environment as well as on the staging and production environment.

Give rights on certificate

To be able to use it on your development machine do the following:

- Go to MMC to view the certificates in the Personal store of Local Computer. Right click on the created certificate and choose [All Tasks] [Manage Private Keys...].

- Add the username 'Network Service' with read rights. Network Service is the account under which the Azure simulation environment is executing. It now has rights to read the self-signed certificate.

Add certificate to config

Next we have to use this certificate in our Web Role. Open the ServiceDefinition.csdef file. Add the following code under the WebRole node:

<Certificates>
  <Certificate name="CookieEncryptionCertificate"
               storeLocation="LocalMachine"
               storeName="My" />
</Certificates>

Now your definition file should look something like this:

<?xml version="1.0" encoding="utf-8"?>
<ServiceDefinition name="AutofacTestAzure"
                   xmlns="http://schemas.micros[...]">
  <WebRole name="[name]" vmsize="Small">
    <Certificates>
      <Certificate name="CookieEncryptionCertificate"
                   storeLocation="LocalMachine"
                   storeName="My" />
    </Certificates>
    ...
  </WebRole>
</ServiceDefinition>

Add the following text to all cscfg-files where you want to use the certificate:

<Certificates>

<Certificate name="CookieEncryptionCertificate" thumbprint="[thumbprint]" thumbprintAlgorithm="sha1" />