Windows Azure and Cloud Computing Posts for 9/3/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: Twitter’s @alias links are finally working again!

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Avkash Chauhan described Windows Azure Storage REST API: Conditional PUT operations by using the If-Match request header in a 9/4/2011 post:

As you may know, the Windows Azure storage service REST API lets you perform conditional PUT operations by sending the If-Match request header from your client code.

Here is an interesting scenario:

You have two clients, and both are modifying the same resource by sending the same If-Match header. Let's assume that both client requests reach the Azure service at exactly the same time. What will happen?

You might want to know how Windows Azure handles this concurrency control in a distributed environment.

Here are the details:

The correct answer is "only one client will succeed". In the above scenario, it is certain that only one client succeeds, and the other client receives a 412 (Precondition Failed) response.

This is because each update creates a new ETag for the object, so only one update will match and have its conditional operation succeed; the other one gets a Precondition Failed response.

Each update is a single transaction, so the concurrent operations will behave as though one of them happened after the other.
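
The behavior described above can be illustrated with a small in-memory simulation. This is only a sketch, not the storage service's implementation: `FakeBlobStore`, `conditional_put` and `race` are hypothetical names, and a lock stands in for the "each update is a single transaction" guarantee.

```python
import threading
import uuid


class PreconditionFailed(Exception):
    """Stands in for an HTTP 412 (Precondition Failed) response."""


class FakeBlobStore:
    """Hypothetical in-memory stand-in for one stored resource and its ETag."""

    def __init__(self, content):
        self._lock = threading.Lock()   # serializes updates, like a transaction
        self.content = content
        self.etag = uuid.uuid4().hex

    def conditional_put(self, new_content, if_match):
        with self._lock:
            if if_match != self.etag:
                raise PreconditionFailed("412")
            self.content = new_content
            self.etag = uuid.uuid4().hex    # every successful update yields a new ETag
            return self.etag


def race(store, writers):
    """Send one conditional PUT per writer, all carrying the same If-Match value."""
    shared_etag = store.etag
    results = {}

    def attempt(name):
        try:
            store.conditional_put("written by " + name, shared_etag)
            results[name] = "success"
        except PreconditionFailed:
            results[name] = "412"

    threads = [threading.Thread(target=attempt, args=(w,)) for w in writers]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


store = FakeBlobStore("original")
outcome = race(store, ["client-a", "client-b"])
print(sorted(outcome.values()))
```

Whichever thread acquires the lock first wins and changes the ETag, so the second writer's If-Match value no longer matches and it is rejected.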

Avkash Chauhan explained Performing conditional update[s] and/or batch update[s] with Windows Azure Table Storage in a 9/3/2011 post:

Here is a Windows Azure Table update scenario that I found useful enough to share:

Your objective is to write some code that does either batch updates or individual updates in Windows Azure tables, with or without an ETag.

To handle both the batch update and the individual update process, you will have two threads.

You created a list of entities and kept them in memory. Let’s assume that you don’t have an ETag associated with them.

Thread 1: Batch Update Thread based on Timer:

    foreach (Job job in jobsToUpdate)
    {
        job.JobInvalidAtUtc = DateTime.UtcNow + JobSystemConfiguration.InvalidAfterInSec;
        tableContext.UpdateObject(job);
    }

    try
    {
        // ContinueOnError specifies that subsequent operations are attempted even if an
        // error occurs in one of the operations.
        DataServiceResponse response = tableContext.SaveChangesWithRetries(SaveChangesOptions.ContinueOnError);
    }
    catch (DataServiceRequestException ex)
    {
        // Pre-condition failed (412) means some other thread is modifying the same job;
        // anything else is traced and rethrown.
        if (ex.Response.First().StatusCode != 412)
        {
            tracker.TraceEvent(JobDiag.ErrorTraceCode.HeartBeatUpdateError, ex);
            throw;
        }
    }
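
The catch block above inspects only the first operation response. When later operations are still attempted after an error, it can be useful to look at every per-operation status. The following is a language-neutral sketch of that idea in Python; `classify_batch` and the list of status codes are hypothetical and not part of the storage client library.

```python
def classify_batch(status_codes):
    """Split per-operation HTTP status codes from a continue-on-error save.

    Returns (conflicts, failures): indexes of operations that got 412
    (another writer changed the entity first) and indexes of any other
    status >= 400, which deserve logging or a rethrow.
    """
    conflicts, failures = [], []
    for index, status in enumerate(status_codes):
        if status == 412:
            conflicts.append(index)
        elif status >= 400:
            failures.append(index)
    return conflicts, failures


# Hypothetical outcome of saving four entity updates in one pass.
conflicts, failures = classify_batch([204, 412, 204, 500])
print(conflicts, failures)
```

Here the second operation is a tolerable concurrency conflict, while the fourth is a genuine failure that should not be swallowed.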

Thread 2: Individual entity update thread when called:

    context.AttachTo(tableName, entity, "*");

With Thread 2, it is quite possible that you will receive a “The context is not currently tracking the entity” error, because you don’t have the entity’s ETag in memory.

The best practice for handling the above scenario is to:

- Always use .AttachTo(), whether you are using “*” as the ETag or keeping track of the actual ETag

- Keep the ETag whenever you are writing a conditional update process, because you’ll need it
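
The difference between a wildcard and a specific ETag can be sketched with a toy table. This is an assumption-laden illustration, not the table service: `FakeEntityTable` is hypothetical and uses a simple version counter as the ETag.

```python
class PreconditionFailed(Exception):
    """Stands in for an HTTP 412 response."""


class FakeEntityTable:
    """Hypothetical in-memory table keyed by (PartitionKey, RowKey)."""

    def __init__(self):
        self._rows = {}      # key -> (version number used as ETag, properties)

    def insert(self, key, properties):
        self._rows[key] = (1, dict(properties))
        return "1"

    def update(self, key, properties, if_match):
        version, _ = self._rows[key]
        # "*" means "update regardless of the stored ETag".
        if if_match != "*" and if_match != str(version):
            raise PreconditionFailed(key)
        self._rows[key] = (version + 1, dict(properties))
        return str(version + 1)


table = FakeEntityTable()
key = ("jobs", "job-1")
etag = table.insert(key, {"state": "queued"})

table.update(key, {"state": "running"}, if_match="*")     # unconditional: succeeds
try:
    table.update(key, {"state": "done"}, if_match=etag)   # stale ETag: rejected
    stale_rejected = False
except PreconditionFailed:
    stale_rejected = True
print(stale_rejected)
```

The wildcard update always goes through (last writer wins), whereas the update carrying the original ETag is rejected because the entity has changed in the meantime.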

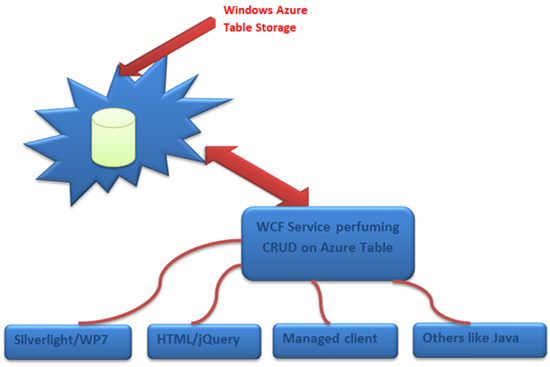

Dhananjay Kumar explained how to perform CRUD operation[s] on Windows Azure table storage as WCF Service in a 9/3/2011 post:

You may have come across the requirement of performing CRUD operations on Windows Azure table storage many times. If you encapsulate the functions that perform CRUD operations on Azure table storage as operation contracts of a WCF service, then any type of client can work against Azure table storage.

You may want to architect your application as below:

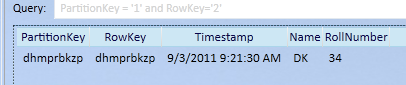

Assume we have an Azure table called Student, as below:

There are 5 columns

- Partition Key

- Row Key

- Timestamp

- Name

- RollNumber

We need to perform CRUD operations on the Student table.

Creating Data Contract

First, let us create a DataContract representing the Azure table.

    [DataContract]
    [DataServiceEntity]
    public class Student
    {
        [DataMember]
        public string RollNumber { get; set; }

        [DataMember]
        public string Name { get; set; }

        public string PartitionKey { get; set; }
        public string RowKey { get; set; }
    }

There are two points to be noted in the above Data Contract:

- The Student class is attributed with DataServiceEntity

- PartitionKey and RowKey are not exposed as Data Members. Their values are set only on the service side, using random strings.

Creating Operation Contract

    [ServiceContract]
    public interface IService1
    {
        [OperationContract]
        List<Student> GetStudents();

        [OperationContract]
        bool InsertStudent(Student s);

        [OperationContract]
        bool DeleteStudent(string RollNumber);

        [OperationContract]
        bool UpdateStudent(string RollNumber);
    }

We have created four operation contracts, one for each CRUD operation.

Implementing Service

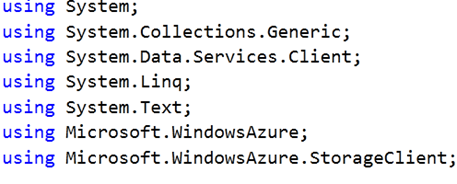

Before implementing the service, add the below references to the project:

- Microsoft.WindowsAzure.DevelopmentStorage.Store.dll

- Microsoft.WindowsAzure.StorageClient.dll

- System.Data.Services.Client.dll

Make sure to pass your own Azure storage connection string to Parse.

Include below namespaces,

Retrieving records

    public List<Student> GetStudents()
    {
        CloudStorageAccount account = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName=uraccount;AccountKey=abcdefgh=");
        DataServiceContext context = account.CreateCloudTableClient().GetDataServiceContext();
        var result = from t in context.CreateQuery<Student>("Student") select t;
        return result.ToList();
    }

This is a simple LINQ query retrieving all the records.

Insert Entity

    public bool InsertStudent(Student s)
    {
        try
        {
            CloudStorageAccount account = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName=uraccount;AccountKey=abcdefgh=");
            DataServiceContext context = account.CreateCloudTableClient().GetDataServiceContext();
            s.PartitionKey = RandomString(9, true);
            s.RowKey = RandomString(9, true);
            context.AddObject("Student", s);
            context.SaveChanges();
            return true;
        }
        catch (Exception)
        {
            return false;
        }
    }

We are putting random strings as the values of the partition key and row key.

The random string function is taken from Mahesh Chand's article and is as below:

    private string RandomString(int size, bool lowerCase)
    {
        StringBuilder builder = new StringBuilder();
        Random random = new Random();
        char ch;
        for (int i = 0; i < size; i++)
        {
            ch = Convert.ToChar(Convert.ToInt32(Math.Floor(26 * random.NextDouble() + 65)));
            builder.Append(ch);
        }
        if (lowerCase)
            return builder.ToString().ToLower();
        return builder.ToString();
    }

Update Entity

    public bool UpdateStudent(string RollNumber)
    {
        try
        {
            CloudStorageAccount account = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName=uraccount;AccountKey=abcdefgh=");
            DataServiceContext context = account.CreateCloudTableClient().GetDataServiceContext();
            // Fetch the entity whose roll number matches the argument.
            Student result = (from t in context.CreateQuery<Student>("Student")
                              where t.RollNumber == RollNumber
                              select t).FirstOrDefault();
            result.Name = "UpdatedName";
            context.UpdateObject(result);
            context.SaveChanges();
            return true;
        }
        catch (Exception)
        {
            return false;
        }
    }

We are passing the roll number to update. First we fetch the entity to be updated and then we update it.

Delete Entity

    public bool DeleteStudent(string RollNumber)
    {
        try
        {
            CloudStorageAccount account = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName=uraccount;AccountKey=abcdefgh=");
            DataServiceContext context = account.CreateCloudTableClient().GetDataServiceContext();
            // Fetch the entity whose roll number matches the argument.
            Student result = (from t in context.CreateQuery<Student>("Student")
                              where t.RollNumber == RollNumber
                              select t).FirstOrDefault();
            context.DeleteObject(result);
            context.SaveChanges();
            return true;
        }
        catch (Exception)
        {
            return false;
        }
    }

We are passing the roll number to delete. First we fetch the entity to be deleted and then we delete it.

Consuming at managed client

Get the Students

    Service1Client proxy = new Service1Client();
    var result = proxy.GetStudents();
    foreach (var r in result)
    {
        Console.WriteLine(r.Name);
    }

Insert Student

    Service1Client proxy = new Service1Client();
    bool b = proxy.InsertStudent(new Student { RollNumber = "34", Name = "DK" });
    if (b)
        Console.WriteLine("Inserted");
    else
        Console.WriteLine("Sorry");

Update Student

    Service1Client proxy = new Service1Client();
    bool b2 = proxy.UpdateStudent("34");
    if (b2)
        Console.WriteLine("Updated");
    else
        Console.WriteLine("Sorry");

Delete Student

    Service1Client proxy = new Service1Client();
    bool b1 = proxy.DeleteStudent("34");
    if (b1)
        Console.WriteLine("Deleted");
    else
        Console.WriteLine("Sorry");

This is all you have to do to perform CRUD operations on an Azure table.


SQL Azure Database and Reporting

Pinal Dave and Dhananjay Kumar posted A Quick Look at Cloud and SQL Azure to the ThinkDigit blog on 8/28/2011 (missed when published):

What is a Cloud Database?

Cloud databases are a hot new idea in the world of computing. Everyone is talking about the “cloud” and how it is going to revolutionize database storage. But what is a cloud database, really?

The short answer is that “the cloud” refers to the internet. This is because the fuzzy edges of the cloud are reminiscent of the fuzzy edges of the internet, and how there is no defining line between the internet and your network. This can obviously cause some security problems, but software companies are beginning to take advantage of this fuzzy nature of the internet.

This means that a Cloud Database is your database storage, hosted on the internet. This sounds redundant, so let me explain. Software companies like Microsoft are now offering services that meet your database needs using abstracted servers and server hosts, rather than requiring you to buy a program and provide your own server. This not only means you have one less expense, but depending on your storage needs you can even choose a “pay-as-you-go” option that limits your daily use costs as well.

Saving money isn’t the only appeal of a Cloud Database. For companies that want to begin serving their customers quickly without all the programming and patching issues, a cloud database is the perfect option. For Microsoft’s SQL Azure at least, this program comes with tech support and professionals who will be working behind the scenes to make sure everything runs smoothly.

There are many, many cloud database options out there, but today I will be focusing on Microsoft’s SQL Azure.

Source: Microsoft Developer Network

Why Use Windows Azure?

With all the database choices available today, what are the perks of using Windows Azure?

First of all, you can direct your resources towards more important areas, and away from buying expensive equipment. Also, Windows Azure offers automated service management, which will help you save time on repairs and protect you in case of a hardware failure.

Windows Azure is also easy to use. It features the same type of tools (Eclipse, Visual Studio) that you know and love, so you don’t have to learn new programs. Even better, Azure allows you to debug your applications before going live.

Windows Azure is compatible with the same programs you already know how to use. You can still create applications in Java or PHP. Best of all, with Windows Azure your business can become known worldwide. It features a global datacenter and CDN footprint so that you can take advantage of partner offers and programs.

What is SQL Azure?

SQL Azure is a cloud-based service from Microsoft. SQL Azure delivers cloud database services which enable you to focus on your application, instead of building, administering and maintaining databases. Put very generically, SQL Azure lets you create databases in the cloud.

SQL Azure will replace physical servers because it uses databases located all over the globe. This will not only speed up access for your users, but you will no longer have hardware costs eating up all your profits. And because SQL Azure features pay-as-you-go pricing, you can customize your usage to meet your needs. It is all highly affordable.

SQL Azure has been designed to make your life easier. Software installation and patching is included, and fault tolerance is built in. There is also a web-based database manager which will allow you to manage and administer your data quickly and easily. SQL Azure is compatible with T-SQL and earlier SQL Server models.

Given the compatibility between SQL Server and SQL Azure, there has been a lot of confusion about when to use Azure, and whether you should switch if you are already comfortable with SQL Server. To put it simply, SQL Server is a more traditional database server while SQL Azure is a cloud database – using the internet to act as your servers. Even better, SQL Azure features pay-as-you-go or a one-time “commitment” fee.

SQL Server is a program that an individual or company purchases and can be used on a variety of hardware – which is then hosted by a Service Provider or Hosting Partner. On the other hand, SQL Azure is a service that users can purchase in order to focus more on building up their business, rather than worrying about (and paying for) expensive hardware and the requisite management, programming, and patching.

SQL Azure is perfect for businesses that are looking for a database solution with a lot of “behind the scenes” support. There are still developers and IT technicians who will be installing and overseeing your database, so you know that things will be running smoothly. SQL Azure is also the perfect option if you’re looking to quickly start serving your customers without the large expense of a traditional server and all the software.

For more information, check out these helpful links: http://shannonlowder.com/2010/05/what-is-sql-azure/ and http://www.microsoft.com/windowsazure/sqlazure/database/.

Creating Database in SQL Azure

Creating a database in SQL Azure is a very easy process. Here it is described in a few basic steps.

1. Log in to the SQL Azure portal at https://sql.azure.com/ with your Live credentials.

2. Click on the SQL Azure tab and select Project.

3. Click on the project; this lists all the databases on SQL Azure.

4. Create a database by clicking ‘Create Database’.

5. While creating the database, the user has two options for editions: 1) Web Edition and 2) Business Edition. Choose the appropriate edition and your database is ready to use.

The basic difference between the editions is size. Web Edition supports up to 5 GB and Business Edition supports up to 50 GB. Here is a quick list of the salient features of both editions.

Web Edition Relational Database includes:

- Up to 5 GB of T-SQL based relational database

- Self-managed DB, auto high availability and fault tolerance

- Support for existing tools like Visual Studio, SSMS, SSIS, BCP

- Best suited for Web applications and departmental custom apps

Business Edition DB includes:

- Up to 50 GB of T-SQL based relational database

- Self-managed DB, auto high availability and fault tolerance

- Additional features in the future like auto-partition, CLR, fan-outs, etc.

- Support for existing tools like Visual Studio, SSMS, SSIS, BCP

- Best suited for SaaS ISV apps, custom Web applications and departmental apps

The new SQL Azure portal has a great Silverlight-based user interface, and many more operations can be performed through the UI. There is a new database manager that allows us to perform operations at the table and row level.

Now we can perform many more operations through the database option of the Windows Azure portal:

- Create a database

- Create/delete a table

- Create/edit/delete rows of a table

- Create/edit stored procedures

- Create/edit views

- Create/execute queries

In this way, you can perform almost all the basic operations from the new SQL Azure Database Manager.

For security, we can configure firewall rules as well. By default, a database created on SQL Azure is blocked by the firewall for security reasons. Any attempt at external access, or access from any other Azure application, is blocked by the firewall.

Connecting from a Local system

When we want to connect to SQL Azure from a network system or local system, we need to configure the firewall at the local system: we need to create an exception for port 1433 at the local firewall.

Connecting from the Internet

A request to connect to SQL Azure from the Internet is blocked by the SQL Azure firewall unless a firewall rule allows it. When a request comes from the Internet:

- SQL Azure checks the IP address of the system making the request

- If the IP address falls within a range of IP addresses set as a firewall rule in the SQL Azure portal, then the connection is established.
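
The range check described above can be sketched in a few lines. This is only an illustration of the idea, not SQL Azure's implementation: `is_allowed` and the rule list are hypothetical, and the addresses come from ranges reserved for documentation.

```python
import ipaddress


def is_allowed(client_ip, rules):
    """Return True if client_ip lies inside any (start, end) rule, inclusive."""
    address = ipaddress.ip_address(client_ip)
    for start, end in rules:
        if ipaddress.ip_address(start) <= address <= ipaddress.ip_address(end):
            return True
    return False


# Hypothetical firewall rules: one ten-address range and one single address.
rules = [("203.0.113.10", "203.0.113.20"), ("198.51.100.7", "198.51.100.7")]
print(is_allowed("203.0.113.15", rules))
print(is_allowed("203.0.113.21", rules))
```

The first address falls inside the first rule and would be let through; the second lies just outside every rule and would stay blocked.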

Firewall rules can be added, updated and deleted in two ways:

- Using the SQL Azure Portal

- Using the SQL Azure API

SQL Azure Architecture

SQL Azure resides in Microsoft data centers and provides a relational database to applications through four layers of abstraction.

The four layers of abstraction are as follows:

Client Layer

This layer is closest to the application. SQL Azure connects to client applications with a Tabular Data Stream (TDS) interface in exactly the same way SQL Server does. The client layer can range from a managed .NET application to a PHP application. It could be a Silverlight web application or a WCF Data Service. The client layer connects with the other layers using Tabular Data Stream. The client layer may be in a client data center (on-premises) or in the data center of a service provider (cloud).

Service Layer

The service layer performs the task of provisioning the database to the user corresponding to an Azure account. It mainly performs:

- Provisioning of databases to an Azure account

- Billing of usage

It also routes connections between client applications in the client layer and the actual physical SQL Server in the platform layer.

Platform Layer

Physically, SQL Server resides in this layer. There can be many instances of SQL Server.

SQL Azure Fabric manages the different SQL Servers.

Tasks performed by SQL Azure Fabric:

- It enables automatic failover

- It does load balancing

- It does automatic replication between physical servers

Each SQL Server instance is individually managed by SQL Azure Fabric.

Management Services performs these tasks:

- Monitoring the health of individual servers

- Installing patches

- Upgrading services

- Automated installations

Infrastructure Layer

In this layer, IT-level administration tasks are performed. Physical hardware and operating systems are administered in this layer.

Scalability in SQL Azure

Federations are introduced in SQL Azure for scalability. Federations help both admins and developers scale data. They help admins by making repartitioning and redistribution of data easier. They help developers with the routing layer and the sharding of data, and they allow rerouting without application downtime.

A Federation is the basic scale-out object in a SQL Azure database. Federations hold the partitioned data. There can be multiple Federations within a database, and each Federation represents a different distribution scheme.

We create Federations based on different distribution schemes and requirements. The Student table and Grades table of a School database may have different distribution requirements, so they are put into different Federations.

Each Federation object scales out data to many system-managed nodes. A Federation object contains a distribution scheme and range, a distribution key and its data type.

Federation members are managed directly by the federation while data is being partitioned. All Federation objects are stored in a central system database called the Federation Root. Each Federation object is identified by a Federation distribution key. The Federation key is used for partitioning the data, and it represents the distribution style and data domain.

Data is scaled out in atomic units. All data of a particular atomic unit sticks together: atomic units can be separated from one another, but all the rows with a particular partition key value stay together and are distributed together. An atomic unit corresponds to one instance of a Federation key value.
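
To make the idea of range-based distribution and atomic units concrete, here is a minimal sketch. It is not how SQL Azure implements Federations: the `Federation` class, its split points and the non-negative integer key are assumptions chosen for illustration.

```python
import bisect


class Federation:
    """Hypothetical range-partitioned federation over a non-negative integer key."""

    def __init__(self, split_points):
        # Member i holds keys in [boundaries[i], boundaries[i + 1]).
        self.boundaries = [0] + sorted(split_points)
        self.members = [dict() for _ in self.boundaries]

    def member_for(self, key):
        """Route a federation key value to the index of the member that owns it."""
        return bisect.bisect_right(self.boundaries, key) - 1

    def insert(self, key, row):
        # Rows sharing a key (one atomic unit) always land on the same member.
        self.members[self.member_for(key)].setdefault(key, []).append(row)


students = Federation(split_points=[100, 200])
students.insert(42, "grade A")
students.insert(42, "grade B")
students.insert(150, "grade C")
print(students.member_for(42), students.member_for(150), students.member_for(200))
```

Both rows for key 42 end up on the same member, which is the "atomic units stay together" property, while key 150 is routed to a different member by its range.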

Billing of SQL Azure

Web edition databases are billed at the 1GB rate for databases below 1GB of total data or at the 5GB rate for databases between 1GB and 5GB in size.

Business edition databases are billed at 10GB increments (10GB, 20, 30, 40 and 50GB).

On any particular day, billing is based on the peak DB size. To understand billing better, let us look at an example. Suppose there is a Web Edition DB with a maximum size of 5 GB. If database usage on a particular day is 800 MB, you will be charged for 1 GB on that day. If usage increases to 2 GB the next day, you will be charged for 5 GB. In the Business edition the charging window is 10 GB.
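
The billing tiers above reduce to a small calculation. The sketch below encodes only the size tiers described in this article, not actual prices; `billed_gb` is a hypothetical helper, and treating a peak of exactly 1 GB as the 1 GB tier is an assumption, since the text does not say which side that boundary falls on.

```python
import math


def billed_gb(edition, peak_gb):
    """Return the size tier (in GB) charged for one day's peak database size."""
    if edition == "web":
        if peak_gb > 5:
            raise ValueError("Web Edition databases are capped at 5 GB")
        return 1 if peak_gb <= 1 else 5
    if edition == "business":
        if peak_gb > 50:
            raise ValueError("Business Edition databases are capped at 50 GB")
        # Round up to the next 10 GB increment, with a 10 GB minimum.
        return max(10, math.ceil(peak_gb / 10) * 10)
    raise ValueError("unknown edition: " + edition)


print(billed_gb("web", 0.8))        # the 800 MB day from the example
print(billed_gb("web", 2))          # the 2 GB day from the example
print(billed_gb("business", 12))
```

The first two calls reproduce the worked example (1 GB, then 5 GB), and a 12 GB Business Edition peak rounds up to the 20 GB tier.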

SQL Azure introduces two dynamic management views: database_usage and bandwidth_usage. These views can be queried with T-SQL for billing and bandwidth information.

Summary

So how do you know if SQL Azure is right for you? If you're a small company and don’t want to worry about learning all about networks and programming and server needs – SQL Azure will have you covered. If your customers have begun complaining about loading speed, the multiple servers of a cloud database will help and you should check out SQL Azure. If you’re interested in making your business a global enterprise, SQL Azure will also help with this.

A simple explanation of SQL Azure is that it is a database storage provider. It is not a licensed program that requires your own servers, but a service provider that creates “virtual” databases for you and even helps you administer them. It cuts down on the costs of running a business and the headaches associated with database creation.

Creating a database using SQL Azure is very simple, and your knowledge from other products like SQL Server will transfer over. Azure also features easily customized security options that you can set with a few clicks of the mouse. One of the most popular features of SQL Azure is the pay-as-you-go option, which breaks your daily usage down into easily billable units.

Cloud databases like SQL Azure are a great new option for small businesses, or anyone looking to streamline their storage processes. SQL Azure has the edge that it is easy to use, has great support, and is backed by a reputable company like Microsoft.

Pinal Dave is a Microsoft Evangelist (Database and BI) and primarily writes at http://blog.sqlauthority.com. Dhananjay Kumar is a Microsoft MVP and primarily writes at http://debugmode.net/.


Marketplace DataMarket and OData

Pete Zerger posted Exploring the Orchestrator 2012 Web Service via OData PowerShell Explorer to the System Center Central blog on 9/3/2011:

Orchestrator’s new secret weapon for a dynamic private cloud? I’m here to tell you it’s going to be the OData web service. The capabilities of Orchestrator and runbooks are great, but they are more powerful when easily accessible from other applications. This web service enables not only the external and programmatic initiation of runbooks, but also the tracking of runbook progress. In this post, we will explore what information is exposed through this web service via PowerShell…actually, via a PowerShell-generated GUI called the OData PowerShell Explorer, created by PowerShell MVP Doug Finke. Some people have trouble getting the OData PowerShell Explorer to work on the first try, so I will also provide a tip to help you get past the most common issue.

- What you will need

- WCF Data Services in 100 words or less (the short version)

- Prepping your PowerShell instance for the OData PowerShell Explorer

- Downloading and preparing OData PowerShell Explorer for the Orchestrator Web Service

- Launching OData Explorer and Browsing the Orchestrator Web Service

- What this reveals about the coming System Center 2012 wave

What you will need

To duplicate what is explained in this article, you will need:

- An instance of System Center Orchestrator 2012 (with multiple runbooks imported and run one or more times)

- A copy of the OData PowerShell Explorer

- A PowerShell instance running in STA mode

We’ll start with a bit of background info and explanation before we begin exploration.

WCF Data Services in 100 words or less (the short version)

What is an OData web service? Here is a short explanation…

Disclaimer: I did not actually count the words, but it’s a pretty short description. Any words over 100 are yours at no extra charge!

WCF Data Services enables you to create and consume Open Data Protocol (OData) services in your application. OData exposes your data as resources that are addressable by URIs, allowing you to access and change data by using the semantics of representational state transfer (REST), specifically the standard HTTP verbs of GET, PUT, POST, and DELETE.
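
As a rough illustration of "resources addressable by URIs", the sketch below builds OData-style request URIs with the standard library. The service root, the `Runbooks` collection path and the `Name` property are placeholders chosen for this example, not the actual Orchestrator endpoint; `$top` and `$filter` are standard OData system query options.

```python
from urllib.parse import quote, urlencode


def odata_url(service_root, collection, **options):
    """Build a URI for a collection, optionally with system query options."""
    url = service_root.rstrip("/") + "/" + collection
    if options:
        # System query options such as $filter and $top carry a "$" prefix.
        query = {"$" + name: value for name, value in options.items()}
        url += "?" + urlencode(query, safe="$", quote_via=quote)
    return url


ROOT = "http://example.invalid/Service.svc"   # hypothetical service root
print(odata_url(ROOT, "Runbooks"))
print(odata_url(ROOT, "Runbooks", top=5, filter="Name eq 'Backup'"))
```

A GET against the first URI would read the whole collection, while the second narrows the result on the server side; POST, PUT and DELETE against similar URIs create, update and remove resources.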

For more information, see ASP.NET Dynamic Data Content Map (http://go.microsoft.com/fwlink/?LinkId=199029), WCF Data Services (http://go.microsoft.com/fwlink/?LinkId=199030), and A Developer's Guide to the WCF REST Starter Kit (http://go.microsoft.com/fwlink/?LinkId=199031).

Want the long version? The home page for learning about WCF Data Services on the net is HERE.

Prepping your PowerShell instance for the OData PowerShell Explorer

If you download and attempt to run that PowerShell script that launches the OData PowerShell Explorer, you will probably see an error like this one:

This is because PowerShell needs to run in STA mode to display Windows Presentation Foundation (WPF) windows (like the OData PowerShell Explorer). The PowerShell Integrated Scripting Environment (ISE) runs in STA mode by default whereas the console will need to be launched explicitly with the -STA switch. To work around this problem, I created an additional PowerShell shortcut on my desktop in which I added the –sta parameter to the end of the Target window as shown below.

Downloading and preparing PowerShell OData Explorer for the Orchestrator Web Service

Step 1: Download OData Explorer

Begin by downloading and extracting the OData PowerShell Explorer from Codeplex at http://psodata.codeplex.com/releases/view/50039

Step 2: Add the Orchestrator Web Service to ODataServices.csv

The script you will run retrieves a list of OData web services from the ODataServices.csv file, which is located in the directory where you extracted OData PowerShell Explorer. The file comes with a number of web services pre-loaded in this file. I removed all but the Orchestrator web service, as shown below.

Launching OData PowerShell Explorer and Browsing the Orchestrator Web Service

Now you are ready to launch the OData PowerShell Explorer.

- Launch PowerShell using the updated shortcut you created to launch in STA mode.

- Change to the directory where you extracted the PowerShell OData Explorer.

- Run the View_Odata.ps1 script. This launches the UI shown below, which reveals a wealth of information about our Orchestrator environment.

If you have played around with the new Orchestration console in the Orchestrator 2012 beta, this data will look familiar. In the Collections window above, you’ll notice not only runbooks, but many other valuable elements for instantiating and monitoring runbooks from other applications, including:

- Statistics (like how many times a runbook has been run)

- RunbookInstances – Instances of the runbook running or that have run in the past and their result.

- ActivityInstances – Activities (formerly objects) that have run, when they finished, the runbook in which they are located, etc.

- Jobs that have run and the SID of the identity that ran them

- Runbook Servers – Runbook Servers present in this Orchestrator installation. Useful if you want to instantiate a policy on a runbook server that has special components not installed on all Runbook Servers (like a particular version of the .Net Framework)

- Folders – Revealing the folder structure in which runbooks are stored. Presumably one would use this to limit runbooks returned to the calling application.

- RunbookParameters – The parameters exposed in various runbooks, usually in the first object of ad-hoc policies in my case.

Note: Beware of ActivityInstanceData. Selecting that one locked up the interface every time in my test lab. I suspect that it returns a very large data set, possibly larger because I have object-level logging enabled on most runbooks in my test lab…something that would not be true of a production environment.

What this reveals about the coming System Center 2012 wave

Clearly Microsoft wanted to ensure runbooks could be easily called from the other members of the System Center 2012 family. It will be interesting to see how the Orchestrator web service is leveraged in the other members of the System Center 2012 products as they reach RC and RTM!

Additional Resources

The Opalis Fundamentals Series

- Opalis Runbook Automation Fundamentals (Part 1) (basics)

- Opalis Runbook Automation Fundamentals (Part 2) (advanced concepts)

- Opalis Runbook Automation Fundamentals (Part 3) ( advanced concepts, bridging gaps and extending capabilities )

and How the power of Opalis delivers the magic of the Cloud

And watch the Orchestrator Unleashed blog here on SCC – http://www.systemcentercentral.com/blogs/scoauthor

And spend some time looking through the many Opalis (but Orchestrator-friendly) community resources on CodePlex at http://opalis.codeplex.com


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Ershad Nozari of the Connected Systems Down Under blog posted Windows Azure Service Bus & Windows Azure Connect: Compared & Contrasted on 9/3/2011:



Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

See Ershad Nozari's Windows Azure Service Bus & Windows Azure Connect: Compared & Contrasted post of 9/3/2011 in the Windows Azure AppFabric section above.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

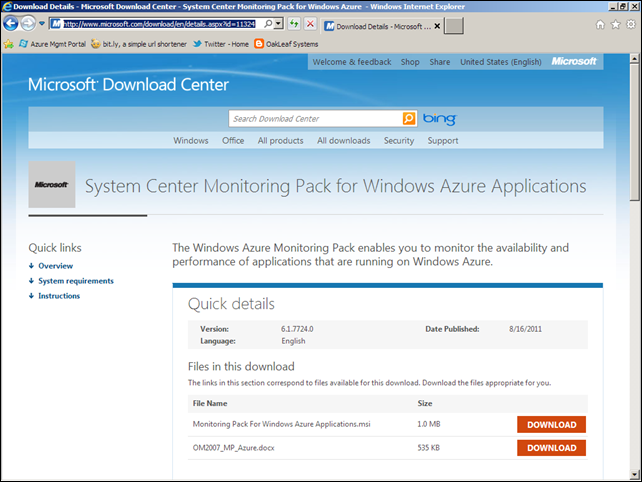

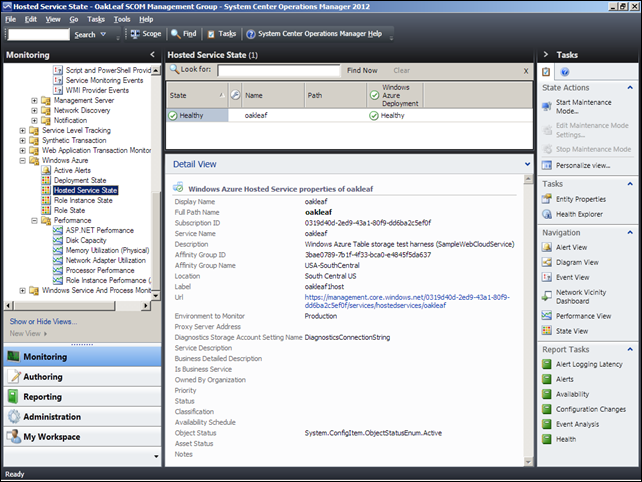

My (@rogerjenn) Installing the Systems Center Monitoring Pack for Windows Azure Applications on SCOM 2012 Beta tutorial described 60 steps to getting started monitoring instances hosted on Windows Azure in a 9/4/2011 post to the OakLeaf Systems blog:

The System Center Operations Manager (SCOM) Team released v6.1.7724.0 of the Monitoring Pack for Windows Azure Applications for use with the SCOM 2012 Beta v7.0.8289.0 (Eval) on 8/17/2011. According to the download page’s Overview section:

The Windows Azure Monitoring Management Pack (WAzMP) enables you to monitor the availability and performance of applications that are running on Windows Azure.

Feature Summary

After configuration, the Windows Azure Monitoring Management Pack offers the following functionality:

- Discovers Windows Azure applications.

- Provides status of each role instance.

- Collects and monitors performance information.

- Collects and monitors Windows events.

- Collects and monitors the .NET Framework trace messages from each role instance.

- Grooms performance, event, and the .NET Framework trace data from Windows Azure storage account.

- Changes the number of role instances via a task.

Release History

- 8/17/2011 - version 6.1.7724.0

- 10/25/2010 - Original English release, pre-release version 6.1.7686.0

The following instructions assume that you have installed and are running SCOM 2012 Beta under Windows Server 2008 or 2008 R2 with the latest Service Pack(s) installed. Screen captures are from Windows 2008 R2 SP1.

Download and Run the Installer

1. Navigate to the WAzMP download page at http://www.microsoft.com/download/en/details.aspx?id=11324

2. Download and save the OM2007_MP …

The post continues with 56 more illustrated steps and ends with:

58. Close the Health Explorer for oakleaf window and scroll to and expand the Monitoring \ Windows Azure and Monitoring \ Windows Azure \ Performance nodes. Select the Hosted Service State node to display a Detail View of the object:

59. Verify that Detail Views of the Deployment State, Hosted Service State, Role Instance State and Role State correspond to the known current state of the service.

60. Stay tuned for additional posts about performance monitoring with the Windows Azure Application Monitoring Pack.

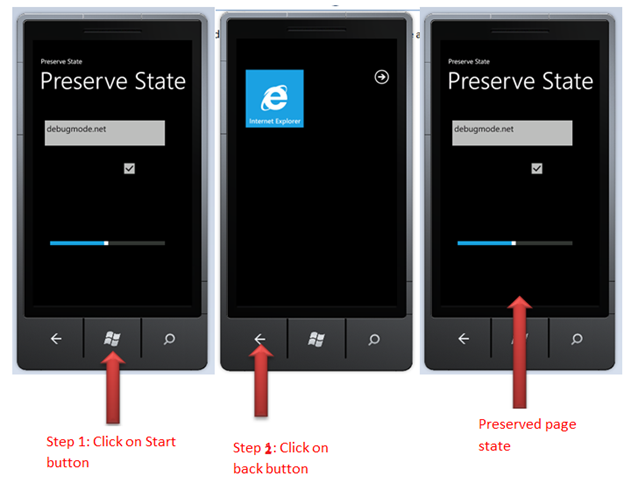

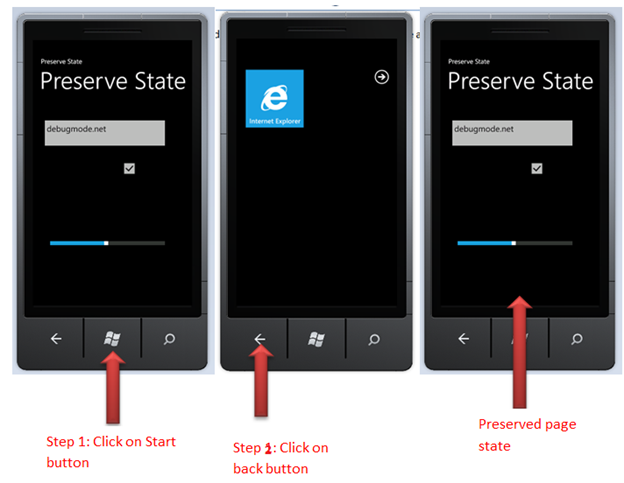

Dhananjay Kumar explained how to Restore and Preserve Page State for Windows Phone 7.5 or Mango Phone in a 9/4/2011 post:

In this post we will show you how to preserve and restore page state for a Windows Phone application page.

Let us put some controls on the phone application page.

    <Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
        <TextBox x:Name="txtValue" Height="100" Margin="12,37,58,470" />
        <CheckBox x:Name="chkValue" Height="100" Margin="251,144,0,364" HorizontalAlignment="Left" Width="134" />
        <Slider x:Name="sldValue" Value="30" Height="100" Margin="28,383,58,124" />
    </Grid>

To bind the values of these controls, let us create a Data class as below:

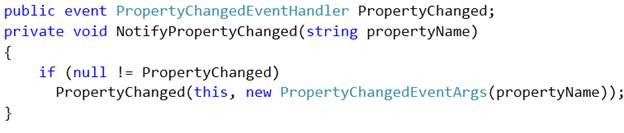

There are three properties to bind values to the three controls on the page. Next we need to add the PropertyChanged event and implement a method called NotifyPropertyChanged, which raises the PropertyChanged event.

Next we need to create a property for each control. Each property's setter must call NotifyPropertyChanged so that listeners are notified when the value changes. The control values will be bound to these properties with two-way binding. Eventually the Data class will be as below:

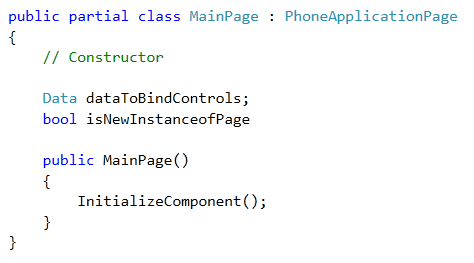

    using System.ComponentModel;
    using System.Runtime.Serialization;

    namespace RestorePageState
    {
        [DataContract]
        public class Data : INotifyPropertyChanged
        {
            private string textValue;
            private bool chckValue;
            private double sliderValue;

            // With [DataContract] applied, only members marked [DataMember]
            // are serialized, so each bound property needs the attribute.
            [DataMember]
            public string TextValue
            {
                get { return textValue; }
                set { textValue = value; NotifyPropertyChanged("TextValue"); }
            }

            [DataMember]
            public bool ChckValue
            {
                get { return chckValue; }
                set { chckValue = value; NotifyPropertyChanged("ChckValue"); }
            }

            [DataMember]
            public double SliderValue
            {
                get { return sliderValue; }
                set { sliderValue = value; NotifyPropertyChanged("SliderValue"); }
            }

            public event PropertyChangedEventHandler PropertyChanged;

            // Raises PropertyChanged so that two-way bindings pick up the new value.
            private void NotifyPropertyChanged(string propertyName)
            {
                if (null != PropertyChanged)
                    PropertyChanged(this, new PropertyChangedEventArgs(propertyName));
            }
        }
    }

The Data class is now in place. Next we need to write the code that preserves the page state. We need two variables in the code-behind:

- A bool variable that records whether this is a new instance of the page.

- An object of the Data class to bind values to the controls.

Next we need to override the OnNavigatedFrom function of the page class to persist the value in the State dictionary.

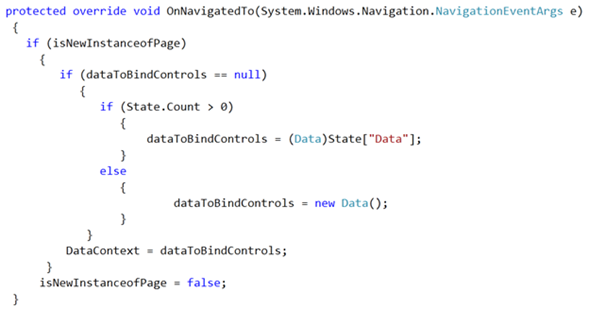

Now we need to override the OnNavigatedTo function. This function is called when the user navigates to this page.

Essentially, this function performs three tasks:

- It checks whether this is a new instance of the page.

- If it is, it checks whether the data object (the object of the Data class bound to the controls) is null.

- If the object is null, it reads the saved object from the State dictionary when one exists; otherwise it creates a new instance.

Full source code behind to preserve page value is as below,

    using Microsoft.Phone.Controls;

    namespace RestorePageState
    {
        public partial class MainPage : PhoneApplicationPage
        {
            Data dataToBindControls;
            bool isNewInstanceofPage;

            public MainPage()
            {
                InitializeComponent();
                isNewInstanceofPage = true;
            }

            protected override void OnNavigatedFrom(System.Windows.Navigation.NavigationEventArgs e)
            {
                // Persist the bound data unless the user is navigating back,
                // in which case the page is being discarded.
                if (e.NavigationMode != System.Windows.Navigation.NavigationMode.Back)
                {
                    State["Data"] = dataToBindControls;
                }
            }

            protected override void OnNavigatedTo(System.Windows.Navigation.NavigationEventArgs e)
            {
                if (isNewInstanceofPage)
                {
                    if (dataToBindControls == null)
                    {
                        // Restore the saved object if there is one; otherwise start fresh.
                        if (State.ContainsKey("Data"))
                        {
                            dataToBindControls = (Data)State["Data"];
                        }
                        else
                        {
                            dataToBindControls = new Data();
                        }
                    }
                    DataContext = dataToBindControls;
                }
                isNewInstanceofPage = false;
            }
        }
    }

With the code-behind in place, bind the values of the controls to the properties with two-way binding, as below:

    <Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
        <TextBox x:Name="txtValue" Height="100" Margin="12,37,58,470" Text="{Binding TextValue,Mode=TwoWay}"/>
        <CheckBox x:Name="chkValue" Height="100" Margin="251,144,0,364" HorizontalAlignment="Left" Width="134" IsChecked="{Binding ChckValue,Mode=TwoWay}"/>
        <Slider x:Name="sldValue" Height="100" Margin="28,383,58,124" Value="{Binding SliderValue,Mode=TwoWay}"/>
    </Grid>

Before debugging, the last step is to enable the Tombstone upon deactivation while debugging option in the project's properties page.

Now press F5 to run the application.

In this way you can preserve page state in Windows Phone 7.5 or Mango phone.

Tom Laird-McConnell (@thermous) updated his Azure Library for Lucene.Net (Full Text Indexing for Azure) project in the MSDN Code Samples library on 8/31/2011 (missed when updated). From the description:

Project description

This project allows you to create Lucene Indexes via a Lucene Directory object which uses Windows Azure Blob Storage for persistent storage.


Background

Lucene.NET

Lucene is a mature Java based open source full text indexing and search engine and property store. Lucene.NET is a mature port of that to C#.

Lucene provides:

- Super simple API for storing documents with arbitrary properties

- Complete control over what is indexed and what is stored for retrieval

- Robust control over where and how things are indexed, how much memory is used, etc.

- Superfast and super rich query capabilities

- Sorted results

- Rich constraint semantics AND/OR/NOT etc.

- Rich text semantics (phrase match, wildcard match, near, fuzzy match etc)

- Text query syntax (example: Title:(dog AND cat) OR Body:Lucen* )

- Programmatic expressions

- Ranked results with custom ranking algorithms

AzureDirectory

AzureDirectory smartly uses local file storage to cache files as they are created and automatically pushes them to blob storage as appropriate. Likewise, it smartly caches blob files back to the client when they change. This provides a nice blend of just-in-time syncing of data local to indexers or searchers across multiple machines.

With the flexibility that Lucene provides over data in memory versus storage and the just-in-time blob transfer that AzureDirectory provides, you have great control over the composability of where data is indexed and how it is consumed.

To be more concrete: you can have 1..N worker roles adding documents to an index, and 1..N searcher web roles searching over the catalog in near real time.

Usage

To use you need to create a blobstorage account on http://azure.com.

Create an App.Config or Web.Config file and configure your account info:

    <?xml version="1.0" encoding="utf-8" ?>
    <configuration>
      <appSettings>
        <!-- azure SETTINGS -->
        <add key="BlobStorageEndpoint" value="http://YOURACCOUNT.blob.core.windows.net"/>
        <add key="AccountName" value="YOURACCOUNTNAME"/>
        <add key="AccountSharedKey" value="YOURACCOUNTKEY"/>
      </appSettings>
    </configuration>

Adding documents to a catalog is as simple as:

    AzureDirectory azureDirectory = new AzureDirectory("TestCatalog");
    IndexWriter indexWriter = new IndexWriter(azureDirectory, new StandardAnalyzer(), true);

    Document doc = new Document();
    doc.Add(new Field("id", DateTime.Now.ToFileTimeUtc().ToString(), Field.Store.YES, Field.Index.TOKENIZED, Field.TermVector.NO));
    doc.Add(new Field("Title", "this is my title", Field.Store.YES, Field.Index.TOKENIZED, Field.TermVector.NO));
    doc.Add(new Field("Body", "This is my body", Field.Store.YES, Field.Index.TOKENIZED, Field.TermVector.NO));

    indexWriter.AddDocument(doc);
    indexWriter.Close();

And searching is as easy as:

    IndexSearcher searcher = new IndexSearcher(azureDirectory);
    Lucene.Net.QueryParsers.QueryParser parser = new QueryParser("Title", new StandardAnalyzer());
    Lucene.Net.Search.Query query = parser.Parse("Title:(Dog AND Cat)");

    Hits hits = searcher.Search(query);
    for (int i = 0; i < hits.Length(); i++)
    {
        Document doc = hits.Doc(i);
        Console.WriteLine(doc.GetField("Title").StringValue());
    }

Caching and Compression

AzureDirectory compresses blobs before they are sent to blob storage. Blobs are automatically cached locally to reduce round trips for blobs which haven't changed.

By default AzureDirectory stores this local cache in a temporary folder. You can easily control where the local cache is stored by passing in a Directory object for whatever type and location of storage you want.

This example stores the cache in a RAM directory:

    AzureDirectory azureDirectory = new AzureDirectory("MyIndex", new RAMDirectory());

And this example stores the cache in the file system in C:\myindex:

    AzureDirectory azureDirectory = new AzureDirectory("MyIndex", new FSDirectory(@"c:\myindex"));

Notes on settings

Just like a normal Lucene index, calling optimize too often causes a lot of churn and not calling it enough causes too many segment files to be created, so call it "just enough" times. How often that is depends entirely on your application and on your pattern of adding and updating items (which is why Lucene provides so many knobs to configure its behavior).

Lucene's compound file support, which is on by default, reduces the number of files that are generated. This means it deletes and merges files regularly, which causes churn on the blob storage. Calling indexWriter.SetUseCompoundFile(false) will give better performance.

We run it with a RAMDirectory for local cache and SetUseCompoundFile(false).

The version of Lucene.NET checked in as a binary is Version 2.3.1, but you can use any version of Lucene.NET you want by simply enlisting from the above open source site.

FAQ

How does this relate to Azure Tables?

Lucene doesn’t have any concept of tables. Lucene builds its own property store on top of the Directory() storage abstraction which essentially is both query and storage so it replicates the functionality of tables. You have to question the benefit of having tables in this case.

With LinqToLucene you can have Linq and strongly typed objects just like table storage. Ultimately, Table storage is just an abstraction on top of blob storage, and so is Lucene (a table abstraction on top of blob storage).

Stated another way, just about anything you can build on table storage you can build on Lucene storage.

If it is important that you have table storage as well as a Lucene index, then any time you create a table entity you simply add that entity to Lucene as a document as well (either by a simple hand mapping or via reflection with LINQ to Lucene annotations). Queries can then be run against Lucene, and properties retrieved from table storage or from Lucene.

But if you think about it, you are then duplicating your data and not really getting much benefit.

There is one benefit to table storage: it serves as an archive of the state of your data. If for some reason you need to rebuild your index, you can simply reconstitute it from table storage, but that’s probably the only time you would use the table storage.

How does this perform?

Lucene is capable of complex searches over millions of records in sub second times depending on how it is configured. (see http://lucene.apache.org/java/2_3_2/benchmarks.html for lots of details about Lucene in general.)

But really this is a totally open ended question. It depends on:

- the amount of data

- the frequency of updates

- the kind of schema

- etc.

Like any flexible system you can configure it to be supremely performant or supremely unperformant.

The key to getting good performance is for you to understand how Lucene works.

Lucene performs efficient incremental indexing by always appending data into files called segments. Periodically it will merge smaller segments into larger segments (a merge). The important thing to know is that it will NEVER modify an old segment, but instead will create new segments and then delete old segments when they are no longer in use.

Lucene is built on top of an abstract storage class called a "Directory" object, and the Azure Library creates an implementation of that class called "AzureDirectory". The directory contract basically provides:

- the ability to enumerate segments

- the ability to delete segments

- providing a stream for Writing a file

- providing a stream for Reading a file

- etc.

Existing Directory objects in Lucene are:

- RAMDirectory -- an in-memory directory implementation

- FSDirectory -- a disk backed directory implementation

The AzureDirectory class implements the Directory contract as a wrapper around another Directory class which it uses as a local cache.

- When Lucene asks to enumerate segments, AzureDirectory enumerates the segments in blob storage.

- When Lucene asks to delete a segment, the AzureDirectory deletes the local cache segment and the blob in blob storage.

- When Lucene asks for a read stream for a segment (remember segments never change after being closed), AzureDirectory looks to see if it is in the local cache Directory, and if it is, simply returns the local cache stream for that segment. Otherwise it fetches the segment from blob storage, stores it in the local cache Directory and then returns the local cache stream for that segment.

- When Lucene asks for a write stream for a segment it returns a wrapper around the stream in the local Directory cache, and on close it pushes the data up to a blob in blob storage.

The net result is that:

- All read operations are performed against the local cache Directory object (which, if it is a RAMDirectory, is near instantaneous).

- Any time a segment is missing from the local cache you incur the cost of downloading the segment once.

- All Write operations are performed against the local cache Directory object until the segment is closed, at which point you incur the cost of uploading the segment.
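The read and write behavior described in the two lists above can be sketched without Lucene or Azure at all. The following is a minimal illustration of the caching idea, not AzureDirectory's actual code: `InMemoryBlobStore`, `CachingSegmentStore` and all of their members are hypothetical names, and a dictionary stands in for blob storage so that the round trips can be counted.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for blob storage that counts round trips.
public class InMemoryBlobStore
{
    private readonly Dictionary<string, byte[]> _blobs = new Dictionary<string, byte[]>();

    public int Uploads { get; private set; }
    public int Downloads { get; private set; }

    public void Upload(string name, byte[] data) { _blobs[name] = data; Uploads++; }
    public byte[] Download(string name) { Downloads++; return _blobs[name]; }
    public void Delete(string name) { _blobs.Remove(name); }
    public List<string> List() { return new List<string>(_blobs.Keys); }
}

// Hypothetical wrapper: reads are served from a local cache when possible,
// writes land in the cache and are pushed to the remote store on close.
public class CachingSegmentStore
{
    private readonly InMemoryBlobStore _remote;
    private readonly Dictionary<string, byte[]> _cache = new Dictionary<string, byte[]>();

    public CachingSegmentStore(InMemoryBlobStore remote) { _remote = remote; }

    // Enumeration always reflects the remote store, not the local cache.
    public List<string> ListSegments() { return _remote.List(); }

    public byte[] ReadSegment(string name)
    {
        byte[] data;
        if (!_cache.TryGetValue(name, out data))
        {
            // Cache miss: pay for one download, then keep the segment locally.
            data = _remote.Download(name);
            _cache[name] = data;
        }
        return data;
    }

    public void WriteAndCloseSegment(string name, byte[] data)
    {
        _cache[name] = data;        // written locally first
        _remote.Upload(name, data); // pushed once, when the segment is closed
    }

    public void DeleteSegment(string name)
    {
        _cache.Remove(name);
        _remote.Delete(name);
    }
}

public static class CacheDemo
{
    public static void Main()
    {
        var blobs = new InMemoryBlobStore();
        var indexer = new CachingSegmentStore(blobs);
        var searcher = new CachingSegmentStore(blobs);

        indexer.WriteAndCloseSegment("_0.cfs", new byte[] { 1, 2, 3 });
        searcher.ReadSegment("_0.cfs"); // miss: one download
        searcher.ReadSegment("_0.cfs"); // hit: served from the local cache

        Console.WriteLine("uploads={0}, downloads={1}", blobs.Uploads, blobs.Downloads);
        // prints "uploads=1, downloads=1"
    }
}
```

Because segments never change once closed, the sketch never needs to invalidate a cached entry; it only adds and deletes them.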

The key piece to understand is that the number of transactions you have to perform against blob storage depends on the Lucene settings which control how many segments you have before they are merged into a bigger segment (mergeFactor).

Calling Optimize() is a really bad idea because it causes ALL SEGMENTS to be merged into ONE SEGMENT...essentially causing the entire index to have to be recreated, uploaded to blob storage and downloaded to all consumers.
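To get a feel for why frequent Optimize() calls are so costly, the rough simulation below counts how much segment data would be uploaded under two strategies. It is a deliberately simplified model, not Lucene's actual merge policy: every flush is assumed to create a segment of size 1, and a trailing run of mergeFactor equal-sized segments is assumed to be merged into one. `MergeSimulation` and `UploadedUnits` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

public static class MergeSimulation
{
    // Returns the total size units uploaded after the given number of flushes.
    public static long UploadedUnits(int flushes, int mergeFactor, bool optimizeEveryFlush)
    {
        var segments = new List<long>(); // sizes of live segments, oldest first
        long uploaded = 0;

        for (int i = 0; i < flushes; i++)
        {
            segments.Add(1); // each flush creates a new size-1 segment
            uploaded += 1;

            if (optimizeEveryFlush)
            {
                // Optimize: merge everything into one segment and re-upload it.
                if (segments.Count > 1)
                    uploaded += MergeLast(segments, segments.Count);
            }
            else
            {
                // Merge only when mergeFactor equal-sized segments have piled up.
                while (TrailingRunOfEqualSizes(segments) >= mergeFactor)
                    uploaded += MergeLast(segments, mergeFactor);
            }
        }
        return uploaded;
    }

    // Replaces the last 'count' segments with one merged segment; returns its size.
    private static long MergeLast(List<long> segments, int count)
    {
        long merged = 0;
        for (int i = 0; i < count; i++)
        {
            merged += segments[segments.Count - 1];
            segments.RemoveAt(segments.Count - 1);
        }
        segments.Add(merged);
        return merged;
    }

    // Counts how many segments at the end of the list share the last segment's size.
    private static int TrailingRunOfEqualSizes(List<long> segments)
    {
        if (segments.Count == 0) return 0;
        long last = segments[segments.Count - 1];
        int run = 0;
        for (int i = segments.Count - 1; i >= 0 && segments[i] == last; i--) run++;
        return run;
    }

    public static void Main()
    {
        Console.WriteLine(UploadedUnits(100, 10, false)); // prints 300
        Console.WriteLine(UploadedUnits(100, 10, true));  // prints 5149
    }
}
```

Under these assumptions, 100 flushes with a mergeFactor of 10 upload 300 units in total, while optimizing after every flush uploads 5149, and every consumer would also have to download each re-created segment.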

The other big factor is how often you create your searcher objects. When you create a Lucene Searcher object it essentially binds to the view of the index at that point in time. Regardless of how many updates are made to the index by other processes, the searcher object will have a static view of the index in its local cache Directory object. If you want to update the view of the searcher, you simply discard the old one and create a new one, and it will again be up to date with the current state of the index.
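The point-in-time behavior of a searcher can be illustrated with a toy index. This is only a sketch of the snapshot idea under simplifying assumptions (documents are plain strings and every update copies the list); `TinyIndex` and `TinySearcher` are hypothetical classes, not Lucene.NET types.

```csharp
using System;
using System.Collections.Generic;

public class TinyIndex
{
    private readonly object _lock = new object();
    private List<string> _docs = new List<string>();

    public void Add(string doc)
    {
        lock (_lock)
        {
            // Copy-on-write: searchers opened earlier keep the old list untouched.
            var next = new List<string>(_docs);
            next.Add(doc);
            _docs = next;
        }
    }

    // A searcher binds to the state of the index at the moment it is opened.
    public TinySearcher OpenSearcher()
    {
        lock (_lock) { return new TinySearcher(_docs); }
    }
}

public class TinySearcher
{
    private readonly IList<string> _snapshot;

    public TinySearcher(IList<string> snapshot) { _snapshot = snapshot; }

    // Counts the documents in the snapshot that contain the term.
    public int Count(string term)
    {
        int hits = 0;
        foreach (var doc in _snapshot)
        {
            if (doc.IndexOf(term, StringComparison.OrdinalIgnoreCase) >= 0) hits++;
        }
        return hits;
    }
}

public static class SnapshotDemo
{
    public static void Main()
    {
        var index = new TinyIndex();
        index.Add("dog and cat");

        var oldSearcher = index.OpenSearcher();
        index.Add("another dog");
        var newSearcher = index.OpenSearcher();

        Console.WriteLine(oldSearcher.Count("dog")); // prints 1: static view
        Console.WriteLine(newSearcher.Count("dog")); // prints 2: fresh view
    }
}
```

Refreshing the view is therefore just a matter of discarding the old searcher and opening a new one.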

If you control those factors, you can have a super scalable fast system which can handle millions of records and thousands of queries per second no problem.

What is the best way to build an Azure application around this?

Of course that depends on your data flow, etc. but in general here is an example architecture that works well:

The index can only be updated by one process at a time, so it makes sense to push all Add/Update/Delete operations through an indexing role. The obvious way to do that is to have an Azure queue which feeds a stream of objects to be indexed to a worker role, which keeps the index up to date.

On the search side, you can have a search WebRole which simply creates an AzureDirectory with a RAMDirectory pointed to the blob storage the indexing role is maintaining. As appropriate (say once a minute) the searcher webrole would create a new IndexSearcher object around the index, and any changes will automatically be synced into the cache directory on the searcher webRole.
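The single-writer pattern can be tried out in-process before involving Azure. In this minimal sketch a `BlockingCollection<string>` stands in for the Azure queue and a plain list stands in for the index; `SingleWriterDemo` and `Run` are hypothetical names, and a real worker role would read from queue storage and write through an IndexWriter instead.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class SingleWriterDemo
{
    // Many producers enqueue work; exactly one task ever touches the "index".
    public static List<string> Run(int producers, int itemsPerProducer)
    {
        var queue = new BlockingCollection<string>(); // stand-in for the Azure queue
        var index = new List<string>();               // stand-in for the Lucene index

        // The only writer: drains the queue until it is marked complete.
        Task indexer = Task.Factory.StartNew(() =>
        {
            foreach (string item in queue.GetConsumingEnumerable())
            {
                index.Add(item);
            }
        });

        var producerTasks = new Task[producers];
        for (int p = 0; p < producers; p++)
        {
            int id = p; // capture a copy for the closure
            producerTasks[p] = Task.Factory.StartNew(() =>
            {
                for (int i = 0; i < itemsPerProducer; i++)
                {
                    queue.Add("doc-" + id + "-" + i);
                }
            });
        }

        Task.WaitAll(producerTasks);
        queue.CompleteAdding(); // no more work: lets the indexer finish
        indexer.Wait();
        return index;
    }

    public static void Main()
    {
        Console.WriteLine(Run(4, 250).Count); // prints 1000
    }
}
```

Because every update flows through the one consumer, the index itself needs no locking, which mirrors the reason for funnelling updates through a single indexing role.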

To scale your search engine you can simply increase the instance count of the searcher webrole to handle the load.

Version History

Version 1.0.4

Replaced mutex with BlobMutexManager to solve local mutex permissions

Thanks to Andy Hitchman for the bug fixes

Version 1.0.3

- Added a call to persist the CachedLength and CachedLastModified metadata properties to the blob (not included in the content upload).

- AzureDirectory.FileLength was using the actual blob length rather than the CachedLength property. The latest version of Lucene checks the length after closing an index to verify that it's correct and was throwing an exception for compressed blobs.

- Non-compressed blobs were not being uploaded

- Updated the AzureDirectory constructor to use a CloudStorageAccount rather than the StorageCredentialsAccountAndKey so it's possible to use the Development store for testing

- works with Lucene.NET 2.9.2

thanks to Joel Fillmore for the bug fixes

Version 1.0.2

- updated to use Azure SDK 1.2

Version 1.0.1

- rewritten to use V1.1 of Azure SDK and the azure storage client

- released to MSDN Code Gallery under the MS-PL license.

Version 1.0

- Initial release, written for V1.0 CTP of Azure using the sample storage lib

- Released under restrictive MSR license on http://research.microsoft.com

Related

There is a LINQ to Lucene provider http://linqtolucene.codeplex.com/Wiki/View.aspx?title=Project%20Documentation on codeplex which allows you to define your schema as a strongly typed object and execute LINQ expressions against the index.


Visual Studio LightSwitch and Entity Framework 4.1+

The rssbus Support Team explained Setting Up Pseudo Columns with LightSwitch in a 9/2/2011 Knowledge Base article:

There are a number of ways that you might want to refine your query results that are difficult to do with current table columns; pseudo columns can greatly simplify this. This example shows how to set up a connection using any of the RSSBus ADO.NET data providers and how to expose pseudo columns.

First install the RSSBus product(s) you have purchased. After running the setup, open an instance of Visual Studio LightSwitch. In this tutorial we will make a C# LightSwitch application with the Salesforce Data Provider.

What are pseudo columns?

Pseudo columns are used in WHERE clauses just like regular columns but are not present in any table. As an example you could create a SELECT statement with a pseudo column like this:

    SELECT * FROM Accounts WHERE maxresults='10'

Passing the pseudo columns to the data provider allows for more granular control over the rows that get returned. Because they are special entities for Tables and Views, they do not exist in the data table returned and cannot be read. For a list of the pseudo columns available with a particular Table or View, consult the help file supplied with the RSSBus Data Provider. The pseudo columns are not available by default, however, so the rest of this tutorial shows you how to add them to your project.
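The idea of a pseudo column can be made concrete with a small in-memory model. This sketch is purely illustrative and says nothing about how the RSSBus providers are implemented: `PseudoColumnDemo.Select` is a hypothetical helper, rows are plain dictionaries, and `maxresults` is treated as the only pseudo column. It shows a filter that shapes the query without being a column of the returned rows.

```csharp
using System;
using System.Collections.Generic;

public static class PseudoColumnDemo
{
    // Applies WHERE-style equality conditions; "maxresults" is handled as a
    // pseudo column that limits the row count instead of matching a column.
    public static List<Dictionary<string, string>> Select(
        List<Dictionary<string, string>> table,
        Dictionary<string, string> where)
    {
        int maxResults = int.MaxValue;
        var columnFilters = new Dictionary<string, string>();

        foreach (var condition in where)
        {
            if (string.Equals(condition.Key, "maxresults", StringComparison.OrdinalIgnoreCase))
                maxResults = int.Parse(condition.Value);
            else
                columnFilters[condition.Key] = condition.Value;
        }

        var result = new List<Dictionary<string, string>>();
        foreach (var row in table)
        {
            if (result.Count >= maxResults) break;

            bool matches = true;
            foreach (var filter in columnFilters)
            {
                string value;
                if (!row.TryGetValue(filter.Key, out value) || value != filter.Value)
                {
                    matches = false;
                    break;
                }
            }
            if (matches) result.Add(row);
        }
        return result;
    }

    public static void Main()
    {
        var accounts = new List<Dictionary<string, string>>
        {
            new Dictionary<string, string> { { "Name", "A" }, { "Type", "Customer" } },
            new Dictionary<string, string> { { "Name", "B" }, { "Type", "Partner" } },
            new Dictionary<string, string> { { "Name", "C" }, { "Type", "Customer" } },
            new Dictionary<string, string> { { "Name", "D" }, { "Type", "Customer" } }
        };
        var where = new Dictionary<string, string>
        {
            { "Type", "Customer" },
            { "maxresults", "2" }
        };

        var rows = Select(accounts, where);
        Console.WriteLine(rows.Count);                        // prints 2
        Console.WriteLine(rows[0].ContainsKey("maxresults")); // prints False
    }
}
```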

Setting Up a LightSwitch Project

- Step 1: Go to File -> New -> Project (or Ctrl+Shift+N) and select a LightSwitch application as shown in the photo.

- Step 2: Right Click on the "Data Source" folder of the project and select to add a new source

Then select a Database application:

- Step 3: In the Connection Properties Wizard enter your credentials; in this case since we are connecting to Salesforce you will need to put in your user name, password, and access token. To expose the pseudo columns all you need to do after this is enter *=* in the Pseudo Columns tabs found under Misc:

- Step 4: Next, select the Tables and Views you wish to expose; here Accounts was selected.

After this if you need more help setting up a LightSwitch Screen, we have another Knowledge Base entry that shows you how to do this here. Once you have created a Screen you can now query from the available columns and pseudo columns.

Using the Pseudo Columns

- Step 1: Once you have created the screen you will see an Edit Query link on the designer page; click on this link.

- Step 2: Select Add Filter from the Filter section and select from the available column and pseudo columns you can query against:

That's it!

That is all it takes to set up a project that uses pseudo columns. This procedure is common to all RSSBus Data Providers and can also be used to expose pseudo columns in Entity Framework projects.

If you have any further questions about this you can email our support team.

Yann Duran described How To Enable Multiple Row Selection In LightSwitch's Grids in an 8/27/2011 post to the MSDN Code Samples site (missed when published). From the Description:

Introduction

In LightSwitch Beta 1, I'm pretty sure that we had the ability to select multiple rows in a grid. In Beta 2, that ability disappeared, and has been sorely missed by many ever since.

People have come up with a couple of workarounds, like adding a boolean property to an entity being displayed in the grid, so that each row has a checkbox in it that can be ticked. But I've never been all that happy with having to do anything like that.

I did some research, and discovered that the Silverlight 4 grid was in fact capable of multiple selection, but for some reason the functionality hadn't been exposed in B2. The situation didn't change for the RTM.

I've been pretty vocal in the community about the fact that I felt that this was a pretty basic grid requirement, and should definitely be included in the default grid. It was one of the first things that my clients asked for when I demoed their application to them for the first time. When I told them that you couldn't do it they were very surprised, and not very happy. They mournfully pointed out that this functionality had been available in the MS Access version of the previous program I had written for them.

So my search continued, & today (2011/08/26) I finally found the solution. I'm going to share that solution with you now, in this article.

Building the Sample

The prerequisites for this sample are:

Description

The first step is to create a LightSwitch project.

To create a LightSwitch project:

On the Menu Bar in Visual Studio:

choose File

choose New Project

In the New Project dialog box

select the LightSwitch node

then select LightSwitch Application (Visual Basic)

in the Name field, type AddUrlToNavigationMenu as the name for your project

click the OK button to create a solution that contains the LightSwitch project

Next, we need to add a table to hold some data.

To add the table:

In Solution Explorer:

right-click the Data Sources folder

select Add Table (see Figure 1)

Figure 1. Adding new table

- change the Name to DemoItem (see Figure 2)

Figure 2. Changing table name

double-click the table to open it in the Table Designer

add two string properties (see Figure 3)

Figure 3. Adding two properties

save & close the table

Now we need to add a screen that has a grid on it.

To add the screen:

In Solution Explorer:

right-click the Screens folder

select Add Screen

select Editable Grid Screen (see figure 4-1)

set Screen Data to DemoItems (see figure 4-2)

set Screen Name to DemoItemList (see figure 4-3)

click OK

Figure 4. Adding new screen

- You'll now have a screen, with a grid that has two columns (see Figure 5)

Figure 5. Screen with two-column grid

Now let's test the application so far, add some data, & demonstrate that by default we can only select one grid row at a time.

To test the application:

Press F5:

in the screen's grid, enter a few rows of data (see Figure 6)

Figure 6. Adding data

click on the screen's Save button

click on any row

notice that you can't select multiple rows, using the Control and Shift keys, as you normally would in other applications

With just a little bit of code, we can enable multiple row selection.

To enable multiple row selection:

We're going to need a reference to System.Windows.Controls.Data (to be able to access the Silverlight grid control), so let's do that now.

In Solution Explorer:

click the View button (see Figure 7)

select File View

Figure 7. File view

right-click the Client project

select Add Reference

find and click System.Windows.Controls.Data (see Figure 8)

click OK

Figure 8. Adding System.Windows.Controls.Data reference

Switch back to Logical View

click the View button (see Figure 9)

select Logical View

Figure 9. Selecting Logical view

In the Screen Designer:

click the Write Code dropdown (see Figure 10)

select DemoItemList_InitializeDataWorkspace

Figure 10. Writing screen code

Replace all of the code that was created for you in DemoItemList.vb (DemoItemList.cs in a C# project) with the code in Listing 1

Listing 1. DemoItemList class code

C#

    //we need this to be able to use DataGrid in our code
    using System.Windows.Controls;
    //these provide ControlAvailableEventArgs and the FindControl extension method
    using Microsoft.LightSwitch.Presentation;
    using Microsoft.LightSwitch.Presentation.Extensions;

    namespace LightSwitchApplication
    {
        public partial class DemoItemList
        {
            //although not strictly necessary for this small example
            //it's good practice to use constants/variables like these
            //in case you want to use them in more than just the ControlAvailable method

            //this has to match the actual name of the grid control (by default it gets called "grid")
            private const string ITEMS_CONTROL = "grid";

            //this is somewhere to store a reference to the grid control
            private DataGrid _itemsControl = null;

            #region Event Handlers

            partial void DemoItemList_InitializeDataWorkspace(System.Collections.Generic.List<Microsoft.LightSwitch.IDataService> saveChangesTo)
            {
                //here we're adding an event handler to get a reference to the grid control
                //when it becomes available
                //and we have no way of knowing when that will be
                this.FindControl(ITEMS_CONTROL).ControlAvailable += DemoItems_ControlAvailable;
            }

            private void DemoItems_ControlAvailable(object send, ControlAvailableEventArgs e)
            {
                //we know that the control is a grid, but we use a safe "as" cast, just in case
                _itemsControl = e.Control as DataGrid;

                //if the cast failed, just leave, there's nothing more we can do here
                if (_itemsControl == null)
                {
                    return;
                }

                //set the property on the grid that allows multiple selection
                _itemsControl.SelectionMode = DataGridSelectionMode.Extended;
            }

            #endregion
        }
    }

Visual Basic

    'we need this to be able to use DataGrid in our code
    Imports System.Windows.Controls

    Namespace LightSwitchApplication

        Public Class DemoItemList

            'although not strictly necessary for this small example
            'it's good practice to use constants/variables like these
            'in case you want to use them in more than just
            'the ControlAvailable method

            'this has to match the actual name of the grid control
            '(by default it gets called "grid")
            Private Const ITEMS_CONTROL As String = "grid"

            'this is somewhere to store a reference to the grid control
            Private WithEvents _itemsControl As DataGrid = Nothing

    #Region " Event Handlers "

            Private Sub DemoItemList_InitializeDataWorkspace( _
                saveChangesTo As System.Collections.Generic.List(Of Microsoft.LightSwitch.IDataService) _
                )

                'here we're adding an event handler to get a reference to the grid control
                'when it becomes available
                'and we have no way of knowing when that will be
                AddHandler Me.FindControl(ITEMS_CONTROL).ControlAvailable, AddressOf DemoItems_ControlAvailable
            End Sub

            Private Sub DemoItems_ControlAvailable(send As Object, e As ControlAvailableEventArgs)
                'we know that the control is a grid, but we use TryCast, just in case
                _itemsControl = TryCast(e.Control, DataGrid)

                'if the cast failed, just leave, there's nothing more we can do here
                If (_itemsControl Is Nothing) Then Return

                'set the property on the grid that allows multiple selection
                _itemsControl.SelectionMode = DataGridSelectionMode.Extended
            End Sub

    #End Region

        End Class

    End Namespace

Let's test the application again, & check that we can now in fact select multiple grid rows.

To test the application:

Press F5:

in the screen's grid, select any number of rows of data, by holding the Control key while you click each row (see Figure 11)

Figure 11. Selecting multiple rows

- Close the application when you've finished testing.

Now that we're able to select multiple rows, it'd be nice to be able to do something with them. We'll add a button to the grid that processes the selected rows when you click it.

To process multiple rows:

In the Screen Designer (double-click the DemoItemList screen, if it's not already open):

expand the data grid's Command Bar (see Figure 12-1)

expand the Add dropdown (see Figure 12-2)

select New Button (see Figure 12-3)

Figure 12. Adding a grid button

set the Name to ProcessItems (see Figure 13)

Figure 13. New Method name

In the Left Pane:

click on the ProcessItems method (see Figure 14)

Figure 14. ProcessItems method

In the Properties Pane:

click on the Edit CanExecute Code link (see Figure 15-1)

add the code for the CanExecute method (see Listing 2)

Listing 2. CanExecute method code

C#

    //this is somewhere to store the selected row count (add it to the class's fields)
    private int _selectedCount = 0;

    //insert this event handler wireup at the end of the screen's DemoItems_ControlAvailable method
    _itemsControl.SelectionChanged += new SelectionChangedEventHandler(ItemsControl_SelectionChanged);

    //this is an optional advanced technique, discussed in the book
    //that Tim Leung & I are currently writing
    //Pro Visual Studio LightSwitch 2011 Development
    private void ItemsControl_SelectionChanged(object sender, SelectionChangedEventArgs e)
    {
        switch (_itemsControl == null)
        {
            case true:
                _selectedCount = 0;
                break;
            case false:
                _selectedCount = _itemsControl.SelectedItems.Count;
                break;
        }
    }

    partial void ProcessItems_CanExecute(ref bool result)
    {
        //only enable the button if rows have actually been selected
        result = (_selectedCount > 0);
    }

Visual Basic

    'this is somewhere to store the selected row count (add it to the class's fields)
    Private _selectedCount As Integer = 0

    'this is an optional advanced technique, discussed in the book that
    'Tim Leung & I are currently writing
    'Pro Visual Studio LightSwitch 2011 Development
    Private Sub ItemsControl_SelectionChanged() _
        Handles _itemsControl.SelectionChanged

        Select Case (_itemsControl Is Nothing)
            Case True
                _selectedCount = 0
            Case False
                _selectedCount = _itemsControl.SelectedItems.Count
        End Select
    End Sub

    Private Sub ProcessItems_CanExecute(ByRef result As Boolean)
        'only enable the button if rows have actually been selected
        result = (_selectedCount > 0)
    End Sub

click on the Edit Execute Code link (see Figure 15-2)

add the code for the Execute method (see Listing 3)

Listing 3. Execute method code

C#

    //StringBuilder requires "using System.Text;" at the top of the file
    partial void ProcessItems_Execute()
    {
        //if the variable hasn't been set, just leave
        //there's nothing more we can do here
        if (_itemsControl == null)
        {
            return;
        }

        StringBuilder names = new StringBuilder();

        //loop through the selected rows
        //we're casting each selected row as a DemoItem
        //so we get access to all the properties of the entity that the row represents
        foreach (DemoItem item in _itemsControl.SelectedItems)
        {
            //you would normally process each row
            //but here we're just concatenating the properties as
            //proof that we are processing the selected rows
            names.Append(item.PropertyOne);
            names.Append("; ");
        }

        //simply display the result
        this.ShowMessageBox(string.Format("The values of the selected rows are: {0}", names), caption: "Demo Items", button: MessageBoxOption.Ok);
    }

Visual Basic

    'StringBuilder requires "Imports System.Text" at the top of the file
    Private Sub ProcessItems_Execute()
        'if the variable hasn't been set, just leave, there's nothing more we can do here
        If (_itemsControl Is Nothing) Then Return

        Dim names As New StringBuilder

        'loop through the selected rows
        'we're casting each selected row as a DemoItem
        'so we get access to all the properties of the entity that the row represents
        For Each item As DemoItem In _itemsControl.SelectedItems
            'you would normally process each row
            'but here we're just concatenating the properties as
            'proof that we are processing the selected rows
            names.Append(item.PropertyOne)
            names.Append("; ")
        Next

        'simply display the result
        Me.ShowMessageBox(String.Format("The values of the selected rows are: {0}", names) _
            , caption:="Demo Items" _
            , button:=MessageBoxOption.Ok _
            )
    End Sub

Figure 15. Code links

The code in the DemoItemList.vb file should now look like Listing 4

Listing 4. Complete DemoItemList class code

C#

    //we need this to be able to use DataGrid in our code
    using System.Windows.Controls;
    //this is for the StringBuilder
    using System.Text;

    namespace LightSwitchApplication
    {
        public class DemoItemList
        {
            //although not strictly necessary for this small example
            //it's good practice to use constants/variables like these
            //in case you want to use them in more than just the ControlAvailable method

            //this has to match the actual name of the grid control (by default it gets called "grid")
            private const string ITEMS_CONTROL = "grid";

            //this is somewhere to store a reference to the grid control
            private DataGrid _itemsControl = null;

            //this is somewhere to store the selected row count
            private int _selectedCount = 0;

            #region Event Handlers

            private void DemoItemList_InitializeDataWorkspace(System.Collections.Generic.List<Microsoft.LightSwitch.IDataService> saveChangesTo)
            {
                //here we're adding an event handler to get a reference to the grid control
                //when it becomes available
                //and we have no way of knowing when that will be
                this.FindControl(ITEMS_CONTROL).ControlAvailable += DemoItems_ControlAvailable;
            }

            private void DemoItems_ControlAvailable(object send, ControlAvailableEventArgs e)
            {
                //we know that the control is a grid, but we use a safe cast (as), just in case
                _itemsControl = e.Control as DataGrid;

                //if the cast failed, just leave, there's nothing more we can do here
                if (_itemsControl == null) { return; }

                //set the property on the grid that allows multiple selection
                _itemsControl.SelectionMode = DataGridSelectionMode.Extended;
                _itemsControl.SelectionChanged += new SelectionChangedEventHandler(ItemsControl_SelectionChanged);
            }

            //this is an optional advanced technique, discussed in the book
            //that Tim Leung & I are currently writing
            //Pro Visual Studio LightSwitch 2011 Development
            private void ItemsControl_SelectionChanged(object sender, SelectionChangedEventArgs e)
            {
                switch (_itemsControl == null)
                {
                    case true:
                        _selectedCount = 0;
                        break;
                    case false:
                        _selectedCount = _itemsControl.SelectedItems.Count;
                        break;
                }
            }

            #endregion

            #region Process Items

            private void ProcessItems_CanExecute(ref bool result)
            {
                //only enable the button if the variable has been initialised
                //& the rows have actually been selected
                result = (_selectedCount > 0);
            }

            private void ProcessItems_Execute()
            {
                //if the variable hasn't been set, just leave, there's nothing more we can do here
                if (_itemsControl == null) { return; }

                StringBuilder names = new StringBuilder();

                //loop through the selected rows
                //we're casting each selected row as a DemoItem
                //so we get access to all the properties of the entity that the row represents
                foreach (DemoItem item in _itemsControl.SelectedItems)
                {
                    //you would normally process each row
                    //but here we're just concatenating the properties as
                    //proof that we are processing the selected rows
                    names.Append(item.PropertyOne);
                    names.Append("; ");
                }

                //simply display the result
                this.ShowMessageBox(string.Format("The values of the selected rows are: {0}", names),
                    caption: "Demo Items",
                    button: MessageBoxOption.Ok);
            }

            #endregion
        }
    }

Visual Basic

    'we need this to be able to use DataGrid in our code
    Imports System.Windows.Controls
    'this is for the StringBuilder
    Imports System.Text

    Namespace LightSwitchApplication

        Public Class DemoItemList

            'although not strictly necessary for this small example
            'it's good practice to use constants/variables like these
            'in case you want to use them in more than just the ControlAvailable method

            'this has to match the actual name of the grid control (by default it gets called "grid")
            Private Const ITEMS_CONTROL As String = "grid"

            'this is somewhere to store a reference to the grid control
            Private WithEvents _itemsControl As DataGrid = Nothing

            'this is somewhere to store the selected row count
            Private _selectedCount As Integer = 0

    #Region " Event Handlers "

            Private Sub DemoItemList_InitializeDataWorkspace( _
                saveChangesTo As System.Collections.Generic.List(Of Microsoft.LightSwitch.IDataService) _
                )

                'here we're adding an event handler to get a reference to the grid control
                'when it becomes available
                'and we have no way of knowing when that will be
                AddHandler Me.FindControl(ITEMS_CONTROL).ControlAvailable, AddressOf DemoItems_ControlAvailable
            End Sub

            Private Sub DemoItems_ControlAvailable(send As Object, e As ControlAvailableEventArgs)
                'we know that the control is a grid, but we use TryCast, just in case
                _itemsControl = TryCast(e.Control, DataGrid)

                'if the cast failed, just leave, there's nothing more we can do here
                If (_itemsControl Is Nothing) Then Return

                'set the property on the grid that allows multiple selection
                _itemsControl.SelectionMode = DataGridSelectionMode.Extended
            End Sub

            'this is an optional advanced technique, discussed in the book that
            'Tim Leung & I are currently writing
            'Pro Visual Studio LightSwitch 2011 Development
            Private Sub ItemsControl_SelectionChanged() _
                Handles _itemsControl.SelectionChanged

                Select Case (_itemsControl Is Nothing)
                    Case True
                        _selectedCount = 0
                    Case False
                        _selectedCount = _itemsControl.SelectedItems.Count
                End Select
            End Sub

    #End Region

    #Region " Process Items "

            Private Sub ProcessItems_CanExecute(ByRef result As Boolean)
                'only enable the button if the variable has been initialised, &
                'the rows have actually been selected
                result = (_selectedCount > 0)
            End Sub

            Private Sub ProcessItems_Execute()
                'if the variable hasn't been set, just leave, there's nothing more we can do here
                If (_itemsControl Is Nothing) Then Return

                Dim names As New StringBuilder

                'loop through the selected rows
                'we're casting each selected row as a DemoItem
                'so we get access to all the properties of the entity that the row represents
                For Each item As DemoItem In _itemsControl.SelectedItems
                    'you would normally process each row
                    'but here we're just concatenating the properties as
                    'proof that we are processing the selected rows
                    names.Append(item.PropertyOne)
                    names.Append("; ")
                Next

                'simply display the result
                Me.ShowMessageBox(String.Format("The values of the selected rows are: {0}", names) _
                    , caption:="Demo Items" _
                    , button:=MessageBoxOption.Ok _
                    )
            End Sub

    #End Region

        End Class

    End Namespace

- Run the program again. Select some rows, & click on the Process Items button.

More Information

For more information on LightSwitch Development, see the LightSwitch Developer Center, or the LightSwitch Forum.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

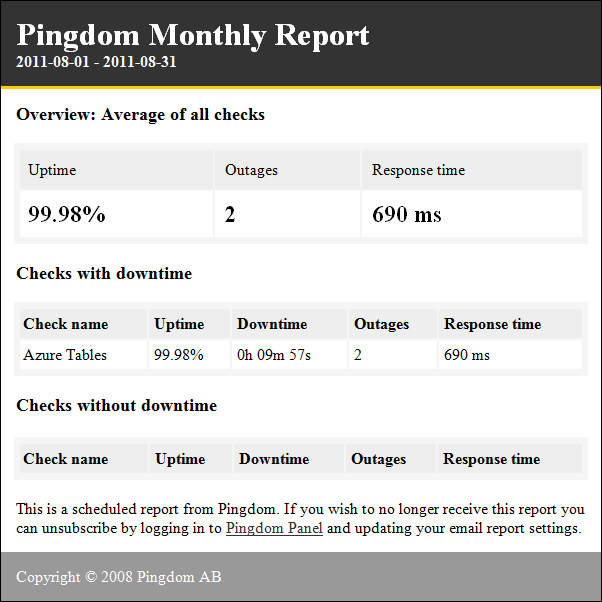

My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: August 2011 post of 9/4/2011 again shows Windows Azure meets its SLA:

My live OakLeaf Systems Azure Table Services Sample Project demo runs two small Azure Web role instances from Microsoft’s US South Central (San Antonio, TX) data center. Here’s its uptime report from Pingdom.com for August 2011:

The ~10 minutes of downtime occurred when I made the design, monitoring and storage analytics updates to the project on 8/22/2011, as described in my OakLeaf Systems Windows Azure Table Services Sample Project Updated with Tools v1.4 and Storage Analytics post. I was unable to perform a VIP swap between the staging and production deployments because the portal reported an erroneous Web Role instance count for the staging deployment (1 instead of 2).
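As a rough check on the SLA claim, the following Python sketch converts a month's downtime into an uptime percentage and compares it with an SLA target. The 99.95% target and the function names are assumptions made for illustration; they are not taken from the Pingdom report, and the ~10 minutes figure is the approximate value quoted above.

```python
# Minimal sketch: convert monthly downtime into an uptime percentage.
# Assumptions (not from the Pingdom report): a 31-day month (August)
# and a 99.95% SLA target used purely for comparison.

MINUTES_PER_DAY = 24 * 60


def uptime_percent(downtime_minutes: float, days_in_month: int = 31) -> float:
    """Return the percentage of the month during which the service was up."""
    total_minutes = days_in_month * MINUTES_PER_DAY
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def allowed_downtime_minutes(sla_percent: float, days_in_month: int = 31) -> float:
    """Return the downtime budget, in minutes, implied by an SLA percentage."""
    total_minutes = days_in_month * MINUTES_PER_DAY
    return total_minutes * (100.0 - sla_percent) / 100.0


if __name__ == "__main__":
    ASSUMED_SLA = 99.95  # hypothetical target for illustration
    print(f"Uptime for ~10 minutes of downtime: {uptime_percent(10):.3f}%")
    print(f"Downtime budget at {ASSUMED_SLA}%: "
          f"{allowed_downtime_minutes(ASSUMED_SLA):.1f} minutes")
```

Under these assumptions, ten minutes of downtime in a 31-day month is roughly 99.978% uptime, which stays inside a budget of about 22 minutes.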

The Azure Table Services Sample Project

This is the third uptime report for the two-Web role version of the sample project. Reports will continue on a monthly basis. See my Republished My Live Azure Table Storage Paging Demo App with Two Small Instances and Connect, RDP post of 5/9/2011 for more details of the Windows Azure test harness instance.

Following is a capture of Quest Software, Inc.’s Spotlight on Azure management application displaying Health metrics for the sample project under no external load. Diagnostics, instance metrics and storage analytics are consuming the resources shown below:

Requests for storage analytics data and other metrics (Application Load) are currently running at about 608 billable transaction units per month, where a unit is 10,000 transactions billed at US$0.01. My MSDN subscription includes 200 units, so the remaining 408 units cost me $4.08 per month. Current total Windows Azure Storage is 19.6 GB, all of which is analytics and metrics data; the MSDN subscription includes 30 GB.
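The transaction charge quoted above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the function name is hypothetical, and it assumes that units covered by the subscription are simply subtracted before the US$0.01 per-unit price is applied.

```python
# Minimal sketch of the storage-transaction overage arithmetic.
# Assumption: units included in the subscription are subtracted first,
# and each remaining unit (10,000 transactions) is billed at US$0.01.
from decimal import Decimal


def monthly_overage_cost(units_used: int,
                         units_included: int,
                         price_per_unit: Decimal = Decimal("0.01")) -> Decimal:
    """Return the monthly charge for units beyond the included allowance."""
    billable_units = max(units_used - units_included, 0)
    return billable_units * price_per_unit


if __name__ == "__main__":
    # 608 units used, 200 included: 408 billable units
    print(monthly_overage_cost(608, 200))
```

Using Decimal rather than float keeps the currency arithmetic exact, so 408 units at US$0.01 come out as 4.08.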

You can download a 30-day trial version of Spotlight on Azure here.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

The Cloud Journal Staff reported New Zealand Prepares Its Own Cloud Computing Code of Practice on 9/4/2011:

From ComputerWorld:

“New Zealand is to get its own Cloud Computing Code of Practice, following discussions between several providers of cloud-based software.

The initiative is similar in nature to a Cloud Computing Code of Practice that has been developed in the UK.

The early development of the Code will be facilitated by the New Zealand Computer Society.

According to a statement from the NZ Computer Society and the founding members of the Code, “The initiative was the outcome of a workshop called recently by Xero’s Rod Drury following a number of industry discussions, including at the recent NetHui Conference, identifying the need to proactively address standards and accountability around Cloud Computing.”

NZCS chief executive Paul Matthews says in the statement: “Cloud computing offers exciting new opportunities and avenues for New Zealand but with that comes responsibilities for service providers.”

The rest of this article may be found here.

<Return to section navigation list>

Cloud Computing Events

Ryan Dunn (@dunnry) reported that Steve Marx (@smarx) will be the speaker at the Seattle Azure Users Group meeting on 9/29/2011, 6:30 to 8:00 PM PDT, at Microsoft’s South Lake Union office. From the Event Details:

Welcome to the Windows Azure User Group. We at NW Cloud Group have organized this group to discuss the latest features, current capabilities, and other awesome capabilities of Windows Azure!

Join us for conversation, presentations, and more. Our first guest speaker, in this kick off meeting for Seattle Windows Azure User Group will be none other than Steve Marx (Blog: http://blog.smarx.com/ Twitter: @smarx) of the Windows Azure Team.

Steve Marx is a Technical Product Manager for the Windows Azure Team. He's worked at Microsoft since 2002, focusing on web technology and developer platforms. Check out Steve on Channel 9's Cloud Cover Episodes.

So come and check out our inaug[u]ral meeting and have a good time. In addition we all can figure out what the Windows Azure User Group Schedule should look like, who else we'd all love to come see, talk, and what would be great meeting topics!

Cheers!

-NW Cloud Group

Vittorio Bertocci, David Aiken, Eugenio Pace, Adron Hall and other Azure notables indicate they will attend.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Craig Knighton and Zach Richardson presented The great debate: Windows Azure vs. Amazon Web Services in a 9/4/2011 post to GigaOm’s Structure blog:

The Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) markets are growing faster than ever. But sometimes it seems like startups base their infrastructure decisions on a popularity contest or random selection. Among the two gorillas in the cloud space, some developers swear by Amazon, others think Azure is the best, but often the details are sparse as to why one option is better than the other.

To find out how these two services measure up in the real world, two startup tech guys — Craig Knighton of LiquidSpace and Zach Richardson of Ravel Data — lay out the cases for their clouds of choice to see how the services compared in real-world use at living, breathing companies.

Knighton is in Windows Azure’s corner, while Richardson takes up the case for AWS.

Why is the choice between AWS and Microsoft? Can no other providers fit the bill?

Knighton: The real choice is between IaaS and PaaS. If we were interested in an IaaS cloud, we would have definitely considered AWS.