Windows Azure and Cloud Computing Posts for 5/18/2011+

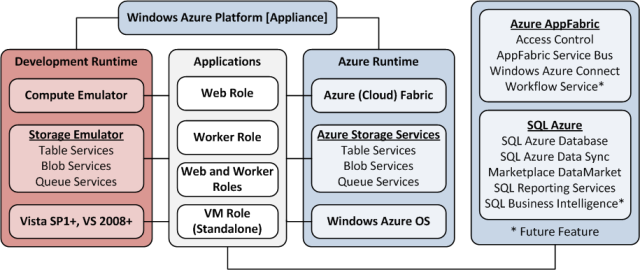

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 5/20/2011 with new articles marked • by The System Center Team, Wade Wegner, Vittorio Bertocci, Kenon Owens, Turker Keskinpala, Karsten Januszewski, Bruce Kyle, Lynn Langit, Jason Bloomberg, and Clemens Vasters.

Note: Further updates will be limited this week due to preparation for my Moving Access Tables to SharePoint 2010 or SharePoint Online Lists Webcast of 3/23/2011. See Three Microsoft Access 2010 Webcasts Scheduled by Que Publishing for March, April and May 2011 for details of the other two members of the series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework 4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

Channel9 posted Cihan Biyikoglu’s Building Scalable Database Solutions Using Microsoft SQL Azure Database Federations session video on 5/18/2011:

SQL Azure provides an information platform that you can easily provision, configure and use to power your cloud applications. In this session we explore the patterns and practices that help you develop and deploy applications that can exploit the full power of the elastic, highly available, and scalable SQL Azure Database service.

The session details modern scalable application design techniques such as sharding and horizontal partitioning and dives into future enhancements to SQL Azure Databases.

Steve Yi (pictured below) reported a TechEd 2011: The Data and BI Platform for Today and Tomorrow session in a 5/18/2011 post to the SQL Azure Team blog:

Quentin Clark, Vice President for SQL Server, delivered a session after the TechEd keynote yesterday about the future of SQL Server for data and BI and moving towards the cloud in the upcoming “Denali” release.

There was a great demo by Roger Doherty that illustrates how well SQL Azure and SQL Server work together by making it much easier to move back and forth between on-premises and cloud utilizing improvements in the DAC Framework.

Additionally, new developer tools in “Denali”, specifically SQL Server Developer Tools Codename “Juneau”, provided a central point for developers to create database applications. Database edition and cloud-aware – “Juneau” provides the one tool for any SQL Server or SQL Azure development.

I encourage you to watch it in its entirety. However, if you want to skip ahead the SQL Azure demo starts at the 23:00 mark.

Steve’s embedded video didn’t work for me, so here’s a link to Channel9’s session video: Microsoft SQL Server: The Data and BI Platform for Today and Tomorrow:

Foundational Sessions bridge the general topics outlined in the keynote address and the in-depth coverage in breakout sessions by sharing the company’s vision, strategy and roadmap for particular products and technologies, and are delivered by Microsoft senior executives. Attend this session for a demo-intensive visual tour through the new SQL Server and discover what’s possible for end users, developers and IT alike to help organizations unlock the value of exploding data volumes, create business solutions fast, and deliver mission critical capabilities at low TCO in their enterprise and in the cloud.

Steve Yi announced a Video How To: Advanced Business Intelligence with Cloud Data on 5/18/2011:

We’ve created a new readiness video that introduces some of the features of SQL Azure Reporting and demonstrates the use of advanced analytical tools, such as using SQL Azure data with Excel and PowerPivot. Specifically, users will learn how to use SQL Azure data with Excel and create two pivot tables using employee expense report data. The conclusion points to some additional resources to help users get started.

Neil MacKenzie described the new SQL Azure Management REST API in a 5/17/2011 post:

The SQL Azure Management REST API was released with the May 2011 SQL Azure release. This API is very similar to the Windows Azure Service Management REST API that has been available for some time. They both authenticate using an X.509 certificate that has been uploaded as a management certificate to the Windows Azure Portal.

The primary differences are that the SQL Azure Management REST API uses:

- service endpoint: management.database.windows.net:8443

- x-ms-version: 1.0

while the Windows Azure Service Management REST API uses:

- service endpoint: management.core.windows.net

- x-ms-version: 2011-02-25

This post is a sequel to an earlier post on the Windows Azure Service Management REST API.

SQL Azure Management Operations

The SQL Azure Management REST API supports the following operations:

- Create Server

- Get Servers

- Drop Server

- Set Server Administrator Password

- Set Server Firewall Rule

- Get Server Firewall Rules

- Delete Server Firewall Rule

The Create Server, Set Server Administrator Password and the Set Server Firewall Rule operations all require a request body adhering to a specified XML schema.

Creating the HTTP Request

Each operation in the SQL Azure Management REST API requires that a specific HTTP request be made against the service management endpoint. The request must be authenticated using an X.509 certificate that has previously been uploaded to the Windows Azure Portal. This can be a self-signed certificate.

The following shows how to retrieve an X.509 certificate, identified by thumbprint, from the Personal (My) level of the certificate store for the current user:

    // Requires: using System.Security.Cryptography.X509Certificates;
    X509Certificate2 GetX509Certificate2(String thumbprint)
    {
        X509Certificate2 x509Certificate2 = null;
        X509Store store = new X509Store("My", StoreLocation.CurrentUser);
        try
        {
            store.Open(OpenFlags.ReadOnly);
            X509Certificate2Collection x509Certificate2Collection =
                store.Certificates.Find(X509FindType.FindByThumbprint, thumbprint, false);
            // Throws if no certificate with this thumbprint is installed.
            x509Certificate2 = x509Certificate2Collection[0];
        }
        finally
        {
            store.Close();
        }
        return x509Certificate2;
    }

The following shows how to create an HttpWebRequest object, add the certificate (for a specified thumbprint), and add the required x-ms-version request header:

    // Requires: using System.Net;
    HttpWebRequest CreateHttpWebRequest(
        Uri uri, String httpWebRequestMethod, String version)
    {
        X509Certificate2 x509Certificate2 = GetX509Certificate2("THUMBPRINT");

        HttpWebRequest httpWebRequest = (HttpWebRequest)HttpWebRequest.Create(uri);
        httpWebRequest.Method = httpWebRequestMethod;
        httpWebRequest.Headers.Add("x-ms-version", version);
        httpWebRequest.ClientCertificates.Add(x509Certificate2);
        httpWebRequest.ContentType = "application/xml";

        return httpWebRequest;
    }

Making a Request on the Service Management API

As with other RESTful APIs, the Service Management API uses a variety of HTTP operations – with GET being used to retrieve data, DELETE being used to delete data, and POST or PUT being used to add elements.

The following example invoking the Get Servers operation is typical of those operations that require a GET operation:

    // Requires: using System.IO; using System.Net; using System.Xml.Linq;
    XDocument EnumerateSqlAzureServers(String subscriptionId)
    {
        String uriString =
            String.Format("https://management.database.windows.net:8443/{0}/servers",
                subscriptionId);
        String version = "1.0";

        XDocument responseDocument;
        Uri uri = new Uri(uriString);
        HttpWebRequest httpWebRequest = CreateHttpWebRequest(uri, "GET", version);
        using (HttpWebResponse httpWebResponse =
            (HttpWebResponse)httpWebRequest.GetResponse())
        {
            Stream responseStream = httpWebResponse.GetResponseStream();
            responseDocument = XDocument.Load(responseStream);
        }
        return responseDocument;
    }

The response containing the list of servers is loaded into an XML document where it can be further processed as necessary. The following is an example response:

    <Servers xmlns="http://schemas.microsoft.com/sqlazure/2010/12/">
      <Server>
        <Name>SERVER</Name>
        <AdministratorLogin>LOGIN</AdministratorLogin>
        <Location>North Central US</Location>
      </Server>
    </Servers>

Some operations require that a request body be constructed. Each operation requires that the request body be created in a specific format; failure to do so causes an error when the operation is invoked.

The following shows how to create the request body for the Set Server Firewall Rule operation:

    XDocument GetRequestBodyForAddFirewallRule(String startIpAddress, String endIpAddress)
    {
        XNamespace defaultNamespace =
            XNamespace.Get("http://schemas.microsoft.com/sqlazure/2010/12/");
        XNamespace xsiNamespace =
            XNamespace.Get("http://www.w3.org/2001/XMLSchema-instance");
        XNamespace schemaLocation = XNamespace.Get(
            "http://schemas.microsoft.com/sqlazure/2010/12/FirewallRule.xsd");

        XElement firewallRule = new XElement(defaultNamespace + "FirewallRule",
            new XAttribute("xmlns", defaultNamespace),
            new XAttribute(XNamespace.Xmlns + "xsi", xsiNamespace),
            new XAttribute(xsiNamespace + "schemaLocation", schemaLocation),
            new XElement(defaultNamespace + "StartIpAddress", startIpAddress),
            new XElement(defaultNamespace + "EndIpAddress", endIpAddress));

        XDocument requestBody = new XDocument(
            new XDeclaration("1.0", "utf-8", "no"),
            firewallRule
        );
        return requestBody;
    }

This method creates the request body and returns it as an XML document. The following is an example:

    <FirewallRule
        xmlns="http://schemas.microsoft.com/sqlazure/2010/12/"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://schemas.microsoft.com/sqlazure/2010/12/FirewallRule.xsd">
      <StartIpAddress xmlns="">10.0.0.1</StartIpAddress>
      <EndIpAddress xmlns="">10.0.0.255</EndIpAddress>
    </FirewallRule>

The following example shows the invocation of the Set Server Firewall Rule operation:

    void AddFirewallRule(String subscriptionId, String serverName, String ruleName,
        String startIpAddress, String endIpAddress)
    {
        String uriString = String.Format(
            "https://management.database.windows.net:8443/{0}/servers/{1}/firewallrules/{2}",
            subscriptionId, serverName, ruleName);
        String apiVersion = "1.0";

        XDocument requestBody = GetRequestBodyForAddFirewallRule(
            startIpAddress, endIpAddress);
        String StatusDescription;
        Uri uri = new Uri(uriString);
        HttpWebRequest httpWebRequest = CreateHttpWebRequest(uri, "PUT", apiVersion);
        using (Stream requestStream = httpWebRequest.GetRequestStream())
        {
            using (StreamWriter streamWriter =
                new StreamWriter(requestStream, System.Text.UTF8Encoding.UTF8))
            {
                requestBody.Save(streamWriter, SaveOptions.DisableFormatting);
            }
        }
        using (HttpWebResponse httpWebResponse =
            (HttpWebResponse)httpWebRequest.GetResponse())
        {
            StatusDescription = httpWebResponse.StatusDescription;
        }
    }
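Going back to the Get Servers response shown earlier, the returned XML document can be read with LINQ to XML. The following is only a minimal sketch: the `ServerListReader` class and `ReadServers` method are hypothetical names that are not part of the original post, and the element names and namespace are taken from the example response above rather than from a published schema.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper (not from the original post) for reading the
// XML document returned by the Get Servers operation.
public static class ServerListReader
{
    // Namespace copied from the example Get Servers response.
    private static readonly XNamespace Ns =
        "http://schemas.microsoft.com/sqlazure/2010/12/";

    // Returns one (server name, location) pair per <Server> element.
    public static List<KeyValuePair<string, string>> ReadServers(XDocument response)
    {
        return response
            .Descendants(Ns + "Server")
            .Select(server => new KeyValuePair<string, string>(
                (string)server.Element(Ns + "Name"),
                (string)server.Element(Ns + "Location")))
            .ToList();
    }
}
```

Under these assumptions, passing the document returned by EnumerateSqlAzureServers to `ServerListReader.ReadServers` would yield one entry per server. Note that every element lookup has to be qualified with the namespace, because the response declares it as the default namespace.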


Marketplace DataMarket and OData

• Turker Keskinpala reported OData Service Validation Tool Updated – JSON Payload Validation and new rules on 9/19/2011:

We pushed another new update to the OData Service Validation Tool last Friday. This update has the following:

- Added support for JSON payload validation.

- Added 9 new JSON rules

- Added 2 new Atom/XML metadata rules

- Updated 4 Atom/XML rules (3 metadata and 1 feed)

As always, please give it a try and let us know what you think using the OData Mailing List.

• Karsten Januszewski described The Rise Of JSON to the VisitMIX blog on 3/17/2011:

I’ve been prototyping a new service, sketching out the different pieces: payload protocol, storage, data model, transport, client/server communication, etc. And, upon completion of the prototype, I stepped back and looked at the decisions made. For example, how are we storing the data? Raw JSON. How are we serving data? As JSON.

It suddenly struck me: there was never even a question of what format we would serialize to; JSON was assumed. And the idea that we would support XML as well as JSON wasn’t even considered.

The fact that this architecture was almost assumed instead of deliberated upon got me thinking: when was it that JSON won? After all, the format isn’t that old. But its rise has been quick and triumphant. I’m not the first one to observe this of course. There’s a great piece called “The Stealthy Ascendency of JSON” on DevCentral which does some digging across a range of available web APIs, discovering an increase in the percentage of APIs that support JSON as compared to XML in the last year. The ProgrammableWeb has a piece called “JSON Continues its Winning Streak Over XML“ which similarly documents this trend. And there is also the much blogged about facts that Twitter has removed XML support from their streaming API and Foursquare’s v2 API only supports JSON.

All this begs a different question: Why is JSON so popular? There is the simple fact that JSON is smaller as a payload than XML. And no doubt JSON is less verbose than XML. But there’s much more to it than just size. The crux has to do with programming. JSON is natively tied to JavaScript. As an object representation of data, it is so easy to work with inside JavaScript. Its untyped nature flows perfectly with how JavaScript itself works. Compare this to working with XML in JavaScript: ugh. There’s a pretty fascinating piece by James Clark called “XML vs. The Web” that really dives into this.

JSON’s untyped nature flows with how the web itself works. The web does not seem to like typing; it doesn’t like schemas; it doesn’t like things to be rigid or too structured. Just look at the failure of XHTML. A beautiful idea for the purists, but for the web, its lack of adoption underscores its platonic ideals.

Not to dismiss XML. It turns out, XML works fantastically well with strongly typed languages. Perhaps XML’s crowning glory these days is how it maps to object graphs — elements as objects, attributes as properties — for the purposes of creating client user interfaces. Consider mobile development. Look at both Android development (Java/XML) and Windows Phone development (.NET/XAML). Both models extensively use XML to represent user interface which map directly to an object graph. And both models use this XML representation to facilitate WYSIWYG editors like one finds in Expression Blend, Visual Studio and Eclipse. I wrote about this a fair amount quite a while ago in a paper called The New Iteration about designer/developer workflow with XAML.

Where you find an impedance mismatch is using a loosely typed payload format like JSON with a strongly typed language. This happens all the time on the server, especially if you are a .NET developer. Historically, this has caused plenty of headaches. I know I’ve spent too much time dealing with serialization/deserialization issues on the server when parsing JSON.

Well, I’m happy to say that this mismatch seems to have finally gone away with the dynamic keyword in .NET 4 combined with some great open source work by the WCF team that has resulted in a library called Microsoft.Runtime.Serialization.Json.

Consider the following C# code, which downloads my Foursquare profile:

    WebClient webClient = new WebClient();
    dynamic result = JsonValue.Parse(webClient.DownloadString("https://api.foursquare.com/v2/users/self?oauth_token=XXXXXXX"));
    Console.WriteLine(result.response.user.firstName);

Notice how the parsing of the JSON returns an object graph that I can drill into. So elegant! If you want to learn more about these new APIs, check out this post called JSON and .NET Nirvana Hath Arrived.

It’s great to see this marriage between JSON and .NET, because it’s clear JSON isn’t going away any time soon.
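For readers who don't have the library mentioned in the article, the same drill-down into `response.user.firstName` can be sketched with `System.Text.Json`. This is a different, later API swapped in purely for illustration, not the one the article uses. The `JsonDrillDown` class and `ReadFirstName` method are hypothetical names, and the payload shape is assumed from the property path in the snippet above.

```csharp
using System.Text.Json;

// Hypothetical helper (not from the article) that walks a fixed
// property path without declaring any typed classes.
public static class JsonDrillDown
{
    // Returns response.user.firstName, or null if any step is missing
    // or the final value is not a JSON string.
    public static string ReadFirstName(string json)
    {
        using (JsonDocument document = JsonDocument.Parse(json))
        {
            JsonElement current = document.RootElement;
            foreach (string name in new[] { "response", "user", "firstName" })
            {
                JsonElement next;
                if (current.ValueKind != JsonValueKind.Object ||
                    !current.TryGetProperty(name, out next))
                {
                    return null;
                }
                current = next;
            }
            return current.ValueKind == JsonValueKind.String
                ? current.GetString()
                : null;
        }
    }
}
```

The explicit kind checks are the price of leaving the `dynamic` keyword out: each step has to confirm that it is looking at an object before asking for a property, which is the impedance mismatch the article describes.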

Glenn Gailey (@ggailey777) described Accessing an OData Media Resource Stream from a Windows Phone 7 Application (Streaming Provider Series-Part 3) in a 5/17/2011 post to the WCF Data Services Team blog:

In this third post in the series on implementing a streaming data provider, we show how to use the OData client library for Windows Phone 7 to asynchronously access binary data exposed by an Open Data Protocol (OData) feed. We also show how to asynchronously upload binary data to the data service. This Windows Phone sample is the asynchronous equivalent to the previous post Data Services Streaming Provider Series-Part 2: Accessing a Media Resource Stream from the Client; both client samples access the streaming provider that we create in the first blog post in this series: Implementing a Streaming Provider. This post also assumes that you are already somewhat familiar with using the OData client library for Windows Phone 7 (which you can obtain from the OData project in CodePlex), as well as phone-specific concepts like paged navigation and tombstoning. For more information about OData and Windows Phone, see the topic Open Data Protocol (OData) Overview for Windows Phone.

OData Client Programming for Windows Phone 7

This application consumes an OData feed exposed by the sample photo data service, which implements a streaming provider to store and retrieve image files, along with information about each photo. This service returns a single feed (entity set) of PhotoInfo entries, which are also media link entries. The associated media resource for each media link entry is an image, which can be downloaded from the data service as a stream. The following represents the PhotoInfo entity in the data model:

This sample streaming data service is demonstrated in Implementing a Streaming Provider. You can download this streaming data service as a Visual Studio project from Streaming Photo OData Service Sample on MSDN Code Gallery. In our client phone application, we bind data from the PhotoInfo feed to UI controls in the XAML page.

First we need to create a Windows Phone application that references the OData client library. (Note that the same basic APIs can be used to access and create media resources from a Silverlight client, except for the tombstoning functionality, which is specific to Windows Phone.) I won’t go into too much detail on the XAML that creates the pages in the application, since this is not a tutorial on XAML. You can review for yourself the XAML pages in the downloaded ODataStreamingPhoneClient project. Here are the basic steps to create this application:

- Download and install the OData client library for Windows Phone 7. This includes the System.Data.Services.Client.dll assembly and the DataSvcUtil.exe tool.

- Create the Windows Phone project.

- Run the DataSvcUtil.exe program (included in the OData client library for Windows Phone 7 download) to generate the client data classes for the data service.

Your command line should look like this (except all on one line):

    DataSvcUtil.exe /out:"PhotoData.cs" /language:csharp /DataServiceCollection
    /uri:http://myhostserver/PhotoService/PhotoData.svc/ /version:2.0

- Add a reference to the System.Data.Services.Client.dll assembly.

- Create a ViewModel class for the application named MainViewModel. This ViewModel helps connect the view (controls in XAML pages) to the model (OData feed accessed using the client library) by exposing properties and methods required for data binding and tombstoning. The following represents the MainViewModel class that supports this sample:

- Implement tombstoning to store application state when the application is deactivated and restore state when the application is reactivated. This is important because deactivation can happen at any time, including when the application itself displays the PhotoChooserTask to select a photo stored on the phone. To learn more about how to tombstone using the DataServiceState object, see Open Data Protocol (OData) Overview for Windows Phone.

- The MainPage.xaml page displays a ListBox of PhotoInfo objects, which includes the media resources as images downloaded from the streaming data service.

- When one of the items in the ListBox is tapped, details of the selected PhotoInfo object are displayed in a Pivot control in the PhotoDetailsWindow page:

Querying the Data Service and Binding the Streamed Data

The following steps are required to asynchronously query the streaming OData service. All code that accesses the OData service is implemented in the MainViewModel class.

- Declare the DataServiceContext used to access the data service and the DataServiceCollection used for data binding.

    // Declare the service root URI.
    private Uri svcRootUri =
        new Uri(serviceUriString, UriKind.Absolute);

    // Declare our private binding collection.
    private DataServiceCollection<PhotoInfo> _photos;

    // Declare our private DataServiceContext.
    private PhotoDataContainer _context;

    public bool IsDataLoaded { get; private set; }

- Register a handler for the LoadCompleted event when the binding collection is set.

    public DataServiceCollection<PhotoInfo> Photos
    {
        get { return _photos; }
        set
        {
            _photos = value;

            NotifyPropertyChanged("Photos");

            // Register a handler for the LoadCompleted event.
            _photos.LoadCompleted +=
                new EventHandler<LoadCompletedEventArgs>(Photos_LoadCompleted);
        }
    }

- When MainPage.xaml is navigated to, the LoadData method on the ViewModel is called; the LoadAsync method asynchronously executes the query URI.

    // Instantiate the context and binding collection.
    _context = new PhotoDataContainer(svcRootUri);
    Photos = new DataServiceCollection<PhotoInfo>(_context);

    // Load the data from the PhotoInfo feed.
    Photos.LoadAsync(new Uri("/PhotoInfo", UriKind.Relative));

- The Photos_LoadCompleted method handles the LoadCompleted event to load all pages of the PhotoInfo feed returned by the data service.

    private void Photos_LoadCompleted(object sender, LoadCompletedEventArgs e)
    {
        if (e.Error == null)
        {
            // Make sure that we load all pages of the PhotoInfo feed.
            if (_photos.Continuation != null)
            {
                // Request the next page from the data service.
                _photos.LoadNextPartialSetAsync();
            }
            else
            {
                // All pages are loaded.
                IsDataLoaded = true;
            }
        }
        else
        {
            if (MessageBox.Show(e.Error.Message, "Retry request?",
                MessageBoxButton.OKCancel) == MessageBoxResult.OK)
            {
                this.LoadData();
            }
        }
    }

- When the user selects an image in the list, PhotoDetailsPage.xaml is navigated to, which displays data from the selected PhotoInfo object.
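The paging pattern in Photos_LoadCompleted (keep requesting the next page until the continuation is null) can be illustrated without the phone libraries. The sketch below is a synchronous simulation only: `PagedSource`, `LoadPage`, `PagesServed` and `PagingDemo` are hypothetical stand-ins invented for this illustration, not part of the OData client library, which performs the same loop through LoadCompleted callbacks.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a paged feed. Each call appends one page to
// the target list and returns a continuation token, or null when done.
public sealed class PagedSource
{
    private readonly IList<string> _items;
    private readonly int _pageSize;

    public int PagesServed { get; private set; }

    public PagedSource(IList<string> items, int pageSize)
    {
        if (pageSize <= 0) throw new ArgumentOutOfRangeException("pageSize");
        _items = items;
        _pageSize = pageSize;
    }

    public int? LoadPage(int? continuation, List<string> target)
    {
        int start = continuation ?? 0;
        int end = Math.Min(start + _pageSize, _items.Count);
        for (int i = start; i < end; i++)
        {
            target.Add(_items[i]);
        }
        PagesServed++;
        // A non-null token means more pages remain.
        return end < _items.Count ? (int?)end : null;
    }
}

public static class PagingDemo
{
    // Mirrors the handler's logic: load a page, then keep loading
    // while a continuation is present.
    public static List<string> LoadAll(PagedSource source)
    {
        var loaded = new List<string>();
        int? continuation = source.LoadPage(null, loaded);
        while (continuation != null)
        {
            continuation = source.LoadPage(continuation, loaded);
        }
        return loaded;
    }
}
```

In the real handler the loop is implicit: each LoadNextPartialSetAsync call raises LoadCompleted again, so the check on Continuation plays the role of the `while` condition here.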

Binding Image Data to UI Controls

This sample displays images in the MainPage by binding a ListBox control to the Photos property of the ViewModel, which returns the binding collection containing data from the returned PhotoInfo feed. There are two ways to bind media resources from our streaming data service to the Image control.

- By defining an extension property on the media link entry.

- By implementing a value converter.

Both of these approaches end up calling GetReadStreamUri on the context to return the URI of the media resource for a specific PhotoInfo object, which is called the read stream URI. We ended up going with the extension property approach, which is rather simple and looks like this:

    public partial class PhotoInfo
    {
        // Returns the media resource URI for binding.
        public Uri StreamUri
        {
            get
            {
                return App.ViewModel.GetReadStreamUri(this);
            }
        }
    }

When you bind an Image control using the read stream URI, the runtime does the work of asynchronously downloading the media resource during binding. The following XAML shows this binding to the StreamUri extension property for the image source:

    <ListBox Margin="0,0,-12,0" Name="PhotosListBox" ItemsSource="{Binding Photos}"
             SelectionChanged="OnSelectionChanged" Height="Auto">
        <ListBox.ItemsPanel>
            <ItemsPanelTemplate>
                <toolkit:WrapPanel ItemHeight="150" ItemWidth="150"/>
            </ItemsPanelTemplate>
        </ListBox.ItemsPanel>
        <ListBox.ItemTemplate>
            <DataTemplate>
                <StackPanel Margin="0,0,0,17" Orientation="Vertical"
                            HorizontalAlignment="Center">
                    <Image Source="{Binding Path=StreamUri, Mode=OneWay}"
                           Height="100" Width="130" />
                    <TextBlock Text="{Binding Path=FileName, Mode=OneWay}"
                               HorizontalAlignment="Center" Width="Auto"
                               Style="{StaticResource PhoneTextNormalStyle}"/>
                </StackPanel>
            </DataTemplate>
        </ListBox.ItemTemplate>
    </ListBox>

Because the PhotoInfo class now includes the StreamUri extension property, the client also serializes this property in POST requests that create new media link entries in the data service. This causes an error in the data service when this unknown property cannot be processed. In our sample, we had to rewrite our requests to remove the StreamUri property from the request body. This payload rewriting is performed in the PhotoDataContainer partial class (defined in project file PhotoDataContainer.cs), which follows the basic pattern described in this post. I cover this and other binding issues related to media resource streams in more detail in my blog.
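The sample's own rewriting code is not reproduced in this post, so the following is only a rough sketch of the idea: strip a client-only property element from an Atom entry payload before it is sent. `PayloadRewriter` and `RemoveProperty` are hypothetical names, and the assumption is that the property is serialized as an element in the standard OData data-services namespace. One plausible place to call such a helper would be a handler for the context's WritingEntity event, but that wiring is also an assumption here.

```csharp
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper (not the sample's actual code) that removes a
// client-only property from an Atom entry before it is posted.
public static class PayloadRewriter
{
    // OData data-services namespace used for entity property elements.
    private static readonly XNamespace D =
        "http://schemas.microsoft.com/ado/2007/08/dataservices";

    // Returns a copy of the entry with every <d:propertyName> element
    // removed; the original document is left untouched.
    public static XDocument RemoveProperty(XDocument entry, string propertyName)
    {
        var copy = new XDocument(entry);
        // Materialize the matches first so that removal does not
        // disturb the enumeration.
        foreach (XElement element in copy.Descendants(D + propertyName).ToList())
        {
            element.Remove();
        }
        return copy;
    }
}
```

With this sketch, `PayloadRewriter.RemoveProperty(entryDocument, "StreamUri")` would return a payload that no longer carries the StreamUri element while keeping the other properties, such as FileName, intact.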

Uploading a New Image to the Data Service

The following steps are required to create a new PhotoInfo entity and binary image file in the data service.

- When the user taps the CreatePhoto button to upload a new image, we must create a new media link entry (MLE) object on the client. We do this by calling DataServiceCollection.Add in the MainPage code-behind page:

    // Create a new PhotoInfo instance.
    PhotoInfo newPhoto = PhotoInfo.CreatePhotoInfo(0, string.Empty,
        DateTime.Now, new Exposure(), new Dimensions(), DateTime.Now);

    // Add the new photo to the tracking collection.
    App.ViewModel.Photos.Add(newPhoto);

    // Select the newly added photo.
    this.PhotosListBox.SelectedItem = newPhoto;

In this case, we don’t need to call AddObject on the context because we are using a DataServiceCollection for data binding.

- When the new PhotoInfo is selected from the list, the following SelectionChanged handler is invoked:

    var selector = (Selector)sender;
    if (selector.SelectedIndex == -1)
    {
        return;
    }

    // Navigate to the details page for the selected item.
    this.NavigationService.Navigate(
        new Uri("/PhotoDetailsPage.xaml?selectedIndex="
            + selector.SelectedIndex, UriKind.Relative));

    selector.SelectedIndex = -1;

This navigates to the PhotoDetailsPage with the index of the newly created PhotoInfo object in the query parameter.

- In the code-behind page for the PhotoDetailsPage, the following method handles the OnNavigatedTo event:

    if (chooserCancelled == true)
    {
        // The user did not choose a photo so return to the main page;
        // the added PhotoInfo is already removed.
        NavigationService.GoBack();

        // Void out the binding so that we don't try and bind
        // to an empty PhotoInfo object.
        this.DataContext = null;

        return;
    }

    // Get the selected PhotoInfo object.
    string indexAsString = this.NavigationContext.QueryString["selectedIndex"];
    int index = int.Parse(indexAsString);
    this.DataContext = currentPhoto
        = (PhotoInfo)App.ViewModel.Photos[index];

    // If this is a new photo, we need to get the image file.
    if (currentPhoto.PhotoId == 0
        && currentPhoto.FileName == string.Empty)
    {
        // Call the OnSelectPhoto method to open the chooser.
        this.OnSelectPhoto(this, new EventArgs());
    }

If we have a new PhotoInfo object (with a zero ID), the OnSelectPhoto method is called.

- In the PhotoDetailsPage, we must initialize the PhotoChooserTask in the class constructor:

    // Initialize the PhotoChooserTask and assign the Completed handler.
    photoChooser = new PhotoChooserTask();
    photoChooser.Completed +=
        new EventHandler<PhotoResult>(photoChooserTask_Completed);

- In the OnSelectPhoto method (which also handles the SelectPhoto button tap) we display the photo chooser:

    // Start the PhotoChooser.
    photoChooser.Show();

At this point, the PhotoChooserTask is displayed and the application itself is deactivated, to be reactivated when the chooser closes, hence the need to implement tombstoning.

- When the photo chooser is closed, the Completed event is raised. When the application is fully reactivated, we handle the event as follows to set PhotoInfo properties based on the selected photo:

    // Get back the last PhotoInfo object in the collection,
    // which we just added.
    currentPhoto =
        App.ViewModel.Photos[App.ViewModel.Photos.Count - 1];

    if (e.TaskResult == TaskResult.OK)
    {
        // Set the file properties for the returned image.
        currentPhoto.FileName =
            GetFileNameFromString(e.OriginalFileName);
        currentPhoto.ContentType =
            GetContentTypeFromFileName(currentPhoto.FileName);

        // Read remaining entity properties from the stream itself.
        currentPhoto.FileSize = (int)e.ChosenPhoto.Length;

        // Create a new image using the returned memory stream.
        BitmapImage imageFromStream =
            new System.Windows.Media.Imaging.BitmapImage();
        imageFromStream.SetSource(e.ChosenPhoto);

        // Set the height and width of the image.
        currentPhoto.Dimensions.Height =
            (short?)imageFromStream.PixelHeight;
        currentPhoto.Dimensions.Width =
            (short?)imageFromStream.PixelWidth;

        this.PhotoImage.Source = imageFromStream;

        // Reset the stream before we pass it to the service.
        e.ChosenPhoto.Position = 0;

        // Set the save stream for the selected photo stream.
        App.ViewModel.SetSaveStream(currentPhoto, e.ChosenPhoto, true,
            currentPhoto.ContentType, currentPhoto.FileName);
    }
    else
    {
        // Assume that the select photo operation was cancelled,
        // remove the added PhotoInfo and navigate back to the main page.
        App.ViewModel.Photos.Remove(currentPhoto);
        chooserCancelled = true;
    }

Note that we use the image stream to create a new BitmapImage, which is only used to automatically set the height and width properties of the image.

- When the Save button in the PhotoDetailsPage is tapped, we register a handler for the SaveChangesCompleted event in the ViewModel, start the progress bar, and call SaveChanges in the ViewModel:

    App.ViewModel.SaveChangesCompleted +=
        new MainViewModel.SaveChangesCompletedEventHandler(ViewModel_SaveChangesCompleted);

    App.ViewModel.SaveChanges();

    // Show the progress bar during the request.
    this.requestProgress.Visibility = Visibility.Visible;
    this.requestProgress.IsIndeterminate = true;

- In the ViewModel, we call BeginSaveChanges to send the media resource as a binary stream (along with any other pending PhotoInfo object updates) to the data service:

    // Send an insert or update request to the data service.
    this._context.BeginSaveChanges(OnSaveChangesCompleted, null);

When BeginSaveChanges is called, the client sends a POST request to create the media resource in the data service using the supplied stream. After it processes the stream, the data service creates an empty media link entry. The client then sends a subsequent MERGE request to update this new PhotoInfo entity with data from the client.

- In the following callback method, we call the EndSaveChanges method to get the response to the POST request generated when BeginSaveChanges was called:

When creating a new photo, we also need need to execute a query to get the newly created media link entry from the data service, after first detaching the new entity. We must do this because of a limitation in the WCF Data Services client POST behavior where it does not update the object on the client with the server-generated values or the edit-media link URI. To get the updated entity materialized correctly from the data service, we first detach the new entity and then call BeginExecute to get the new media link entry.private void OnSaveChangesCompleted(IAsyncResult result)

{

EntityDescriptor entity = null;

// Use the Dispatcher to ensure that the response is

// marshaled back to the UI thread.

Deployment.Current.Dispatcher.BeginInvoke(() =>

{

try

{

// Complete the save changes operation and display the response.

DataServiceResponse response = _context.EndSaveChanges(result);

// When we issue a POST request, the photo ID and

// edit-media link are not updated on the client (a bug),

// so we need to get the server values.

if (response != null && response.Count() > 0)

{

var operation = response.FirstOrDefault()

as ChangeOperationResponse;

entity = operation.Descriptor as EntityDescriptor;

var changedPhoto = entity.Entity as PhotoInfo;

if (changedPhoto != null && changedPhoto.PhotoId == 0)

{

// Verify that the entity was created correctly.

if (entity != null && entity.EditLink != null)

{

// Detach the new entity from the context.

_context.Detach(entity.Entity);

// Get the updated entity from the service.

_context.BeginExecute<PhotoInfo>(entity.EditLink,

OnExecuteCompleted, null);

}

}

else

{

// Raise the SaveChangesCompleted event.

if (SaveChangesCompleted != null)

{

SaveChangesCompleted(this, new AsyncCompletedEventArgs());

}

}

}

}

catch (DataServiceRequestException ex)

{

// Display the error from the response.

MessageBox.Show(ex.Message);

}

catch (InvalidOperationException ex)

{

MessageBox.Show(ex.GetBaseException().Message);

}

});

}

- When we handle the callback from the subsequent query execution, we assign the returned object to a new instance to properly materialize the new media link entry:

Because we detached the new media link entry, we must also assign the now tracked PhotoInfo object to the appropriate instance in the binding collection, otherwise the binding collection is out of sync with the context.

private void OnExecuteCompleted(IAsyncResult result)

{

// Use the Dispatcher to ensure that the response is

// marshaled back to the UI thread.

Deployment.Current.Dispatcher.BeginInvoke(() =>

{

try

{

// Complete the query by assigning the returned

// entity, which materializes the new instance

// and attaches it to the context. We also need to assign the

// new entity in the collection to the returned instance.

PhotoInfo entity = _photos[_photos.Count - 1] =

_context.EndExecute<PhotoInfo>(result).FirstOrDefault();

// Report that the media resource URI is updated.

entity.ReportStreamUriUpdated();

}

catch (DataServiceQueryException ex)

{

MessageBox.Show(ex.Message);

}

finally

{

// Raise the event by using the () operator.

if (SaveChangesCompleted != null)

{

SaveChangesCompleted(this, new AsyncCompletedEventArgs());

}

}

});

}

- Finally, the SaveChangesCompleted event is raised by the ViewModel, to inform the UI that it is OK to turn off the progress bar, which is handled in the following code in the PhotoDetailsPage:

Unfortunately, when the navigation returns to the MainPage, the binding again downloads the images. This is because of the application deactivation that occurs when the PhotoChooserTask is displayed. To avoid this re-download from the data service after tombstoning, you could instead use the GetReadStream method to get a stream that contains the image data and use it to create an image in isolated storage. Then, your binding could access the stored version instead of the web version of the image, but this is outside the scope of this sample.

// Hide the progress bar now that the save changes operation is complete.

this.requestProgress.Visibility = Visibility.Collapsed;

this.requestProgress.IsIndeterminate = false;

// Unregister for the SaveChangesCompleted event now that we are done.

App.ViewModel.SaveChangesCompleted -=

new MainViewModel.SaveChangesCompletedEventHandler(ViewModel_SaveChangesCompleted);

NavigationService.GoBack();

Glenn added the following in a 5/17/2011 New Resources for OData, Streaming, and Windows Phone post to his personal blog:

To support this blog post, I’ve also uploaded a new Windows Phone application (on which this blog post is based) to the MSDN Code Gallery:

OData Streaming Client for Windows Phone

Some additional streaming, XAML, binding resources are:

Revisited: Guidance on Binding Media Resource Streams to XAML Controls

<Return to section navigation list>

AppFabric: Access Control, WIF and Service Bus

• Clemens Vasters (@clemensv) listed Service Bus May 2011 CTP Resources in a 5/19/2011 post:

Here’s an (incomplete) snapshot of what’s out there in terms of material for the new Service Bus CTP:

- First read the release notes where we provide a summary of what’s new and what changed and also point out some areas of caution on parallel installs of the CTP and production SDKs.

- You can get the SDK bits from here. Get the right set of binaries for your machine (x64 or x86) and the right set of samples (CS or VB) and definitely get the user guide. We will have a NuGet package shortly that will allow you to integrate the Service Bus assembly and all necessary config incantations straight into your apps without even having the SDK on your machine.

- The reference docs are located here. This is a CTP and the documentation is likewise in CTP state, so there are some gaps that we try to fill.

- My introduction to the CTP is on the new AppFabric blog here.

- At the same location you’ll find David Ingham’s primer on Queues.

- My TechEd talk on the new features is now posted on Channel 9.

- We have a video series providing high-level overviews on Service Bus.

- Neudesic’s Rick Garibay provides a community insider’s perspective on the new features. Matt Davey also likes what he sees.

- The forums. Go there for questions or suggestions.

There’s more on the way. Let me know if you write a blog post about what you find out so I can link to it.

• Bruce Kyle reported CTP for Single Sign-On Extends Windows Identity Foundation (WIF) v3.5 for SAML 2.0 in a 5/19/2011 post to the US ISV Evangelism blog:

Windows Identity Foundation (WIF) v3.5 Extension for SAML 2.0 Protocol enables .NET developers to build applications for the enterprise and government that require SAML 2.0 Protocol support and interoperate with identity services on a wide variety of platforms.

The community preview was announced at Tech•Ed and on the team blog at Announcing the WIF Extension for SAML 2.0 Protocol Community Technology Preview.

Key features of this extension include:

- Service Provider initiated and Identity Provider initiated Web Single Sign-on (SSO) and Single Logout (SLO)

- Support for the Redirect, POST, and Artifact bindings

- All of the necessary components to create a SP-lite compliant service provider application

The CTP release that includes the extension and samples is now available here.

For more information, see the Windows Identity Foundation website.

• Vittorio Bertocci (@vibronet) described Adding a Custom OpenID Provider to ACS… with JUST ONE LINE of PowerShell Code in a 5/19/2011 post:

ACS offers you a variety of identity providers you can integrate with. Many of you will be familiar with the list shown by the management portal at the beginning of the add new identity provider wizard.

Some of you may also know that ACS integrates with Yahoo! and Google using OpenID, however from your point of view that doesn’t matter much: the details are abstracted away by ACS.

A less-known factlet is that ACS also supports integration with other OpenID providers. However, that capability is not exposed via the portal; you can only set it up via the management APIs. We do have a tutorial which shows you how to do that step by step using myOpenID; you can find it here.

It’s not hard, that’s just OData after all, but it is still 6 printed pages. Now, how would you feel if I told you that with the ACS cmdlets you can do exactly the same in ONE line of PowerShell code? Mind == blown, right?

Here we go:

PS C:\Users\vittorio\Desktop> Add-IdentityProvider -Namespace "myacsnamespace" -ManagementKey "XXXXXXXX" -Type "Manual" -Name "myOpenID" -Protocol OpenId -SignInAddress "http://www.myopenid.com/server"

That’s it! With -Type "Manual" I can explicitly create any IP type. In order to maintain my boast that one line of code is enough, I used the inlined syntax for passing the namespace and the management key directly. In the announcement post I first obtained a management token with Get-AcsManagementToken, assigned it to a variable and passed it along for all subsequent commands, which is more appropriate for longer scripts (hence from now on I’ll use it instead).

That did the equivalent of the tutorial: however, that’s not enough to use myOpenID with the application yet. We still need to create rules that will add some claims or ACS won’t even send a token back. Luckily, that’s just another line of PowerShell code:

PS C:\Users\vittorio\Desktop> Add-Rule -MgmtToken $mgmtToken -GroupName "Default Rule Group for myRP" -IdentityProviderName "myOpenID"

Here I didn’t specify any input or output claim, which substantially ends up in a pass-through rule. NOW we’re ready! Let’s see what happens if I hit F5 on a plain vanilla Windows Azure webrole project where I added the SecurityTokenDisplayControl (you can find the VS2010 version in the identity training kit labs about ACS).

Oh hello myOpenID option! It’s there, good sign. Let’s hit it.

As expected, we end up on the auth page of openID. Once successfully authenticated, we get to the consent page:

Note that the consent does not mention any attributes, this fact will become relevant in a moment. Let’s click continue and…

congratulations! You just added an arbitrary OpenID provider, and all it took was just 2 lines of PowerShell (without even touching your application or opening the ACS management portal).

Now, you may notice one thing about this transaction: we got an awfully low amount of information about the user, just the OpenID handle in fact. I am not very deep in OpenID, I’ll readily admit. Luckily Oren, Chao and Andrew from the ACS team came to the rescue (thank you guys) and explained that ACS gets claims in OpenID via Attribute Exchange, which myOpenID does not support (they use Simple Registration).

Bummer! I really wanted to show passing name and email. Luckily adding another OpenID provider which supports AX is just a matter of hitting the up arrow a couple of times in the PowerShell ISE and changing the name and sign-in address accordingly. In the end I settled with http://hyves.net, since I was just recently in the Netherlands:

Add-IdentityProvider -MgmtToken $mgmtToken -Type "Manual" -Name "hyves.net OpenID" -Protocol OpenId -SignInAddress https://openid.hyves-api.nl/

Add-Rule -MgmtToken $mgmtToken -GroupName "Default Rule Group for myRP" -IdentityProviderName "hyves.net OpenID"

Another F5…

…and the new option for hyves.net shows up. Good! Let’s hit it.

We get to their auth page. Let’s log in, we’ll get to the consent page.

Now this looks more promising. Hyves.net asks permission to share the email address with the ACS endpoint, as expected. Let’s grant it and see what happens.

Bingo! This time ACS (hence the RP) got the name and email claims, just like I wanted.

Soo, let me recap. I just enabled users from two arbitrary OpenID providers to authenticate with my application; and all it took was writing two commands in the window below to provision the first provider, then modifying those two commands for provisioning the second. We are talking minutes here, and just because I am not a very good typist nor an expert in PowerShell.

I know it’s bad form that I am the one saying it: but isn’t this really awesome? Come on, do something with the cmdlets too! I am super-curious to see what you guys will be able to accomplish.

The AppFabric Team announced New Sample Access Control Cmdlets Available in a 5/17/2011 post to the team blog:

As announced at TechEd North America we have released PowerShell cmdlets which wrap the management API of Windows Azure AppFabric Access Control service.

Read about this in the blog post by Vittorio Bertocci. [See below.]

This is a very useful addition to your Access Control service management options that makes it easy to streamline and automate management actions.

As a reminder, we recently released major enhancements to the Access Control service which make it easy to enable single sign-on (SSO) in web applications.

In addition, we have a promotion period in which we are not charging for Access Control usage for billing periods before January 1st, 2012.

If you have not signed up for Windows Azure AppFabric and would like to start using Access Control, be sure to take advantage of our free trial offer. Just click on the image below and get started today!

Vittorio Bertocci (@vibronet) posted Announcing: Sample ACS Cmdlets for the Windows Azure AppFabric Access Control Service on 5/17/2011:

Long story short: we are releasing on Codeplex a set of PowerShell cmdlets which wrap the management API of the Windows Azure AppFabric Access Control Service.

This is hopefully for the joy of our IT admin friends who want to add ACS to their arsenal, but I bet that this will make many developers happy as well. I’ve never really used PowerShell before, and I’m using those cmdlets like crazy since the very first internal drop!

You can use those new cmdlets to save repetitive provisioning processes in the form of PowerShell scripts, and consistently reuse them just by passing as parameters the targeted namespace and corresponding management key. You can use them for backing up your namespace settings to a file and restoring them at a later time, or for copying settings from one namespace to another. You can easily integrate ACS management in your existing scripts, or even just use the cmdlets to perform quick queries and adjustments to your namespace directly from PowerShell ISE or the command line. In fact, you can do whatever you are used to doing with PowerShell cmdlets.

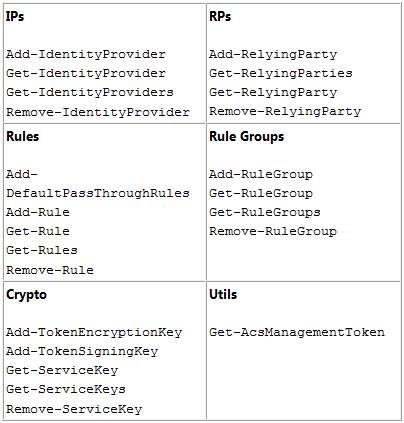

The initial set we are releasing today is not 100% exhaustive, for example we don’t touch the service identities yet, but it already enables most of the scenarios we encountered. The command names are self-explanatory:

There’s just 23 of them, and we might shrink them further in the future. For example: do we really need an Add-DefaultPassThroughRules cmdlet or can we just rely on Add-Rule? You tell us!

All cmdlets support get-help including the -Full option, although things are not too verbose at the moment: in subsequent releases we’ll tidy things up, but we wanted to put this in your hands ASAP.

Now for the usual disclaimer: Those cmdlets are distributed in source code form and are not part of the product. You should consider them a code sample, even if we provide you with a setup that will automatically compile and install them so that you can use them without ever opening the project in Visual Studio if you don’t want to. Of course we are happy to take your feedback, especially now that the package is still a bit rough on the edges, but you should always remember that those cmdlets are unsupported.

Other disclaimer: this release has been thoroughly tested only on Windows 7 x64 SP1, and quickly tested on Windows 7 x86 SP1 and Windows 2008 R2 x64 SP1. There are known problems on older platforms, which we’ll iron out moving forward. Think of this release as a preview.

That said, I am sure you’ll have a lot of fun using the cmdlets for exploring the features that ACS offers.

Some More Background, and One Example

If you want to manage your ACS namespaces, there’s no shortage of options: you can take advantage of the management portal (new in 2.0) or you can use the OData API exposed via management service.

In my team we make pretty heavy use of ACS, both for our internal tooling (for example managing content and events) and for the samples, demos and hands on lab we produce.

In order to enable the scenario we want to implement, at setup time all of those deliverables require us to go through fixed sets of configuration steps in ACS. For example, when you use the template in the Windows Azure Toolkit for Windows Phone 7 to generate an ACS-ready project, the initialization code needs to:

- Add Google as an IP

- Add Yahoo! as an IP

- Remove any RP which may collide with the new one

- Create the new RP

- Get rid of all the rules which may already be in the rule group we are targeting

- Generate all the pass-through rules for the various IPs

This is a relatively simple sequence of operations; other setups we have to do, like the enterprise subscription provisioning flow we follow when we handle a new FabrikamShipping subscriber, are WAY more complicated.

In order to automate those processes, we progressively populated a class library of C# wrappers for the ACS management APIs. Then we started including that library in the Setup folder of various projects, together with a console app which calls those wrappers in the sequence that the specific sample being set up dictates; for example, the sequence described above for the Windows Azure toolkit for Windows Phone 7.

In that specific case, the setup solution (it’s C:\WAZToolkitForWP7\Setup\acs\AcsSetup.sln if you have the toolkit and you are curious) is almost 580 lines of code.

Now, multiply that by all the projects we have (for the newest ones see this post) and the number starts to look significant. Add it to the frequent requests we get from customers to extend the cmdlets we created for Windows Azure to other services in the Windows Azure platform, and you’ll see why we decided to create a set of cmdlets for ACS.

Quite frankly, it was also because it was a low hanging fruit for us. We already had our wrapper library for the ACS management API, and we had the cmdlets wrapper solution we used for generating the Windows Azure cmdlets; putting the two together was pretty straightforward.

Once we had the right set of cmdlets we went ahead and re-created the sequence above in the form of a PowerShell script, and the improvement with respect to the AcsSetup.sln approach is impressive. Check it out:

# Coordinates of your namespace

$acsNamespace = "<yourNamespace>";

$mgmtKey = "<yourManagementKey>";

# Constants

$rpName = "WazMobileToolkit";

$groupName = "Default Rule Group for $rpName";

$signingSymmetricKey = "2RGYmQiFT9uslnxTTUn9MFr/nU+HeVwkmMJ6MwBNGuQ=";

$allowedIdentityProviders = @("Windows Live ID","Yahoo!", "Google");

# Include ACS Management SnapIn

Add-PSSnapin ACSManagementToolsSnapIn;

# Get the ACS management token for securing all subsequent API calls

$mgmtToken = Get-AcsManagementToken -namespace $acsNamespace -managementKey $mgmtKey;

# Configure Preconfigured Identity Providers

Write-Output "Add PreConfigured Identity Providers (Google and Yahoo!)...";

$googleIp = Add-IdentityProvider -mgmtToken $mgmtToken -type "Preconfigured" -preconfiguredIPType "Google";

$yahooIp = Add-IdentityProvider -mgmtToken $mgmtToken -type "Preconfigured" -preconfiguredIPType "Yahoo!";

# Remove RP (if it already exists)

Write-Output "Remove Relying Party ($rpName) if exists...";

Remove-RelyingParty -mgmtToken $mgmtToken -name $rpName;

# Remove All Rules In Group (if they already exist)

Write-Output "Remove All Rules In Group ($groupName) if exists...";

Get-Rules -mgmtToken $mgmtToken -groupName $groupName | ForEach-Object { Remove-Rule -mgmtToken $mgmtToken -rule $_ };

# Create Relying Party

Write-Output "Create Relying Party ($rpName)...";

$rp = Add-RelyingParty -mgmtToken $mgmtToken -name $rpName -realm "uri:wazmobiletoolkittest" -tokenFormat "SWT" -allowedIdentityProviders $allowedIdentityProviders -ruleGroup $groupName -signingSymmetricKey $signingSymmetricKey;

# Generate default pass-through rules

Write-Output "Create Default Passthrough Rules for the configured IPs ($allowedIdentityProviders)...";

$rp.IdentityProviders | ForEach-Object { Add-DefaultPassthroughRules -mgmtToken $mgmtToken -groupName $groupName -identityProviderName $_.Name }

Write-Output "Done";

Excluding the comments (but counting the Write-Output) those are 20 lines of very understandable code, which you can modify in notepad (typically just for the namespace and namespace key) and run with a simple double-click; or, if you are fancy, you can open it up in PowerShell ISE and execute it line by line if you want to. Does it show that I am excited about this?

Let’s play a bit more. Let’s say that you now want to add Facebook as an identity provider. First you’ll need to add some config values at the beginning of the script:

$fbAppIPName = "Facebook IP";

$fbAppId = "XXXXXXXXXXXXX";

$fbAppSecret = "XXXXXXXXXXXXX";

We can even be fancy and subordinate the Facebook setup to the existence of non-empty Facebook app coordinates in the script:

$facebookEnabled = (($fbAppId -ne "") -and ($fbAppSecret -ne ""));

Then we just add those few lines right where we create the preconfigured IPs:

# Configure Facebook App Identity Provider

if ($facebookEnabled)

{

Write-Output "Add Facebook App Identity Provider ($fbAppIPName)...";

# Remove FB App IP (if exists)

Remove-IdentityProvider -mgmtToken $mgmtToken -name $fbAppIPName;

# Add FB App IP

$fbIp = Add-IdentityProvider -mgmtToken $mgmtToken -type "FacebookApp" -name $fbAppIPName -fbAppId $fbAppId -fbAppSecret $fbAppSecret;

}

Super straightforward; and the part that I love is that you can just test those commands one by one and see the results immediately, saving them in the script only when you are certain they do what you want them to do. For management tasks, it definitely beats fiddling with the debugger and the immediate window.

Want to play a bit more? Sure. One thing I often need to do is wiping a namespace clean after I did a demo during a session. Sometimes I have many sessions in a day, from time to time even back to back: as you can imagine, clicking around the portal for deleting entities is not fun nor very fast. But now I can just double click on the following script and I am done!

$acsNamespace = "holacsfederation";

$mgmtKey = "XXXXXXXXXXXXXXXXXXXX";

# Include ACS Management SnapIn

Add-PSSnapin ACSManagementToolsSnapIn;

$mgmtToken = Get-AcsManagementToken -namespace $acsNamespace -managementKey $mgmtKey;

Write-Output "Wiping IPs (and associated rules)";

Get-IdentityProviders -mgmtToken $mgmtToken | where {$_.SystemReserved -eq $false} | ForEach-Object { Remove-IdentityProvider -mgmtToken $mgmtToken -name $_.Name };

Write-Output "Wiping RPs (and associated rules)";

Get-RelyingParties -mgmtToken $mgmtToken | where {$_.SystemReserved -eq $false} | ForEach-Object { Remove-RelyingParty -mgmtToken $mgmtToken -name $_.Name };

Write-Output "Wiping Rule Groups";

Get-RuleGroups -mgmtToken $mgmtToken | where {$_.SystemReserved -eq $false} | ForEach-Object { Remove-RuleGroup -mgmtToken $mgmtToken -name $_.Name };

Here I delete all IPs (which will delete the associated rules), all RPs and all rule groups. All three commands have the same structure. Let’s pick the IP one:

Get-IdentityProviders -mgmtToken $mgmtToken |

where {$_.SystemReserved -eq $false} |

ForEach-Object { Remove-IdentityProvider -mgmtToken $mgmtToken -name $_.Name };

Get-IdentityProviders returns all IPs in the namespace; the where clause excludes the system reserved ones (Windows Live ID) which we’d be unable to delete anyway, then the ForEach-Object cycles through the remaining IPs and removes them. You’ve got to love PowerShell piping.

Well, this barely scratches the surface of what you can do with the ACS cmdlets. Please do check them out! We look forward to your feedback, and for once not just from developers!

The AppFabric Team posted An Introduction to Service Bus Queues on 5/17/2011:

In the new May CTP of Service Bus, we’re adding a brand-new set of cloud-based, message-oriented-middleware technologies including reliable message queuing and durable publish/subscribe messaging. We’ll walk through the full set of capabilities over a series of blog posts but I’m going to begin by focusing on the basic concepts of the message queuing feature. This post will explain the usefulness of queues and show how to write a simple queue-based application using Service Bus.

Let’s consider a scenario from the world of retail in which sales data from individual Point of Sale (POS) terminals needs to be routed to an inventory management system which uses that data to determine when stock needs to be replenished. I’m going to walk through a solution that uses Service Bus messaging for the communication between the terminals and the inventory management system as illustrated below:

Each POS terminal reports its sales data by sending messages to the DataCollectionQueue. These messages sit in this queue until they are received by the inventory management system. This pattern is often termed asynchronous messaging because the POS terminal doesn’t need to wait for a reply from the inventory management system to continue processing.

Why Queuing?

Before we look at the code required to set up this application, let’s consider the advantages of using a queue in this scenario rather than having the POS terminals talk directly (synchronously) to the inventory management system.

Temporal decoupling

With the asynchronous messaging pattern, producers and consumers need not be online at the same time. The messaging infrastructure reliably stores messages until the consuming party is ready to receive them. This allows the components of the distributed application to be disconnected, either voluntarily, e.g., for maintenance, or due to a component crash, without impacting the system as a whole. Furthermore, the consuming application may only need to come online during certain times of the day. In this retail scenario, for example, the inventory management system may only need to come online after the end of the business day.

Load leveling

In many applications system load varies over time whereas the processing time required for each unit of work is typically constant. Intermediating message producers and consumers with a queue means that the consuming application (the worker) only needs to be provisioned to accommodate average load rather than peak load. The depth of the queue will grow and contract as the incoming load varies. This directly saves money in terms of the amount of infrastructure required to service the application load.
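The load-leveling argument can be made concrete with a small simulation. The sketch below is illustrative only and does not use Service Bus: the arrival pattern and the worker capacity of 4 messages per tick are made-up numbers, and `simulate_queue_depth` is a hypothetical helper. It shows a worker sized slightly above the average load absorbing a burst well above its capacity, with the queue depth growing and then contracting.

```python
def simulate_queue_depth(arrivals, capacity_per_tick):
    """Return the queue depth after each tick for a fixed-capacity worker."""
    depth = 0
    depths = []
    for arriving in arrivals:
        depth += arriving                       # producers enqueue this tick's messages
        depth -= min(depth, capacity_per_tick)  # worker drains at most its capacity
        depths.append(depth)
    return depths


# Hypothetical load: a burst of 10 messages per tick, then quieter periods.
arrivals = [10, 10, 10, 0, 0, 0, 2, 2, 2, 0, 0, 0]
average_load = sum(arrivals) / len(arrivals)
depths = simulate_queue_depth(arrivals, capacity_per_tick=4)

print("peak arrivals per tick:", max(arrivals))
print("average arrivals per tick:", average_load)
print("queue depth per tick:", depths)
```

The worker never handles more than 4 messages per tick even though producers peak at 10, and the backlog still drains completely once the burst has passed.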

Load balancing

As load increases, more worker processes can be added to read from the work queue. Each message is processed by only one of the worker processes. Furthermore, this pull-based load balancing allows for optimum utilization of the worker machines even if the worker machines differ in terms of processing power as they will pull messages at their own maximum rate. This pattern is often termed the competing consumer pattern.
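As a rough illustration of the competing consumer pattern, the following sketch uses Python's standard `queue.Queue` and threads as a stand-in for a Service Bus queue and worker processes. The function name `run_competing_consumers` and the message names are assumptions made for the example. Each worker pulls at its own pace, and every message ends up with exactly one worker.

```python
import queue
import threading


def run_competing_consumers(messages, worker_count):
    """Drain one shared queue with several workers; return {worker_id: [messages]}."""
    work_queue = queue.Queue()
    for message in messages:
        work_queue.put(message)

    # Each worker appends only to its own list, so no extra locking is needed.
    processed = {worker_id: [] for worker_id in range(worker_count)}

    def worker(worker_id):
        while True:
            try:
                message = work_queue.get_nowait()  # pull the next available message
            except queue.Empty:
                return                             # queue drained, worker stops
            processed[worker_id].append(message)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return processed


processed = run_competing_consumers([f"sale-{n}" for n in range(20)], worker_count=3)
all_processed = sorted(m for batch in processed.values() for m in batch)
print(len(all_processed), "messages processed, each by exactly one worker")
```

How the 20 messages are split between the three workers varies from run to run, which is the point: faster workers simply pull more.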

Loose coupling

Using message queuing to intermediate between message producers and consumers provides an inherent loose coupling between the components. Since producers and consumers are not aware of each other, a consumer can be upgraded without having any effect on the producer. Furthermore, the messaging topology can evolve without impacting the existing endpoints – we’ll discuss this further in a later post when we talk about publish/subscribe.

Show me the Code

Alright, now let’s look at how to use Service Bus to build this application.

Signing up for a Service Bus account and subscription

Before you can begin working with the Service Bus, you’ll first need to sign-up for a Service Bus account within the Service Bus portal at http://portal.appfabriclabs.com/. You will be required to sign-in with a Windows Live ID (WLID), which will be associated with your Service Bus account. Once you’ve done that, you can create a new Service Bus Subscription. In the future, whenever you login with your WLID, you will have access to all of the Service Bus Subscriptions associated with your account.

(Note that "sign up", "sign in" and "log in" are used here as verbs; the portal URL above applies to the CTP labs environment.)

Creating a namespace

Once you have a Subscription in place, you can create a new service namespace. You’ll need to give your new service namespace a unique name across all Service Bus accounts. Each service namespace acts as a container for a set of Service Bus entities. The screenshot below illustrates what this page looks like when creating the “ingham-blog” service namespace.

Further details regarding account setup and namespace creation can be found in the User Guide accompanying the May CTP release.

Using the Service Bus

To use the Service Bus namespace, an application needs to reference the AppFabric Service Bus DLLs, namely Microsoft.ServiceBus.dll and Microsoft.ServiceBus.Messaging.dll. You can find these in the SDK that can be downloaded from the CTP download page.

Creating the Queue

Management operations for Service Bus messaging entities (queues and publish/subscribe topics) are performed via the ServiceBusNamespaceClient which is constructed with the base address of the Service Bus namespace and the user credentials. The ServiceBusNamespaceClient provides methods to create, enumerate and delete messaging entities. The snippet below shows how the ServiceBusNamespaceClient is used to create the DataCollectionQueue.

Uri baseAddress = ServiceBusEnvironment.CreateServiceUri("sb", "ingham-blog", string.Empty);

string name = "owner";

string key = "abcdefghijklmopqrstuvwxyz";

ServiceBusNamespaceClient namespaceClient = new ServiceBusNamespaceClient(

baseAddress, TransportClientCredentialBase.CreateSharedSecretCredential(name, key) );

namespaceClient.CreateQueue("DataCollectionQueue");

Note that there are overloads of the CreateQueue method that allow properties of the queue to be tuned, for example, to set the default time-to-live to be applied to messages sent to the queue.

Sending Messages to the Queue

For runtime operations on Service Bus entities, i.e., sending and receiving messages, an application first needs to create a MessagingFactory. The base address of the ServiceBus namespace and the user credentials are required.

Uri baseAddress = ServiceBusEnvironment.CreateServiceUri("sb", "ingham-blog", string.Empty);

string name = "owner";

string key = "abcdefghijklmopqrstuvwxyz";

MessagingFactory factory = MessagingFactory.Create(

baseAddress, TransportClientCredentialBase.CreateSharedSecretCredential(name, key) );

From the factory, a QueueClient is created for the particular queue of interest, in our case, the DataCollectionQueue.

QueueClient queueClient = factory.CreateQueueClient("DataCollectionQueue");

A MessageSender is created from the QueueClient to perform the send operations.

MessageSender ms = queueClient.CreateSender();

Messages sent to, and received from, Service Bus queues are instances of the BrokeredMessage class which consists of a set of standard properties (such as Label and TimeToLive), a dictionary that is used to hold application properties, and a body of arbitrary application data. An application can set the body by passing in any serializable object into CreateMessage (the example below passes in a SalesData object representing the sales data from the POS terminal) which will use the DataContractSerializer to serialize the object. Alternatively, a System.IO.Stream can be provided.

BrokeredMessage bm = BrokeredMessage.CreateMessage(salesData);

bm.Label = "SalesReport";

bm.Properties["StoreName"] = "Redmond";

bm.Properties["MachineID"] = "POS_1";

ms.Send(bm);

Receiving Messages from the Queue

Messages are received from the queue using a MessageReceiver which is also created from the QueueClient. MessageReceivers can work in two different modes, named ReceiveAndDelete and PeekLock. The mode is set when the MessageReceiver is created, as a parameter to the CreateReceiver operation.

When using the ReceiveAndDelete mode, receive is a single-shot operation, that is, when Service Bus receives the request, it marks the message as being consumed and returns it to the application. ReceiveAndDelete mode is the simplest model and works best for scenarios in which the application can tolerate not processing a message in the event of a failure. To understand this, consider a scenario in which the consumer issues the receive request and then crashes before processing it. Since Service Bus will have marked the message as being consumed then when the application restarts and begins consuming messages again, it will have missed the message that was consumed prior to the crash.

In PeekLock mode, receive becomes a two stage operation which makes it possible to support applications that cannot tolerate missing messages. When Service Bus receives the request, it finds the next message to be consumed, locks it to prevent other consumers receiving it, and then returns it to the application. After the application finishes processing the message (or stores it reliably for future processing), it completes the second stage of the receive process by calling Complete on the received message. When Service Bus sees the Complete, it will mark the message as being consumed.

Two other outcomes are possible. First, if the application is unable to process the message for some reason, it can call Abandon on the received message (instead of Complete). This causes Service Bus to unlock the message and make it available to be received again, either by the same consumer or by another competing consumer. Second, there is a timeout associated with the lock, and if the application fails to process the message before the lock timeout expires (e.g., if the application crashes), Service Bus unlocks the message and makes it available to be received again.
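The two receive modes and the lock outcomes described above can be modeled with a small in-memory queue. This is only a sketch, written in Python for brevity, and it is not the Service Bus API: ToyQueue, Message, the injectable clock, and the 30-second lock duration are all assumptions made for illustration.

```python
import time


class Message:
    """A queued item plus the time until which it is locked (0.0 means unlocked)."""

    def __init__(self, body):
        self.body = body
        self.locked_until = 0.0


class ToyQueue:
    """In-memory model of the two receive modes; not the Service Bus API."""

    def __init__(self, lock_seconds=30.0, clock=time.monotonic):
        self._messages = []              # messages in arrival order
        self._lock_seconds = lock_seconds  # assumed lock timeout
        self._clock = clock              # injectable so tests can control time

    def send(self, body):
        self._messages.append(Message(body))

    def _next_unlocked(self):
        now = self._clock()
        for message in self._messages:
            if message.locked_until <= now:
                return message
        return None

    def receive_and_delete(self):
        """Single-shot receive: the message is consumed as soon as it is handed out."""
        message = self._next_unlocked()
        if message is None:
            return None
        self._messages.remove(message)
        return message.body

    def peek_lock(self):
        """Stage one: lock the next available message but leave it in the queue."""
        message = self._next_unlocked()
        if message is not None:
            message.locked_until = self._clock() + self._lock_seconds
        return message

    def complete(self, message):
        """Stage two: mark the message as consumed by removing it."""
        self._messages.remove(message)

    def abandon(self, message):
        """Release the lock so that any consumer can receive the message again."""
        message.locked_until = 0.0
```

In this model a consumer that crashes after peek_lock simply never calls complete, so the message becomes receivable again once the clock passes locked_until. A consumer that crashes after receive_and_delete has already lost the message.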

One thing to note here is that if the application crashes after processing the message but before the Complete request is issued, the message will be redelivered to the application when it restarts. This is often termed At Least Once processing; that is, each message will be processed at least once, but in certain situations the same message may be redelivered. If the scenario cannot tolerate duplicate processing, the application needs additional logic to detect duplicates. It can do so using the MessageId property of the message, which remains constant across delivery attempts. This is termed Exactly Once processing.
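Duplicate detection keyed on the message ID can be sketched as follows. This is a minimal illustration in Python, not Service Bus code: IdempotentProcessor and its in-memory set of seen IDs are hypothetical, and a real application would need to keep the seen IDs in durable storage so that they survive the very crash that causes redelivery.

```python
class IdempotentProcessor:
    """Runs a handler at most once per message ID (hypothetical helper)."""

    def __init__(self, handler):
        self._handler = handler
        # Assumption: an in-memory set stands in for durable storage.
        self._seen_ids = set()

    def process(self, message_id, body):
        """Handle the message unless its ID was already handled.

        Returns True if the handler ran, False for a redelivered duplicate.
        """
        if message_id in self._seen_ids:
            return False
        self._handler(body)
        # Record the ID only after the handler succeeds, so that a failed
        # attempt can still be retried on redelivery.
        self._seen_ids.add(message_id)
        return True


# Usage: the second delivery of "id-1" is recognized and skipped.
handled = []
processor = IdempotentProcessor(handled.append)
processor.process("id-1", "sales report A")
processor.process("id-1", "sales report A")
processor.process("id-2", "sales report B")
```

Note that a crash between running the handler and recording the ID would still produce a duplicate, so the handler's side effects and the ID record ideally need to be committed together.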

Back to the code, the snippet below illustrates how a message can be received and processed using the PeekLock mode which is the default if no ReceiveMode is explicitly provided.

MessageReceiver mr = queueClient.CreateReceiver();

BrokeredMessage receivedMessage = mr.Receive();

try

{

ProcessMessage(receivedMessage);

receivedMessage.Complete();

}

catch (Exception e)

{

receivedMessage.Abandon();

}

Wrapping up and request for feedback

Hopefully this post has shown you how to get started with the queuing feature being introduced in the new May CTP of Service Bus. We’ve only really just scratched the surface here; we’ll go into more depth in future posts.

Finally, remember one of the main goals of our CTP release is to get feedback on the service. We’re interested to hear what you think of the Service Bus messaging features.

We’re particularly keen to get your opinion of the API, for example, do you think it makes sense to have PeekLock be the default mode for receivers? We have a survey for that question.

For other suggestions, critique, praise, or questions, please let us know at the AppFabric CTP forum. Your feedback will help us improve the service for you and other users like you.

The AppFabric Team announced New Service Bus and AppFabric Videos Available in a 5/17/2011 post:

As promised, we have released new videos as part of our Windows Azure AppFabric Learning Series available on CodePlex.

The new videos cover the capabilities that enable advanced pub/sub messaging in Service Bus, which have been released as a CTP, and the capabilities that enable you to compose and manage applications with AppFabric, which were announced at TechEd.

More videos and accompanying code samples will be released soon, so keep checking back!

The following videos and code samples are currently available or are coming soon:

We hope the learning series helps you better understand what AppFabric is and how to use it.

The enhancements to Service Bus are available on our LABS previews environment at: http://portal.appfabriclabs.com/. So be sure to log in and start checking out these new capabilities. Please remember that there are no costs associated with using the CTPs, but they are not backed by any SLA.

If you haven’t signed up for Windows Azure AppFabric you can take advantage of our free trial offer. Just click on the image below and get started today!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

The Windows Azure Connect Team explained Windows Azure Connect–Certificate Based Endpoint Activation in a 5/6/2011 post (missed when posted):

If you have deployed a Windows Azure Connect endpoint before, you know that the endpoint is required to present an activation token (which you can get from the Windows Azure Management Portal) for activation. This activation token can be specified in the .cscfg file for Windows Azure Roles (this can also be done via Visual Studio). For the endpoints that live on your corporate network (local endpoints), the activation token is part of the install link. We are happy that you like the ease of use and simplicity of this approach. We also heard some of you request an option for secure activation.

To address this feedback, we introduced certificate-based activation in our latest CTP Refresh. You can now choose to use the existing activation model (token based only; this is the default) or certificate-based activation (token + certificate). In this refresh, certificate-based activation is only available for local endpoints.

If you already have a PKI and/or a mechanism to securely distribute X509 certificates (private + public key pairs) to the endpoints within your organization, you are just a few steps away from benefiting from this new feature:

1. On your corporate network, pick a Certificate Issuer that issues certificates to endpoints via manual/auto-enrollment policies.

For example, the above snapshot shows a machine that receives its certificate from the issuer with CN=SecIssuer. In this case, the public key (.cer file) of CN=SecIssuer will need to be exported and saved for step 3 below.

Note: If you have a deeper PKI hierarchy (example: CN=RootIssuer -> CN=SecIssuer -> CN=myendpoint), make sure you export the public key of the direct/immediate issuer, i.e., CN=SecIssuer.

2. From the Windows Azure Management Portal, click “Activation Options” as shown in the snapshot below.

3. This will bring up the certificate endpoint activation dialog (shown in the snapshot below):

a. Check the box that says “Require endpoints to use trusted certificate for activation”.

b. Click the “Add” button and choose the certificate file (.cer file with public key only) from Step 1 above.

4. At this point, all the new endpoints (excluding Azure roles) in this subscription will be required to prove their strong identity via the possession of a certificate issued by the issuer in step 1 above. The endpoint must have private keys for this certificate, but there is no requirement for the subject name to match the endpoint’s FQDN or hostname (example: CN=myendpoint can be used on a machine with name ContosoHost.Corp.AdventureWorks.com).

5. If you run into activation issues with this model, you can troubleshoot by checking the event viewer for any error messages such as below:

a. Verify that you have a certificate in the Local Computer\Personal\Certificates store. This certificate should have been directly issued by the issuer in step 1 above.

b. Verify that there is a private key for this certificate.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Wade Wegner (@wadewegner) announced VB.NET and Bug Fixes for Windows Azure Toolkit for Windows Phone 7 (v1.2.1) on 5/19/2011:

Today’s release of the Windows Azure Toolkit for Windows Phone 7 (v1.2.1) has two important updates:

- Support for Visual Basic

- Bug fixes

You can download the latest drop here: http://watoolkitwp7.codeplex.com/releases/view/61952

Visual Basic

When we released on Monday we did not include updated project templates for Visual Basic – turns out that creating these project templates takes a long time, and we did not have enough cycles to complete it in time. However, we’ve had a few additional days to finish this work, and we’re now providing Visual Basic support – this means you can use this toolkit with Visual Basic and benefit from all the updates provided as part of the 1.2 release.

Bug Fixes

We also fixed a few bugs in this release. Many thanks to those of you who helped by reporting them so quickly!

- [Fixed] The VS on-screen documentation for "Windows Phone 7 Empty Cloud App" template is missing.

- [Fixed] Modify VSIX installation scripts to avoid errors when users have PowerShell Profile Scripts.

- [Fixed] The CopyLocal property of the Microsoft.IdentityModel assembly is set to false in the sample and project template solutions. It has now been set to true.

- When creating a new project using the project template wizard:

- [Fixed] If the user sets an invalid ACS Namespace and Management Key, the application shows a non-descriptive error and generates an inconsistent solution.

- [Fixed] Using a real Azure Storage account and not selecting Use HTTPS option makes the generated solution fail when running it.

- [Fixed] An error is displayed when creating a new ‘Windows Phone Cloud Application’ project in Visual Web Developer 2010 Express.

- [Fixed] An error is displayed when creating a new ‘Windows Phone Cloud Application’ project in Visual Studio 2010 Express for Windows Phone.

- [Fixed] The value of the PushServiceName setting is not replaced in the code generated with the project templates.

- [Fixed] The ACS sample was shipped with an invalid configuration and wrong instructions in the Readme document to configure them appropriately.

- [Fixed] The ApplePushNotification class is not used in the samples nor by the code generated with the project templates.

- [Fixed] The solutions for VWD and VPD do not build since some projects are missing.

- [Fixed] The error message shown in the ‘login’ page when there are connectivity issues is non-descriptive.

Again, many thanks to those of you who helped by reporting these bugs. Keep the feedback coming!

Brent Stineman (@BrentCodeMonkey) posted Windows Azure Endpoints – Overview on 5/18/2011:

You ever have those days where you wake up, look around, and wonder how you got where you are? Or better yet, what the heck you’re doing there? This pretty much sums up the last 6 months or so of my career. I have recently realized that I’ve been doing so much Windows Azure evangelizing (both internally and externally) as well as working on actual projects and potential projects that I haven’t written a single technical blog post in almost 5 months (since my Azure App Fabric Management Service piece in November).

So I have a digital copy of Tron Legacy running in the background as I sit down to write a bit on something that I have found confusing. And judging by the questions I’ve been seeing on the MSDN forums, I’m likely not alone. So I thought I’d share my findings about Windows Azure Endpoints.

For every ending, there is a beginning

Originally, back in the CTP days, Windows Azure did not allow you to connect directly to a worker role. The “disconnected communications” model was pure. The problem with purity is that it’s often limiting. So when the platform moved from CTP to its production form in November of ’09, they introduced a new concept, internal endpoints.

Before this change, input endpoints were how we declared the ports that web roles would expose indirectly via a load balancer. Now with internal endpoints, we could declare one or more ports on which we could directly access the individual instances of our services. And they would work for ANY type of role.

It was a bit of a game-changer. We were now able to reduce latency by allowing direct communication between roles. It was a nice and necessary addition.

What does internal mean?

The question of “what does internal mean” is something I struggled with. I couldn’t find a clear answer as to whether internal endpoints could be reached from outside of Windows Azure, or more specifically outside of the boundary created by a hosted service.