Windows Azure and Cloud Computing Posts for 1/28/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and ADO.NET Entity Framework

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

Christian Weyer explained Querying Windows Azure Table Storage with LINQPad in a 1/28/2011 post:

Last week I wanted to quickly query and test data from one of my Windows Azure Table Storage tables. I did not want to use a storage tool as I wanted to have more fine-grained query control – and I sure did not want to write a custom program in Visual Studio.

What I ended up with is using the wonderful LINQPad to query Azure Table Storage. How does this work?

- Add the necessary DLLs to LINQPad by opening Query Properties from the Query menu. Then add references to these assemblies:

  - System.Data.Services.Client.dll

  - Microsoft.WindowsAzure.StorageClient.dll

  - <all assemblies you need for querying table storage via your custom DataServiceContext />

- Next we need to import the .NET namespaces we need in our code. In my case it looks like this:

Now we can use a C# program snippet like the following to successfully query our table(s):

    void Main()
    {
        var accountName = "…";
        var sharedKey = "…";

        var storageCredentials = new StorageCredentialsAccountAndKey(accountName, sharedKey);
        var storageAccount = new CloudStorageAccount(storageCredentials, true);
        var storageService = new MeldungenDataServiceContext(
            storageAccount.TableEndpoint.ToString(), storageCredentials);

        var competitions =
            from competition in storageService.Wettkaempfe
            select competition;

        competitions.Dump();
    }

Just to prove it:

Nice.

Note: make sure to spend a few bucks on LINQPad to get full IntelliSense – this just rocks.

See Wade Wegner (@WadeWegner) described Using Expression Encoder 4 in a Windows Azure Worker Role and provided links to a live Azure demo project on 1/27/2011 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below, which uses blob storage for the source and destination video clips.


SQL Azure Database and Reporting

Christian Weyer explained Automating backup of a SQL Azure database to Azure Blob Storage with the help of PowerShell and Task Scheduler in a 1/28/2011 post:

OK, I am being a bit lazy on this one. I am not writing any custom PowerShell CmdLets here but use the powerful Azure Management CmdLets.

- After you have downloaded the trial version of the CmdLets, make sure you have followed the setup instructions outlined in the “Azure Management Cmdlets - Getting Started Guide” document (which can be found in the installation folder of the CmdLets).

- Start PowerShell (this has been tested with PS 2.0 which is installed by default on Windows 7 and Windows Server 2008 R2).

After having registered the CmdLets (again please see “Azure Management Cmdlets - Getting Started Guide”) you can run a backup script using the SQL Azure backup CmdLet (there are tons more in the package!).

Here is a sample script:

    ##########################################################################################
    ## Powershell script to backup a SQL Azure database by downloading its tables data to  ##
    ## local disk using "bcp" utility and uploading these data files to Azure Blob Storage. ##
    ##########################################################################################

    # Name of your SQL Azure database server. Please specify just the name of the database server.
    # For example if your database server name is "abcdefgh", just specify that
    # and NOT "tcp:abcdefgh.database.windows.net" or "abcdefgh.database.windows.net"
    $databaseServerName = "<your_sql_azure_server_name>";

    # User name to connect to database server. Please specify just the user name.
    # For example if your user name is "myusername", just specify that
    # and NOT "myusername@myservername".
    $userName = "<your_sql_azure_user_name>";

    # Password to connect to database server.
    $password = "<your_sql_azure_user_password>";

    # Name of the database e.g. mydatabase.
    $databaseName = "<your_sql_azure_database_name>";

    # Download location where the contents will be saved.
    $downloadLocation = "c:\Temp\SQLAzure";

    # Windows Azure Storage account name to use to store the backup files.
    $storageAccount = "<your_windows_azure_storage_account_name>";

    # Windows Azure Storage account primary key.
    $storageKey = "<your_windows_azure_storage_account_key>";

    # Blob container to use for storing the SQL Azure backup files.
    $storageContainer = "sqlazurebackup";

    # The backticks continue the single Backup-Database command across lines.
    Backup-Database -Name $databaseName -DownloadLocation $downloadLocation `
        -Server $databaseServerName -Username $userName -Password $password `
        -SaveToBlobStorage -AccountName $storageAccount -AccountKey $storageKey `
        -BlobContainerName $storageContainer -CompressBlobs

The above script first backs up the data in all tables to a local temporary folder and then uploads the data in compressed form into blob storage. When everything worked successfully you will see something like this:

Make sure to verify with a storage explorer tool that your backup files actually ended up in blob storage – e.g.:

Last but not least, you could create a recurring task via Windows task scheduler to periodically run your backup PS script (e.g. http://blog.pointbeyond.com/2010/04/23/run-powershell-script-using-windows-server-2008-task-scheduler/). I am not showing the details here – should be pretty straight-forward.


Marketplace DataMarket and OData

Darren Liu explained how to Retrieve Records [by] Filtering on an Entity Reference Field Using OData in a 1/27/2011 post to his MSDN blog:

I ran into a problem today trying to retrieve records filtering on an Entity Reference field using the OData endpoint. Thanks to Jim Daly for helping me. An Entity Reference field contains several properties, and Id is one of them. Usually when you are filtering using OData, all you have to do is use the keyword $filter, the search field, an operator and the value.

For example, to retrieve all the accounts where address1_city equals Redmond, you’ll do the following:

    /AccountSet?$filter=Address1_City eq 'Redmond'

However, retrieving by an Entity Reference field is a little bit different. To access the properties of an Entity Reference field, you just need to use the forward slash “/” followed by the property name.

For example, to retrieve all the accounts where primarycontactid equals {guid}, you have to do the following:

    /AccountSet?$filter=PrimaryContactId/Id eq (Guid'567DAA8F-BE0E-E011-B7C7-000C2967EE46')

I hope this helps in your next project.
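To make the two filter shapes concrete, here is a small Python sketch that builds both query URLs. The helper names and the service root are hypothetical placeholders, not part of any SDK; only the `$filter` expressions themselves come from the post.

```python
from urllib.parse import quote

# Hypothetical service root; substitute your own OData endpoint.
SERVICE_ROOT = "https://example.invalid/odata"


def simple_filter(field, value):
    """Filter on a plain field: <Field> eq '<value>'."""
    return f"{field} eq '{value}'"


def reference_filter(field, guid):
    """Filter on an Entity Reference field by reaching its Id with '/'."""
    return f"{field}/Id eq (Guid'{guid}')"


def filter_url(entity_set, filter_expr):
    """Build the query URL, percent-encoding spaces in the filter."""
    # Keep the characters the filter syntax relies on readable.
    encoded = quote(filter_expr, safe="/'()")
    return SERVICE_ROOT + "/" + entity_set + "?$filter=" + encoded


if __name__ == "__main__":
    print(filter_url("AccountSet", simple_filter("Address1_City", "Redmond")))
    print(filter_url("AccountSet", reference_filter(
        "PrimaryContactId", "567DAA8F-BE0E-E011-B7C7-000C2967EE46")))
```

Keeping the expression builders separate from the URL encoding makes it easy to see that the only difference between the two cases is the `/Id` path segment and the `Guid'…'` literal.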


Windows Azure AppFabric: Access Control and Service Bus

Steve Plank (@plankytronixx) posted Office 365 federated access for home workers on 1/28/2011:

If you are a home worker, sat on the Internet outside of your corporate network (VPNs excepted), it’s very likely your organisation’s ADFS 2.0 server will be sat behind a mass of firewall technology that prevents you from accessing it. So how do you get access to a federated Office 365 domain?

Enter stage left: The Federation Proxy.

ADFS includes the concept of an additional service you can publish on the public Internet, so that machines outside your corporate network aren’t required to faff about with the Kerberos protocols you find inside it. Instead, you type your credentials in to a web form.

This is possible because in federation it’s the client that does all the work. The client is re-directed hither and thither, sometimes with POST data (SAML Tokens), but essentially the client is sent to a URL which it looks up, converts to an IP address and then goes to that endpoint.

This is the normal case with a client inside the corporate network. These are not the real URLs or IP addresses – just simplified examples:

1. The client looks up the Office 365 URL in its DNS Server (this process might actually be complicated by say a proxy server, NAT etc – but this is essentially the flow).

2. It connects to Office 365 at IP address 1.0.0.1.

3. The client is redirected to http://mfg.com. Before it can go there, it does a look-up (1) in DNS to get the MFG’s IP address – in this case 1.0.0.2.

4. The client goes to the MFG at 1.0.0.2.

5. The client is redirected to http://adfs.com. This is because when you set up identity federation, the MFG gets hold of the federation metadata document which has the URL, not the IP address. Before it can go there, it goes to the corporate DNS server and looks up adfs.com which returns 10.0.0.1.

6. The client goes to http://adfs.com which is at 10.0.0.1.

The story then unwinds itself as in a previous post and setup video and the Office 365 service gets the right SAML token.

Now let’s look at an external client and a federation proxy:

1. The client looks up Office 365 in the external DNS server provided (typically) by the ISP who manages their Internet connection. They get back 1.0.0.1.

2. The client connects to Office 365 as in the previous example.

3. The client is redirected to http://mfg.com as in the previous example. This time however, the client looks up the address in the external DNS server. Note the Office 365 environment and the MFG didn’t have to do anything different. All they understand are URLs. Before the client can go to the MFG it does a look up in the External DNS and gets back address 1.0.0.2 as in the previous example.

4. The client goes to 1.0.0.2, which is http://mfg.com.

5. The MFG redirects the client to http://adfs.com. The client needs to look up the IP address and goes to the external DNS. It gets back 2.0.0.1. This is different than the previous example where the internal DNS server had the address of the internally accessible ADFS Server. Although the federation server proxy has the same URL (http://adfs.com), it’s a different service on a different segment of network (the Internet facing DMZ) with a different IP address. The client doesn’t care.

6. The client goes to 2.0.0.1 (http://adfs.com) and tries to authenticate. They are presented with a Webform that asks for username and password. There is no kerberos exchange with the proxy because it’s facing the Internet, not the internal corporate network. It’s not possible in this scenario to do integrated kerberos authentication – so the next best thing is to type your corporate AD credentials in to a form.

7. The proxy talks to the real ADFS server and if the authentication is successful a SAML token is returned to the proxy. The dance unwinds itself in exactly the same way as the previous example only with the SAML token being supplied by the proxy, not the federation server.

Notice in both examples, the ADFS server and the proxy never talk directly to the MFG or Office 365. Traffic goes through the client. So as long as there is a good Internet connection between the client and each of the services (Office 365, MFG, ADFS (or proxy)), it will work.
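The two walkthroughs differ only in which DNS server the client asks. A toy Python model can show that, using the simplified addresses from the text. The lookup tables, the `office365` key and the function name are illustrative assumptions, not real endpoints or any ADFS API.

```python
# Simplified name-to-address tables taken from the example values in the text.
INTERNAL_DNS = {"office365": "1.0.0.1", "mfg.com": "1.0.0.2", "adfs.com": "10.0.0.1"}
EXTERNAL_DNS = {"office365": "1.0.0.1", "mfg.com": "1.0.0.2", "adfs.com": "2.0.0.1"}

# Each service only knows the *name* of the next hop, never its IP address.
REDIRECTS = {"office365": "mfg.com", "mfg.com": "adfs.com"}


def trace_sign_in(dns):
    """Follow the redirect chain, resolving every name with the client's DNS."""
    hops = []
    name = "office365"
    while name is not None:
        hops.append((name, dns[name]))
        name = REDIRECTS.get(name)  # None once the chain ends at adfs.com
    return hops


if __name__ == "__main__":
    for label, dns in (("internal", INTERNAL_DNS), ("external", EXTERNAL_DNS)):
        print(label, trace_sign_in(dns))
```

The redirect table is identical for both clients; only the final resolution of adfs.com changes, which is why neither Office 365 nor the MFG has to behave differently for home workers.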

It also means if there is not a good connection, then it won’t. So say if the AD DC on corp-net goes down, or the ADFS Server goes offline, or the Internet connection to the proxy fails, then there can be no authentication and the user will not be able to get to their services in Office 365. Some people have asked me “can they just use a stored password at the Office 365 end” and the answer is no. Once a federated domain is set up, it will use federated access.

If you don’t trust the stability or management of your AD or any of its component parts (DCs, ADFS Servers, ADFS proxies, Internet connections) then think very carefully about the suitability of a federated environment. In a large, well managed, corporate environment, the risks are very low. But in a small business with no specific IT function and neglected or poorly managed infrastructure – be careful. In that case – stick with MS Online IDs and passwords.


Windows Azure Virtual Network, Connect, RDP and CDN

Panagiotis Kefalidis (@pkefal) answered “No” to CDN -- Do you get corrupt data while an update on the content is happening? in a 1/28/2011 post:

A lot of interesting things have been going on lately on the Windows Azure MVP list and I'll try to pick the best ones that I can share and make some posts.

During an Azure bootcamp, another fellow Windows Azure MVP had a very interesting question: "What happens if someone is updating the BLOB and a request comes in for that BLOB to serve it?"

The answer came from Steve Marx pretty quickly and I'm just quoting his email:

"The bottom line is that a client should never receive corrupt data due to changing content. This is true both from blob storage directly and from the CDN.

The way this works is:

- Changes to block blobs (put blob, put block list) are atomic, in that there’s never a blob that has only partial new content.

- Reading a blob all at once is atomic, in that we don’t respond with data that’s a mix of new and old content.

- When reading a blob with range requests, each request is atomic, but you could always end up with corrupt data if you request different ranges at different times and stitch them together. Using ETags (or If-Unmodified-Since) should protect you from this. (Requests after the content changed would fail with “condition not met,” and you’d know to start over.)

Only the last point is particularly relevant for the CDN, and it reads from blob storage and sends to clients in ways that obey the same HTTP semantics (so ETags and If-Unmodified-Since work).

For a client to end up with corrupt data, it would have to be behaving badly… i.e., requesting data in chunks but not using HTTP headers to guarantee it’s still reading the same blob. I think this would be a rare situation. (Browsers, media players, etc. should all do this properly.)

Of course, updates to a blob don’t mean the content is immediately changed in the CDN, so it’s certainly possible to get old data due to caching. It should just never be corrupt data due to mixing old and new content."

So, as you see from Steve's reply, there is no chance to get corrupt data, unlike other vendors, only old data.
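Steve's third point, ranged reads guarded by an ETag, can be sketched in a few lines of Python. This is a minimal in-memory simulation: `FakeBlob`, `PreconditionFailed` and the `after_first_chunk` hook are hypothetical stand-ins for the storage service, an HTTP "condition not met" response and a concurrent writer, not the real storage API.

```python
class PreconditionFailed(Exception):
    """Stand-in for an HTTP 'condition not met' response."""


class FakeBlob:
    """In-memory stand-in for a blob; not the real storage API."""

    def __init__(self, data):
        self.data = data
        self.etag = 1

    def replace(self, data):
        # Updates are atomic: content and ETag change together.
        self.data = data
        self.etag += 1

    def read_range(self, start, end, if_match=None):
        # Refuse the read when the caller's ETag no longer matches.
        if if_match is not None and if_match != self.etag:
            raise PreconditionFailed()
        return self.etag, self.data[start:end]


def download(blob, chunk_size, after_first_chunk=None):
    """Read the blob in ranges, starting over if the ETag changes mid-download."""
    while True:
        etag, first = blob.read_range(0, chunk_size)
        parts = [first]
        if after_first_chunk is not None:
            after_first_chunk()  # hook used to simulate a concurrent update
            after_first_chunk = None
        try:
            offset = chunk_size
            while parts[-1]:  # an empty chunk means we are past the end
                _, chunk = blob.read_range(offset, offset + chunk_size, if_match=etag)
                parts.append(chunk)
                offset += chunk_size
        except PreconditionFailed:
            continue  # content changed: discard everything and start over
        return b"".join(parts)
```

A client that skipped the `if_match` check in this sketch would happily stitch the first half of the old content onto the second half of the new content, which is exactly the "behaving badly" case Steve describes.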

Finally, a post about Windows Azure’s CDN.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Christian Weyer explained Redirecting standard-out trace messages from custom processes to Windows Azure diagnostics manager in a 1/28/2011 post:

Recently I met with our friends from runtime and pusld to do a workshop on service-orientation and WCF – and we also chatted about some Windows Azure topics.

One of the discussion points was running a custom .exe server application in a Windows Azure worker role. Nothing new or spectacular so far.

The interesting tidbit was that this .exe already traced a number of useful data via standard-out. How could we direct this output to Windows Azure so that we are able to see it with any Azure storage or even diagnostics tool?

This is what the pusld guys came up with - here we go:

- As usual, define a configuration setting for the storage connection string in the configuration file:

      <Setting name="DiagnosticsConnectionString"
               value="DefaultEndpointsProtocol=https;AccountName=foobar;AccountKey=…" />

- In the OnStart() method, configure the default Azure logs to be copied to your storage account every 1 minute (in this case with Verbose log level):

      DiagnosticMonitorConfiguration diagnosticConfig =
          DiagnosticMonitor.GetDefaultInitialConfiguration();
      diagnosticConfig.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);
      diagnosticConfig.Logs.ScheduledTransferLogLevelFilter = LogLevel.Verbose;
      DiagnosticMonitor.Start("DiagnosticsConnectionString", diagnosticConfig);

- In the method where we start the executable, tell the process info to redirect standard output and standard error:

      ProcessStartInfo info = new ProcessStartInfo()
      {
          FileName = targetexecutable,
          WorkingDirectory = targetPath,
          UseShellExecute = false,
          ErrorDialog = false,
          CreateNoWindow = true,
          RedirectStandardOutput = true,
          RedirectStandardError = true
      };

- Add two event handlers which would log all the standard output and standard error to the Azure logs and begin to asynchronously read the streams after starting the process:

      Process runningProgram = new Process();
      runningProgram.StartInfo = info;
      runningProgram.OutputDataReceived += new DataReceivedEventHandler(
          RunningProgramOutputDataReceived);
      runningProgram.ErrorDataReceived += new DataReceivedEventHandler(
          RunningProgramErrorDataReceived);
      runningProgram.Start();
      runningProgram.BeginOutputReadLine();
      runningProgram.BeginErrorReadLine();

- In the asynchronous handler methods just simply trace the output to the Azure logs, e.g.:

      private static void RunningProgramOutputDataReceived(object sender,
          DataReceivedEventArgs e)
      {
          if (e.Data != null)
          {
              string output = e.Data;
              Trace.WriteLine("[…] Standard output –> " + output, "Information");
          }
      }

- Voila!

- Finally, use a diagnostics tool like Cerebrata’s Azure Diagnostics Manager: just add the Azure storage account specified in your application above and choose to display the default Trace Logs:

Hope this helps.
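The same redirect-and-forward pattern is not specific to .NET. As a rough analogue, here is a Python sketch that starts a child process, reads its standard output and standard error on background threads, and forwards every line to a logger. The function names and log prefixes are my own, and the standard `logging` module stands in for the Azure trace listener.

```python
import logging
import subprocess
import sys
import threading


def pump(stream, log, level, prefix):
    """Forward each line of one of the child's output streams to the logger."""
    for line in stream:
        log.log(level, "%s %s", prefix, line.rstrip("\n"))
    stream.close()


def run_and_log(args, log):
    """Start args as a child process and log its stdout and stderr lines."""
    child = subprocess.Popen(
        args, stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True
    )
    # One reader thread per stream, so neither pipe can fill up and block.
    readers = [
        threading.Thread(target=pump, args=(child.stdout, log, logging.INFO, "stdout ->")),
        threading.Thread(target=pump, args=(child.stderr, log, logging.ERROR, "stderr ->")),
    ]
    for reader in readers:
        reader.start()
    exit_code = child.wait()
    for reader in readers:
        reader.join()
    return exit_code


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    demo = "import sys; print('hello'); print('oops', file=sys.stderr)"
    run_and_log([sys.executable, "-c", demo], logging.getLogger("child"))
```

Reading both streams concurrently mirrors the BeginOutputReadLine()/BeginErrorReadLine() pair: if only one pipe were drained, a chatty child could stall on the other.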

Christian Weyer described Trying to troubleshoot Windows Azure Compute role startup issues in another 1/28/2011 post:

This one is actually borrowed from a Steve Marx blog post – I just wanted to explicitly set it in context with role startup issues and provide a small helper class. I guess we all have been struggling with finding out what exactly is going wrong during startup of our Azure role instances.

There are several possible reasons – and some of these issues you can actually see when trying to log exceptions happening in the startup phase.

Try to wrap the code in your OnStart methods and catch any exception and immediately write it directly to blob storage.

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            try
            {
                // do your startup stuff here...
                return base.OnStart();
            }
            catch (Exception ex)
            {
                DiagnosticsHelper.WriteExceptionToBlobStorage(ex);
            }

            return false;
        }
    }

And this is the little helper:

    using System;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.ServiceRuntime;
    using Microsoft.WindowsAzure.StorageClient;

    namespace Thinktecture.WindowsAzure
    {
        public static class DiagnosticsHelper
        {
            public static void WriteExceptionToBlobStorage(Exception ex)
            {
                var storageAccount = CloudStorageAccount.Parse(
                    RoleEnvironment.GetConfigurationSettingValue(
                        "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString"));

                var container = storageAccount.CreateCloudBlobClient()
                    .GetContainerReference("exceptions");
                container.CreateIfNotExist();

                var blob = container.GetBlobReference(string.Format(
                    "exception-{0}-{1}.log",
                    RoleEnvironment.CurrentRoleInstance.Id,
                    DateTime.UtcNow.Ticks));
                blob.UploadText(ex.ToString());
            }
        }
    }

… which would then lead to your exceptions showing up in blob storage:
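The pattern itself (catch everything during startup, persist the exception somewhere durable under a unique name, then report failure) is easy to try out locally. The Python sketch below is only an analogue: a local directory stands in for the blob container, and `write_exception`, `on_start` and the timestamp format are hypothetical names and choices of mine, not part of the helper above.

```python
import datetime
import pathlib
import traceback


def write_exception(exc, directory, instance_id):
    """Persist the formatted exception under a unique name and return the path."""
    directory = pathlib.Path(directory)
    directory.mkdir(parents=True, exist_ok=True)  # analogous to CreateIfNotExist()
    stamp = datetime.datetime.now(datetime.timezone.utc).strftime("%Y%m%dT%H%M%S%f")
    path = directory / f"exception-{instance_id}-{stamp}.log"
    text = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
    path.write_text(text, encoding="utf-8")
    return path


def on_start(startup, directory, instance_id):
    """Run startup(); on failure record the exception and report False."""
    try:
        startup()
        return True
    except Exception as exc:
        write_exception(exc, directory, instance_id)
        return False
```

Including both the instance id and a timestamp in the name keeps repeated failures from different instances from overwriting each other, which is the same reason the C# helper combines the role instance id with the tick count.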

Wade Wegner (@WadeWegner) described Using Expression Encoder 4 in a Windows Azure Worker Role and provided links to a live Azure demo project on 1/27/2011:

One of the most common workloads I hear about from customers and developers is video encoding in Windows Azure. There are certainly many ways to perform video encoding in Windows Azure – in fact, at PDC 2009 I was joined by Mark Richards of Origin Digital who discussed their video transcoding solution on Windows Azure – but until the release of Windows Azure 1.3 SDK, and in particular Startup Tasks and elevated privileges, it was complicated.

As I looked into this workload, I decided that I wanted to prove out a few things in a small spike:

- It is not necessary to use the VM Role. The VM Role was introduced to make the process of migrating existing Windows Server applications to Windows Azure easier and faster, especially when this involves long, non-scriptable or fragile installation steps. In this particular case, I was pretty sure I could script the setup process using Startup Tasks.

- It is possible to use the freely available Expression Encoder 4.

- It is possible to use the Web Platform Installer to install Expression Encoder 4.

Before I go any farther, make sure you review two great blog posts by Steve Marx – be sure to read these as they are a great starting point for Startup Tasks:

Okay, now that everyone’s familiar with Startup Tasks, let’s jump into what it takes to get the video encoding solution working.

I decided to put the encoding service in a Worker Role – it makes the most sense, as all I want the role to do is encode my videos. Consequently, I added a Startup Task to the Service Definition that points to a script:

Code Snippet

    <Startup>
      <Task commandLine="Startup\InstallEncoder.cmd" executionContext="elevated" taskType="background" />
    </Startup>

Note that I have the task type defined as “background”. As Steve explains in his post, this makes it easy during development to debug; however, when you’re ready to push this to production, be sure to change this to “simple” so that the script runs to completion before any other code runs.

The next thing to do is add the tools to our project that are needed to configure the instance and install Encoder. Here’s what you need:

- WebPICmdLine – the executable and its assemblies

- InstallEncoder.cmd – this is our script that’s used to set everything up

- PsExec.exe (optional) – I like to include this for debugging purposes (e.g. psexec -s -i cmd)

As I mentioned in my post on Web Deploy with Windows Azure, make sure to mark these files as “Copy to Output Directory” in Visual Studio. I like to organize these files like this:

Now, let’s take a look at the InstallEncoder.cmd script, as that’s where the real work is done. Here’s the script:

Code Snippet

    REM : Install the Desktop Experience
    ServerManagerCMD.exe -install Desktop-Experience -restart -resultPath results.xml
    REM : Make a folder for the AppData
    md "%~dp0appdata"
    REM : Change the location of the Local AppData
    reg add "hku\.default\software\microsoft\windows\currentversion\explorer\user shell folders" /v "Local AppData" /t REG_EXPAND_SZ /d "%~dp0appdata" /f
    REM : Install Encoder
    "%~dp0\webpicmd\WebPICmdLine.exe" /accepteula /Products:ExpressionEncoder4 /log:encoder.txt
    REM : Change the location of the Local AppData back to default
    reg add "hku\.default\software\microsoft\windows\currentversion\explorer\user shell folders" /v "Local AppData" /t REG_EXPAND_SZ /d %%USERPROFILE%%\AppData\Local /f
    REM : Exit gracefully
    exit /b 0

Let’s break it down:

- Line #2: Turns out that you need to install the Desktop Experience in the instance (it’s Windows Server 2008, after all) before you can run Expression Encoder 4. You can do this easily with ServerManagerCMD.exe, but you’ll have to restart the machine for it to take effect.

- Line #4: Create a new folder called “appdata”. (Incidentally, %~dp0 refers to the directory where the batch file lives.)

- Line #6: Update the “Local AppData” folder in the registry to temporarily use our “appdata” folder.

- Line #8: Install Expression Encoder 4 using the WebPICmdLine. Note that with the latest drop you’ll need to add the “/accepteula” flag to avoid user interaction.

- Line #10: Revert the “Local AppData” back to the default.

- Line #12: Exit gracefully, so that Windows Azure doesn’t think there was an error.

If you need to restart your machine before you’ve installed everything in your startup task script, as I did, make sure the script is idempotent. Specifically in this case, since we restart the machine after installing the Desktop Experience, line #2 will get run again when the machine starts up. Fortunately ServerManagerCMD.exe has no problem running when the Desktop Experience is installed.
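ServerManagerCMD.exe happens to tolerate being run twice, but not every installer does. One common way to make an arbitrary step idempotent is a marker file that is written only after the step succeeds. The Python sketch below illustrates the idea; it is not what the script above does, and `run_once` and the marker path are hypothetical.

```python
import pathlib


def run_once(marker, step):
    """Run step() unless marker exists; create the marker only after success."""
    marker = pathlib.Path(marker)
    if marker.exists():
        return False  # the step already completed on an earlier boot
    step()  # if this raises, no marker is written and the step is retried later
    marker.write_text("done", encoding="utf-8")
    return True
```

Writing the marker after the step, rather than before it, means a crash halfway through leaves the step eligible to run again on the next start.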

This is all that’s required to setup the Worker Role. At this point, it has all the software required in order to start encoding videos. Pretty slick, huh?

Unfortunately, there’s one other thing to be aware of – the Expression Encoder assemblies are all 32-bit, and consequently they will not run when targeting x64 or Any CPU. This means that you will need to start a new process to host the Encoder assemblies and our encoding process. Not a big deal. Here’s what you can do.

Create a new Console project – I called mine “DllHostx86” based on some guidance I gleaned from Hani Tech’s post – and add the Expression Encoder assemblies:

Update the platform target so that it’s explicitly targeting x86 – this is required with these assemblies, as I’ve noted elsewhere in my blog (see Using the Expression Encoder SDK to encode lots of videos).

Now you can write the code in the Program.cs file to encode the videos using the Encoder APIs. This itself is pretty easy and straightforward, and I invite you to review the post I mention above to see how. Also, if you want to do something kind of fun you can add a text overlay on the video, just to show you can.

The next step is to make sure that the DllHostx86 executable is available to include as part of our Worker Role package – we’ll want to run it as a separate process outside of the Worker Role. Simply update the output path so that it points to a folder that exists in your Worker Role project.

This way any build will create an executable that exists within the Worker Role project folder, and consequently can be included within the Visual Studio project (be sure to set it to copy to the output directory). The end result will look like this:

Now, all we have to do is write some code that will create a new process and execute our DllHostx86.exe file.

Code Snippet

    private void ExecuteEncoderHost()
    {
        string dllHostPath = @"Redist\DllHostx86.exe";

        ProcessStartInfo psi = new ProcessStartInfo(dllHostPath);

        Trace.WriteLine("Starting DllHostx86.exe process", "Information");
        using (Process dllHost = new Process())
        {
            dllHost.StartInfo = psi;
            dllHost.Start();
            dllHost.WaitForExit();
        }
    }

While I think this is pretty straightforward, a few comments:

- Line #5: If you want to pass parameters to the applications (which I ultimately do in my solution) you can use an overload of ProcessStartInfo to pass them in.

- Line #12: Since my simple spike isn’t meant to handle a lot of these processes at once, I use WaitForExit() to ensure that the encoding process completes before continuing.

And there you have it! That’s all you need to do to setup a Windows Azure Worker Role to use Expression Encoder 4 to encode videos!

If you want to see an example of this running, you can try out my initial spike: http://encoder.cloudapp.net/.

This is just a simple, but hopefully effective, sample that illustrates how simple it is to setup the Worker Role using Startup Tasks. With this solution in place, you can scale out as many Worker Roles as you deem necessary to process video – in fact, at one point I scaled up to 10 instances and watched them all encoding my videos. I bet you could also use some of the tips I illustrate in Using the Expression Encoder SDK to Encode Lots of Videos to run multiple encoding sessions at a time per instance. Pretty nifty.

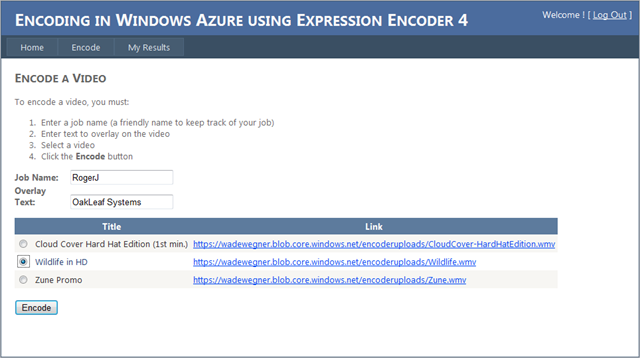

Wade’s demo uses OpenID to identify users. Here’s the opening page for identifying the encoding job, adding overlaid text, and selecting the video clip to encode:

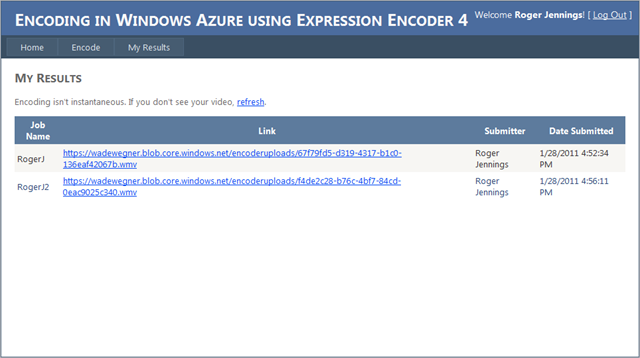

Here’s the My Results page for a couple of Zune Promo videos I re-encoded earlier this morning:

Here’s an example of the text overlaid on the final frames of the Zune Demo clip in Windows Media Player to prove that re-encoding occurred:

Steve Marx (@smarx) described using ASP.NET MVC 3 in Windows Azure in a 1/27/2011 post:

The recently-released ASP.NET MVC 3 is awesome. I’ve been a fan of ASP.NET MVC since the first version, and it keeps getting better. The Razor syntax (which comes from the new ASP.NET Web Pages) makes me very productive, and I love the unobtrusive JavaScript. Scott Guthrie’s blog post announcing MVC 3 has a longer list of the new features. In this post, I’ll give you two options for deploying an ASP.NET MVC 3 application to Windows Azure.

A bit of background

Windows Azure virtual machines don’t have ASP.NET MVC installed already, so to deploy an MVC app, you need to also deploy the necessary binaries. (This is true if you’re deploying an MVC app to any server that doesn’t have MVC already installed.) For ASP.NET MVC 2, the Windows Azure tools have a nice template (“ASP.NET MVC 2 Web Role”) that automatically sets the right assemblies to “Copy Local,” meaning they get deployed with your application. ASP.NET MVC 3 just shipped, so there isn’t yet a template in the Windows Azure tools. That means it’s up to you to include the right assemblies.

Technique #1: Include the assemblies in your application

Scott Guthrie has a blog post called “Running an ASP.NET MVC 3 app on a web server that doesn’t have ASP.NET MVC 3 installed,” which covers this technique. Specifically, there’s a link in there to a post by Drew Prog about “\bin deployment.” It lists the assemblies you should reference in your project and mark as “Copy Local.” For convenience, here’s the list from his post:

- Microsoft.Web.Infrastructure

- System.Web.Helpers

- System.Web.Mvc

- System.Web.Razor

- System.Web.WebPages

- System.Web.WebPages.Deployment

- System.Web.WebPages.Razor

Not all of these assemblies are directly referenced by your project when you create a new ASP.NET MVC 3 application. Some are indirectly used, so you’ll have to add them. Scott Hanselman also has a post about \bin deploying ASP.NET MVC 3 in which he argues that adding these as references isn’t the right thing to do:

NOTE: It's possible to just reference these assemblies directly from your application, then click properties on each one and set copyLocal=true. Then they'd get automatically copied to the bin folder. However, I have a problem with that philosophically. Your app doesn't need a reference to these assemblies. It's assemblies your app depends on. A depends on B that depends on C. Why should A manually set a dependency on C just to get better deployment? More on this, well, now.

Check out his post for his recommended approach. Both have a similar result, causing the required assemblies to be deployed in the \bin folder with your application.

Technique #2: Installing ASP.NET MVC 3 with a startup task

If you’ve read my last two blog posts (“Introduction to Windows Azure Startup Tasks” and “Windows Azure Startup Tasks: Tips, Tricks, and Gotchas”), then you already know one way to do this. You can use the new WebPI Command Line tool to install MVC 3. See “Using WebPICmdline to run 32-bit installers” in my tips and tricks post to see my recommended syntax for doing this. Just change the product to “MVC3.”

The WebPI method is pretty easy, but I wanted to show an alternative in this post, which is to directly bundle the ASP.NET MVC 3 installer with your application and execute it in a startup task. This turns out to also be quite easy to do.

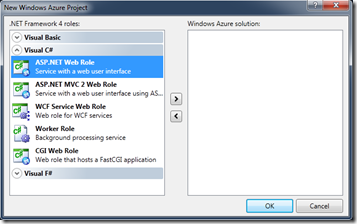

Step 1: Create a new Windows Azure solution and add an ASP.NET MVC 3 project to it

Use “File/New/Project…” to create a new Windows Azure application, but don’t add any roles to it right away (since there’s no template for ASP.NET MVC 3). In other words, just hit “OK” when you see this dialog:

Next, add an ASP.NET MVC 3 project to your solution by right-clicking on the solution and choosing “Add/New Project…” Choose an ASP.NET MVC 3 Web Application, just as you would outside of Windows Azure.

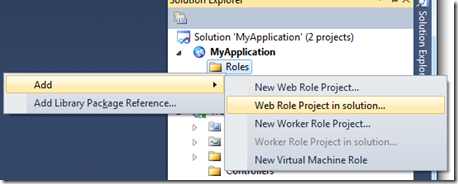

Step 2: Add the ASP.NET MVC 3 application as a web role

Right-click the “Roles” node under your Windows Azure project and choose “Add/Web Role Project in solution…”

Then just pick your ASP.NET MVC 3 project. Now it’s associated as a role in your Windows Azure application.

Step 3: Create a startup task

Just for aesthetics, I like to create a separate folder for any startup task that’s more than a single file. In this case, we’re going to need the ASP.NET MVC 3 installer itself as well as a batch file that will run it. (This is because we can’t pass command-line arguments in a startup task.)

Create a folder called “startup,” and put AspNetMVC3Setup.exe in it. Also add a batch file called “installmvc.cmd” (being careful to avoid the byte order mark Visual Studio will want to add, see my tips and tricks post). Put the following two lines in “installmvc.cmd”:

    %~dp0AspNetMVC3Setup.exe /q /log %~dp0mvc3_install.htm
    exit /b 0

Note the %~dp0, which gives you the directory of the batch file. This is important if your batch file is in a subdirectory, as the current working directory won’t be the same as the location of your startup task files. Also note the exit /b 0, which ensures this batch file always returns a success code. (For extra credit, detect whether ASP.NET MVC 3 is successfully installed and return the proper error code. What you don’t want to do is just rely on the error code of the installer, which probably returns non-success if ASP.NET MVC 3 is already installed.)

Set the batch file and the executable to both have a “build action” of “none,” and a “copy to output directory” setting of “copy always” (or “copy if newer” if you prefer). See my introduction to startup tasks post for a screenshot of what those property settings look like.

Finally, edit ServiceDefinition.csdef to call the batch file:

    <WebRole name="WebRole">
      <Startup>
        <Task commandLine="startup\installmvc.cmd" executionContext="elevated" />
      </Startup>

That’s it! Now you can add whatever ASP.NET MVC 3 content you want to the web role, without having to worry about whether the right assemblies will be installed.

Download the code

You can download a full Visual Studio solution that implements the above steps here: http://cdn.blog.smarx.com/files/MVC3WebRole_source.zip

Rob Tiffany described Confronting the Consumerization of IT with Microsoft MEAP in a 1/27/2011 post:

CIOs are asking for help in confronting the tidal wave of mobile devices entering the enterprise. IT departments have raised the white flag as attempts to block consumer-focused smartphones and tablets have failed. The Consumerization of IT has been a growing trend fueled by cloud-delivered services and compelling mobile devices with wireless capabilities. This trend snowballs more and more each year, meaning it’s time to embrace it rather than put your head in the sand. Microsoft MEAP is the answer. I’ve been talking to you about how Microsoft aligns with Gartner’s Mobile Enterprise Application Platform (MEAP) for years now, and I wanted to update you on how we’ve evolved with respect to Gartner’s Critical Capabilities. As a refresher, MEAP is Software + Services that allow IT orgs to extend corporate apps to mobile employees and business partners. This platform must support:

Multiple mobile applications

Multiple mobile operating systems

Multiple backend systems maximizing ROI vs. tactical solutions

It’s already a $1 Billion business and 95% of orgs will choose MEAP over point solutions by 2012. The picture below represents some of our familiar cloud and on-premise servers on top and a wide spectrum of mobile devices from Microsoft and other manufacturers on the bottom:

Let’s do a quick rundown of Gartner’s Critical Capability list so you can see how we rise to their challenge:

- Integrated Development Environment for composing server and client-side logic: Microsoft Visual Studio supports on-premise and cloud server development and targets clients such as Windows, Windows Phone 7, Windows Mobile, the Web, Nokia S60, and the Macintosh.

- Application Client Runtime: Various flavors of Microsoft .NET (Silverlight, .NET, Compact Framework) run on Azure, Windows Server, Windows, the Mac, Windows Phone 7, Windows Mobile, and Nokia S60. Guess what, you can use MonoTouch to take your .NET skills to the iPhone, iPad and iPod Touch. MonoDroid is in the preview stage and will bring .NET to Android phones and tablets in the future.

- Enterprise Application Integration Tools: Connecting mobile devices to a variety of backend packages like Dynamics or SAP is critical. Microsoft supports this integration in the cloud via Windows Azure AppFabric and on-premise through SQL Server Integration Services and dozens of adapters. Tools like our Business Intelligence Dev Studio make EAI a repeatable, drag and drop exercise.

- Packaged Mobile Apps: Microsoft delivers the Office suite across Windows, Windows Phone 7, Windows Mobile, the Web and the Mac. Office will be coming to Nokia in the future and OneNote just arrived on iOS.

- Multichannel Servers: Windows Server + SQL Server on-premise and Windows Azure + SQL Azure in the cloud represents Microsoft’s mobile middleware platforms. [Emphasis added.]

Windows Communication Foundation (WCF) delivers cross-platform SOAP & REST Web Services and cross-platform wire protocols like XML, JSON and OData. [WCF/OData separated and emphasis added.]

- Software Distribution: Microsoft System Center Configuration Manager supports pushing software out to Windows and Windows Mobile. Windows Phone 7 has Marketplace for this function.

- Security: Data-in-transit is secured by SSL across all platforms. Data-at-Rest security for apps is facilitated on Windows by BitLocker, Windows Mobile through encryption policies and Windows Phone 7 through AESManaged in Silverlight. Cross-platform auth is facilitated by Microsoft Windows Identity Foundation so devices can access resources via a Windows Live ID, Facebook, Google, Yahoo, ADFS and others.

- Hosting: It goes without saying that Microsoft knocks the hosting requirement out of the park with Azure.

So what do I want you to take away from all this?

Microsoft has a great MEAP stack comprised of servers and skillsets you probably already have at your company. You get maximum reuse on our servers and in our cloud, which means you save money when it’s time to build and deploy your second, third and fourth mobile app without new training, new servers, and different technologies each time. I hope you’re pleasantly surprised to see that our .NET application runtime lives on so many mobile platforms. Again, this means that your existing .NET skills can be reused on Microsoft devices, the Web, Mac, Nokia and even the iPad. Who knew? I’m looking forward to bringing Android into the .NET camp as well.

It’s a brave new world of disparate devices connected to the cloud. Companies have no choice but to target most all of them when constructing B2C apps to sell products or bring in new customers. They’ve also found that this is the case in supporting their own employees and business partners with B2E and B2B apps. No single company has so many different skillsets and competencies to pull this off.

There is one thing that most companies do have though: a Microsoft infrastructure in their data center or the cloud, Windows on desktops, laptops and tablets, plus teams of .NET developers. As I’ve just shown you, these .NET developers armed with Visual Studio or MonoTouch can be unleashed to allow you to reach almost every mobile platform. This dramatically reduces the amount of extra Java and Eclipse skills that you’ll consider bringing in-house or outsourcing in order to target platforms like Android or the Blackberry. Through the magic of WCF, all these platforms can connect to your critical Microsoft back-end resources and beyond. You save money on training, use the servers you already have, reuse business logic and get to market faster. No matter what platform you need to target, Microsoft and its partners want to help you reach your goals.

Looks like you’re already ahead of the game in taking on the Consumerization of IT.

Wesy reminded Azure developers on 1/27/2011 that Windows Phone 7 + Cloud Services SDK Available for Download from Microsoft Research:

This is a software-development kit (SDK) for the creation of Windows Phone 7 (WP7) applications that leverage research services not yet available to the general public. The primary goal of this SDK is to support the efforts of Project Hawaii, a student-focused initiative for exploration of how cloud-based services can be used to enhance the WP7 experience. The first two services were made available today and the developer preview of the Windows Phone 7 + Cloud Services SDK can be downloaded here.

The Hawaii Relay Service was created to allow direct communication between devices. Today, most providers do not provide public IP addresses for mobile devices, making it difficult to create those types of applications. The relay service in the cloud provides an endpoint naming scheme and buffering for messages sent between endpoints. Additionally, it allows messages to be multicast to multiple endpoints.

The Hawaii Rendezvous Service is a mapping service from well-known human-readable names to endpoints in the Hawaii Relay Service. These well-known human-readable names may be used as stable rendezvous points that can be compiled into applications.

For more information, please visit the Microsoft Research site

<Return to section navigation list>

Visual Studio LightSwitch and ADO.NET Entity Framework

Arthur Vickers posted Using DbContext in EF Feature CTP5 Part 3: Finding Entities to the ADO.NET Team Blog on 1/27/2011:

Introduction

In December we released ADO.NET Entity Framework Feature Community Technology Preview 5 (CTP5). In addition to the Code First approach this CTP also contains a preview of a new API that provides a more productive surface for working with the Entity Framework. This API is based on the DbContext class and can be used with the Code First, Database First, and Model First approaches.

This is the third post of a twelve part series containing patterns and code fragments showing how features of the new API can be used. Part 1 of the series contains an overview of the topics covered together with a Code First model that is used in the code fragments of this post.

The posts in this series do not contain complete walkthroughs. If you haven’t used CTP5 before then you should read Part 1 of this series and also Code First Walkthrough or Model and Database First with DbContext before tackling this post.

Finding entities using a query

DbSet and IDbSet implement IQueryable and so can be used as the starting point for writing a LINQ query against the database. This post is not the appropriate place for an in-depth discussion of LINQ, but here are a couple of simple examples:

    using (var context = new UnicornsContext())
    {
        // Query for all unicorns with names starting with B
        var unicorns = from u in context.Unicorns
                       where u.Name.StartsWith("B")
                       select u;

        // Query for the unicorn named Binky
        var binky = context.Unicorns
                        .Where(u => u.Name == "Binky")
                        .FirstOrDefault();
    }

Note that DbSet and IDbSet always create queries against the database and will always involve a round trip to the database even if the entities returned already exist in the context.
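The two fragments above use query syntax and method syntax for the same kind of filter. As a minimal sketch that runs without a database, the following uses LINQ to Objects over an in-memory list instead of a DbSet. The `SketchUnicorn` and `QuerySketch` names are assumptions made for illustration and are not part of the model or of the Entity Framework API; unlike a DbSet query, nothing here makes a round trip to a database.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Trimmed-down stand-in for the Unicorn entity (illustration only).
public class SketchUnicorn
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class QuerySketch
{
    // In-memory data standing in for context.Unicorns (LINQ to Objects, not EF).
    public static List<SketchUnicorn> SampleUnicorns()
    {
        return new List<SketchUnicorn>
        {
            new SketchUnicorn { Id = 1, Name = "Binky" },
            new SketchUnicorn { Id = 2, Name = "Silly" },
            new SketchUnicorn { Id = 3, Name = "Beepy" },
            new SketchUnicorn { Id = 4, Name = "Creepy" }
        };
    }

    // Query syntax: names of all unicorns whose names start with "B".
    public static List<string> NamesStartingWithB(IEnumerable<SketchUnicorn> unicorns)
    {
        var query = from u in unicorns
                    where u.Name.StartsWith("B")
                    select u.Name;
        return query.ToList();
    }

    // Method syntax: first unicorn with the given name, or null if there is none.
    public static SketchUnicorn FindByName(IEnumerable<SketchUnicorn> unicorns, string name)
    {
        return unicorns.Where(u => u.Name == name).FirstOrDefault();
    }
}
```

Calling `QuerySketch.NamesStartingWithB(QuerySketch.SampleUnicorns())` yields the names Binky and Beepy, and `FindByName` returns null when no unicorn matches, which mirrors what FirstOrDefault does in the fragment above.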

Finding entities using primary keys

The Find method on DbSet uses the primary key value to attempt to find an entity tracked by the context. If the entity is not found in the context then a query will be sent to the database to find the entity there. Null is returned if the entity is not found in the context or in the database.

Find is different from using a query in two significant ways:

- A round-trip to the database will only be made if the entity with the given key is not found in the context.

- Find will return entities that are in the Added state. That is, Find will return entities that have been added to the context but have not yet been saved to the database.
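The lookup order described above (tracked entities first, then the database, then null) can be sketched with a toy class. This is not how Entity Framework implements Find; `ToyUnicorn`, `ToyFindContext` and the `DatabaseRoundTrips` counter are hypothetical names invented here, and the "database" is just a dictionary.

```csharp
using System;
using System.Collections.Generic;

// Toy model of "look in the context first, then the database".
// This is NOT the EF implementation; all names here are hypothetical.
public class ToyUnicorn
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public class ToyFindContext
{
    // Entities currently tracked by the "context", keyed by primary key.
    private readonly Dictionary<int, ToyUnicorn> _tracked = new Dictionary<int, ToyUnicorn>();

    // Stand-in for rows stored in the database.
    private readonly Dictionary<int, ToyUnicorn> _database;

    // Counts how many times the "database" was consulted.
    public int DatabaseRoundTrips { get; private set; }

    public ToyFindContext(Dictionary<int, ToyUnicorn> database)
    {
        _database = database;
    }

    // Adds a new entity to the context without "saving" it.
    public void Add(ToyUnicorn unicorn)
    {
        _tracked[unicorn.Id] = unicorn;
    }

    public ToyUnicorn Find(int id)
    {
        ToyUnicorn entity;

        // 1. Tracked entities (including added, unsaved ones) are returned with no round trip.
        if (_tracked.TryGetValue(id, out entity))
        {
            return entity;
        }

        // 2. Otherwise consult the "database" and start tracking the result.
        DatabaseRoundTrips++;
        if (_database.TryGetValue(id, out entity))
        {
            _tracked[id] = entity;
            return entity;
        }

        // 3. Not found in the context or in the database.
        return null;
    }
}
```

In this sketch, calling `Find(3)` twice returns the same instance and increments `DatabaseRoundTrips` only once, and an entity passed to `Add` is found without touching the dictionary that plays the database.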

Finding an entity by primary key

The following code shows some uses of Find:

    using (var context = new UnicornsContext())
    {
        // Will hit the database
        var unicorn = context.Unicorns.Find(3);

        // Will return the same instance without hitting the database
        var unicornAgain = context.Unicorns.Find(3);

        context.Unicorns.Add(new Unicorn { Id = -1 });

        // Will find the new unicorn even though it does not exist in the database
        var newUnicorn = context.Unicorns.Find(-1);

        // Will find a castle which has a string primary key
        var castle = context.Castles.Find("The EF Castle");
    }

Finding an entity by composite primary key

In the model presented in Part 1 of this series, the LadyInWaiting entity type has a composite primary key formed from the PrincessId and the CastleName properties—a princess can have one lady-in-waiting in each castle. The following code attempts to find a LadyInWaiting with PrincessId = 3 and CastleName = “The EF Castle”:

    using (var context = new UnicornsContext())
    {
        var lady = context.LadiesInWaiting.Find(3, "The EF Castle");
    }

Note that in the model the ColumnAttribute was used to specify an ordering for the two properties of the composite key. The call to Find must use this order when specifying the two values that form the key.
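To see why the order of the key values matters, here is a small sketch of a positional composite-key lookup. It is a toy, not the Entity Framework implementation: `ToyLady` and `ToyCompositeSet` are hypothetical names, and throwing an ArgumentException for values in the wrong order is a choice made for this sketch rather than documented CTP5 behavior.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical entity with a two-part key (illustration only).
public class ToyLady
{
    public int PrincessId { get; set; }     // key part, order 0
    public string CastleName { get; set; }  // key part, order 1
    public string FirstName { get; set; }
}

public class ToyCompositeSet
{
    private readonly List<ToyLady> _items = new List<ToyLady>();

    public void Add(ToyLady lady)
    {
        _items.Add(lady);
    }

    // Key values are matched by position:
    // keyValues[0] is PrincessId and keyValues[1] is CastleName.
    public ToyLady Find(params object[] keyValues)
    {
        if (keyValues == null || keyValues.Length != 2)
        {
            throw new ArgumentException("Expected exactly two key values.");
        }
        if (!(keyValues[0] is int) || !(keyValues[1] is string))
        {
            throw new ArgumentException("Key values must be given in the order (PrincessId, CastleName).");
        }

        int princessId = (int)keyValues[0];
        string castleName = (string)keyValues[1];

        foreach (var lady in _items)
        {
            if (lady.PrincessId == princessId && lady.CastleName == castleName)
            {
                return lady;
            }
        }
        return null;
    }
}
```

With one lady added for PrincessId 3 in "The EF Castle", `Find(3, "The EF Castle")` returns her, while `Find("The EF Castle", 3)` is rejected by the sketch because the values no longer line up with the declared key order.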

Summary

In this part of the series we showed how to find entities using LINQ queries and also how to use Find to find entities using their primary key values.

As always we would love to hear any feedback you have by commenting on this blog post.

For support please use the Entity Framework Pre-Release Forum.

Arthur Vickers, Developer, ADO.NET Entity Framework

Arthur Vickers posted another Using DbContext in EF Feature CTP5 Part 2: Connections and Models to the ADO.NET Team Blog on 1/27/2011:

Introduction

In December we released ADO.NET Entity Framework Feature Community Technology Preview 5 (CTP5). In addition to the Code First approach this CTP also contains a preview of a new API that provides a more productive surface for working with the Entity Framework. This API is based on the DbContext class and can be used with the Code First, Database First, and Model First approaches.

This is the second post of a twelve part series containing patterns and code fragments showing how features of the new API can be used. Part 1 of the series contains an overview of the topics covered together with a Code First model that is used in the code fragments of this post.

The posts in this series do not contain complete walkthroughs. If you haven’t used CTP5 before then you should read Part 1 of this series [see post below] and also Code First Walkthrough or Model and Database First with DbContext before tackling this post.

Using DbContext constructors

Typically an Entity Framework application uses a class derived from DbContext, as shown in the Code First and Model and Database First with DbContext walkthroughs. This derived class will call one of the constructors on the base DbContext class to control:

- How the context will connect to a database—i.e. how a connection string is found/used

- Whether the context will use Code First, Database First, or Model First

- Additional advanced options

The following fragments show some of the ways the DbContext constructors can be used.

Use Code First with connection by convention

If you have not done any other configuration in your application, then calling the parameterless constructor on DbContext will cause DbContext to run in Code First mode with a database connection created by convention. For example:

    namespace Magic.Unicorn
    {
        public class UnicornsContext : DbContext
        {
            public UnicornsContext()
            // C# will call base class parameterless constructor by default
            {
            }
        }
    }

In this example DbContext uses the namespace qualified name of your derived context class (Magic.Unicorn.UnicornsContext) as the database name and creates a connection string for this database using SQL Express on your local machine.
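The namespace qualified name mentioned above is what .NET exposes as `Type.FullName`. The sketch below only demonstrates that string; it makes no claim about how DbContext builds the rest of the connection string. The stand-in `UnicornsContext` deliberately does not derive from DbContext so the sketch compiles without the CTP5 assembly, and `ConventionNameSketch` is a hypothetical helper.

```csharp
using System;

namespace Magic.Unicorn
{
    // Stand-in for the derived context. It does not inherit from DbContext here
    // so that the sketch compiles without the EF CTP5 assembly.
    public class UnicornsContext
    {
    }
}

public static class ConventionNameSketch
{
    // Returns the namespace qualified type name, which the text says
    // is used as the database name under the convention.
    public static string DatabaseNameFor(Type contextType)
    {
        return contextType.FullName;
    }
}
```

For example, `ConventionNameSketch.DatabaseNameFor(typeof(Magic.Unicorn.UnicornsContext))` returns the string "Magic.Unicorn.UnicornsContext".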

Use Code First with connection by convention and specified database name

If you have not done any other configuration in your application, then calling the string constructor on DbContext with the database name you want to use will cause DbContext to run in Code First mode with a database connection created by convention to the database of that name. For example:

    public class UnicornsContext : DbContext
    {
        public UnicornsContext()
            : base("UnicornsDatabase")
        {
        }
    }

In this example DbContext uses “UnicornsDatabase” as the database name and creates a connection string for this database using SQL Express on your local machine.

Use Code First with connection string in app.config/web.config file

You may choose to put a connection string in your app.config or web.config file. For example:

    <configuration>
      <connectionStrings>
        <add name="UnicornsCEDatabase"
             providerName="System.Data.SqlServerCe.4.0"
             connectionString="Data Source=Unicorns.sdf"/>
      </connectionStrings>
    </configuration>

This is an easy way to tell DbContext to use a database server other than SQL Express; the example above specifies a SQL Server Compact Edition database.

If the name of the connection string matches the name of your context (either with or without namespace qualification) then it will be found by DbContext when the parameterless constructor is used. If the connection string name is different from the name of your context then you can tell DbContext to use this connection in Code First mode by passing the connection string name to the DbContext constructor. For example:

    public class UnicornsContext : DbContext
    {
        public UnicornsContext()
            : base("UnicornsCEDatabase")
        {
        }
    }

Alternatively, you can use the form “name=<connection string name>” for the string passed to the DbContext constructor. For example:

    public class UnicornsContext : DbContext
    {
        public UnicornsContext()
            : base("name=UnicornsCEDatabase")
        {
        }
    }

This form makes it explicit that you expect the connection string to be found in your config file. An exception will be thrown if a connection string with the given name is not found.
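The difference between passing a plain name and passing the “name=” form can be sketched as a small resolver. This is a toy under stated assumptions, not the DbContext implementation: `ConnectionResolverSketch`, `ToyResolution` and `ToyResolutionKind` are hypothetical, the dictionary stands in for the connectionStrings section of the config file, and both the InvalidOperationException and the case-sensitive prefix match are choices made for this sketch, since the text does not say which exception type or comparison CTP5 uses.

```csharp
using System;
using System.Collections.Generic;

public enum ToyResolutionKind
{
    NamedConnectionString,
    DatabaseNameByConvention
}

public class ToyResolution
{
    public ToyResolutionKind Kind { get; set; }
    public string Value { get; set; }
}

public static class ConnectionResolverSketch
{
    // configEntries stands in for <connectionStrings>: name -> connection string.
    public static ToyResolution Resolve(string nameOrPrefixedName, IDictionary<string, string> configEntries)
    {
        const string prefix = "name=";
        string connectionString;

        if (nameOrPrefixedName.StartsWith(prefix, StringComparison.Ordinal))
        {
            // Explicit form: the entry must exist in config, otherwise fail loudly.
            string name = nameOrPrefixedName.Substring(prefix.Length);
            if (!configEntries.TryGetValue(name, out connectionString))
            {
                throw new InvalidOperationException("No connection string named '" + name + "' was found.");
            }
            return new ToyResolution { Kind = ToyResolutionKind.NamedConnectionString, Value = connectionString };
        }

        // Plain form: use a matching config entry if there is one...
        if (configEntries.TryGetValue(nameOrPrefixedName, out connectionString))
        {
            return new ToyResolution { Kind = ToyResolutionKind.NamedConnectionString, Value = connectionString };
        }

        // ...otherwise treat the string as a database name for a connection by convention.
        return new ToyResolution { Kind = ToyResolutionKind.DatabaseNameByConvention, Value = nameOrPrefixedName };
    }
}
```

With a single config entry named UnicornsCEDatabase, both "UnicornsCEDatabase" and "name=UnicornsCEDatabase" resolve to that entry, "UnicornsDatabase" falls back to the by-convention branch, and "name=Missing" throws instead of silently falling back.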

Database/Model First with connection string in app.config/web.config file

Database or Model First is different from Code First in that your Entity Data Model (EDM) already exists and is not generated from code when the application runs. The EDM typically exists as an EDMX file created by the Entity Designer in Visual Studio. The designer will also add an EF connection string to your app.config or web.config file. This connection string is special in that it contains information about how to find the information in your EDMX file. For example:

    <configuration>
      <connectionStrings>
        <add name="Northwind_Entities"
             connectionString="metadata=res://*/Northwind.csdl|
                                        res://*/Northwind.ssdl|
                                        res://*/Northwind.msl;
                               provider=System.Data.SqlClient;
                               provider connection string=
                                   &quot;Data Source=.\sqlexpress;
                                         Initial Catalog=Northwind;
                                         Integrated Security=True;
                                         MultipleActiveResultSets=True&quot;"
             providerName="System.Data.EntityClient"/>
      </connectionStrings>
    </configuration>

You can tell DbContext to use this connection by passing the connection string name to the DbContext constructor. For example:

    public class NorthwindContext : DbContext
    {
        public NorthwindContext()
            : base("name=Northwind_Entities")
        {
        }
    }

DbContext knows to run in Database/Model First mode because the connection string is an EF connection string containing details of the EDM to use.

Other DbContext constructor options

The DbContext class contains other constructors and usage patterns that enable some more advanced scenarios. Some of these are:

- You can use the ModelBuilder class to build a Code First model without instantiating a DbContext instance. The result of this is a DbModel object. You can then pass this DbModel object to one of the DbContext constructors when you are ready to create your DbContext instance.

- You can pass a full connection string to DbContext instead of just the database or connection string name. By default this connection string is used with the System.Data.SqlClient provider; this can be changed by setting a different implementation of IConnectionFactory onto context.Database.DefaultConnectionFactory.

- You can use an existing DbConnection object by passing it to a DbContext constructor. If the connection object is an instance of EntityConnection, then the model specified in the connection will be used in Database/Model First mode. If the object is an instance of some other type—for example, SqlConnection—then the context will use it for Code First mode.

- You can pass an existing ObjectContext to a DbContext constructor to create a DbContext wrapping the existing context. This can be used for existing applications that use ObjectContext but which want to take advantage of DbContext in some parts of the application.

Defining sets on a derived context

DbContext with DbSet properties

The common case shown in Code First examples is to have a DbContext with public automatic DbSet properties for the entity types of your model. For example:

    public class UnicornsContext : DbContext
    {
        public DbSet<Unicorn> Unicorns { get; set; }
        public DbSet<Princess> Princesses { get; set; }
        public DbSet<LadyInWaiting> LadiesInWaiting { get; set; }
        public DbSet<Castle> Castles { get; set; }
    }

When used in Code First mode, this will configure Unicorn, Princess, LadyInWaiting, and Castle as entity types, as well as configuring other types reachable from these. In addition DbContext will automatically call the setter for each of these properties to set an instance of the appropriate DbSet.

DbContext with IDbSet properties

There are situations, such as when creating mocks or fakes, where it is more useful to declare your set properties using an interface. In such cases the IDbSet interface can be used in place of DbSet. For example:

    public class UnicornsContext : DbContext
    {
        public IDbSet<Unicorn> Unicorns { get; set; }
        public IDbSet<Princess> Princesses { get; set; }
        public IDbSet<LadyInWaiting> LadiesInWaiting { get; set; }
        public IDbSet<Castle> Castles { get; set; }
    }

This context works in exactly the same way as the context that uses the DbSet class for its set properties.

DbContext with read-only set properties

If you do not wish to expose public setters for your DbSet or IDbSet properties you can instead create read-only properties and create the set instances yourself. For example:

    public class UnicornsContext : DbContext
    {
        public IDbSet<Unicorn> Unicorns
        {
            get { return Set<Unicorn>(); }
        }

        public IDbSet<Princess> Princesses
        {
            get { return Set<Princess>(); }
        }

        public IDbSet<LadyInWaiting> LadiesInWaiting
        {
            get { return Set<LadyInWaiting>(); }
        }

        public IDbSet<Castle> Castles
        {
            get { return Set<Castle>(); }
        }
    }

Note that DbContext caches the instance of DbSet returned from the Set method so that each of these properties will return the same instance every time it is called.

Discovery of entity types for Code First works in the same way here as it does for properties with public getters and setters.
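The caching behavior described for the Set method can be illustrated with a per-type cache. The following is a sketch only: `ToySet<T>` and `ToySetCachingContext` are hypothetical stand-ins, and the dictionary-based cache is an assumption about one way to get this behavior, not a description of how DbContext does it internally.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a set of entities of type T.
public class ToySet<T>
{
    public readonly List<T> Items = new List<T>();
}

public class ToySetCachingContext
{
    // One cached set instance per entity type.
    private readonly Dictionary<Type, object> _sets = new Dictionary<Type, object>();

    // Creates the set for T on first use and returns the cached instance afterwards.
    public ToySet<T> Set<T>()
    {
        object existing;
        if (!_sets.TryGetValue(typeof(T), out existing))
        {
            existing = new ToySet<T>();
            _sets[typeof(T)] = existing;
        }
        return (ToySet<T>)existing;
    }

    // Read-only properties that delegate to Set<T>(), in the style of the fragment above.
    public ToySet<string> Names
    {
        get { return Set<string>(); }
    }

    public ToySet<int> Numbers
    {
        get { return Set<int>(); }
    }
}
```

Because the getter goes through the cache, reading `Names` twice on the same context yields the same object, so items added through one read are visible through the next; a different context instance gets its own sets.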

Summary

In this part of the series we showed how to use the various constructors on DbContext to configure connection strings and the Code First, Model First, or Database First mode of the context. We also looked at the different ways that sets can be defined on a derived context.

As always we would love to hear any feedback you have by commenting on this blog post.

For support please use the Entity Framework Pre-Release Forum.

Arthur Vickers, Developer, ADO.NET Entity Framework

Arthur Vickers posted yet another Using DbContext in EF Feature CTP5 Part 1: Introduction and Model to the ADO.NET Team Blog on 1/27/2011:

Introduction

In December we released ADO.NET Entity Framework Feature Community Technology Preview 5 (CTP5). In addition to the Code First approach this CTP also contains a preview of a new API that provides a more productive surface for working with the Entity Framework. This API is based on the DbContext class and can be used with the Code First, Database First, and Model First approaches. This is the first post of a twelve part series containing collections of patterns and code fragments showing how features of the new API can be used.

The posts in this series do not contain complete walkthroughs. If you haven’t used CTP5 before then you should read Code First Walkthrough or Model and Database First with DbContext before tackling this post.

Series Contents

New parts will be posted approximately once per day and the links below will be updated as the new parts are posted.

Part 1: Introduction and Model

- Introduces the topics that will be covered in the series

- Presents a Code First model that will be used in the subsequent parts

Part 2: Connections and Models

- Covers using DbContext constructors to configure the database connection and set the context to work in Code First, Model First, or Database First mode

- Shows different ways that the DbSet properties on the context can be defined

Part 3: Finding Entities

- Shows how an entity can be retrieved using its primary key value.

Part 4: Add/Attach and Entity States

- Shows different ways to add a new entity to the context or attach an existing entity to the context

- Covers the different entity states and shows how to change the state of an entity

Part 5: Working with Property Values

- Shows how to get and set the current and original values of individual properties

- Covers different ways of working with all the property values of an entity

- Shows how to get and set the modified state of a property

Part 6: Loading Related Entities

- Covers loading related entities eagerly, lazily, and explicitly

Part 7: Local Data

- Shows how to work locally with entities that are already being tracked by the context

- Provides some general information on the facilities provided by DbContext for data binding

Part 8: Working with Proxies

- Presents some useful tips for working with dynamically generated entity proxies

Part 9: Optimistic Concurrency Patterns

- Shows some common patterns for handling optimistic concurrency exceptions

Part 10: Raw SQL Queries

- Shows how to send raw queries and commands to the database

Part 11: Load and AsNoTracking

- Covers the Load and AsNoTracking LINQ extension methods for loading entities into the context and querying entities without having them be tracked by the context

Part 12: Automatically Detecting Changes

- Describes when and how DbContext automatically detects changes in entities and provides some pointers for when to switch this off

The Model

Many of the code fragments in the posts of this series make use of the following Code First model. Assume that the context and entity types used in the fragments are from this model unless they are explicitly defined differently in the post.

The code below can be pasted into Program.cs of a standard C# console application and many of the code fragments can then be tested by inserting them into the Main method.

Model Description

The model below contains four entity types: Princess, Unicorn, Castle, and LadyInWaiting. A princess can have many unicorns and each unicorn is owned by exactly one princess. A princess has exactly one lady-in-waiting in each castle.

A castle has a location represented by the complex type Location, which in turn has a nested complex type ImaginaryWorld.

Model Code

    using System;
    using System.Collections.Generic;
    using System.ComponentModel.DataAnnotations;
    using System.Data;
    using System.Data.Entity;
    using System.Data.Entity.Database;
    using System.Data.Entity.Infrastructure;
    using System.Data.Metadata.Edm;
    using System.Data.Objects;
    using System.Linq;

    namespace Magic.Unicorn
    {
        public class Princess : IPerson
        {
            public int Id { get; set; }
            public string Name { get; set; }
            public virtual ICollection<Unicorn> Unicorns { get; set; }
            public virtual ICollection<LadyInWaiting> LadiesInWaiting { get; set; }
        }

        public class Unicorn
        {
            public int Id { get; set; }
            public string Name { get; set; }

            [Timestamp]
            public byte[] Version { get; set; }

            public int PrincessId { get; set; } // FK for Princess reference
            public virtual Princess Princess { get; set; }
        }

        public class Castle
        {
            [Key]
            public string Name { get; set; }

            public Location Location { get; set; }
            public virtual ICollection<LadyInWaiting> LadiesInWaiting { get; set; }
        }

        [ComplexType]
        public class Location
        {
            public string City { get; set; }
            public string Kingdom { get; set; }
            public ImaginaryWorld ImaginaryWorld { get; set; }
        }

        [ComplexType]
        public class ImaginaryWorld
        {
            public string Name { get; set; }
            public string Creator { get; set; }
        }

        public class LadyInWaiting : IPerson
        {
            [Key, Column(Order = 0)]
            [DatabaseGenerated(DatabaseGenerationOption.None)]
            public int PrincessId { get; set; } // FK for Princess reference

            [Key, Column(Order = 1)]
            public string CastleName { get; set; } // FK for Castle reference

            public string FirstName { get; set; }
            public string Title { get; set; }

            [NotMapped]
            public string Name
            {
                get { return String.Format("{0} {1}", Title, FirstName); }
            }

            public virtual Castle Castle { get; set; }
            public virtual Princess Princess { get; set; }
        }

        public interface IPerson
        {
            string Name { get; }
        }

        public class PrincessDto
        {
            public int Id { get; set; }
            public string Name { get; set; }
        }

        public class UnicornsContext : DbContext
        {
            public DbSet<Unicorn> Unicorns { get; set; }
            public DbSet<Princess> Princesses { get; set; }
            public DbSet<LadyInWaiting> LadiesInWaiting { get; set; }
            public DbSet<Castle> Castles { get; set; }
        }

        public class UnicornsContextInitializer
            : DropCreateDatabaseAlways<UnicornsContext>
        {
            protected override void Seed(UnicornsContext context)
            {
                var cinderella = new Princess { Name = "Cinderella" };
                var sleepingBeauty = new Princess { Name = "Sleeping Beauty" };
                var snowWhite = new Princess { Name = "Snow White" };

                new List<Unicorn>
                {
                    new Unicorn { Name = "Binky", Princess = cinderella },
                    new Unicorn { Name = "Silly", Princess = cinderella },
                    new Unicorn { Name = "Beepy", Princess = sleepingBeauty },
                    new Unicorn { Name = "Creepy", Princess = snowWhite }
                }.ForEach(u => context.Unicorns.Add(u));

                var efCastle = new Castle
                {
                    Name = "The EF Castle",
                    Location = new Location
                    {
                        City = "Redmond",
                        Kingdom = "Rainier",
                        ImaginaryWorld = new ImaginaryWorld
                        {
                            Name = "Magic Unicorn World",
                            Creator = "ADO.NET"
                        }
                    },
                };

                new List<LadyInWaiting>
                {
                    new LadyInWaiting { Princess = cinderella,
                                        Castle = efCastle,
                                        FirstName = "Lettice",
                                        Title = "Countess" },
                    new LadyInWaiting { Princess = sleepingBeauty,
                                        Castle = efCastle,
                                        FirstName = "Ulrika",
                                        Title = "Lady" },
                    new LadyInWaiting { Princess = snowWhite,
                                        Castle = efCastle,
                                        FirstName = "Yolande",
                                        Title = "Duchess" }
                }.ForEach(l => context.LadiesInWaiting.Add(l));
            }
        }

        public class Program
        {
            public static void Main(string[] args)
            {
                DbDatabase.SetInitializer(new UnicornsContextInitializer());

                // Many of the code fragments can be run by inserting them here
            }
        }
    }

Summary

In this part of the series we introduced the topics that will be covered in upcoming parts and also defined a Code First model to work with.

As always we would love to hear any feedback you have by commenting on this blog post.

For support please use the Entity Framework Pre-Release Forum.

Arthur Vickers, Developer, ADO.NET Entity Framework

<Return to section navigation list>

Windows Azure Infrastructure

John C. Stame announced why he left the Windows Azure team (and Microsoft) in November 2010 to become a Staff Business Solutions Strategist at VMware in his detailed The Journey – MS and beyond… post of 1/28/2011:

How did I get here? What led me to walk away from the hot team at Microsoft – Windows Azure, and even more interesting, leave Microsoft after 9+ years?

First, it’s important to note that most of the 9 years I spent at Microsoft were incredibly rewarding. I worked with some really smart and good people. And Microsoft is indeed a great company to work for.

So why leave? Well, first I need to get something off my chest – let me start with Innovation and R&D. Every year for the last 9 years, Microsoft executives remind all the employees, customers, partners, the world that they spend between $6 – 8 billion in R&D annually! Really? Now, I am not implying that there is a lack of innovation going on in the mildew forest (Redmond); there are some really cool things like XBOX Live, Surface, .Net, Windows Azure, Live Mesh. But let’s face it, Windows XP to Vista to Windows 7 (a Vista SP) over 9 years was not very innovative – especially now that I am using OS X! Windows Mobile Phone strategy? Search? Over $60 billion in R&D over 9 years – I can probably come up with a list of 10 startups that had less than $300 million in funding in total that are more relevant today and innovative. …OK. I feel better now.

I mentioned being on the hot Windows Azure team, as well as listing it as innovative, and I still think there is some really cool technology there. Some of the best innovation in the last 9 years at Microsoft. Public PaaS Cloud is certainly innovative, interesting and very relevant to future deployment scenarios (not all, but some). But I started to question the overall “cloud” strategy and approach. I really think Azure technology should have first been introduced as a private cloud (on-premise) platform, enabling enterprises to build on their virtualization footing, and start with customized private PaaS. Then complement that with a Public PaaS strategy that included ISPs and Partners and enabled hybrid cloud scenarios. Instead, it’s sort of a huge leap for most enterprises to think about what workloads to build onto this primarily new and proprietary platform running in Microsoft Data Centers, and have a completely different perspective and approach to cloud patterns on-premise. Sorry, it’s not Hyper-V and Windows Server.

Then there is Ray Ozzie’s departure (not to mention all the other executives leaving to this day). Ozzie was the new Bill Gates at Microsoft. The “architect” of the new Microsoft and leading the transformation and vision for the cloud – the driving force and vision behind Azure. All of a sudden, while on the Azure team and coming to, Ozzie resigns! And then Ozzie writes his “Dawn of a New Day”. As I wrote in my blog post regarding the memo, it’s a very interesting read and, as one of my colleagues tweeted: “Shut the door, turn off your phone and read Ray Ozzie’s Dawn of a New Day”.

Anyway – my Azure bubble was popped. I was no longer a believer, nor passionate about the direction. There were other things that I won’t go into, and there were also things that made me want to stick around – mostly some good people. I will absolutely treasure some of the work and people I was fortunate to work with – some of whom are still at Microsoft. Keep up the good work!!!

I have found a renewed love for something from Microsoft that runs on the Mac – MacOffice 2011. Now, we just need OneNote on the Mac.

I am finding an incredibly rich, innovative, and very relevant approach and strategy at my new gig! With some incredibly compelling dialogue with enterprises regarding cloud. More on that later….

John’s bio is here.

My Windows Azure Compute Extra-Small VM Beta Now Available in the Cloud Essentials Pack and for General Use contained a “Problem with Microsoft Online Services and Duration of Cloud Essentials for Partners Benefit” section at the end:

Update 1/28/2010: Today, two weeks after posting my original service request, I received a phone call from Greg. He stated that the one-month duration shown in Figure 19 (above) is normal and that the duration of the subscription is one year. In the interim, I had learned from an out-of-band source at Microsoft (a Senior Marketing Manager in Microsoft’s US SMB Solution Partners group) that the Cloud Essentials for Partners benefit doesn’t expire. However, it’s classified as “Pay per use,” so the ending Active date is advanced one month at a time automatically.

Ironically, the out-of-band source’s subscription screen capture showed a one-year spread between the two Active dates.

The gory details are here.

Tim Anderson (@timanderson) asked (but didn’t answer) Server and Tools shine in Microsoft results – so why is Bob Muglia leaving? on 1/28/2011:

Quarter ending December 31 2010 vs quarter ending December 31 2009, $millions

| Segment | Revenue | Change | Profit | Change |
|---|---|---|---|---|
| Client (Windows + Live) | 5054 | -2139 | 3251 | -2166 |
| Server and Tools | 4390 | 412 | 1776 | 312 |
| Online | 691 | 112 | -543 | -80 |
| Business (Office) | 5126 | 612 | 3965 | 1018 |
| Entertainment and devices | 3698 | 1317 | 679 | 314 |
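To put the table's Change columns in relative terms, here is a minimal Python sketch that derives each segment's year-ago figure (current value minus change) and its percentage growth. It assumes the Change columns are absolute year-over-year differences in $ millions, which the table implies but does not state. The names `SEGMENTS`, `yoy_growth` and `revenue_growth_table` are illustrative only and do not come from the original post.

```python
# Figures from the table above, in $ millions:
# (revenue, revenue change, profit, profit change).
# Assumption: each "change" is the absolute difference versus the year-ago quarter.
SEGMENTS = {
    "Client (Windows + Live)": (5054, -2139, 3251, -2166),
    "Server and Tools": (4390, 412, 1776, 312),
    "Online": (691, 112, -543, -80),
    "Business (Office)": (5126, 612, 3965, 1018),
    "Entertainment and devices": (3698, 1317, 679, 314),
}


def yoy_growth(current, change):
    """Return (year-ago value, percent change relative to the year-ago value)."""
    prior = current - change
    # Divide by abs(prior) so that a loss growing larger reads as a negative percentage.
    return prior, 100.0 * change / abs(prior)


def revenue_growth_table(segments=SEGMENTS):
    """Map each segment name to its revenue growth in percent, rounded to one decimal."""
    return {
        name: round(yoy_growth(revenue, revenue_change)[1], 1)
        for name, (revenue, revenue_change, _profit, _profit_change) in segments.items()
    }


if __name__ == "__main__":
    for name, pct in revenue_growth_table().items():
        print(f"{name}: {pct:+.1f}% revenue vs. year-ago quarter")
```

Under that assumption, Server and Tools revenue grew roughly 10% from a year-ago base of 3,978, while Client revenue fell by nearly 30%.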

Microsoft highlighted strong sales for Xbox (including Kinect) as well as for Office 2010, which it said in the press release is the “fastest-selling consumer version of Office in history.”

Why is Office 2010 selling better than Office 2007? My hunch is that this is a Windows 7 side-effect. New Windows, new Office. I do think Office 2010 is a slightly better product than Office 2007, but not dramatically so. SharePoint Workspace 2010, about which I mean to post when I have a moment, is a big disappointment, with a perplexing user interface and limited functionality.

Windows 7 revenue is smaller than that of a year ago, but then again the product was released in October 2009 so this is more a reflection of its successful launch than anything else.

What impressed me most is the strong performance of Server and Tools, at a time when consolidation through virtualisation and growing interest in cloud computing might be reducing demand. Even virtual machines require an OS licence though, so maybe HP should worry more than Microsoft about that aspect.

I still think they are good figures, and make Server and Tools VP Bob Muglia’s announced departure even more puzzling. Just what was his disagreement with CEO Steve Ballmer?

Server and Tools revenue includes Windows Azure, but it sounds like Microsoft’s cloud is not generating much revenue yet. Here is what CFO Peter Klein said:

Moving on to Server and Tools. For Q3 and the full year, we expect non-annuity revenue, approximately 30% of the total, to generally track with the hardware market. Multi-year licensing revenue which is about 50% of the total, and enterprise services, the remaining 20%, should grow high-single digits for the third quarter and low double-digits for the full fiscal year.

This suggests that 80% of the revenue is from licensing and that 20% is “enterprise services” – which as I understand it is the consulting and enterprise support division at Microsoft. So where is Azure? [Emphasis added.]

Online services, which is Bing and advertising, announced another set of dismal results. Another part of Microsoft’s cloud, Exchange and SharePoint online, is lost somewhere in the Business segment. Overall it is hard to judge how well the company’s cloud computing products are performing, but I think it is safe to assume that revenue is tiny relative to the old Windows and Office stalwarts.

Windows Phone 7 gets a mention:

While we are encouraged by the early progress, we realize we still have a lot of work ahead of us, and we remain focused and committed to the long-term success of Windows Phone 7.

It looks like revenue here is tiny as well; and like most corporate assertions of commitment, this is a reflection of the doubts around Microsoft’s mobile strategy overall: how much of it is Windows Phone 7, and how much a future version of full Windows running on ARM system-on-a-chip packages?

Still, these are good figures overall and show how commentators such as myself tend to neglect the continuing demand for Windows and Office when obsessing about a future which we think will be dominated by cloud plus mobile.


One possible answer to the Muglia question: “Current Azure revenue is a rounding error in the STB income statement.”

Rich Miller reported Microsoft’s Belady Gets Back to Building data centers in this 1/27/2011 post to the Data Center Knowledge blog:

After nearly a year at Microsoft Research, Christian Belady has returned to Global Foundation Services, the unit of Microsoft that builds the company’s data centers. Belady will be the General Manager of Datacenter Research (DCR), reporting to GFS head Kevin Timmons.

The new position marks Belady’s return to working on data center designs that will be implemented in upcoming facilities. That marks a shift from his post at the Extreme Computing Group at Microsoft Research, which was focused on a longer-term vision for transforming hardware design.

“DCR will be an advanced development lab (versus a traditional research lab), whose horizon is one step beyond the data centers we have on the drawing board today,” Belady wrote in a blog post announcing his transition. “I am blessed to have yet another great opportunity.”

After 11 months in the research unit, Belady said he was inspired by a recent visit to Microsoft’s new modular data center in Quincy, Washington, which brought to life many of the design concepts he’d participated in during his time at GFS.

“I wanted to be part of that team again, it was that simple,” Christian writes. “I also have complete respect and trust for the GFS management team in their values and strategic direction. So when the leadership in GFS offered the opportunity, there was no question in my mind on how to proceed.”

While Belady relished the opportunity to explore potentially paradigm-shifting hardware designs at the eXtreme Computing Group, the data center industry has entered an exciting phase of evolution in the design and construction of energy efficient data centers, one of Belady’s favorite causes.

“Whatever you see today in our data centers, you’re not likely to see tomorrow, as we are a company that is constantly evolving and innovating,” he said of Microsoft. “As a result, the opportunities are endless for its data center engineers and services operations team, and for the customers that we serve.”

Sudesh Oudi listed Questions to answer when determining your platform as a service provider (PaaS) in a 1/24/2011 post to the ServiceXen blog:

If you’re reading this, I am sure you’re somewhat familiar with Service and Infrastructure offerings. In this post I take a look at what features and functions to look for in a platform. Think of the selection as similar to choosing the operating system for your next smart phone.

When considering the options, it helps to condense your decision-making factors.

- Extent of usable feature set (things that your organization will want to do) – PaaS products fall into two general categories: those designed to satisfy specific development scenarios, and those designed as general-purpose development environments that compete with popular Java, .NET, and scripting platforms.

- Application Purpose – Some PaaS platforms are designed for content-oriented applications, while others are designed for transactional business applications. How will your application or service be used, and is the platform you select designed for that use?

- Programming Language – Some of the PaaS environments are designed for programmers who work in Java, C#, or similar third-generation languages, while others use dynamic scripting languages such as PHP, Python, and Ruby (on Rails). Some PaaS products provide visual tools appropriate for developers who are used to working in Visual Studio and other fourth-generation language environments, and others provide tools for non-technical business analysts.

What to review prior to making a platform choice

- What kind of database services does the organization need, and does the platform provide them?

- What kind of application logic would you want to build or configure with the platform? ( I think the tree planting)

- What kinds of development tools does the platform provide?

- Does the platform allow on-premise deployment?

- Does the platform provide application authentication?

- Does the platform provide a detailed billing service for application usage?

There is of course one other option if you find that PaaS doesn’t meet your needs. Infrastructure-as-a-service vendors provide developers with access to virtual machines, storage, and network capacity, and most use a subscription-based billing model to charge developers based on the resources they consume. Most IaaS offerings expose APIs but provide no programming model, no development environment, and only basic application administration. Some common examples are below:

- The reigning vendor is Amazon Web Services. Amazon Web Services (AWS) includes the Elastic Compute Cloud (EC2), Simple Storage Service (S3), and SimpleDB. These services are widely used by development groups creating custom “cloud” applications and by PaaS providers that don’t want to build and run their own data centers.

- For those creating slick web 2.0 “shiny” web applications, Engine Yard is a hosting environment for Ruby on Rails applications. Engine Yard is a startup that provides “cloud,” managed hosting, and on-premise deployment options for applications built using the Ruby language and either the Rails or the Merb framework. The “cloud” options are subscription-based “slices” of Ruby on Rails clusters, with additional options for dedicated cluster resources; the on-premise option is a locally installed software image.