Windows Azure and Cloud Computing Posts for 10/28/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

•• Updated 10/30/2010 with PDC 2010-related articles marked ••

• Updated 10/29/2010 with articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Azure DataMarket and OData

- AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

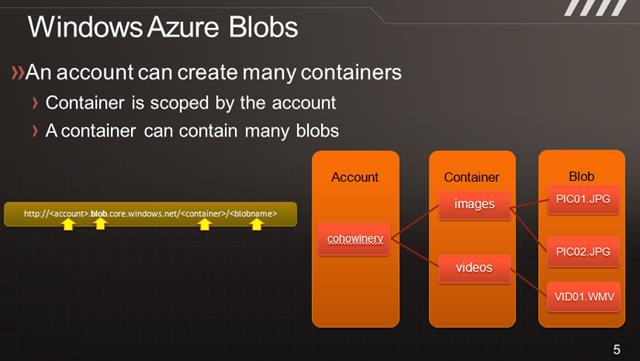

Azure Blob, Drive, Table and Queue Services

•• Jai Haridas presented Windows Azure Storage Deep Dive at PDC 2010:

Windows Azure Storage is a scalable cloud storage service that provides the ability to store and manipulate blobs, structured non-relational entities, and persistent queues. In this session you will learn tips, performance guidance, and best practices for building new applications or migrating existing applications that use Windows Azure Storage.

SQL Azure Database, Azure DataMarket and OData

•• James Staten lauds the Windows Azure DataMarket in his Unlock the value of your data with Azure DataMarket post of 10/29/2010 to the Forrester Research blogs:

If the next eBay blasts onto the scene but no one sees it happen, does it make a sound? Bob Muglia, in his keynote yesterday at the Microsoft Professional Developers Conference, announced a slew of enhancements for the Windows Azure cloud platform but glossed over a new feature that may turn out to be more valuable to your business than the entire Platform as a Service (PaaS) market. That feature (so poorly positioned as an “aisle” in the Windows Azure Marketplace) is Azure DataMarket, the former Project Dallas. The basics of this offering are pretty underwhelming – it’s a place where data sets can be stored and accessed, much like Public Data Sets on Amazon Web Services and those hosted by Google Labs. But what makes Microsoft’s offering different are the mechanisms around these data sets that make access and monetization far easier.

Every company has reams of data that are immensely valuable: sales data, marketing analytics, financial records, customer insights and the intellectual property it has generated in the course of business. We all know what data in our company is of value but, as with our brains, we are lucky if we mine a tenth of its value. Sure we hit the highlights. Cable and satellite television providers know what shows we watch and, from our DVRs, whether we fast forward through commercials. Retailers know, by customer, what products we buy, how frequently and whether we buy more when a sales promotion has been run. But our data can tell us so much more, especially when overlaid with someone else’s data.

For example, an advertising agency can completely change the marketing strategy for Gillette with access to Dish Network’s or TiVo’s DVR data (and many do). Telecom companies collect GPS pings from all our smart phones. Wouldn’t it be nice if you could cross correlate this with your retail data and find out what type of buyers are coming into your stores and leaving without buying anything? Wouldn’t it be even better if you knew the demographics of those lookie-loos and what it would take to get them to open their wallets? Your sales data alone can’t tell you that.

Wouldn’t it be nice if you could cross correlate satellite weather forecast data with commodity textile inventories and instruct your factories to build the right number of jackets of the right type and ship them to the right cities at just the right time to maximize profits along all points of the product chain? These relationships are being made today but not easily. Data seekers need to know where to find data providers, negotiate access rights to the data then bring in an army of data managers and programmers to figure out how to integrate the data, acquire the infrastructure to house and analyze the data and the business analysts to tweak the reports. Who has time for all that?

What eBay did for garage sales, DataMarket does for data. It provided four key capabilities:

- A central place on the Internet where items can be listed, searched for and marketed by sellers

- A simple, consistent ecommerce model for pricing, selling and getting paid for your items

- A mechanism for separating the wheat from the chaff.

- A simple and trusted way of receiving the items

With Azure DataMarket now you can unlock the potential of your valuable data and get paid for it because it provides these mechanisms for assigning value to your data, licensing and selling it, and protecting it.

If you anonymized your sales data, what would it be worth? If you could get access to DirecTV’s subscriber data, AT&T’s iPhone GPS pings, Starbucks’ sales data or the statistics necessary to dramatically speed up a new drug test, what would it be worth to you? Now there is a commercial market means of answering these questions.

Azure DataMarket solves the third problem with some governance. DataMarket isn't a completely open market where just anyone can offer up data. Microsoft is putting in place mechanisms for validating the quality of the data and the authorization to vend it. And through data publication guidelines it is addressing the fourth factor: assurance that the data can be easily consumed.

A key feature of DataMarket is preparing the data in the OData format, making it easy to access directly through such simple business intelligence tools as Microsoft Excel. Where other information services give you access to raw data in a variety of cryptic or proprietary formats, Azure DataMarket data sets can be pulled right into pivot tables -- no programming required.

Now there aren’t a huge number of datasets in the market today; it will take time for it to reach its full potential. This presents an opportunity for first movers to capture significant advantage. And while there is a mechanism for pricing and selling your data, there’s little guidance on what the price should be. And yes, you do think your data is worth more than it really is…now. I’d like to see a data auction feature that will bring market forces into play here.

There are a variety of companies already making money off their datasets; some making nearly as much from their data as they are leveraging that data themselves. Are you? Could you? It’s time to find out.

•• Pat Wood (@patrickawood, pictured below) posted Microsoft Unveils New Features for SQL Azure on 10/30/2010:

Steve Yi’s PDC 2010 video, “What’s New in SQL Azure?”, reveals the new features Microsoft is now adding to SQL Azure. The features include a new SQL Azure Developer Portal and web-based Database management tools. The new SQL Azure Data Sync CTP2 enables SQL Azure databases or tables to be synchronized between SQL Azure and on-premises SQL Server databases. Additionally, the new SQL Azure Reporting CTP enables developers to save SQL Azure Reports as Microsoft Word, Microsoft Excel, and PDF files.

Microsoft announced that all of these features will be available before the end of the year. The SQL Azure Reporting CTP and the SQL Azure Data Sync CTP2 are beginning to be made available to developers now. You can apply for the CTPs here.

Visit our Gaining Access website to learn more about SQL Azure and download our SQL Azure and Microsoft Access demonstration application.

Other free downloads include the Appointment Manager which enables you to save Access Appointments to Microsoft Outlook.

•• Chris Koenig [pictured below] posted OData v2 and Windows Phone 7 on 10/30/2010:

Yesterday at PDC10, Scott Guthrie demonstrated a Windows Phone 7 application that he was building using Silverlight. During this time, he mentioned that a new OData stack had been released for .NET which included an update to the library for Windows Phone 7. Now you might think that this was just a regular old upgrade – you know… bug fixes, optimizations, etc. It was, but it also signaled a rewrite of the existing CTP that had been available for Windows Phone 7. In this rewrite, there are some important feature changes that you need to be aware of, as announced by Mike Flasko on the WCF Data Services Team Blog yesterday:

- LINQ support in the client library has been removed as the core support is not yet available on the phone platform. That said, we are actively working to enable this in a future release. Given this, the way to formulate queries is via URIs.

- We’ve added a LoadAsync(Uri) method to the DataServiceCollection class to make it simple to query services via URI.

- So you can easily support the phone application model we’ve added a new standalone type ‘DataServiceState’ which makes it simple to tombstone DataServiceContext and DataServiceCollection instances.

In this post, I’ll go through the first two changes, explain what they mean to you, and show you how to adapt your application to use the new library. In a future post, I’ll explore the new support for the phone application model and tombstoning.

Where are the new bits?

First things first – we need to get our hands on the new bits. There are 3 new download packages available from the CodePlex site for OData:

- OData for .NET 4.0, Silverlight 4 and Windows Phone 7 (Source Code, if you need it)

- OData Client Libraries and Code Generation Tool (just the binaries, and a new DataSvcUtil tool)

- OData Client Sample for Windows Phone 7 (great for learning)

After downloading, don’t forget to “unblock” the ZIP files, or Visual Studio will grouch at you. Just right-click on the ZIP file and choose Properties from the menu. If there is a button at the bottom labeled “Unblock”, click it. That’s it.

Once you get the Client Libraries and Code Generation Tool zip file unblocked, and unzipped, replace the assembly reference in your project from the old CTP version of the System.Data.Services.Client.dll to the new version supplied in this new download.

You’ll also need to re-generate the client proxy in your code using the new version of DataSvcUtil. Remember how? Just run this command from a Command Prompt opened to the folder where you unzipped the tools:

DataSvcUtil /uri:http://odata.netflix.com/catalog /dataservicecollection /version:2.0 /out:NetflixCatalog.cs

Note: You must include the /dataservicecollection option (which itself requires the /version:2.0 option) to get INotifyPropertyChanged attached to each of your generated entities. If you’re not going to use the DataServiceCollection objects as I will, then you might not need this, but I’m using it in my samples if you’re following along at home.

Now you can replace your old proxy classes with these new ones. Now is when the real fun begins…

Wherefore art thou LINQ?

The most impactful change has got to be the removal of LINQ support by the DataServiceProvider. From Mike’s post:

LINQ support in the client library has been removed as the core support is not yet available on the phone platform. That said, we are actively working to enable this in a future release. Given this, the way to formulate queries is via URIs.

Wow. I don’t know about you, but I have come to depend on LINQ for almost everything I do in .NET anymore, and this one really hits me square between the eyes. Fortunately, these URI-based queries aren’t too complicated to create, and Mike also points out that they’ve added a new method on the context to make this a bit easier on us:

We’ve added a LoadAsync(Uri) method to the DataServiceCollection class to make it simple to query services via URI.

With my custom URI and this new method, it’s actually almost as simple as before, sans LINQ doing all my heavy lifting.

Surgery time

So – to finish upgrading my Netflix application, I’ve got to make some changes to the existing MainViewModel.LoadRuntimeData method. Here’s what it looks like from the last implementation:

As you can see, this contains quite a bit of LINQ magic. Unfortunately, that’s going to have to go. The only thing we can really salvage is the instantiation of the NetflixCatalog class based on the Uri to the main OData service endpoint. This is important – we don’t use a URI with all the query string parameters in one shot because the DataContext class needs to have a reference to the base URI for the service, and future queries will be based on that base URI.

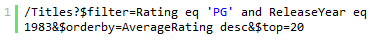

Rebuild the query

Now that we have a reference to the main service, we now have to build our own query. To do this, we’ll need to manually convert the LINQ query into something that the previous DataContext would have done for us. There are a couple ways to figure this out. First, we could dive into the OData URI Conventions page on the OData web site and read all about how to use the various $ parameters on the URL string to get the results we want. The sneaky way, however, would be to run the old code with the old provider and look at the URI that got sent to the Netflix service by snooping on it with Fiddler. The results are the same – one long URL that has all the parameters included.

Although it’s pretty long, note how clean the URL is – Where becomes $filter, OrderBy becomes $orderby and Take becomes $top. This is what is so great about OData: clean, clear, easy to read URLs.
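The LINQ-to-URI mapping described above can be sketched in a few lines. The sketch is in Python only to keep it self-contained and runnable outside Visual Studio. The helper name `build_odata_query`, the entity set `Titles`, and the filter and sort expressions are hypothetical illustrations, not the query from the original Netflix sample.

```python
from urllib.parse import quote


def build_odata_query(entity_set, filter_expr=None, order_by=None, top=None):
    """Build a relative OData query URI from LINQ-style pieces.

    Where -> $filter, OrderBy -> $orderby, Take -> $top.
    (Hypothetical helper for illustration only.)
    """
    options = []
    if filter_expr is not None:
        # Percent-encode spaces but keep common OData punctuation readable.
        options.append("$filter=" + quote(filter_expr, safe="()',/"))
    if order_by is not None:
        options.append("$orderby=" + quote(order_by, safe=",/"))
    if top is not None:
        options.append("$top=" + str(int(top)))
    return entity_set + ("?" + "&".join(options) if options else "")


# Hypothetical query: ten highest-rated titles released in 2009 or later.
relative_uri = build_odata_query(
    "Titles",
    filter_expr="ReleaseYear ge 2009",
    order_by="AverageRating desc",
    top=10,
)
print(relative_uri)
```

The result is a relative URI, which matches the approach in this post: the service's base URI stays with the context object, and only the part after it is passed along with each query.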

Armed with our new URI, we can go in and replace the LINQ query with a new Uri declaration. Since we already have the new DataContext based on the service’s URI, we can remove that from here and just use the stuff that comes after:

Don’t forget to mark this new URI as UriKind.Relative. This new URI is definitely NOT an absolute URI, and you’ll get an error if you forget. Here’s what the new code looks like so far:

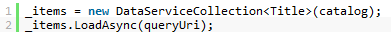

ObservableCollection –> DataServiceCollection

Now that the DataContext is created, and the replacement query is built, it’s time to load up the data. But what are we going to load it up into? Depending on which version of my old application you were using, you might see code like I listed above with ObservableCollection or you might have the version that I converted to use the DataServiceCollection. This new model definitely wants us to use DataServiceCollection as it has some neat ways to manage loading data for us. That means we will have to swap out our definition of the Items property with a DataServiceCollection.

First, replace instances of ObservableCollection with DataServiceCollection. Second, remove the initializer for the Items property variable – it’s no longer needed. Third, and this is optional, you can tap into the Paging aspects of the DataServiceCollection by adding a handler to the DataServiceCollection.Loaded event.

Note: I don’t need this feature now, so I’m not going to add code for it. I’ll leave it as an exercise for the reader, or you can hang on for a future post where I add this back in.

Run the query

Now that my query URIs are defined, and my DataServiceCollection objects are in place, it’s time to wire up the final changes to the new query. For this, all I have to do is initialize the Items property with a DataServiceCollection and ask it to go run the query for us.

Notice the simplified version of the loading process. Instead of having to go through and manually load up all the items in the ObservableCollection, here the DataServiceCollection handles all that hard work for us. The main thing we need to remember is to initialize it with the DataContext before calling out to it.

Wrapping it all up

Now that we’ve got everything working, let’s take a look at the whole LoadRuntimeData method:

Except for a few minor changes to the ViewModel properties (all we really did was change a type from ObservableCollection to DataServiceCollection) the actual code changes were pretty minimal. I still don’t like that I have to write my own URL string, but the team is going to address that for me in the future, so I guess I can hang on until then.

I’ve uploaded the project to my Skydrive, so you can download this version to see it in action. It’s still not a very exciting application, but it does show off how to use the new OData library. As always, thanks for reading, I hope you found it valuable, and let me know if you have any questions.

Chris Koenig is a Senior Developer Evangelist with Microsoft, based in Dallas, TX. Prior to joining Microsoft in March of 2007, Chris worked as a Senior Architect for The Capital Group in San Antonio, and as an Architect for the global solution provider Avanade.

Chris Woodruff observed in a comment to the preceding post:

When developing Windows Phone 7 applications with the new OData Client Library, there is one hint and two gotchas:

- Hint -- If you are a LINQ'er and want to get your URIs written quickly, go to my blog post "Examining OData – Windows Phone 7 Development — How to create your URI’s easily with LINQPad" at http://www.chriswoodruff.com/index.php/2010/10/28/examining-odata-how-to-create-your-uris-easily-with-linqpad/

- Gotcha -- It seems the ability to use the Reactive Framework has been broken with this new update of the OData Client Library. To load data asynchronously into a DataServiceCollection<T>, you need to have an observer tied to your class, as most MVVM patterns show. Rx is different in that it allows Observers to subscribe to your events, which does not seem to be handled in the code for the DataServiceCollection<T> written for WP7.

- One more Gotcha -- If you have the new Visual Studio Async CTP installed on your machine you will not be able to compile the source of the OData Client Library that is on the CodePlex site. It has variable names of async that conflict with the new C#/VB.NET keywords async and await.

•• Yavor Georgiev reported wcf.codeplex.com is now live in a 10/29/2010 post to The .NET Endpoint blog:

Over the last few weeks the WCF team has been working on a variety of new projects to improve WCF’s support for building HTTP-based services for the web. We have also focused on a set of features to enable JavaScript-based clients such as jQuery.

We are proud to announce that these projects are now live and available for download on http://wcf.codeplex.com. You can get both the binaries and the source code, depending on your preference. Please note that these are prototype bits for preview purposes only.

For more information on the features, check out this post, this post, this PDC talk, and the documentation on the site itself.

Our new CodePlex site will be the home for these and other features, and we will continue iterating on them with your help. Please download the bits and use the CodePlex site’s Issue Tracker and Discussion tab to let us know what you think!

•• Peter McIntyre posted a detailed syllabus of Data access for WCF WebHttp Services for his Computer Science students on 10/29/2010:

At this point in time, you have created your first WCF WebHttp Service. In this post, intended as an entry-level tutorial, we add a data access layer to the service.

Right at the beginning here, we need to state a disclaimer:

Like many technologies, WCF WebHttp Services offers functionality that spans a wide range, from entry-level needs, through to high-scale, multi-tier, enterprise-class requirements. This post does NOT attempt to cover the range.

Instead, we intend to provide an entry-level tutorial, to enable the developer to build a foundation for the future, where the complexity will be higher. We are intentionally limiting the scope and use case of the topics covered here, so that the new WCF service developer can build on what they know.

WCF WebHttp Services, as delivered in .NET Framework 4, are still fairly new as of this writing (in October 2010). The MSDN documentation is typically very good. However, the information needed by us is scattered all over the documentation, and there’s no set of tutorials – by Microsoft or others – that enable the new WCF service developer to get success quickly. The blog posts by the ADO.NET Entity Framework and WCF teams are good, but the examples tend to be focused on a specific scenario or configuration.

We need a tutorial that is more general, consistent, and predictable – a tutorial that enables the developer to reliably add a data access layer to a WCF WebHttp Service, and get it working successfully. Hopefully, this tutorial meets these needs.

Topics that this post will cover

In the last blog post, titled “WCF WebHttp Services introduction”, you learned about the programming model, and got started by creating a simple project from the template. Make sure you cover that material before you continue here.

This blog post will cover the following topics:

- Principles for adding a data access layer to a WCF WebHttp Service

- Brief introduction to POCO

- A brief introduction to the enabling C# language features (generics, LINQ, lambdas)

- Getting started by creating a data-enabled service

Principles for adding a data access layer to a WCF WebHttp Service

The Entity Framework is the recommended data access solution for WCF WebHttp Services.

Review – Entity Framework data model in a WCF Data Service

In the recent past, you gained experience with the creation of Entity Framework data models, as you programmed WCF Data Services. That programming model (WCF Data Services) is designed to expose a data model in a RESTful manner, using the OData protocol.

A feature of the OData protocol is that the data is delivered to the consumer in AtomPub XML, or in JSON. Let’s focus on the XML for a moment.

The AtomPub XML that expresses the entities is “heavy” – it includes the AtomPub wrapping structure, schema namespace information, and related attributes for the properties and values.

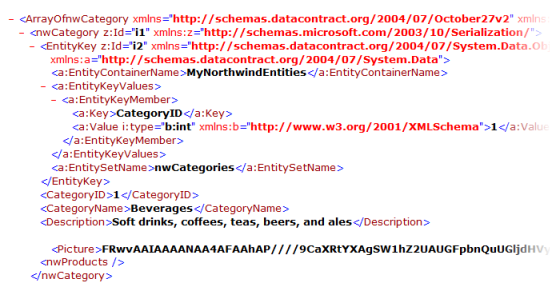

The image below is the XML that’s returned by a WCF Data Service, for the Northwind “Categories” collection. Only the first object in the collection is shown. As you can see, the actual data for the Category object is near the bottom, in about four lines of code.

Preview – Entity Framework data model in a WCF WebHttp Service

Now, let’s preview the use of an Entity Framework data model in a WCF WebHttp Service.

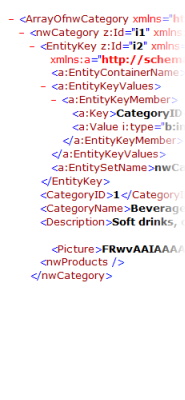

The default XML format for an entity, or a collection of entities, is known as entity serialization. It too is “heavy”, although not as heavy as WCF Data Service AtomPub XML. Entity serialization includes schema namespace information, and related attributes for the properties and values.

The image below is the XML that’s returned by a non-optimized WCF WebHttp Service, for the Northwind “Categories” collection. Only the first object in the collection is shown. As you can see, the actual data for the Category object is near the bottom, in about four lines of code.

WCF WebHttp Services should consume and provide data in easy-to-use concise formats. Therefore, we optimize the entity serialization process, by performing one additional step after we create our Entity Framework data model: We generate POCO classes. Generating plain old CLR classes (POCO) enables WCF to serialize (and deserialize) objects in a very concise XML format.

The image below is the XML that’s returned by a WCF WebHttp Service that has had POCO classes generated for the data model, for the Northwind “Categories” collection. Only the first object in the collection is shown. As you can see, most of the XML describes the actual data for the Category object.

The following is a summary, a side-by-side comparison, of these three XML formats:

AtomPub EF serialization POCO serialization

Peter continues with a “Brief Introduction to POCO” and other related topics.

•• Azure Support shared my concern about lack of news about SQL Azure Transparent Data Encryption (TDE) and data compression in a SQL Azure Announcements at PDC 2010 post of 10/29/2010:

Microsoft saves its big announcements for Azure till its PDC conference, so what did we get at PDC 2010? The biggie was SQL Azure Reporting, a major announcement and a much-requested feature for SQL Azure. In the demonstrations, the compatibility with SQL Server 2008 R2 Reporting Services looked very good – simply drag a report onto the design surface in an ASP.NET or ASP.NET MVC Web Role, deploy it to Azure, and the report will be visible on the web page. SQL Azure Reporting is currently in CTP and will only be available per invite later in 2010.

SQL Azure Data Sync CTP 2 was announced with the major new feature being the ability to sync different SQL Azure databases. You can sign up for the Data Sync and Reporting CTPs here.

Project Houston, which is an online tool for managing and querying SQL Azure databases, is now named Database Manager for SQL Azure. This is essentially a lightweight and online version of SSMS and addresses some of the weaknesses of the quite frankly under-powered Azure developer portal.

And What We Didn’t Get…

First and foremost, we didn’t get any smaller versions of SQL Azure. This is a pity, since it would be an excellent complement to the new ultra-small Windows Azure instances. The high barrier to entry is the most persistent complaint about Azure, and the 1GB starter size for a SQL Azure database is definitely way too large for most new web projects. There was also a deafening silence on backups (we were promised two backup types – clone and continuous – by the end of 2010, but this promise is almost certain not to be met). Also no news on compression and encryption.

•• The OData Blog posted New OData Producers and Consumers on 10/28/2010:

- eBay now exposes its catalog via OData

- SAP is working to add OData support to its next generation of products

- Twitpic now exposes its Images, Users, Comments etc via OData

- Facebook Insights has updated its OData service and added more content

- Netflix will soon allow you to manage your Netflix Queue using OData - more details coming soon

- Windows Azure Marketplace DataMarket (formerly Dallas) has been released and it supports OData

- Windows Live now exposes its data via OData, and you can read a walk-thru here

- Microsoft Dynamics CRM 2011 allows you to query its data using OData

- Microsoft Pinpoint marketplace exposes its data using OData - more details coming soon.

- WCF Data Services October 2010 CTP was released with support for PATCH, Prefer, Multi-valued properties and Named Resource Stream.

- OData client library for Windows Phone 7 source has been released.

- Tableau - an excellent client-side analytics tool - can now consume OData feeds

•• Cihangir Biyikoglu continued his series with Building Scalable Database Solution in SQL Azure - Introducing Federation in SQL Azure of 10/28/2010:

In the previous post [see below], I described the scale-out vs scale-up techniques and why scale-out was the best thing since sliced bread and why specifically sharding or horizontal partitioning wins as the best pattern in the world of scale-out.

I also talked about the advantages of using SQL Azure as the platform for building sharded apps. In this post, I’d like to address a few areas that make the life of the admin and the developer more challenging when working with sharded apps and how SQL Azure will address these issues in future. Basically, we’ll address these two challenges:

#1 …so I built the elastic app that can repartition, but the repartition operation requires quite a lot of acrobatics. How do I easily repartition?

#2 Robust runtime connection routing sounds great. How do I do that? How about routing while repartitioning of data is happening at the backend? What if the data partition, the atomic unit, moves by the time I look up and connect to a shard?

First thing first; Introducing Federation in SQL Azure

To address some of these challenges above and more, we will be introducing federations in SQL Azure. Federations are key to understanding how scale-out will work. Here are the basic concepts:

- Federations represent all data being partitioned. A federation defines the distribution method as well as the domain of valid values for the federation key. In the picture below, you can see that the customer_federation is part of sales_db.

- Federation Key is the key used for partitioning the data.

- Atomic Unit (AU) represents a single instance value of the federation key. Atomic units cannot be split, so all rows that contain the same instance value of the federation key always stay together.

- Federation Member (aka Shard) is the physical container for a range of atomic units.

- Federation Root is the database that houses federations and federation directory.

- Federated Tables refer to tables in federation members that contain partitioned data, as opposed to Reference Tables, which refer to tables that contain data that is repeated in federation members for lookup purposes.

The following figure shows how it all looks when you put these concepts together. Sales_DB is the database that contains a federation (a.k.a. the federation root). The federation called customer_federation is blown up to show you the details. Federation members contain ranges of atomic units (AU) such as [min to 100). AUs contain the collection of all data for the given federation key instance such as 5 or 25.

Repartitioning Operations with Federations

Federations provide operations for online repartitioning. The SPLIT operation spreads a federation member’s data (a collection of atomic units) across multiple federation members. MERGE glues federation members’ data back together. This is exactly how federations address challenge #1 above. With the SPLIT and MERGE operations, administrators can simply trigger repartitioning operations on the server side and, most importantly, they can do this without downtime!

Connecting to Federations

Federations also allow connection to the federation’s data using a special statement: “USE FEDERATION federation_name(federation_key_value)”. All connections are established to the database containing the federation (a.k.a. the root database). However, to reach a specific AU or a federation member, instead of requiring a database name, applications only need to provide the federation key value. This eliminates the need for apps to cache any routing or directory information when working with federations. Regardless of any repartitioning operation in the environment, apps can reliably connect to the AU or to the federation member that contains the given federation key value, such as tenant_id 55, simply by issuing USE FEDERATION orders_federation(55). Again, the important part is that SQL Azure guarantees that you will always be connected to the correct federation member regardless of any repartitioning operation that may complete right as you are establishing a connection. This is how federations address challenge #2 above.
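To make the routing idea concrete, here is a toy model of a range-partitioned federation in Python. It is only a sketch of the concept and not how SQL Azure implements federations: the class `Federation`, its method names, and the member names are all hypothetical. Each member owns a half-open key range such as [min, 100), and a caller supplies only the federation key value, never a member name.

```python
import bisect


class Federation:
    """Toy model of a range-partitioned federation (not SQL Azure's code)."""

    def __init__(self):
        # Sorted range boundaries; N boundaries delimit N + 1 members.
        self._split_points = []
        self._members = ["member_0"]  # initially one member covers all keys
        self._next_id = 1

    def member_for(self, key):
        """Return the member whose range [low, high) contains key."""
        return self._members[bisect.bisect_right(self._split_points, key)]

    def split(self, at):
        """Split the member containing `at` into [low, at) and [at, high)."""
        if at in self._split_points:
            raise ValueError("already a range boundary")
        index = bisect.bisect_right(self._split_points, at)
        self._split_points.insert(index, at)
        self._members.insert(index + 1, "member_%d" % self._next_id)
        self._next_id += 1

    def merge(self, at):
        """Merge the two members that meet at boundary `at`."""
        index = self._split_points.index(at)
        del self._split_points[index]
        del self._members[index + 1]


fed = Federation()
fed.split(100)                      # ranges: [min, 100) and [100, max)
before = fed.member_for(55)
fed.split(50)                       # repartition: [min, 50), [50, 100), [100, max)
after = fed.member_for(55)
print(before, after)
```

The caller's code is the same before and after the split: it asks for key 55 and the directory resolves the right member. That is the property the USE FEDERATION statement is described as providing, with the lookup done on the server side so applications need not cache routing information.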

I’d love to hear feedback from everyone on federations. Please make sure to leave a note here or raise issues in the SQL Azure forums here.

• Moe Khosravy sent a 10/28/2010 e-mail message: Former Microsoft Codename Dallas will be taken offline on January 28, 2011. New Portal Announced:

Former Microsoft Codename Dallas will be taken offline on January 28, 2011.

Microsoft Codename Dallas, now Windows Azure DataMarket, is commercially available!!!

How does this impact you?

With this announcement, the old Dallas site will be taken offline on Jan 28th 2011. Until then, your applications will continue to work on the old site but customers can register on our new portal at https://datamarket.azure.com . Additional details on the transition plan are available HERE.

Commercial Availability Announced

Today Microsoft announced commercial availability of DataMarket aka Microsoft Codename “Dallas” which is part of Windows Azure Marketplace. DataMarket will include data, imagery, and real-time web services from leading commercial data providers and authoritative public data sources.

DataMarket enables developers worldwide to access data as a service. DataMarket exposes APIs that developers on any platform can use to build innovative applications. Developers can also use service references to these APIs in Visual Studio, making it easy to consume the datasets.

Millions of information workers will now be able to make better data purchasing decisions using rich visualization on DataMarket. DataMarket connectivity from Excel and PowerPivot will enable information workers to perform better analytics by mashing up enterprise and industry data.

Today’s key announcements around Dallas include:

- Windows Azure Marketplace is the all-up marketplace brand for Microsoft’s cloud platform, and Dallas is the DataMarket section of Windows Azure Marketplace

- DataMarket has been released with new user experience and expanded datasets from leading content providers!

- Excel Add-in for DataMarket is now available for download at https://datamarket.azure.com/addin

- DataMarket is launching with 40+ content provider partners, with content that spans demographic, geospatial, financial, retail, sports, weather, environmental and health care data.

Thank you for being an early adopter customer of the Dallas service. We hope to see you on the new DataMarket.

• The SQL Server Team posted Announcing an updated version of PowerPivot for Excel on 10/28/2010:

Today, Microsoft is announcing the launch of Windows Azure Marketplace (formerly codenamed “Dallas”). The Windows Azure Marketplace is an online marketplace to find, share, advertise, buy and sell building block components, premium data sets and finished applications. Windows Azure Marketplace includes a data section called DataMarket, aka Microsoft Codename Dallas.

With this announcement, the SQL Server team has released an updated version of PowerPivot for Excel with functionality to support true 1-click discovery and service directly to DataMarket. Using this new PowerPivot for Excel add-in, customers will be able to directly access trusted premium and public domain data from DataMarket. Download the new version of PowerPivot at http://www.powerpivot.com

FAQ

1. I’ve installed the new PowerPivot for Excel add-in… now what do I do?

Once you install the new add-in, go to the PowerPivot work area and you’ll notice under the “Get External Data” section a new icon that allows you to directly discover and consume data from DataMarket.

2. In addition to being able to directly connect to DataMarket, is there any other new functionality in PowerPivot for Excel or PowerPivot for SharePoint?

This updated version only adds direct access to Marketplace and no additional functionality is being shipped in PowerPivot for Excel. There’re no changes in PowerPivot for SharePoint either.

3. I just installed the latest version of PowerPivot from Download Center but I don’t see the DataMarket icon.

It takes about 24 hours to replicate the new bits to all the servers worldwide. Please be patient and try again after a few hours.

• Wayne Walter Berry (@WayneBerry) suggested on 10/28/2010 watching Steve Yi’s prerecorded Video: What's New in SQL Azure? session for PDC 2010:

SQL Azure is Microsoft’s cloud data platform. Initially offering a relational database as a service, there are new enhancements to the user experience and additions to the relational data services provided. Most notably, updates to SQL Azure Data Sync enable data synchronization between on-premises SQL Server and SQL Azure.

Additionally, SQL Azure Reporting will soon become available for customers and partners to provide reporting on SQL Azure databases. This session will provide an overview and demonstration of all the enhancements to the SQL Azure services, and explores scenarios on how to utilize and take advantage of these new features.

• Steve Yi [pictured below] recommended on 10/29/2010 Eric Chu’s pre-recorded Video: Introduction to Database Manager for SQL Azure from PDC 2010’s Player app:

The database manager for SQL Azure (previously known as Microsoft® Project Code-Named “Houston”) is a lightweight and easy to use database management tool for SQL Azure databases. The web-based tool is designed specifically for Web developers and other technology professionals seeking a straightforward solution to quickly develop, deploy, and manage their data-driven applications in the cloud. In this session, we will demonstrate the database manager in depth and show how to access it from a web browser, how to create and edit database objects and schema, edit table data, author and execute queries, and much more.

View Introduction to Database Manager for SQL Azure by Eric Chu as a pre-recorded video from PDC 2010.

• Steve also pointed on 10/29/2010 to Liam Cavanagh’s (@liamca) PDC 2010 Video: Introduction to SQL Azure Data Sync:

In this session we will show you how SQL Azure Data Sync enables on-premises SQL Server data to be easily shared with SQL Azure, allowing you to extend your on-premises data to begin creating new cloud-based applications. Using SQL Azure Data Sync’s bi-directional data synchronization support, changes made either on SQL Server or SQL Azure are automatically synchronized back and forth.

Next we show you how SQL Azure Data Sync provides symmetry between SQL Azure databases to allow you to easily geo-distribute that data to one or more SQL Azure data centers around the world. Now, no matter where you make changes to your data, it will be seamlessly synchronized to all of your databases whether that be on-premises or in any of the SQL Azure data centers.

View Introduction to SQL Azure Data Sync by Liam Cavanagh video from PDC 2010.

To learn more about SQL Azure Data Sync and how to sign up for the upcoming CTP, visit: http://www.microsoft.com/en-us/sqlazure/datasync.aspx

• Cihangir Biyikoglu explained Building Scalable Database Solutions Using SQL Azure – Scale-out techniques such as Sharding or Horizontal Partitioning on 10/28/2010:

As I spend time at conferences and customer events, the top FAQ I get has to be this one: SQL Azure is great, but a single database is limited in size and computational capacity. How do I build applications that need larger computational capacity? The answer invariably is: you build it the same way most applications are built in the cloud! That is, by using scale-out techniques such as horizontal partitioning, commonly referred to as sharding.

Rewinding back to the top…

When building large scale database solutions, there are a number of approaches that can be employed.

Scaling-Up refers to building apps using a single, large, unified piece of hardware and typically a single database that can house all the data of an app. This approach works as long as you are able to find hardware that can handle the peak load and you are OK with a typically exponential incremental cost for a nonlinear increase in scalability. Scale-up class hardware typically has a high administration cost due to its complex configuration and management requirements.

Scaling-Out refers to building apps using multiple databases spread over multiple independent nodes. Typically, nodes are cost-effective, commodity-class hardware. There are multiple approaches to scale-out, but among the alternatives, patterns such as sharding and horizontal partitioning provide the best scalability. With these patterns, apps can decentralize their processing, spread their workload across many nodes and harness the collective computational capacity. You can achieve a linear cost-to-scalability ratio as you add more nodes.

How do you shard an app?

You will find many definitions and approaches when it comes to sharding, but here are the two principles I recommend for the best scalability characteristics.

1. Partition your workload into independent parts of data. Let’s call a single instance of this data partition an atomic unit. The atomic unit can be a tenant’s data in a multi-tenant app, a single customer in a SaaS app, a user in a web app, a store in a branch-enabled app, etc. The atomic unit should be the focus of the majority of your app workload. This boils down to ensuring that transactions are scoped to atomic units and the workload mostly filters to an instance of this atomic unit. In the case of a multi-tenant app, for example, the tenant_id, user_id, store_id, customer_id, etc. is present in most interactions of the app with the database, such as the following queries:

SELECT … FROM … WHERE … and customer_id=55 OR UPDATE … WHERE … and customer_id=55

2. Build elastic apps that understand that data is partitioned and that have robust dynamic routing to the partition that contains the atomic unit. This is about developing apps that discover at runtime where a given atomic unit is located and are not tied to a specific static distribution of data. This typically entails building apps that cache a directory of where data is at any given time.

Clearly, the two requirements above place constraints on developers, but they result in great scalability and price-performance characteristics in the end. Once you have built the app with these principles, app administrators can plan capacity flexibly based on the load they expect. At the inception of the app, maybe a few databases are enough to handle all the traffic to 100s of atomic units. As your workload grows (more tenants, more traffic per tenant, larger tenants, etc.), you can provision new databases and repartition your data. If your workload shrinks, you can again repartition your data and de-provision existing databases.
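As a rough illustration of the second principle, the following Python sketch shows an app-side directory cache that maps an atomic unit to the database that currently holds it. Everything in it is hypothetical: the `ShardDirectory` class, the database names and the lookup callable stand in for whatever directory store a real app would use.

```python
class ShardDirectory:
    """Caches atomic-unit -> database lookups (hypothetical app-side helper)."""

    def __init__(self, lookup):
        self._lookup = lookup   # authoritative directory lookup (any callable)
        self._cache = {}

    def database_for(self, atomic_unit_id):
        # Resolve the location at runtime and remember the answer.
        if atomic_unit_id not in self._cache:
            self._cache[atomic_unit_id] = self._lookup(atomic_unit_id)
        return self._cache[atomic_unit_id]

    def invalidate(self, atomic_unit_id):
        # Call this when a request shows the atomic unit has moved.
        self._cache.pop(atomic_unit_id, None)


# A plain dict plays the role of the authoritative directory in this sketch.
placement = {55: "sales_db_1", 900: "sales_db_2"}
directory = ShardDirectory(lambda unit: placement[unit])

print(directory.database_for(55))   # looked up once, then cached

# After repartitioning, customer 55 lives elsewhere; the app drops the
# stale entry and discovers the new location on the next request.
placement[55] = "sales_db_3"
directory.invalidate(55)
print(directory.database_for(55))
```

The cache is what makes the app elastic: no database name is hard-coded, so administrators can add or remove databases without a code change, at the price of handling stale entries.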

Why is SQL Azure the best platform for sharded apps?

1. SQL Azure will help reduce administrative complexity and cost. It boils down to no physical administration for the OS and SQL Server: no patching and no VMs to maintain, plus built-in HA with load balancing to best utilize the capacity of the cluster.

2. SQL Azure provides great elasticity with easy provisioning and de-provisioning of databases. You don’t need to buy hardware, wire it up and install Windows and SQL Server on top; you can simply run “CREATE DATABASE” and be done. Better yet, you can run “DROP DATABASE” and no longer incur a cost.

Given the benefits, many customers using the SQL Azure service build large-scale database solutions, such as multi-tenant cloud apps like the Exchange Hosted Archive service or Internet-scale apps like TicketDirect that require massive scale on the web.

This is the way life is today. In the next few weeks, with PDC and PASS 2010, we will be talking about features that will make SQL Azure an even better platform for sharded apps, enhancing the lives of both developers and DBAs. Stay tuned to PDC and PASS and to my blog. I’ll be posting details on the features here as we unveil the functionality at these conferences.

Mike Flasko of the WCF Data Services Team reported Data Services Client for Win Phone 7 Now Available! on 10/28/2010:

Today, at the PDC, we announced that a production-ready version of the WCF Data Services client for Windows Phone 7 is available for download from http://odata.codeplex.com. This means that it is now simple to create an app that connects your Windows Phone 7 to all the existing OData services as well as the new ones we’re announcing at this PDC.

The release includes a version of System.Data.Services.Client.dll that is supported on the phone (both the assembly and the source code) and a code generator tool to generate phone-friendly client-side proxies.

The library follows most of the same patterns you are already used to when programming with OData services on the desktop version of Silverlight. The key changes from Silverlight desktop to be aware of are:

- LINQ support in the client library has been removed as the core support is not yet available on the phone platform. That said, we are actively working to enable this in a future release. Given this, the way to formulate queries is via URIs.

- We’ve added a LoadAsync(Uri) method to the DataServiceCollection class to make it simple to query services via URI.

- So that you can easily support the phone application model, we’ve added a new standalone type, ‘DataServiceState’, which makes it simple to tombstone DataServiceContext and DataServiceCollection instances.
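Because queries on the phone are formulated as URIs rather than LINQ expressions, it may help to see how such a URI is put together. The Python sketch below only builds the string using standard OData system query options such as $filter and $top; it is not the phone client library, and the service root, entity set name and `odata_query_uri` helper are made up for illustration.

```python
from urllib.parse import quote


def odata_query_uri(service_root, entity_set, **options):
    # Each keyword becomes an OData system query option, e.g. filter -> $filter.
    # Values are percent-encoded; the "$" prefix of the option name is kept.
    parts = [f"${name}={quote(str(value), safe='')}"
             for name, value in options.items()]
    uri = f"{service_root.rstrip('/')}/{entity_set}"
    return uri + ("?" + "&".join(parts) if parts else "")


# Hypothetical service and entity set, used only to show the URI shape.
uri = odata_query_uri(
    "http://example.com/Service.svc",
    "Customers",
    filter="Country eq 'UK'",
    top=10,
)
print(uri)
```

A URI of this shape is the kind of value that would be passed to the LoadAsync(Uri) method mentioned above.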

Liam Cavanagh (@liamca) posted Announcing SQL Azure Data Sync CTP2 on 10/28/2010:

Earlier this week I mentioned that we will have one additional sync session at PDC that would open up after the keynote. Now that the keynote is complete, I am really excited to point you to this session “Introduction to SQL Azure Data Sync” and tell you a little more about what was announced today.

In the keynote today, Bob Muglia announced an update to SQL Azure Data Sync (called CTP2) to enable synchronization of entire databases or specific tables between on-premises SQL Server and SQL Azure, giving you greater flexibility in building solutions that span on-premises and the cloud.

As many of you know, using SQL Azure Data Sync CTP1, you can now synchronize SQL Azure databases across datacenters. This new capability will allow you not only to extend data from your on-premises SQL Servers to the cloud, but also to easily extend data to SQL Servers sitting in remote offices or retail stores. All with NO CODING required!

Later in the year, we will start on-boarding customers to this updated CTP2 service. If you are interested in getting access to SQL Azure Data Sync CTP2, please go here to register.

If you would like to learn more and see some demonstrations of how this will work and some of the new features we have added to SQL Azure Data Sync, please take a look at my PDC session recording. Here is the direct video link and abstract.

Video: Introduction to SQL Azure Data Sync (Liam Cavanagh) – 27 min

In this session we will show you how SQL Azure Data Sync enables on-premises SQL Server data to be easily shared with SQL Azure, allowing you to extend your on-premises data to begin creating new cloud-based applications. Using SQL Azure Data Sync’s bi-directional data synchronization support, changes made either on SQL Server or SQL Azure are automatically synchronized back and forth. Next we show you how SQL Azure Data Sync provides symmetry between SQL Azure databases to allow you to easily geo-distribute that data to one or more SQL Azure data centers around the world. Now, no matter where you make changes to your data, it will be seamlessly synchronized to all of your databases whether that be on-premises or in any of the SQL Azure data centers.


AppFabric: Access Control and Service Bus

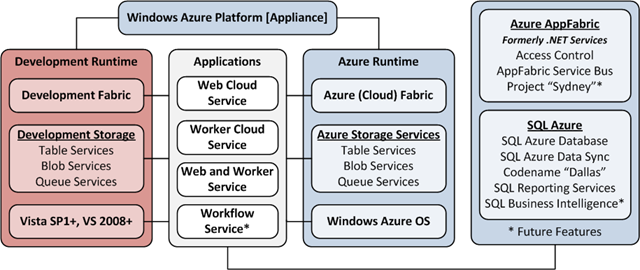

•• The Windows Azure App Fabric Team published a detailed Windows Azure App Fabric PDC 2010 Brief as a *.docx file that didn’t receive large-scale promotion. Here’s a reformatted HTML version:

Overview

Businesses of all sizes experience tremendous cost and complexity when extending and customizing their applications today. Given the constraints of the economy, IT must increasingly find ways to do more with less while also finding innovative new ways to keep up with the changing needs of the business. Developers are now starting to evaluate newer cloud-based platforms as a way to gain greater efficiency and agility; the promised benefits of cloud development are impressive, enabling greater focus on the business rather than on running infrastructure.

However, customers already have a very large base of existing heterogeneous and distributed business applications spanning different platforms, vendors and technologies. The use of cloud adds complexity to this environment, since the services and components used in cloud applications are inherently distributed across organizational boundaries. Understanding all of the components of your application – and managing them across the full application lifecycle – is tremendously challenging. Finally, building cloud applications often introduces new programming models, tools and runtimes making it difficult for customers to transition from their existing server-based applications.

To address these challenges, Microsoft has delivered Windows Azure AppFabric.

Windows Azure AppFabric: Comprehensive Cloud Middleware

Windows Azure AppFabric provides a comprehensive cloud middleware platform for developing, deploying and managing applications on the Windows Azure Platform. It delivers additional developer productivity, adding in higher-level Platform-as-a-Service (PaaS) capabilities on top of the familiar Windows Azure application model. It also enables bridging your existing applications to the cloud through secure connectivity across network and geographic boundaries, and by providing a consistent development model for both Windows Azure and Windows Server. Finally, it makes development more productive by providing a higher abstraction for building end-to-end applications, and simplifies management and maintenance of the application as it takes advantage of advances in the underlying hardware and software infrastructure.

There are three key components of Windows Azure AppFabric:

- Middleware Services: platform capabilities as services, which raise the level of abstraction and reduce complexity of cloud development.

- Composite Applications: a set of innovative new frameworks, tools and a composition engine to easily assemble, deploy, and manage a composite application as a single logical entity.

- Scale-out application infrastructure: optimized for cloud-scale services and mid-tier components.

Following is a high-level overview of these Windows Azure AppFabric components and features.

Middleware Services

Windows Azure AppFabric provides pre-built, higher level middleware services that raise the level of abstraction and reduce complexity of cloud development. These services are open and interoperable across languages (.NET, Java, Ruby, PHP…) and give developers a powerful pre-built “class library" for next-gen cloud applications. Developers can use each of the services stand-alone, or combine services to provide a composite solution.

- Service Bus (Commercially available now; updated CTP delivered October 2010) provides secure messaging and connectivity capabilities that enable building distributed and disconnected applications in the cloud, as well as hybrid applications across both on-premise and the cloud. It enables using various communication and messaging protocols and patterns, and removes the need for the developer to worry about delivery assurance, reliable messaging and scale.

- Access Control (Commercially available now; updated CTP delivered August 2010) enables an easy way to provide identity and access control to web applications and services, while integrating with standards-based identity providers, including enterprise directories such as Active Directory®, and web identities such as Windows Live ID, Google, Yahoo! and Facebook.

- Caching (New CTP service delivered October 2010; commercially available in H1 CY11) accelerates performance of Windows Azure and SQL Azure based apps by providing a distributed, in-memory application cache, provided entirely as a service (no installation or management of instances, dynamically increase/decrease cache size as needed). Pre-integration with ASP.NET enables easy acceleration of web applications without having to modify application code.

- Integration (New CTP service coming in CY11) will provide common BizTalk Server integration capabilities (e.g. pipeline, transforms, adapters) on Windows Azure, using out-of-box integration patterns to accelerate and simplify development. It will also deliver higher level business user enablement capabilities such as Business Activity Monitoring and Rules, as well as self-service trading partner community portal and provisioning of business-to-business pipelines.

- Composite App (New CTP service coming in H1 CY11) will provide a multi-tenant, managed service which consumes the .NET based Composition Model definition and automates the deployment and management of the end to end application - eliminating manual steps needed by both developers and ITPros today. It also executes application components to provide a high-performance runtime optimized for cloud-scale services and mid-tier components (automatically delivering scale out, availability, multi-tenancy and sandboxing of application components). Finally, it delivers a complete hosting environment for web services built using Windows Communication Foundation (including WCF Data Services and WCF RIA Services) and workflows built using Windows Workflow Foundation.

It’s a key characteristic of all AppFabric Middleware Services that they are consistently delivered as true multi-tenant services – you simply provision, configure, and use (no installation or management of machines/instances).

Composite Applications

As developers build next-generation applications in the cloud, they are increasingly assembling their application as a composite from many pre-built components and services (either developed in house or consumed from third-party cloud services). Also, given that many cloud applications need to access critical on-premises business applications and data, there is a need to be able to compose from on-premises services easily and securely. Finally, the highly distributed nature of these composite applications requires more sophisticated deployment and management capabilities for managing all of the distributed elements that span the web, middle-tier and database tier.

AppFabric Composition Model & Visual Designer

Microsoft is advancing its Windows Azure AppFabric cloud middleware platform to provide a full composite application environment for developing, deploying and managing composite applications. The AppFabric composition environment delivers three main benefits:

Composition Model: A set of .NET Framework extensions for composing applications on the Windows Azure platform. This builds on the familiar Azure Service Model concepts and adds new capabilities for describing and integrating the components of an application. It also provides a consistent composition model for both Windows Azure and Windows Server.

Visual Design Experience: A new Visual Studio based designer experience allows you to assemble code from your existing application components, along with newer cloud services, and tie them together as a single logical entity.

Managed as a service: The Composite Application service is a multi-tenant, managed service which consumes the Composition Model definition and automates the deployment and management of the end to end application - eliminating manual steps needed by both developers and ITPros today.

The composite application environment offers the following benefits:

- Greater developer productivity through rapid assembly, linking of components and automated deployment of the entire end-to-end application;

- Easier configuration and control of entire application and individual components;

- End-to-end application monitoring (events, state, health and performance SLAs);

- Easier troubleshooting (through richer diagnostics and debugging of the whole application);

- Performance optimization of the whole application (scale-out/in, fine-tuning, migration, etc);

- Integrated operational reporting (usage, metering, billing).

Scale-out Application Infrastructure

Both the AppFabric Services and your own composite applications built using the Composition Model are built upon an advanced, high-performance application infrastructure that has been optimized for cloud-scale services and mid-tier components. The AppFabric Container provides base-level infrastructure such as automatically ensuring scale out, availability, multi-tenancy and sandboxing of your application components. The main capabilities provided by the AppFabric Container are:

- Composition Runtime: This manages the full lifecycle of an application component including loading, unloading, starting, and stopping of components. It also supports configurations like auto-start and on-demand activation of components.

- Sandboxing and Multi-tenancy: This enables high-density and multi-tenancy of the hosted components. The container captures and propagates the tenant context to all the application and middleware components.

- State Management: This provides data and persistence management for application components hosted in the container.

- Scale-out and High Availability: The container provides scale-out by allowing application components to be cloned and automatically distributed; for stateful components, the container provides scale-out and high availability using partitioning and replication mechanisms. The AppFabric Container shares the partitioning and replication mechanisms of SQL Azure.

- Dynamic Address Resolution and Routing: In a fabric-based environment, components can be placed or reconfigured dynamically. The container automatically and efficiently routes requests to the target components and services.

Bridging On-Premises and Cloud

Finally, one of the important capabilities needed by businesses as they begin their journey to the cloud is being able to leverage existing on-premise LOB systems and to expose them selectively and securely into the cloud as web services. However, since most organizations are firewall protected, the on-premise LOB systems are typically not easily accessible to cloud applications running outside the organization’s firewall.

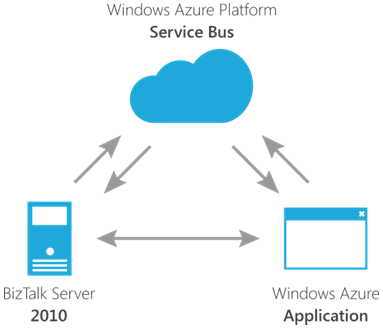

AppFabric Connect allows you to leverage your existing LOB integration investments in Windows Azure using the Windows Azure AppFabric Service Bus, and Windows Server AppFabric. This new set of simplified tooling extends BizTalk Server 2010 to help accelerate hybrid on/off premises composite application scenarios which we believe are critical for customers starting to develop hybrid applications.

(AppFabric Connect is available now as a free add-on for BizTalk Server 2010 to extend existing systems to both Windows Azure AppFabric and Windows Server AppFabric.)

•• Clemens Vasters announced the availability of his pre-recorded PDC10 session in a PDC10 Windows Azure AppFabric - Service Bus Futures post of 10/29/2010:

My PDC10 session is available online (it was pre-recorded). I talk about the new ‘Labs’ release that we released into the datacenter this week and about a range of future capabilities that we’re planning for Service Bus. Some of those future capabilities that are a bit further out are about bringing back some popular capabilities from back in the .NET Services incubation days (like Push and Service Orchestration), some are entirely new.

One important note about the new release at http://portal.appfabriclabs.com – for Service Bus, this is a focused release that provides mostly only new features and doesn’t provide the full capability scope of the production system and SDK. The goal here is to provide insight into an ongoing development process and opportunity for feedback as we’re continuing to evolve AppFabric. So don’t derive any implications from this release on what we’re going to do with the capabilities already in production.

•• Rajesh Makhija summarizes Windows Azure Identity and Access In the Cloud in this 10/29/2010 post:

Identity & Access in the Cloud

When building applications that leverage the Windows Azure Platform, you need to think about how to manage identity and access, especially in scenarios where the application needs to leverage on-premise resources as well as cloud services for enterprise and inter-enterprise collaboration. Three key technologies that can ease this task are:

- Windows Azure AppFabric Access Control Service

- Active Directory Federation Services 2.0

- Windows Identity Foundation

The Windows Azure AppFabric Access Control Service helps build federated authorization into your applications and services, without the complicated programming that is normally required to secure applications that extend beyond organizational boundaries. With its support for a simple declarative model of rules and claims, Access Control rules can easily and flexibly be configured to cover a variety of security needs and different identity-management infrastructures. It acts as a Security Token Service in the cloud.

Active Directory Federation Services 2.0 is a server role in Windows Server that provides simplified access and single sign-on for on-premises and cloud-based applications in the enterprise, across organizations, and on the Web. AD FS 2.0 helps IT streamline user access with native single sign-on across organizational boundaries and in the cloud, easily connect applications by utilizing industry standard protocols and provide consistent security to users with a single user access model externalized from applications.

Windows® Identity Foundation (WIF) is a framework for building identity-aware applications. The framework abstracts the WS-Trust and WS-Federation protocols and presents developers with APIs for building security token services and claims-aware applications. Applications can use WIF to process tokens issued from security token services and make identity-based decisions at the web application or web service.

Scenarios

All application scenarios that involve AppFabric Access Control consist of three service components:

- Service provider: The REST Web service.

- Service consumer: The client application that accesses the Web service.

- Token issuer: The AppFabric Access Control service itself.

For this release, AppFabric Access Control focuses on authorization for REST Web services and the AppFabric Service Bus. The following is a summary of AppFabric Access Control features:

- Cross-platform support. AppFabric Access Control can be accessed from applications that run on almost any operating system or platform that can perform HTTPS operations.

- Active Directory Federation Services (ADFS) version 2.0 integration. This includes the ability to parse and publish WS-Federation metadata.

- Lightweight authentication and authorization using symmetric keys and HMACSHA256 signatures.

- Configurable rules that enable mapping input claims to output claims.

- Web Resource Authorization Protocol (WRAP) and Simple Web Token (SWT) support.
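To show what “symmetric keys and HMACSHA256 signatures” means in practice, here is a minimal Python sketch that signs and verifies a form-encoded token whose last name/value pair is an HMACSHA256 signature, loosely following the Simple Web Token layout. It is an illustration only, not the Access Control service’s implementation; the claim names, the key and both function names are invented for the example.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote, unquote


def sign_token(claims, key):
    # Form-encode the claims, then append an HMAC-SHA256 signature computed
    # over the unsigned token with the shared symmetric key.
    unsigned = "&".join(
        f"{quote(name, safe='')}={quote(str(value), safe='')}"
        for name, value in claims.items()
    )
    digest = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"{unsigned}&HMACSHA256={quote(signature, safe='')}"


def verify_token(token, key):
    # Recompute the signature over everything before the HMACSHA256 pair.
    unsigned, separator, signature = token.rpartition("&HMACSHA256=")
    if not separator:
        return False
    expected = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    try:
        actual = base64.b64decode(unquote(signature))
    except ValueError:
        return False
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, actual)


shared_key = b"not-a-real-key"           # hypothetical symmetric key
token = sign_token({"Issuer": "example-issuer", "role": "reader"}, shared_key)
print(verify_token(token, shared_key))                                   # True
print(verify_token(token.replace("role=reader", "role=admin"), shared_key))  # False
```

Because the key is shared, the service provider can validate a token locally without calling back to the token issuer, which is what keeps this style of authorization lightweight.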

Acm.exe Tool

The Windows Azure AppFabric Access Control Management Tool (Acm.exe) is a command-line tool you can use to perform management operations (CREATE, UPDATE, GET, GET ALL, and DELETE) on the AppFabric Access Control entities (scopes, issuers, token policies, and rules).


Downloads & References

- Windows Azure AppFabric SDK September Release is now available here for download (both 32-bit and 64-bit versions).

- AppFabric LABS is an environment which the AppFabric team is using to showcase early bits and get feedback from the community. Usage for this environment will not be billed.

- Datasheet for Customers

• Tim Anderson (@timanderson) described AppFabric [as] Microsoft’s new middleware in this 10/29/2010 post:

I took the opportunity here at Microsoft PDC to find out what Microsoft means by AppFabric. Is it a product? a brand? a platform?

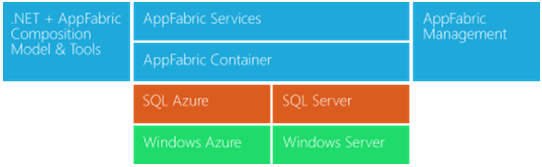

The explanation I was given is that AppFabric is Microsoft’s middleware brand. You will normally see the word in conjunction with something more specific, as in “AppFabric Caching” (once known as Project Velocity) or “AppFabric Composition Runtime” (once known as Project Dublin). The chart below was shown at a PDC AppFabric session:

Of course if you add in the Windows Azure prefix you get a typical Microsoft mouthful such as “Windows Azure AppFabric Access Control Service.”

Various AppFabric pieces run on Microsoft’s on-premise servers, though the emphasis here at PDC is on AppFabric as part of the Windows Azure cloud platform. On the AppFabric stand in the PDC exhibition room, I was told that AppFabric in Azure is now likely to get new features ahead of the on-premise versions. The interesting reflection is that cloud customers may be getting a stronger and more up-to-date platform than those on traditional on-premise servers. [Emphasis added.]

• Wade Wegner summarized New Services and Enhancements with the Windows Azure AppFabric on 10/28/2010:

Today’s an exciting day! During the keynote this morning at PDC10, Bob Muglia announced a wave of new building block services and capabilities for the Windows Azure AppFabric. The purpose of the Windows Azure AppFabric is to provide a comprehensive cloud platform for developing, deploying and managing applications, extending the way you build Windows Azure applications today.

At PDC09, we announced both Windows Azure AppFabric and Windows Server AppFabric, highlighting a commitment to deliver a set of complementary services both in the cloud and on-premises. While this has long been an aspiration, we haven’t yet delivered on it – until today!

Let me quickly enumerate some of the new building block services and capabilities:

- Caching (CTP at PDC) – an in-memory, distributed application cache to accelerate the performance of Windows Azure and SQL Azure-based applications. This Caching service is the complement to Windows Server AppFabric Caching, and provides a symmetric development experience across the cloud and on-premises.

- Service Bus Enhancements (CTP at PDC) – enhanced to add durable messaging support, load balancing for services, and an administration protocol. Note: this is not a replacement of the live, commercial Service Bus offering, but instead a set of enhancements provided in the AppFabric LABS portal.

- Integration (CTP in CY11) – common BizTalk Server integration capabilities (e.g. pipeline, transforms, adapters) as a service on Windows Azure.

- Composite Application (CTP in CY11) – a multi-tenant, managed service which consumes the .NET based Composition Model definition and automates the deployment and management of the end-to-end application.

As part of the end-to-end environment for composite applications, there are a number of supporting elements:

- AppFabric Composition Model and Tools (CTP in CY11) – a set of .NET Framework extensions for composing applications on the Windows Azure platform. The Composition Model provides a way to describe the relationship between the services and modules used by your application.

- AppFabric Container (CTP in CY11) – a multitenant, high density host optimized for services and mid-tier components. During the keynote, James Conard showcased a standard .NET WF4 workflow running in the container.

Want to get up to speed quickly? Take a look at these resources for Windows Azure AppFabric:

- Official website: www.microsoft.com/appfabric/azure

- Team Blog: http://blogs.msdn.com/b/windowsazureappfabric/

- MSDN website: http://msdn.microsoft.com/en-us/windowsazure/netservices.aspx

- LABS Portal: http://portal.appfabriclabs.com/

Watch these great sessions from PDC10 which cover various pieces of the Windows Azure AppFabric:

- Composing Applications with AppFabric Services

- Connecting Cloud & On-Premises Apps with the Windows Azure Platform

- Identity & Access Control in the Cloud

- Building High Performance Web Applications in the Windows Azure Platform

- Introduction to Windows Azure AppFabric Caching

- Microsoft BizTalk Server 2010 and Roadmap

- Service Bus Enhancements

Also, be sure to check out this interview [embedded in Wade’s article] with Karandeep Anand, Principal Group Program Manager with Application Platform Services, as he talks about the new Windows Azure AppFabric Caching service.

Early next week my team, the Windows Azure Platform Evangelism team, will release a new version of the Windows Azure Platform Training Kit – in this kit we’ll have updated hands-on-labs for the Caching service and the Service Bus enhancements. Be sure and take a look!

Of course, I’ll have more to share over the next few days and weeks.

The Windows Azure AppFabric team posted Introduction to Windows Azure AppFabric Caching CTP on 10/28/2010:

This blog post provides a quick introduction to Windows Azure AppFabric Caching. You might also want to watch this short video introduction.

As mentioned in the previous post announcing the Windows Azure AppFabric CTP October Release, we've just introduced Windows Azure AppFabric Caching, which provides a distributed, in-memory cache, implemented as a cloud service.

Earlier this year we delivered Windows Server AppFabric Caching, which is our distributed caching solution for on-premises applications. But what do you do if you want this capability in your cloud applications? You could set up a caching technology on instances in the cloud, but you would end up installing, configuring, and managing your cache server instances yourself. That really defeats one of the main goals of the cloud - to get away from managing all those details.

So as we looked for a caching solution in Windows Azure AppFabric, we wanted to deliver the same capabilities available in Windows Server AppFabric Caching, and in fact the same developer experience and APIs for Windows Azure applications, but in a way that provides the full benefit of cloud computing. The obvious solution was to deliver Caching as a service.

To start off, let's look at how you set up a cache. First you'll need to go to the Windows Azure AppFabric LABS environment developer portal (http://portal.appfabriclabs.com/ ) to set up a Project, and under that a Service Namespace. Then you simply click the "Cache" link to configure a cache for this namespace.

With no sweat on your part you now have a distributed cache set up for your application. We take care of all the work of configuring, deploying, and maintaining the instances.

The next screen gives you the Service URL for your cache and an Authentication token you can copy and paste into your application to grant it access to the cache.

So how do you use Caching in your application?

First, the caching service comes with out-of-the-box ASP.NET providers for both session state and page output caching. This makes it extremely easy to leverage these providers to quickly speed up your existing ASP.NET applications by simply updating your web.config files. We even give you the configuration elements in the developer portal (see above) that you can cut and paste into your web.config files.

You can also programmatically interact with the cache to store and retrieve data, using the same familiar API used in Windows Server AppFabric Caching. The typical pattern used is called cache-aside, which simply means you check first if the data you need is in the cache before going to the database. If it's in the cache, you use it, speeding up your application and alleviating load on the database. If the data is not in the cache, you retrieve it from the database and store it in the cache so it's available the next time the application needs it.
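The cache-aside pattern described above can be sketched in a few lines. This is an illustration only: `InMemoryCache` is a hypothetical stand-in for a distributed cache client, not the AppFabric Caching API, and `load_from_database` represents whatever data-access call the application already has.

```python
from typing import Any, Callable, Dict, Optional


class InMemoryCache:
    """Hypothetical stand-in for a distributed cache client."""

    def __init__(self) -> None:
        self._items: Dict[str, Any] = {}

    def get(self, key: str) -> Optional[Any]:
        # Returns None on a cache miss.
        return self._items.get(key)

    def put(self, key: str, value: Any) -> None:
        self._items[key] = value


def get_with_cache_aside(
    cache: InMemoryCache,
    key: str,
    load_from_database: Callable[[str], Any],
) -> Any:
    """Check the cache first; on a miss, load from the database and store the result."""
    value = cache.get(key)
    if value is not None:
        return value  # cache hit: the database is not touched
    value = load_from_database(key)  # cache miss: go to the database
    cache.put(key, value)  # store it so the next request is a hit
    return value
```

The first request for a key pays the cost of the database call, and later requests for the same key are served from the cache. A production version would also need an expiration or invalidation policy so that cached data does not go stale, which this sketch omits.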

The delivery of Caching as a service can be seen as our first installment on the promise of AppFabric - to provide a consistent infrastructure for building and running applications whether they are running on-premises or in the cloud. You can expect more cross-pollination between Windows Server AppFabric and Windows Azure AppFabric in the future.

We invite you to play with the Caching CTP and give us feedback on how it works for you and what features you would like to see added. One great way to give feedback is to fill out this survey on your distributed caching usage and needs.

As we move towards commercial launch, we'll look to add many of the features that make Windows Server AppFabric Caching extremely popular, such as High Availability, the ability to emit notifications to clients when they need to refresh their local cache, and more.

Windows Azure AppFabric team reported Windows Azure AppFabric SDK October Release available for download on 10/28/2010:

A new version of the Windows Azure AppFabric SDK V1.0 is available for download starting 10/27. The new version introduces a fix to an issue which causes the SDK installation to roll back on 64-bit Windows Server machines with BizTalk Server installed.

The fix is included in the updated SDK download. If you are not experiencing this issue, there is no need to download the new version of the SDK.

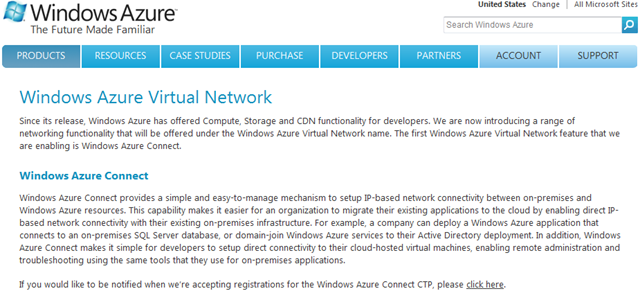

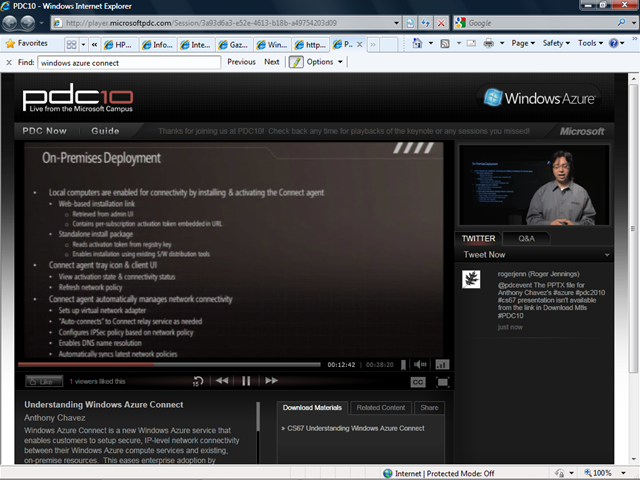

Windows Azure Virtual Network, Connect, and CDN

Daniel J. St. Louis explained PDC: Why Steve Jobs' Pixar uses Microsoft Windows Azure in this 10/29/2010 post:

Everyone knows Pixar, the studio that makes fantastic computer-graphics movies. Most people probably realize they couldn’t just make one of those films with their desktop PC. But few people may know that’s not necessarily because of the CG software, but mainly because of the way Pixar converts all that computer data into video.

(Steve Jobs is one of the three founding fathers of Pixar Animation Studios)

Through a process called rendering, a CG movie is converted from data to video frame by frame. There are 24 frames per second in film. With all the data that go into one frame of, say, "Up," it would take one computer more than 250 years to render a Pixar movie, said Chris Ford, a business director with the studio.

"Generally, if you’re a studio you’ll have a data center, known as a render farm, typically with 700 to 800 processors," he said today. Ford joined Bob Muglia, president of the Microsoft Business Division, on stage at the Professional Developers Conference in Redmond.

Why was he in Redmond? Because, in a proof of concept, Pixar has taken its industry-leading rendering technology, software called RenderMan, and put it in the cloud. The idea is that anybody can use RenderMan, via Microsoft’s Windows Azure cloud-computing platform, taking advantage of thousands of processors, connected via the Internet, to render CG video at relatively quick speeds.

All this data (each balloon, each car, each building, each light source, each texture) are compiled into CG files that must be rendered to create one frame of "Up." (Image courtesy of Pixar.)

With the tool, users could choose the speed at which their video is rendered, paying more for rush jobs and paying less if they have some time to wait. That’s possible because of the elasticity of cloud platforms such as Azure: they can automatically manage how many processors are working on projects at a time.

Ford said Pixar — which, by the way, was co-founded by Apple CEO Steve Jobs — likes Windows Azure also because it is dependable and is a choice that’s guaranteed to be around for a while. Cloud-based RenderMan, of course, represents a revenue source for Pixar not that typical for a movie studio.

Pixar’s RenderMan technology is widely used across the industry. Ford said just about every CG shot you’ve seen on the silver screen since the 1995 release of "Toy Story" was rendered using RenderMan.

Through Windows Azure, Pixar wants to make sure every CG shot you see on the small screen is as well.

Microsoft’s Bob Muglia, left, and Pixar’s Chris Ford talk to the audience at PDC in Redmond. (Image courtesy of Nick Eaton/seattlepi.com.)

•• Jim O’Neill posted a paean to interoperability in his Windows Azure for PHP and Java article of 10/29/2010:

Historically Microsoft’s Professional Developer Conference (PDC) is the pre-eminent developer conference for Microsoft technologies, and while that remains true, you may have noticed this year’s session titles included technologies like “PHP” and “Java” – typically with “Azure” in close proximity. Although there’s a lingering perception of Microsoft as ‘closed’ and ‘proprietary’, Windows Azure is actually the most open of any of the cloud Platform-as-a-Service vendors today, and recent announcements solidify that position.

You might have seen my post on the Azure Companion a few weeks ago, and today at PDC there were even more offerings announced providing additional support for Java and PHP in the cloud:

- The Windows Azure tools for Eclipse/Java, an open source project sponsored by Microsoft, and developed and released by partner Soyatec. We expect them to make a Community Technology Preview of the Windows Azure tools for Eclipse/Java available by December 2010.

- Release of version 2.0 of the Windows Azure SDK for Java, also from Soyatec.

- November 2010 CTP of the Windows Azure Tools for Eclipse/PHP as well the November 2010 CTP of the Windows Azure Companion.

- The launch of a new website dedicated to Windows Azure and PHP.

All of the sessions at PDC are available online, so if you’re looking for more details on using PHP or Java in the cloud, check out these sessions (and actually let me know as well!):