Windows Azure and Cloud Computing Posts for 7/26/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

Jai Haridas of the Windows Azure Storage Team explains How WCF Data Service Changes in OS 1.4 Affects Windows Azure Table Clients in this 7/26/2010 post:

The release of Guest OS 1.4 contains an update to .NET 3.5 SP1 which contains some bug fixes to WCF Data Services. We have received some feedback on backward compatibility issues in Windows Azure Tables with respect to the WCF Data Services update. The purpose of this post is to go over some of the breaking changes when moving from .NET 3.5/.NET 3.5 SP1 to the above mentioned update to .NET 3.5 SP1. In addition, we hope this also helps when you upgrade your application to use .NET 4.0 from .NET 3.5 SP1, since the same breaking changes are present in .NET 4.0 too.

Issue #1 - PartitionKey/RowKey ordering in Single Entity query

Before .NET 4.0 and the update to WCF Data Services in .NET 3.5SP1, no exception was thrown when trying to get a single entity which does not exist in the storage service. Take for example the following LINQ query, where the RowKey match is in the expression before the PartitionKey match:

var q = from entity in context.CreateQuery<MyEntity>("MyTable")
        where entity.RowKey == "Bar" && entity.PartitionKey == "Foo"
        select entity;

This would generate the following Uri:

http://myaccount.table.core.windows.net/MyTable?$filter=PartitionKey eq 'Foo' and RowKey eq 'Bar'

If the entity did not exist, the use of $filter to specify the filter did not result in an exception and an empty result set was returned before the update. The bug is that the above query should have resulted in the following Uri:

http://myaccount.table.core.windows.net/MyTable(PartitionKey='Foo',RowKey='Bar')

This Uri format always results in a DataServiceQueryException with error code “ResourceNotFound” when the entity does not exist.

In the update, when querying for a single entity if the RowKey is filtered before the PartitionKey in the query, it now results in the above Uri which addresses a single entity (i.e. $filter is not used). An exception is now raised if the entity is not present on the server irrespective of the order in which the keys are specified in the LINQ query.

Note, the following LINQ query:

var q = from entity in context.CreateQuery<MyEntity>("MyTable")
        where entity.PartitionKey == "Foo" && entity.RowKey == "Bar"
        select entity;

has always resulted in the following query Uri:

http://myaccount.table.core.windows.net/MyTable(PartitionKey='Foo',RowKey='Bar')

which always results in a DataServiceQueryException with error code “ResourceNotFound” when the entity does not exist. This has always been the case and has not changed.

Any dependency on the behavior that an empty set will be returned when an entity is not found will break your application because the new behavior is to raise an exception even when RowKey precedes PartitionKey in the LINQ query.

The WCF Data Services team suggests two ways to handle this breaking change:

- In the update to .NET 3.5 SP1 (available in the Guest OS 1.4 release) and in .NET 4.0, a new flag “IgnoreResourceNotFoundException” on the context is provided to control this. Use IgnoreResourceNotFoundException to ignore exceptions by specifying the following:

context.IgnoreResourceNotFoundException = true;

- Always catch exceptions and then ignore “Resource Not Found” exceptions if required by your application logic.
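The difference between the two request shapes can be sketched in a few lines of JavaScript. This is only an illustration under stated assumptions, not the WCF Data Services client: `keyLiteral`, `entityUri`, `filterUri` and `getOrNull` are hypothetical helper names, and the account and table names are the placeholders used in this post. The last helper mirrors the second suggestion: catch the error and treat “ResourceNotFound” as “no entity” only where the application wants that.

```javascript
// Hypothetical helpers for illustration; not part of any Azure library.
const BASE = "http://myaccount.table.core.windows.net";

// Builds a quoted key literal: single quotes are doubled, then the value is percent-encoded.
function keyLiteral(value) {
  return "'" + encodeURIComponent(value.replace(/'/g, "''")) + "'";
}

// Addresses exactly one entity. A missing entity produces an error response.
function entityUri(table, partitionKey, rowKey) {
  return BASE + "/" + table +
    "(PartitionKey=" + keyLiteral(partitionKey) +
    ",RowKey=" + keyLiteral(rowKey) + ")";
}

// Queries a set with $filter. A missing entity produces an empty result set.
function filterUri(table, partitionKey, rowKey) {
  const filter =
    "PartitionKey eq '" + partitionKey.replace(/'/g, "''") +
    "' and RowKey eq '" + rowKey.replace(/'/g, "''") + "'";
  return BASE + "/" + table + "?$filter=" + encodeURIComponent(filter);
}

// Runs a lookup and maps a "ResourceNotFound" error to null.
// Assumes the thrown error carries a `code` property (an assumption of this sketch).
function getOrNull(fetchEntity) {
  try {
    return fetchEntity();
  } catch (err) {
    if (err && err.code === "ResourceNotFound") {
      return null;
    }
    throw err; // anything else is a real failure
  }
}
```

Code that previously relied on an empty result set can be wrapped in something like `getOrNull` so that the rest of the application keeps seeing “no entity” instead of an exception.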

Issue #2 - Uri double escaping that impacts queries

Previous versions of the WCF Data Services library did not escape certain characters when forming the Uri. This allowed some entities to be inserted but not retrieved or deleted. A blog post covered the characters that had problems. The solution was to encode/escape them before using them. However, the updated .NET 3.5 SP1 (used in OS 1.4) and .NET 4.0 have fixed this issue by using the appropriate encoded/escaped value. This now breaks existing applications that had already escaped their values, because those values end up escaped twice.

The resolution is to review your application to see if values are being escaped and undo these changes to see if it works with the WCF Data Service release.
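A short JavaScript sketch shows what double escaping does to a key. It uses the standard `encodeURIComponent` function as a stand-in for the library's escaping, which is an assumption; `looksPreEscaped` is a hypothetical review aid and only a heuristic, since a legitimate key could contain a literal sequence such as `%41`.

```javascript
// A key that contains a character needing escaping.
const rawKey = "jai@com";

// Escaped once: what the updated library now does on its own.
const escapedOnce = encodeURIComponent(rawKey);

// Escaped twice: what happens if the application ALSO escapes the value first.
// The "%" from the first pass is itself escaped to "%25".
const escapedTwice = encodeURIComponent(escapedOnce);

// Decoding once no longer yields the original key.
const decodedOnce = decodeURIComponent(escapedTwice);

// Hypothetical helper: flags values that already contain a percent-escape,
// as a hint that application code may be escaping before calling the library.
function looksPreEscaped(value) {
  return /%[0-9A-Fa-f]{2}/.test(value);
}

console.log(escapedOnce, escapedTwice, decodedOnce, looksPreEscaped(escapedOnce));
```

Here the once-escaped value is `jai%40com`, the twice-escaped value is `jai%2540com`, and decoding the latter once gives back `jai%40com` rather than `jai@com`, so the request addresses a different key than the one stored.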

Issue #3 - Uri escaping that impacts AttachTo

The DataServiceContext tracks entities using its address when entities are either returned in the query result or when AddObject/AttachTo is invoked. The address is basically the Uri that contains PartitionKey and RowKey. For example, an entity with PartitionKey=foo and RowKey=bar is tracked using

http://myaccount.table.core.windows.net/MyTable(PartitionKey='foo',RowKey='bar')

With respect to the Uri escaping fix mentioned above, the previous version (pre .NET 3.5 SP1 update and pre .NET 4.0) has a mismatch in how it creates this address to track the entities when the address has a special character in it that needs to be escaped. The mismatch is between the address (appropriately escaped) that it uses for an entity it receives from the server and the address (un-escaped) that it uses when AddObject/AttachTo is invoked. This mismatch causes entities with the same key to be tracked twice.

For example, for an entity with PartitionKey = 'jai@com' and RowKey = '', the address used to search the list of tracked entities at the time of AttachTo and AddObject depends on the library version.

The .NET 3.5 SP1 update and .NET 4.0 use the same address that is used to track the entity:

http://myaccount.table.core.windows.net/Emails(PartitionKey='jai%40com',RowKey='')

Versions before .NET 4.0 and the .NET 3.5 SP1 update, however, use a different (un-escaped) address:

http://myaccount.table.core.windows.net/Emails(PartitionKey='jai@com',RowKey='')

So let us use an example to see exactly where the inconsistency is:

New Client Library (update to .NET 3.5 SP1 and .NET 4.0):

When an entity is returned from the server as the result of a query, the server returns an ID that is escaped:

http://myaccount.table.core.windows.net/Emails(PartitionKey='jai%40com',RowKey='')

and the WCF Data Service Client library tracks the entity using this id. Then assume an AddObject/AttachTo is invoked for an object with the same key, so the WCF Data Service Client library uses the escaped URI to try to add/attach the object:

http://myaccount.table.core.windows.net/Emails(PartitionKey='jai%40com',RowKey='')

This results in an InvalidOperationException being thrown with the message “Context is already tracking a different entity with the same resource Uri”. This is the behavior that the client library wants, since the object was already being tracked in the context, so the program should not be able to add/attach another object with the same key.

Old Client Library:

Now let us look at the example using the client library before the update. When an entity is returned from the server as the result of a query, the server returns the ID that is escaped:

http://myaccount.table.core.windows.net/Emails(PartitionKey='jai%40com',RowKey='')

and the WCF Data Service Client library tracks the entity using this id. Then when AddObject/AttachTo is invoked, the WCF Data Service Client library does not escape it and uses

http://myaccount.table.core.windows.net/Emails(PartitionKey='jai@com',RowKey='')

to track the newly added object, hence causing the inconsistency. Instead, the client library should have escaped the keys in order to know that it was already tracking an object of that name, which is what the update in the new client library now does.

For the old client library, this can lead to strange behavior since two instances that represent the single server entity will be tracked in a single context (one is tracked via a query result and the other is tracked via either AttachTo or AddObject):

- If both instances are unconditionally updated, the user may inadvertently lose some changes.

- Let us assume a scenario where a table is used like a lookup table. An application may choose to query all entities from this lookup table with the context tracking these entities. Here the context uses IDs that are appropriately escaped. Now an application may rely on “Context is already tracking…” exception when it adds a new entity. However, the bug can cause the context to track it using an un-escaped URI and the collision is not detected during AddObject and the context tracks two instances that represent the same key. When SaveChanges is invoked, the server fails because the entity already exists on the server and the server correctly returns “Conflict”. However, an application may not be expecting this behavior since it expected the conflict to be detected while “AddObject” was invoked rather than SaveChanges.

- If conditional update is used on both instances, only first update that is processed by the server will succeed and second will fail because of ETag check. The order in which the entities are added to the context (via query or AddObject/AttachTo) will determine the order of requests dispatched to the server.

However, if the address does not contain special characters, then AddObject and AttachTo would throw InvalidOperationException with message “Context is already tracking a different entity with the same resource Uri”, and everything would work fine in the old client library.

This bug has been fixed in the .NET 3.5 SP1 update and in .NET 4.0 where the context escapes the Uri even when AddObject/AttachTo is invoked hence recreating the same address (and hence correctly leading to an InvalidOperationException mentioned above).
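The tracking mismatch can be modeled with a map keyed by entity address. The JavaScript below is a simplified model under stated assumptions, not the actual DataServiceContext: `ContextSketch` and its methods are hypothetical names, and `encodeURIComponent` stands in for the library's escaping. The `escapeOnAttach` flag switches between the old behavior (un-escaped address on attach) and the updated behavior.

```javascript
// Simplified model of how a context tracks entities by address.
class ContextSketch {
  constructor(escapeOnAttach) {
    // true models the updated library, false models the old behavior.
    this.escapeOnAttach = escapeOnAttach;
    this.tracked = new Map(); // address -> entity instance
  }

  // Builds the tracking address, optionally escaping the key values.
  address(table, partitionKey, rowKey, escape) {
    const enc = escape ? encodeURIComponent : (s) => s;
    return table + "(PartitionKey='" + enc(partitionKey) +
      "',RowKey='" + enc(rowKey) + "')";
  }

  // Entities returned by a query are tracked under the escaped id from the server.
  trackFromQuery(table, entity) {
    const id = this.address(table, entity.PartitionKey, entity.RowKey, true);
    this.tracked.set(id, entity);
  }

  // Attaching throws only if the computed address is already tracked.
  attachTo(table, entity) {
    const id = this.address(
      table, entity.PartitionKey, entity.RowKey, this.escapeOnAttach);
    if (this.tracked.has(id)) {
      throw new Error(
        "Context is already tracking a different entity with the same resource Uri");
    }
    this.tracked.set(id, entity);
  }
}

// Old behavior: the duplicate goes unnoticed and two instances are tracked.
const oldContext = new ContextSketch(false);
oldContext.trackFromQuery("Emails", { PartitionKey: "jai@com", RowKey: "" });
oldContext.attachTo("Emails", { PartitionKey: "jai@com", RowKey: "" });
console.log(oldContext.tracked.size);
```

With `new ContextSketch(true)` the same `attachTo` call throws, because both code paths compute the same escaped address. For keys without special characters, both variants throw, which matches the description above.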

Let us go over this using a code example to show how the issue could occur:

TableServiceContext context = tableClient.GetDataServiceContext();

// For simplicity we have ignored the code that uses CloudTableQuery to
// handle continuation tokens.
var q = from entity in context.CreateQuery<MyEntity>("Emails")
        select entity;

// Let us assume entityInTable is an already existing entity in table retrieved
// using the above query and will now be tracked by the context.
var entityInTable = q.FirstOrDefault();

// Now let us create a new instance but with the same PartitionKey and RowKey
var someEntity = new MyEntity
{
    PartitionKey = entityInTable.PartitionKey,
    RowKey = entityInTable.RowKey
};

try
{
    // NOTE: Depending on WCF release and key values, AttachTo may throw
    // an InvalidOperationException with message:
    // "The context is already tracking a different entity with the same resource Uri."
    // CASE 1> Pre .NET 3.5SP1 update => Depending on the key value, an exception may be thrown.
    //         If the key contains a character that needs to be encoded,
    //         then an exception is NOT thrown, otherwise, an exception is
    //         always thrown.
    // CASE 2> .NET 3.5SP1 update and .NET 4.0 => An exception is always thrown
    //
    // Example of a value: If PartitionKey = 'foo@bar.com' and RowKey = '' then
    // the entity is tracked as:
    // http://myaccount.table.core.windows.net/MyTable(PartitionKey='foo%40bar.com',RowKey='')
    // However, when attaching a new object in CASE 1, the id is not escaped and hence
    // the duplicate entity is not tracked and an exception is not thrown,
    // leading to strange behavior if the application unconditionally updates both the instances
    context.AttachTo("Emails", someEntity, "*");
}
catch (InvalidOperationException e)
{
    // Check if message is "The context is already tracking a different entity with the
    // same resource Uri." and handle this case as required by your application
}

context.UpdateObject(someEntity);
context.SaveChanges();

The resolution is to upgrade the WCF Data Services library. However, after upgrading, you should ensure that your code handles exceptions. This is one of the recommended best practices.

One can also check for entity existence using a key equality check rather than instance equality before attaching/adding a new object instance. In the example below, the first LINQ query finds the tracked entity, but the second one does not, since it does an equality check on the reference, which is not the same. If we attach only when an entity is not found using the first LINQ query, we will never have duplicates. Also, remember that the WCF Data Services team recommends that a new instance of the context be used for every logical operation. Using a new context for every logical operation should reduce the chances of tracking duplicate entities.

Example:

// Create a new instance and let entityInTable represent an entity retrieved via a query
var someEntity = new MyEntity
{
    PartitionKey = entityInTable.PartitionKey,
    RowKey = entityInTable.RowKey
};

// This will find the tracked entity instance since we are looking for key equality. If
// trackedEntityKeySearch is not null, it means entity is tracked so do not invoke AddObject/AttachTo
var trackedEntityKeySearch = (from e in context.Entities
                              where ((TableServiceEntity)e.Entity).PartitionKey == someEntity.PartitionKey
                                 && ((TableServiceEntity)e.Entity).RowKey == someEntity.RowKey
                              select ((TableServiceEntity)e.Entity)).FirstOrDefault<TableServiceEntity>();

// NOTE: This will not find the tracked entity even if it is tracked since it is not the same
// object instance. So the above query is preferred to see if a particular entity is being tracked
var trackedEntityReferenceSearch = (from e in context.Entities
                                    where e.Entity == someEntity
                                    select ((TableServiceEntity)e.Entity)).FirstOrDefault<TableServiceEntity>();

We apologize for any inconvenience this has caused and hope this helps you make as smooth a transition as possible to the .NET 3.5 SP1 update or .NET 4.0. We would like to end by reiterating a couple of best practices that the WCF Data Service team recommends:

- Always handle exceptions in AddObject, UpdateObject, AttachTo and in Queries

- DataServiceContext is not thread safe. It is recommended to create a new context for every logical operation

We will have more on best practices in the near future.

Jai Haridas

SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry’s Getting Started With Project Houston: Part 1 post to the SQL Azure Team blog of 7/26/2010 covers the startup basics:

Microsoft Project Code-Named “Houston” (Houston) is a lightweight database management tool for SQL Azure. Houston can be used for basic database management tasks like authoring and executing queries, designing and editing a database schema, and editing table data. Currently, Houston is in its first community technology preview (CTP); the instructions and screen shots shown in this blog post are from the CTP version of Houston. In this blog post I am going to show how to get started using Houston.

Houston is a web-based Silverlight application, which means that you can access and use it from any web browser that supports Silverlight (for a list see this web page), anywhere you have an Internet connection. Houston isn’t software that you install; however, Silverlight is. You first need to have Silverlight installed to use Houston. If you don’t have it installed, you will be prompted to install it when you access Houston for the first time.

You can start using Houston by going to: https://manage.sqlazurelabs.com/. SQL Azure labs is the location for projects that are either in CTP or incubation form. The URL will probably change on release.

Logging In

Currently, for the CTP, you need to enter your SQL Azure server, database, administrative login and password when logging in.

Remember, Houston is a Silverlight application; it runs locally, client side, on your computer. It is not a web application, but it does communicate with web services hosted within Windows Azure.

The Start Page

Once you are logged in you are presented front and center with the start page. The start page consists of a rotating cube that displays information about the database you chose to access. One thing to note is that Houston only allows you to manage one database per browser window. If you want to manage more than one database, use the tabs on your browser to open more than one instance of Houston.

Click on the arrows to rotate through the spinning box, make sure to say “oh” and “ah” as it rotates.

On the help page, note the links to the Houston videos that will give you further help.

Navigation

At the top of the application is a toolbar that changes depending what is being displayed in the main tab pane.

The database toolbar appears like this:

The table toolbar changes to look like this:

You can jump back to the database toolbar at any time by clicking on the Database link at the top left of the application, regardless of whether you are done working within the current tab.

One thing to note is that Houston maintains the state of your current changes as you navigate between different tabs. If you modify a table, then open another one without saving your change, your change is not lost. The application indicates that the table/tab needs to be saved by displaying a pencil icon inside the tab.

If you try to exit the application you are warned that there are changes that need to be saved, so that you don’t lose your work.

The change tracking that Houston performs is a nice touch that allows you to work on several tables and their corresponding stored procedures at the same time. Because it feels like you are using an HTML application, it might not feel like change tracking would be in place; however, the functionality is very similar to SQL Server Management Studio.

Just a reminder, Houston is in CTP. Because of this, there will be various bugs in the product still, like the spelling error in the dialog above. I reported it already via Microsoft Connect and we would appreciate your bug reports and feedback on this product.

Feedback or Bugs?

This release of project Houston is not supported by standard Microsoft support services. For community-based support, post a question to the SQL Azure Labs MSDN forums. The product team will do its best to answer any questions posted there.

To provide feedback or log a bug about project Houston in this release, use the following steps:

- Navigate to https://connect.microsoft.com/SQLServer/Feedback

- You will be prompted to search our existing feedback to verify your issue has not already been submitted.

- Once you verify that your issue has not been submitted, scroll down the page and click on the orange Submit Feedback button in the left-hand navigation bar.

- On the bug form, select Version = Houston build CTP 1 - 10.50.9610.34.

- On the bug form, select Category = Tools (SSMS, Agent, Profiler, Migration, etc.).

- Complete your request.

If you have any questions about the feedback submission process or about accessing the portal, send us an email message: sqlconne@microsoft.com.

Summary

This is just the beginning of our Microsoft Project Code-Named “Houston” (Houston) blog posts. Make sure to subscribe to the RSS feed to be alerted as we post more information.

For examples of working with table, query, stored procedure and view objects, see my Test Drive Project “Houston” CTP1 with SQL Azure post of 7/23/2010.

Kellandved explains Deploying phpBB on Windows [and SQL] Azure in this 7/26/2010 post to the phpBB blog:

Windows Azure is the Microsoft cloud computing solution. One thing that might come as a surprise is that it is specifically intended to run php applications (well, it runs .net too, even java). Long story short, we got introduced to the azure platform during the JumpIn! Camp. The project to get phpBB running on the Azure platform started right there – now it’s showing results.

The pre[re]quisite for deploying on Azure is the new support for the native MSSQL driver, which was contributed by Microsoft.

However, a few issues had to be tackled to actually run phpBB in the cloud, namely:

- SQL Azure is not quite the same as SQL Server and requires a few tweaks

- Files (uploads) can’t be stored on the local file system, but have to be shared among all instances

- A few oddities of the Azure platform, especially regarding values reported by the webserver

To expand a bit on the issues: SQL Azure requires primary (“clustered”) keys on all tables, something the default phpBB schema does not deliver. On the flip side, the phpBB MSSQL schema includes partition clauses, which are not supported on Azure. Long story short: it needs a different schema. Files cannot be stored locally in a multi-server environment – for instance a cloud – but have to be shared between all instances. This required a few patches to the phpBB core. Finally, Azure includes a load balancer, which reports an incorrect – internal – port via the ‘SERVER_PORT’ variable. This had to be corrected.

All of these changes can be found in my azure branches of my fork at github: http://github.com/kellanved/phpbb3/tree/feature/azure_blob_storage and http://github.com/kellanved/phpbb3/tree/bug/9725

So, how to use these? The answer is:

- Download the source from the bug/9725 branch

- Create a SQL Azure database

- Add a firewall rule to allow your own machine to connect to the SQL Azure database

- Start the phpBB installation, using the bug/9725 branch on your local machine

- Use the credentials of the SQL Azure database during the install

- Add the line define('AZURE_INSTALL', true); to the config.php file

- Delete the install directory

- Create an Azure package with the phpBB installation you had locally

- Deploy the package on Azure

- Add a firewall rule to SQL Azure to allow your phpBB instance to connect with the database

Congrats, you’re running phpBB on Azure.

Jamesy (@bondigeek) claims OData – Multi-dimensional javascript arrays made easy in this 7/26/2010 post:

This might seem like an obvious thing to some but it struck me just how bloody easy it is to create multi-dimensional arrays in javascript when you’re using OData.

Pretty much you don’t need to do anything except make your OData call and it will take care of the rest.

As is always the way, this post was inspired by a project I was working on yesterday, where I was solving a problem: my Ajax calls were getting a tad too chatty and slowing down the performance of my context menus.

Read on for more…

Setting the scene

In my project I have a menu list of files and folders and each time you select one of the files the display is updated and the context menus re-configured depending on the state of the selected item.

This in turn was triggering a change on a dropdown menu that populated an unordered list of groups and categories.

Initially I was making the call to get a list of categories and groups. Each group itself has child records of type ImageOutput and each category has child records of type ImageOutput.

When a group was selected it would pop across to the server and get the list of ImageOutputs. This could happen many, many times but the Groups, Categories and ImageOutput lists will rarely, if ever change.

So caching this on the client side in a multidimensional array was an obvious choice.

OData to the rescue

So to solve this problem I could have manually created the array and made all the appropriate calls to the server to get each set of lists but that would involve multiple round trips to the server. Why not do it the easy way?

First off I have my helper function to make the Ajax calls:

function GetListData(url, async) {
  var list = "";
  $.ajax({
    type: "GET",
    url: url,
    async: async,
    contentType: "application/json; charset=utf-8",
    dataType: "json",
    success: function (msg) { list = msg.d; },
    error: function (xhr) { ShowError(xhr); }
  });
  return list;
}

The above code just wraps the call to my OData urls and returns the results as json.

Armed with the wrapper function I just need to make my calls to the OData urls that expose the data I am after. The first call to ImageOutputCategories just gets a simple list of categories.

The second call uses the $expand system query option which tells it to not only get the OutputGroups but also to return the associated ImageOutputs for each OutputGroup.

You can read more about the $expand system query option here.

if (categories == null) {
  categories = GetListData("/DataServices/AMPLibrary.svc/ImageOutputCategories", false);
}
if (groups == null) {
  groups = GetListData("/DataServices/AMPLibrary.svc/ImageOutputGroups?$expand=ImageOutputs", false);
}

This second call will return me a multi-dimensional array that I can store on the client in memory and access whenever I please, no trip to the server required.
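To make the shape of that cached structure concrete, here is a small JavaScript sketch with invented sample values. The property names follow this post, but `sampleGroups`, `expandedItems` and `outputsForGroup` are hypothetical, and the exact JSON shape is an assumption: depending on the service's response version, an expanded collection may arrive as a bare array or wrapped in a `results` property, so the helper accepts both.

```javascript
// Invented sample of what the cached array might hold after the $expand call.
const sampleGroups = [
  {
    Id: 1,
    ImageOutputGroupName: "Web",
    CropHeight: 300,
    CropWidth: 400,
    ImageOutputs: [
      { Id: 10, OutputName: "Thumbnail", ImageOutputCategoryId: 1 },
      { Id: 11, OutputName: "Banner", ImageOutputCategoryId: 2 }
    ]
  },
  {
    Id: 2,
    ImageOutputGroupName: "Print",
    CropHeight: 1200,
    CropWidth: 1600,
    ImageOutputs: { results: [{ Id: 20, OutputName: "Poster", ImageOutputCategoryId: 2 }] }
  }
];

// Normalizes an expanded collection to a plain array.
function expandedItems(value) {
  if (Array.isArray(value)) {
    return value;
  }
  return value && Array.isArray(value.results) ? value.results : [];
}

// Looks up the child records for a group from the in-memory cache,
// with no further request to the server.
function outputsForGroup(cachedGroups, groupId) {
  const group = cachedGroups.find(function (g) { return g.Id === groupId; });
  return group ? expandedItems(group.ImageOutputs) : [];
}

console.log(outputsForGroup(sampleGroups, 1).map(function (o) { return o.OutputName; }));
```

Each element of the outer array is a group, and each group carries its own inner array of outputs, which is what makes a single expanded request enough to drive both lists.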

Some sample code below illustrates how the multi-dimensional array is used:

Populate the Group List:

$("#output-group").children().remove();
for (var i = 0; i <= groups.length - 1; i++) {
  var option = "<option value='" + groups[i]["Id"] + "'>" + groups[i]["ImageOutputGroupName"] + "</option>";
  $("#output-group").append(option);
  if (groups[i]["Id"] == $("#output-group").val()) {
    cropHeight = groups[i].CropHeight;
    cropWidth = groups[i].CropWidth;
    GetImageGroupOutputs(groups[i]["ImageOutputs"]);
  }
}

Populate the OutputGroup list for the selected Group:

function GetImageGroupOutputs(imageOutputs) {
  $(".image-formats-row").children().remove();
  var checkAll = '<div><input id="check-all-formats" type="checkbox" /><label class="check-all" id="all-formats-label">Check all</label></div>';
  $(".image-formats-row").append(checkAll);
  for (var i = 0; i <= categories.length - 1; i++) {
    if (imageOutputs.length > 0) {
      var ul = "<ul>";
      for (var k = 0; k <= imageOutputs.length - 1; k++) {
        if (imageOutputs[k]["ImageOutputCategoryId"] == categories[i]["Id"]) {
          var title = "<span class='category-title'>" + categories[i]["CategoryName"] + "</span>";
          $(".image-formats-row").append(title);
          break;
        }
      }
      for (var k = 0; k <= imageOutputs.length - 1; k++) {
        if (imageOutputs[k]["ImageOutputCategoryId"] == categories[i]["Id"]) {
          var li = "<li><input id='OutputFormat_" + imageOutputs[k]["Id"] + "' type='checkbox' outputid='" + imageOutputs[k]["Id"] + "'>";
          li += "<label class='output-format-name'>" + imageOutputs[k]["OutputName"] + "</label></li>";
          ul += li;
        }
      }
      ul += "</ul>";
      $(".image-formats-row").append(ul);
    }
  }
}

LOVE IT, LOVE IT, LOVE IT.

Thanks OData Team.

BondiGeek

Kevin Kell describes Migrating a SQL Server Database to SQL Azure in his 7/25/2010 post to Learning Tree’s Cloud Computing blog:

As a follow on to my colleague’s recent excellent post I thought that this week I would present a practical, hands-on example of moving a real-world on-premise SQL Server database to SQL Azure.

There are at least three ways to migrate data into SQL Azure:

- SQL Script

- Bulkcopy (bcp)

- SQL Server Integration Services (SSIS)

Each has benefits and limitations. Don’t use the script option to move very large data volumes, for example.

Here we are going to take an approach based on kind of a combination of 1 and 3. We will script our database schema and then use SSIS to replicate the data to the cloud. Since there are some features in SQL Server 2008 that are not supported in SQL Azure we will have to do a little hand work to modify the code that is generated for us. We can minimize that hand work if we make some changes in the default options the wizard gives us before we generate the script.

Here is what I had to do:

In SQL Server Management Studio, right click the on-premise database

Choose Tasks | Generate Scripts

Change the default options so that

- ANSI Padding = False

- Convert UDDT to Base Types = True

- Set Extended Properties = False

- USE DATABASE = False

Delete all the stuff having to do with creating the database, etc. I have already created the database in the SQL Azure Developer Portal.

Delete the unsupported features. In my case these included:

- SET ANSI_NULLS ON

- SET ANSI_NULLS OFF

- ON [PRIMARY]

- NOT FOR REPLICATION

- PAD_INDEX = OFF, (

- WITH ( …

- TEXTIMAGE

- NONCLUSTERED

I moved some things around in the code so that tables were created before views, etc. Stuff like that just seemed to make sense to me. You also have to move the creation of things that other things depend on higher up in the code.

- I went through an iterative process of running the code, examining the errors, making changes, and running the code again. Yeah, it is a lot of code. It took me about 30 minutes to get it right. Your time may vary.
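Part of the clean-up in the steps above could be scripted. The JavaScript below is a rough sketch under stated assumptions, not a replacement for reviewing the generated code: `stripUnsupported` is a hypothetical helper, it covers only a subset of the items listed (whole-line SET ANSI_NULLS statements, ON [PRIMARY], TEXTIMAGE_ON [PRIMARY] and NOT FOR REPLICATION), and it works by plain text matching, so it could also alter string literals or comments that happen to contain the same text.

```javascript
// Removes a few clauses from a generated T-SQL script by text matching.
// Index options such as PAD_INDEX and WITH (...) are deliberately left for hand editing.
function stripUnsupported(script) {
  return script
    .split(/\r?\n/)
    // Drop lines that consist only of a SET ANSI_NULLS statement.
    .filter(function (line) {
      return !/^\s*SET\s+ANSI_NULLS\s+(ON|OFF)\s*;?\s*$/i.test(line);
    })
    .map(function (line) {
      return line
        // Remove the longer clause first so the shorter pattern cannot split it.
        .replace(/\s+TEXTIMAGE_ON\s+\[PRIMARY\]/gi, "")
        .replace(/\s+ON\s+\[PRIMARY\]/gi, "")
        .replace(/\s+NOT\s+FOR\s+REPLICATION/gi, "");
    })
    .join("\n");
}

// Small invented sample in the style of a generated script.
const generated = [
  "SET ANSI_NULLS ON",
  "GO",
  "CREATE TABLE [dbo].[T](",
  "  [Id] [int] IDENTITY(1,1) NOT FOR REPLICATION NOT NULL",
  ") ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]"
].join("\n");

console.log(stripUnsupported(generated));
```

Running the remaining script against SQL Azure and fixing whatever errors are left is still the iterative process described above; the sketch only removes some of the repetitive edits.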

Once the schema exists on SQL Azure it is straightforward to replicate the data with SSIS. The wizard pretty much takes care of everything!

Click here to view the screencast:

http://www.youtube.com/watch?v=SW3TcF4W1Ws

Okay, so perhaps it is a little more tedious than one would like. Somebody has to actually look at the code. That, I think, is part of the developer’s job. Yes, maybe it should be easier and maybe someday it will be but for now it is what it is. There is an interesting project on CodePlex that attempts to further automate this process. I recommend that you check it out.

If you get a chance, try this out yourself! Then consider attending Learning Tree’s Windows Azure Course!

I don’t know why Kevin would select the SQL Server Migration Wizard for the last choice. SQLMW uses BCP for bulk inserts and saves more than half the time of any other technique.


AppFabric: Access Control and Service Bus

Radiant Logic offers a downloadable Gartner Report: "The Emerging Architecture of Identity Management" white paper with this description:

Your current identity management infrastructure is built for a world that’s changing quickly—one based on pushing identity from the center, instead of pulling it from many disparate sources. But today’s centralized identity infrastructure can’t keep up with tomorrow’s increasingly federated demands, from user-centric identity, Identity-as-a-service, and the cloud. Luckily, Gartner has outlined a vision for tomorrow’s identity landscape.

Vice President and Research Director Bob Blakley’s groundbreaking new paper offers a roadmap for a new Identity Management infrastructure—one with virtualization at its core. Don’t miss this free resource!


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Richard Seroter makes a Cloud Provider Request: Notification of Exceeded Cost Threshold in this 7/26/2010 post:

I wonder if one of the things that keeps some developers from constantly playing with shiny cloud technologies is a nagging concern that they’ll accidentally ring up a life-altering usage bill. We’ve probably all heard horror stories of someone who accidentally left an Azure web application running for a long time or kept an Amazon AWS EC2 image online for a month and were shocked by the eventual charges. What do I want? I want a way to define a cost threshold for my cloud usage and have the provider email me as soon as I reach that value.

Ideally, I’d love a way to set up a complex condition based on various sub-services or types of charges. For instance, If bandwidth exceeds X, or Azure AppFabric exceeds Y, then send me an SMS message. But I’m easy, I’d be thrilled if Microsoft emailed me the minute I spent more than $20 on anything related to Azure. Can this be that hard? I would think that cloud providers are constantly accruing my usage (bandwidth, compute cycles, storage) and could use an event driven architecture to send off events for computation at regular intervals.
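A minimal Python sketch of the kind of rule Richard describes might look like the following. Everything in it is an assumption made for illustration: the meter names, the dollar limits, the `Threshold` type and the `notify` callback are hypothetical, and no provider billing API is implied.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Threshold:
    """A spending limit for one meter; the meter 'total' means the sum of all meters."""
    meter: str
    limit_usd: float


def exceeded(charges: Dict[str, float], thresholds: List[Threshold]) -> List[Threshold]:
    """Return the thresholds whose accrued charges are above their limit."""
    total = sum(charges.values())
    hits = []
    for t in thresholds:
        accrued = total if t.meter == "total" else charges.get(t.meter, 0.0)
        if accrued > t.limit_usd:
            hits.append(t)
    return hits


def check_and_notify(charges: Dict[str, float],
                     thresholds: List[Threshold],
                     notify: Callable[[str], None]) -> int:
    """Call notify once per exceeded threshold and return how many alerts were sent."""
    hits = exceeded(charges, thresholds)
    for t in hits:
        notify(f"{t.meter} charges exceeded ${t.limit_usd:.2f}")
    return len(hits)
```

A provider (or a customer-side script fed by usage data) could run `check_and_notify` each time usage is accrued, passing a `notify` function that sends an email or SMS.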

If I’m being greedy, I want this for ANY variable-usage bill in my life. If you got an email during the summer from your electric company that said “Hey Frosty, you might want to turn off the air conditioner since it’s ten days into the billing cycle and you’ve already rung up a bill equal to last month’s total”, wouldn’t you alter your behavior? Why are most providers stuck in a classic BI model (find out things whenever reports are run) vs. a more event-driven model? Surprise bills should be a thing of the past.

Are you familiar with any providers who let you set charge limits or proactively send notifications? Let’s make this happen, please.

Rob Blackwell’s AzureRunMe project Release 1 of 7/24/2010 on CodePlex lets you “[r]un your Java, Ruby, Python, Clojure or (insert language of your choice) project on Windows Azure Compute:”

Latest

I'm using AzureRunMe to host a Clojure + Compojure project.

Also now runs Tomcat - contact me if you want to know how, but it's much neater than the TomCat Solution Accelerator because your WAR files can just come from Blob Store!

I've used it to run Restlet and also Jetty (although without the NIO support).

Introduction

There are a number of code samples that show how to run Java, Ruby, Python, etc. on Windows Azure, but they all vary in approach and complexity. I thought there ought to be a simplified, standardised way.

I wanted something simple that took a self contained ZIP file, unpacked it and just executed a batch file, passing the HTTP port as an argument.

I wanted ZIP files to be stored in Blob store to allow them to be easily updated with all Configuration settings in the Azure Service Configuration.

Trace messages, debug, console out and exceptions are easiest to surface via the ServiceBus.

Prerequisites

(see http://msdn.microsoft.com/en-us/windowsazure/cc974146.aspx )

- The Windows Azure SDK & Tools for Visual Studio

- The Windows Azure AppFabric

Instructions

Organise your project so that it can all run from under one directory and has a batch file at the top level.

In my case, I have a directory called c:\foo. Under that I have copied the Java Runtime JRE. I have my JAR files in a subdirectory called test and a runme.bat above those that looks like this:

cd test

..\jre\bin\java -cp Test.jar;lib\* Test %1

I can bring up a console window using cmd and change directory in

c:\foo

Then I can try things out locally by typing

C:\foo> runme.bat 8080

The application runs and serves a web page on port 8080.

I package the jre directory as jre.zip and the test directory along with the runme.bat file together as dist.zip.

Having two ZIP files saves me time - I don't have to keep uploading the JRE each time I change my Java application.

My colleague has a ruby.zip file containing Ruby and Mongrel and his web application in rubyapp.zip in a similar way.
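The packaging step can also be scripted. The following Python sketch is only an illustration, not part of AzureRunMe: the helper name `zip_paths` is hypothetical, and the directory layout is assumed to match the c:\foo example above.

```python
import zipfile
from pathlib import Path
from typing import List


def zip_paths(archive: Path, root: Path, members: List[str]) -> List[str]:
    """Zip the named files or directories under root, keeping paths relative to root."""
    written = []
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        for name in members:
            top = root / name
            # A single file is added as-is; a directory is walked recursively.
            files = [top] if top.is_file() else sorted(p for p in top.rglob("*") if p.is_file())
            for f in files:
                arcname = f.relative_to(root).as_posix()
                zf.write(f, arcname)
                written.append(arcname)
    return written


# Example usage for the layout described above (paths are assumptions):
#   root = Path(r"c:\foo")
#   zip_paths(Path("jre.zip"), root, ["jre"])
#   zip_paths(Path("dist.zip"), root, ["test", "runme.bat"])
```

Keeping the runtime and the application in separate archives means only dist.zip has to be rebuilt and uploaded when the application changes.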

Upload the zip files to blob store. Create a container called "packages" and put them in there. The easiest way to do this is via Cerebrata Cloud Studio http://clumsyleaf.com/products/cloudxplorer.

Another alternative is to use the UploadBlob command line app distributed with this project.

The next step is to build and deploy Azure RunMe ..

- Load Azure RunMe in Visual Studio and build.

- Change the ServiceConfiguration.cscfg file.

- Update DataConnectionString with your Windows Azure Storage account details so that AzureRunme can get ZIP files from Blob store.

- Change the TraceConnectionString to your appFabric Service Bus credentials so that you can use the CloudTraceListener to trace your applications.

- By default, Packages is set to "packages\jre.zip;packages\dist.zip" which means download and extract jre.zip then download and extract dist.zip, before executing runme.bat

- Click on AzureRunMe and Publish your Azure package.

- Sign into the Windows Azure Developer Portal at http://windows.azure.com

- Create a New Hosted Service and upload the package and config to your Windows Azure account. You are nearly ready to go.

- Change the app.config file for TraceConsole to include your own service bus credentials.

- Run the TraceConsole locally on your desktop machine.

- Now run the Azure instance by clicking on Run in the Windows Azure Developer Portal.

Deployment might take some time (maybe 10 minutes or more), but after a while you should see trace information start spewing out in your console app. You should see that it's downloading your ZIP files and extracting the[m]. Finally it should run your runme.bat file.

If all goes well your app should now be running in the cloud!
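To make the startup sequence concrete, here is a rough Python sketch of the flow described above: read the Packages setting, extract each ZIP in order, then launch runme.bat with the HTTP port. It is not AzureRunMe's actual code (the project is a Visual Studio solution). The function names are hypothetical, a local directory stands in for Blob store, and the launcher is injectable so the logic can be exercised without running a batch file.

```python
import subprocess
import zipfile
from pathlib import Path
from typing import Callable, List, Sequence


def parse_packages(setting: str) -> List[str]:
    """Split a semicolon-separated Packages value into ordered entries."""
    return [p.strip() for p in setting.split(";") if p.strip()]


def bootstrap(setting: str, store: Path, workdir: Path, port: int,
              launch: Callable[[Sequence[str]], object] = subprocess.call) -> List[str]:
    """Extract each package in order, then launch runme.bat with the HTTP port."""
    for entry in parse_packages(setting):
        # The setting separates container and blob name with a backslash;
        # here that is mapped onto a local path under `store` (an assumption).
        local = store.joinpath(*entry.split("\\"))
        with zipfile.ZipFile(local) as zf:
            zf.extractall(workdir)
    command = [str(workdir / "runme.bat"), str(port)]
    launch(command)
    return command
```

Because the packages are extracted in the order listed, a later archive such as dist.zip can rely on files from an earlier one such as jre.zip already being in place.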

Future Ideas

- Look at changing the TraceListener to use Hybrid mode and reducing service bus costs

- Consider attaching to an X Drive for persistent storage (via config)

- Try to find a way of exposing an internal end point via the ServiceBus to allow Clojure users to hook up a SLIME / SWANK connection.

Credits

- This project uses Ionic Zip library, part of a CodePlex project at http://www.codeplex.com/DotNetZip which is distributed under the terms of the Microsoft Public License.

- TraceConsole and TraceListener are code samples from the Microsoft appFabric SDK (with minor modifications).

Rob Blackwell

July 2010

Joel Jeffery’s Microsoft Azure Cloud Services and Mobile Applications post of 7/22/2010 reviews Jason Zander’s presentation at UK Tech Days:

I just came across another great video from Jason Zander at the UK Tech Days event in Reading. This time it’s about mobile applications and the Azure platform.

I’ve been following the Microsoft cloud service offerings for a couple of years since the Microsoft Architect Insight Conference 2008. What started as SQL Server Data Services and the nascent BizTalk Services has now grown into an offering that in my opinion does not just compete with Amazon and Google cloud services, but far exceeds the capabilities of their model.

Here’s a screenshot of a really simple ASP.NET page that I’m going to run in the local Azure test harness. You need to make sure it’s running as Administrator, or you can’t launch the Azure Simulation Environment.

If I’ve done all that, I can hit F5 and get this:

Shortly followed by the web application as if it were running in the cloud:

In the video (http://www.youtube.com/watch?v=ava6yFMewN8), Jason builds a quick application connecting some .NET entity classes to a SQL Azure instance and shows how quickly a simple web service can be deployed to the cloud.

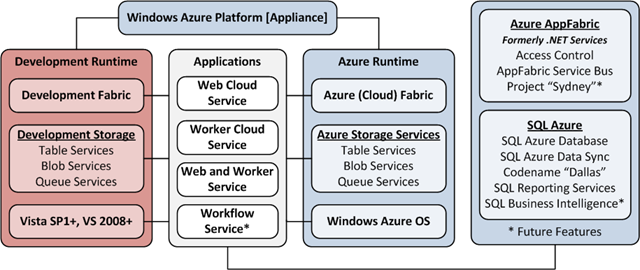

You also get to see the developer tools for Visual Studio 2010 and Azure, including local simulations of the App Fabric and Dev Fabric infrastructure of Azure. In other words, you can test your Azure apps by running them on your local machine without needing to actually deploy them.

Instead of deploying an ASP.NET Web Application like I did above, Jason shows you the newly released Visual Studio Express and he walks through the steps to build a Silverlight mobile application to consume the service he just deployed on Azure.

The key takeaway here is just how easy it is to build and consume cloud services and applications across a broad spectrum of platforms. Follow this link for more information about Microsoft Azure, or give us a call at JFDI Phoenix in the UK.

Joel is a Microsoft Certified SharePoint 2010 specialist and Microsoft Certified Trainer.

The Microsoft Case Studies Team posted Lockheed Martin Merges Cloud Agility with Premises Control to Meet Customer Needs:

Headquartered in Bethesda, Maryland, Lockheed Martin is a global security company that employs about 136,000 people worldwide and is principally engaged in the research, design, development, manufacture, integration, and sustainment of advanced technology systems, products, and services. The company wanted to help its customers obtain the benefits of cloud computing, while balancing security, privacy, and confidentiality concerns. The company used the Windows Azure platform to develop the Thundercloud™ design pattern, which integrates on-premises infrastructure with compute, storage, and application services in the cloud. Now, Lockheed Martin can provide its customers with vast computing power, enhanced business agility, and reduced costs of application infrastructure, while maintaining full control of their data and security processes.

Organization Profile

With 136,000 employees and 2009 sales of U.S.$45.2 billion, Lockheed Martin operates in four business areas: Aeronautics, Space Systems, Electronic Systems, and Information Systems and Global Solutions.

Business Situation

Lockheed Martin wanted to deliver the performance and flexibility of cloud computing to its customers, while enabling them to balance security, privacy and confidentiality concerns.

Solution

Lockheed Martin used the Windows Azure platform to develop the Thundercloud™ design pattern, which integrates on-premises infrastructures with compute, storage, and application services in the cloud.

Benefits

- Agility and speed

- Enhanced infrastructure at lower costs

- On-demand, usage-based model

- Ubiquitous access

<Return to section navigation list>

Windows Azure Infrastructure

Ed Sperling interviews Mark McDonald, group vice president and head of research at Gartner Executive Programs to determine “[w]hy some IT organizations are progressing while others seem to be stuck in neutral” in this 7/26/2010 post to Forbes.com’s One On One column:

What makes one CIO more successful than another is changing. In the past, deep knowledge of technology was critical. Now, a deep understanding of the business and how to move it forward has become the key metric.

That change is reflected not just in how much money the CIO's organization receives, but also who the CIO reports to. To find out what's driving these changes, Forbes caught up with Mark McDonald, group vice president and head of research at Gartner Executive Programs.

Forbes: What's changing across the CIO landscape?

Mark McDonald: The differences between rich and poor IT organizations are real and getting bigger.

How do you define rich and poor?

Richness is a level of IT performance as well as budget and standing in the enterprise. It's not just who has the most money. It's whether you're a strategic player and effective at what you do. These CIOs do tend to get more money, but they have a higher propensity to spend it more strategically. The richer IT organizations have been more proactive about cutting waste out of IT and they also do things faster. The poor organizations are hedging their bets across the whole organization.

Is size of the overall corporation a factor?

No, it's more an understanding of how to create value in the enterprise. The poor IT organizations believe they create value by properly managing IT resources. In other words, "I'm proving to you that I don't waste the company's money." The rich IT organizations view it as, "Look at what we can do and how promptly we can be responsive to the business."

Does cloud-based computing level the playing field here?

No, absolutely not. There are two reasons. One is that the cloud is a giant red herring for IT organizations that are not rich because all they're looking at is cost arbitrage. That only gets you into trouble. You think you're solving a problem but you're just getting another one. We've seen a lot of people aggressively move applications into the cloud. They're moving 50% to 60% of applications that are not mission-critical. The poor organizations see this as a way of solving their infrastructure problems. The rich organizations are making this move to create more room for more value to the enterprise.

Using your terminology of rich and poor, do the rich get richer by doing this?

The rich are definitely getting richer, as measured in terms of budget, standing in the enterprise, creating the company strategy, and CIOs not reporting to CFOs.

What percentage of CIOs are on the rich side?

About 22%.

How many are in the middle?

Almost 30%. And there is a solid 50% that are poor and getting poorer.

What happens to that 50%? Do they get replaced or do the companies not realize they have a problem in the first place?

The reality is they'll be relegated to administrative irrelevance. That's the unifying theme. If you define your IT organization as enabling the business, that's an indication you're headed in the poorer direction. When you have organizations that talk about how IT contributes to the business and makes it transformational or directional, that's an early indicator the attitude of the CIO is pointed toward the richer side.


Lori MacVittie (@lmacvittie) asserts “When strategies are formed it quickly becomes obvious that cloud computing is more about balance than anything else” as a preface to her The Battle of Economy of Scale versus Control and Flexibility post of 7/26/2010 to F5’s DevCentral blog:

At a time when you’d think cloud computing would be the primary “go to” strategy for managing scale and rapid growth, multiple well-known and demanding organizations are building their own data centers instead.

With all the hype around cloud being faster, cheaper, and more efficient these folks must be crazy, right?

Not at all. In fact, these moves illustrate the growing friction between the economy of scale offered by cloud computing and the control and flexibility that is part and parcel of owning one’s own data center.

In April Twitter announced plans to build a data center of its own. On Wednesday it provided additional details on the Twitter Engineering blog.

“Later this year, Twitter is moving our technical operations infrastructure into a new, custom-built data center in the Salt Lake City area,” wrote Twitter’s Jean-Paul Cozzatti, who said having dedicated data centers will provide more capacity to accommodate growth of 300,000 new users per day. “Keeping pace with these users and their Twitter activity presents some unique and complex engineering challenges. Importantly, having our own data center will give us the flexibility to more quickly make adjustments as our infrastructure needs change.”

-- Data Center Knowledge, “Twitter Picks Utah for New Data Center”

Twitter isn’t the only Web 2.0 savvy organization moving to its own data center. Facebook announced earlier this year that it, too, was investing in building out its own data center.

BUT CLOUD AUTO-SCALES and STUFF!

It’s not all about scalability. I know that sounds nearly heretical, but it’s not. And it’s not a new mantra, either. Scalability is certainly a factor in why one would choose cloud computing over a localized deployment, but also important are control and flexibility.

Another consideration is the ability to customize your data center infrastructure to provide more granular control of operations. “That control gives us a ton of flexibility, and we can build new things without having to wait for our partner,” said Heiliger [Jonathan Heiliger, Facebook’s VP of Technical Operations]

-- Data Center Knowledge, “Data Centers: For When The Cloud is Not Enough”

If I’ve said it once I’ve said it a thousand times: control is a huge factor in the decision making process and something that isn’t effectively offered by today’s public cloud computing offerings. Remember the InformationWeek Analytics cloud computing survey in 2009?

Even though security remains concern number one, control and configurability are on the top of the list, as well. The issue of control has almost always gone hand in hand with cloud adoption inhibitors, but it always takes a back seat to the more glamorous and scary “security” issue. These are not minor stumbling blocks in many cases: the ability to rapidly adapt an infrastructure to meet growth and scale, and to make architectural changes if necessary, is paramount to success. If cloud computing cannot provide the agility necessary to meet these challenges then it is logical to assume that organizations will either (a) stay in the local data center or (b) move to a local data center from the cloud when it becomes obvious the environment is inhibiting forward momentum.

Current adoption patterns indicate that this is not an anomaly, but will instead likely become the norm for organizations. Applications that are initially deployed “in the cloud” will, upon becoming a critical business application or growing beyond the meager means of control and flexibility offered by the cloud, migrate to the data center, where control and agility are provided by the simple fact that the organization can change at will any piece of the infrastructure – from its physical implementation to its logical organization. This is evident in the percentage of organizations using cloud for “dev and test” but not for production. Clearly the economy of scale and rapidity of deployment make the cloud a perfect environment for development and testing but not necessarily production.

ECONOMY of SCALE MAY be INHIBITING SCALE

The irony is that the economy of scale offered by cloud may well be biting cloud in the proverbial derrière as it becomes the inhibitor to effective scale by limiting or making extremely difficult the architectural changes necessary for an application to scale in a cloud environment.

At some point scalability can become not about the application but about its infrastructure and the way in which that infrastructure interacts. It can become about the network and its components and how applications end up interacting with and through that infrastructure. In a cloud computing environment it is rarely the case that a customer can impact that infrastructure and, when it can, it is then limited by other factors such as underlying virtualization technology and the physical server infrastructure on which the application is ultimately deployed. If the answer to a scalability obstacle is more bandwidth and higher throughput, you can’t really add another NIC to a server in the cloud. That’s not your call. But it is if you’re in the data center, and it is virtualization – not cloud - that ultimately provides the agility to make such a change and rapidly propagate that change across the application deployment.

It isn’t always about costs. Well, okay, it is about cost but in IT it’s about cost as it relates to performance, or flexibility, or other operational functionality required to successfully meet data center and business goals. When spending less on infrastructure results in higher operational costs, the organization really hasn’t saved money at all. Savvy CIOs and CTOs understand that it’s not a battle, but a balancing act. It’s not about achieving the highest economy of scale, but the best economy of scale given the specific operational and business needs.

Control is the obvious reason that Microsoft launched the Windows Azure Platform Appliance (WAPA) at WPC 2010.

RDA Corp. offers the following Key Reasons to Migrate to Azure whitepaper:

The Azure cloud computing platform offers tremendous advantages to organizations of all sizes. Here’s a list of key reasons to consider migrating, while you evaluate Azure and its benefits.

Focus on building apps, rather than being a data center

For small companies, especially startups, cash flow management is critical. Deploying a web application typically involves a significant hardware investment, as one considers redundancy, reliable Internet connections, load balancers, networking equipment, and any other infrastructure-related costs. Azure eliminates the need for such capital expenses and provides everything needed to deploy applications immediately, including application hosting, scaling, SQL hosting, and massive-scale storage.

Remove hardware and software requisition delays

For those organizations considering on-premise hosting, take into account the turnaround time typically associated with new hardware purchases. Between the specification, approval, ordering, delivery, configuration, and deployment activities, this could take a month or more. If any of these involve server software, such as Windows Server 2008 R2 or SQL Server 2008, there are licensing requisitions as well.

With Azure, there are no hardware specifications or licenses to deal with. Simply select the size of your virtual machine (VM), along with the number of instances, and your application is deployed to the Azure fabric in as little as 15 minutes. SQL Azure, the Azure-hosted version of SQL Server, is deployed in under a minute, with sizes up to 50GB.

Scale deployed applications without infrastructure expertise

Today’s applications have heavy demands placed on them during peak times. Sometimes traffic spikes are caused by advertisements, viral marketing, or other unexplained (and unexpected) reasons. Azure offers the ability to scale an application by simply specifying the number of VM instances an application is to be deployed to. The Azure platform takes care of everything else: load-balancing, multiple fault domains, health monitoring… Within minutes, your application’s capacity is increased to meet demand.

And when your traffic falls off, simply reduce the instance count, and Azure removes unneeded resources just as quickly.

Increase speed to market

Imagine having your Next Great Application idea. Now think of the lead-time for getting that idea up and running on a test platform. Then imagine the additional time to shift to a production environment. This is a typical challenge with on-premise or legacy hosting solutions. While the application is ready, there are servers, operating systems, load balancers, software patches, configuration tweaks, monitoring, and many other infrastructure details that must be ironed out.

With Azure, a completed application can be deployed in less than a day, including both a staging and production environment.

Reduce up-front capital expense by shifting to an operating expense model.

Systems with low-traffic periods really benefit from this model.

Hardware is a significant investment, especially when considering redundancy, load-balancing, and peak-capacity handling. This translates into a hefty up-front capital investment. Further, that investment might sit unused during low-traffic periods.

Azure provides an alternative, consumption-based model, with no capital expenses; only operating expenses. This results in a significant reduction in up-front investment. It also lets businesses focus on costs directly applicable to the application and related traffic. During down-time periods, Azure resources may be reduced or removed, resulting in additional cost savings.

Built-in disaster recovery

Azure distributes an application’s virtual machines across separate server racks, as well as separate locations within a data center. This provides a 99.95% uptime Service Level Agreement (SLA), as no two servers are in the same fault-domain.
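To put the 99.95% figure in perspective, an uptime percentage can be converted into the downtime it still permits. The short Python sketch below does that arithmetic. The function name and the choice of a 30-day month are assumptions made for illustration, not terms from Microsoft's SLA.

```python
def allowed_downtime_minutes(uptime_percent: float, period_hours: float) -> float:
    """Minutes of downtime that an uptime percentage permits over a period."""
    return period_hours * 60 * (1 - uptime_percent / 100)


# 99.95% uptime over a 30-day month (720 hours) leaves roughly 21.6 minutes.
monthly_budget = allowed_downtime_minutes(99.95, 30 * 24)
```

Over a 365-day year the same percentage corresponds to roughly 263 minutes, or a little under four and a half hours.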

Going further, Azure backs up its SQL Azure relational databases and Windows Azure highly-scalable storage in a minimum of three locations, to provide a durable storage guarantee.

If an application’s hardware ever fails, the Azure fabric re-deploys an application’s affected instance immediately to new hardware.

Systems are automatically updated with the latest operating system patches

Microsoft provides operating system patches, as well as security-specific patches, for their server operating system products. Azure provides transparent maintenance with automatic operating system updates (these updates have an opt-out option).

During update rollouts, an application running a minimum of two VM instances will be updated in groups. The application will never be taken offline, as updates are only performed to one group at a time.

Scale applications without any server licensing dependencies

As traffic increases, it’s critical to increase the number of running VM instances to provide adequate customer-facing performance. In a typical hosted environment, this means purchasing additional server licenses for both Windows Server 2008 and SQL Server 2008.

Azure, in its consumption-based model, has no additional charges for licensing. Simply select the number of running instances desired, and pay for those instances, starting at $0.12 per hour. Period.
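As a worked example of the consumption-based arithmetic, the Python sketch below multiplies the quoted starting rate by instance count and hours. The function name and the sample workloads are assumptions. The sketch covers compute hours only and ignores storage, bandwidth and larger VM sizes.

```python
from decimal import Decimal

# Starting per-instance rate quoted in the whitepaper.
HOURLY_RATE_USD = Decimal("0.12")


def compute_cost(instances: int, hours: int, rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Compute-hour charges for a number of instances running for a number of hours."""
    return rate * instances * hours


# Two instances running for a 30-day month (720 hours each).
baseline = compute_cost(2, 720)

# Two extra instances added for a six-hour traffic spike.
spike = compute_cost(2, 6)
```

With these assumptions the baseline comes to $172.80 for the month and the six-hour spike adds $1.44, which is the point of the model: extra capacity is paid for only while it is running.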

Simplify SLA

With Azure providing the entire infrastructure and platform stack, including Internet, power, hardware, load-balancing, operating systems, deployment, management, redundancy, and upgrades, Microsoft provides a straightforward SLA for uptime and data availability.

If you are interested in getting started with Windows Azure, click here to learn about RDA's two-day Architecture/Design Session (ADS) for organizations interested in moving to cloud computing.

<Return to section navigation list>

Windows Azure Platform Appliance

JBarnes recommends that you Get Connected With News & Analysis From The Microsoft WPC 2010 in this 7/26/2010 post to the Innovation Showcase blog:

Peter Laudati and Dmitry Lyalin host the edu-taining Connected Show developer podcast on cloud computing and interoperability. Check out Episode #33, “Dmitry’s Soapbox”. Guest host Andrew Brust is back again, joining Dmitry Lyalin and Peter Laudati to talk about all of the tech news from Microsoft's Worldwide Partner Conference in Washington, DC. The trio talks about the new WebMatrix toolset and how it applies to both ASP.NET & PHP Developers.

Also on tap, news and analysis on Internet Explorer 9 Preview 3, Windows Phone 7, and the newly announced Windows Azure Appliance. A raging Dmitry also shares his poppin’ passion for HTML5. [Emphasis added.]

CLICK HERE TO LISTEN!

If you like what you hear, check out previous episodes of the Connected Show at www.connectedshow.com. You can subscribe on iTunes or Zune. New episodes approximately every two weeks!

Scott Wilson asks “what exactly is wrong with using your existing Microsoft-based datacenter?” in his Door Number Two post of 7/25/2010 to the CIO Weblog:

First, a question of semantics: is it insulting to describe anything to do with cloud computing as "vaporware?" Clouds being vaporous conglomerations themselves, it seems like we might need a new term to describe promised, but undelivered, cloud-based services.

I'm not sure that's what is going on with Microsoft's Azure right now, but just going by historical yardsticks, you have to wonder. The oh-so-mysterious Windows Azure Platform Appliance is "not for the faint of heart" writes Carl Brooks, but it's still pretty unclear who it is for. Very large enterprises, says Microsoft, but other than billing the device as a "proven cloud platform" it's not getting much more specific.

It sounds like a return to the "private cloud" schtick, which is its own sort of vaporware, if I may still use the term. Put a bunch of these bad boys in your datacenter, and you'll have Azure, only private. But if you're going to put a bunch of boxes in a datacenter and want to run the same stuff you can run on Azure, then what exactly is wrong with using your existing Microsoft-based datacenter?

Microsoft still hasn’t decided on a WAPA logo.

<Return to section navigation list>

Cloud Security and Governance

Ewald Roodenrijs asserts “Cloud computing is an emerging phenomenon that offers enormous advantages” as a preface to his Mitigation of the Threats of the Cloud article of 7/26/2010:

Cloud computing is an emerging phenomenon that offers enormous advantages, such as shorter time to market, flexible computing capabilities and limitless power, but the cloud market, still in a very early stage, continues to grow and evolve.

As cloud computing evolves, it creates a global infrastructure for new possibilities used in software quality assurance and testing. Businesses can share public or hybrid clouds with each other or create private clouds to be shared within the whole company, instead of using separate options for different enterprise departments. However, the cloud also carries risks. These risks should be addressed to get the best results from implementing the cloud while avoiding its threats.

Infrastructure requirements should be correct

With an environment as flexible as the cloud, the requirements for that environment should be made clear. When the requirements are not set correctly, or do not match what is really wanted, the result can be the direct opposite of the goal. When this happens, a lot of negative noise will be generated about the possibilities of the cloud, which can result in a negative view of cloud computing itself. Cloud computing was at the top of the Gartner Hype Cycle of 2009, and a lot of negative points will show up in the media in the coming months; many of these will be the result of bad infrastructure requirements. Using a simple checklist can reduce this risk to a minimum.

Legacy systems can still be used

Almost all types of services and systems can be virtualized, even some legacy systems. But 5-10% of all systems cannot be virtualized, and most of these are legacy systems. These systems are often very important to the business; a company's core business often depends on old mainframes, for example. By using an interface to these legacy systems, they can still be incorporated in the cloud. For example, a VPN connection between the cloud and the client's own servers can connect the legacy systems and the cloud systems.

Standardization and Virtualization

One of the first steps clients can take is to create test environments in the cloud. This is a short-term opportunity for solution integrators and is generating action from companies like IBM, CloudOne and various telcos. The creation of standardized server and service models and the standardization of clients' infrastructure has much more impact in the long term. For this, cloud computing is a catalyst and a perfect excuse for IT modernization and for improving internal IT services maturity. It has an indirect impact on other infrastructure activities, like application consolidation and portfolio rationalization, thereby helping businesses figure out the cost of providing services internally and, subsequently, improving the efficiency and transparency of their IT operations.

Security

With all external forms of cloud computing, data is transferred, processed and stored in an external (public) cloud. However, data owners are very skeptical about placing their data outside their own sphere of control. When (test) data is stored in the cloud, this can lead to a compliance issue for most businesses. The data owners at these companies are responsible for the integrity and confidentiality of their data, even when the data is outside their direct control. Traditional solution integrators are forced to comply with external audits and obtain security certifications; so should cloud computing providers. The availability of data is also no longer within the data owners' own control, because another company is responsible for the uptime of the servers. One of the cloud principles is to guarantee a very high uptime, but this is still a threat they have to deal with. The most thorough security controls are needed to protect the most sensitive data. This may not be guaranteed in a public cloud, while it can be realized in a private cloud.

Transparency and traceability of (test) data

With cloud computing, (test) data can be located in systems in other countries, which may conflict with regulations prohibiting data from leaving a country or union. For example, the EU Data Protection Directive places restrictions on the export of personal data from the EU to countries whose data protection laws are not judged as "adequate" by EU standards (European Commission 1995a). If not properly attended to, European personal data may be located outside the EU without complying with the directive.

Data segregation

The shared, massive-scale characteristics of cloud computing make it likely that a client's data is stored alongside the data of other consumers. Encryption is often used to segregate data-at-rest, but it is not a cure-all. It's advised to do a thorough evaluation of the encryption systems used by cloud providers. A properly built but poorly managed encryption scheme may be just as devastating as no encryption at all, because although the confidentiality of data may be preserved, the data itself may become unavailable.

Cloud strategy

Cloud computing is currently hyped, but the cloud offers not only a lot of opportunities but also threats. All these threats should be taken into account when creating a cloud strategy for your business!

Ewald is a senior test manager and a member of the business development team within Sogeti Netherlands.

James Staten asks How Much Infrastructure Integration Should You Allow? in this 7/25/2010 post to his Forrester blog:

There's an old adage that the worst running car in the neighborhood belongs to the auto mechanic. Why? Because they like to tinker with it. We as IT pros love building and tinkering with things, too, and at one point we all built our own PC and it probably ran about as well as the mechanic's car down the street.

While the mechanic's car never ran that well, it wasn't a reflection on the quality of his work on your car because he drew the line between what he can tinker with and what can sink him as a professional (well, most of the time). IT pros do the same thing. We try not to tinker with computers that will affect our clients or risk the service level agreement we have with them. Yet there is a tinkerer's mentality in all of us. This mentality is evidenced in our data centers where the desire to configure our own infrastructure and build out our own best of breed solutions has resulted in an overly complex mishmash of technologies, products and management tools. There's lots of history behind this mess and lots of good intentions, but nearly everyone wants a cleaner way forward.

In the vendors' minds, this way forward is clearly one that has more of their stuff inside and the latest thinking here is the new converged infrastructure solutions they are marketing, such as HP's BladeSystem Matrix and IBM's CloudBurst. Each of these products is the vendor's vision of a cleaner, more integrated and more efficient data center. And there's a lot of truth to this in what they have engineered. The big question is whether you should buy into this vision.

<Return to section navigation list>

Cloud Computing Events

CloudTweaks reported Skytap and Customer, Nuance Communications, to Speak on Cloud Computing at Burton Group (Gartner) Catalyst Conference based on a 7/26/2010 press release:

SEATTLE, WA–(Marketwire – July 26, 2010) – Skytap, Inc., the leading provider of self-service cloud automation solutions, today announced that Deanne Harper, senior manager of Speech University at Nuance Communications, and Sundar Raghavan, chief product and marketing officer at Skytap, will present at the Burton Group Catalyst Conference on July 28, 2010 in San Diego, CA.

At the event, Harper and Raghavan will provide real world insight into how the cloud can be used to accelerate business productivity, and share practical tips for companies to move to the cloud successfully. Sessions featuring Skytap and its customer, Nuance Communications, include:

Hands-on Training from the Cloud: Speech University’s Global Solution

Wednesday, July 28 at 4:05 PM

Speaker: Deanne Harper (Nuance Communications, Inc.)

Description: In this session, Deanne will describe the cloud-based training solution adopted by Nuance Speech University (NSU) in 2008. She will discuss the challenges that led NSU to consider the cloud as a training solution and the company’s requirements for a successful solution. Deanne will review her vendor comparison and identify factors that led to the solution adopted by Nuance.

Cloud Economics and Licensing

Wednesday, July 28 at 3:10 PM

Panel Participants: Drue Reeves (Gartner), Sundar Raghavan (Skytap), Mario Olivarez (GoGrid), and Nathan Day (SoftLayer Technologies, Inc.)

Description: The roundtable will discuss the economics around the cloud and the myriad of licensing issues that also accompany a move to the cloud.

Vendor Lightning Round

Wednesday, July 28 at 5:05 PM

Speakers: Sundar Raghavan (Skytap), Matt Tavis (Amazon.com), Nathan Day (SoftLayer Technologies, Inc.), and Jeff Samuels (GoGrid)

Description: In this session, cloud vendors present their vision and product in five minutes. The audience will vote to select a winner and the winner will receive 10 additional minutes to present.

Catalyst is a five-day, industry-shaping conference exploring cutting-edge ideas, current challenges and emerging technologies shaping today’s and tomorrow’s enterprise. Catalyst Conference is renowned for its attendee-driven agenda, high-profile speakers, in-depth content and fiercely independent point of view.

The Burton Catalyst Conference will include 1.5 days of cloud-computing sessions on 7/27 and 7/29/2010 in San Diego, CA:

Session tracks include:

- Networks in Motion

- Life After SOA: Next Generation Application Architecture

- Collaborate or Perish: The Business Value of Relationships

- The New Identity Architecture: Getting There from Here

- Security in Context: New Models for New Business

- Virtualization: Transforming IT Infrastructure

- Leveraging Information to Gain Insight

- Enterprise Ready Clouds: Realistic Strategies

Chris Hoff (@Beaker) asked See You At Black Hat 2010 & Defcon 18? in this 7/25/2010 post:

This year looks to be another swell get-together in Vegas. I had to miss last year (first time in…forever) so I’m looking forward to 112 degrees, recirculated air, and stumble-drunk hax0rs jackpotting ATMs and commandeering elevators.

I’ll be getting in on the 27th. I have a keynote at the Cloud Security Alliance Summit on the 28th (co-located within Black Hat,) a talk on the 29th at Black Hat (Cloudinomicon) from 10am-11am and I’ll be on another FAIL panel at Defcon with the boys. I’ve got a bunch of (gasp!) customer meetings and (gasp! x2) work stuff to do, but plenty of time for the usual.

I’m going to try to hit Cobra Kai, Xtreme Couture or the Tapout facilities whilst there for some no-gi grappling or even BJJ if I can find a class. Either way, there are some hard core P90X’ers that I’m sure I can con into working out in 90 degree, 6am weather.

Rumors of mojitos and cigars at Casa Fuente are completely unfounded. Completely.

Oh, parties? They have parties?

See y’all there!

/Hoff

Check out @Beaker’s Reflections on SANS ’99 New Orleans: Where It All Started reminiscences of the same date.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Handy’s CouchDB brings peer-based data replication article of 7/26/2010 for SDTimes explains CouchDB 1.0’s replication techniques:

Mobile devices and the move to the cloud have combined to form problems for which traditional SQL databases are unsuited. This is why the NoSQL movement has sprouted dozens of newly crafted databases for every imaginable problem.

For the Apache Foundation's CouchDB, replication and stability were the first priorities. On July 14, four years of work culminated in the release of Apache CouchDB 1.0, bringing with it the security features needed for enterprise applications.

Damien Katz created the CouchDB project after leaving the Lotus Notes team. He used his Notes knowledge to build a document database that could perform peer-based replication, along with a ground-up approach to tolerating node failure.

Version 1.0 puts the finishing touches on CouchDB's underpinnings, said Katz. Key to the purpose of the database is replication on a reliable and grand scale. Any node of a CouchDB cluster can be written to, and those changes will automatically trickle out to the other nodes, even if some of those other nodes are turned off at the time. And it doesn't matter how those nodes were turned off; Katz said that CouchDB is built to crash.

That's because the only way to turn off a CouchDB instance is with the Unix “kill” command. This is actually what the code does itself when a CouchDB instance is told to turn off. Katz claimed that, because CouchDB is designed to suddenly stop running, it can never corrupt the data it stores. It may sound unorthodox, but using a crash as the standard termination means there may be no way to surprise CouchDB.
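The article doesn't describe how CouchDB achieves this, but one common way to make an abrupt stop safe is an append-only file in which a record counts only once it has been written completely. The following is a minimal sketch of that general idea, not CouchDB's actual storage code; the record layout (length plus CRC32 header) and the function names are assumptions made for illustration.

```python
import struct
import zlib

# Hypothetical record layout: 4-byte payload length, 4-byte CRC32, then payload.
HEADER = struct.Struct(">II")


def append_record(log: bytearray, payload: bytes) -> None:
    """Append one record; existing bytes are never modified in place."""
    log.extend(HEADER.pack(len(payload), zlib.crc32(payload)))
    log.extend(payload)


def recover(log: bytes) -> list:
    """Return every complete record, ignoring a torn record at the tail."""
    records, pos = [], 0
    while pos + HEADER.size <= len(log):
        length, crc = HEADER.unpack_from(log, pos)
        start = pos + HEADER.size
        payload = bytes(log[start:start + length])
        if len(payload) < length or zlib.crc32(payload) != crc:
            break  # incomplete or damaged tail: stop, keep earlier records
        records.append(payload)
        pos = start + length
    return records


log = bytearray()
append_record(log, b'{"_id": "a", "n": 1}')
append_record(log, b'{"_id": "b", "n": 2}')

# Simulate a kill in the middle of writing the second record.
crashed = bytes(log[:-5])
recovered = recover(crashed)
print(recovered)
```

Because earlier records are never overwritten, a stop at any point loses at most the record that was being written when the process died.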

“The replication stuff is the killer feature of CouchDB,” said Katz. “A lot of databases have some sort of replication capability, almost always master/slave, so reads can be spread across servers to reduce the read load. CouchDB uses peer-based replication, so any update can happen on any node and automatically replicate out. We have the ability to take a database offline so it's not connected to its other replicas, individually query it, then push that back to the replicas. It's fairly unique in the database world."
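As a rough illustration of what Katz describes, CouchDB exposes replication through its HTTP API: a POST to `/_replicate` with a JSON body naming a `source` and a `target`. The sketch below only builds the two requests for a push-then-pull sync between peers and doesn't send them; the server address, the database name `notes` and the peer URL are hypothetical.

```python
import json
import urllib.request


def build_replication_request(server, source, target):
    """Build (but do not send) a POST to the /_replicate endpoint."""
    body = json.dumps({"source": source, "target": target}).encode("utf-8")
    return urllib.request.Request(
        url=server.rstrip("/") + "/_replicate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_peer_sync(server, local_db, remote_db_url):
    """Peer-based sync: push local changes out, then pull remote changes in."""
    return [
        build_replication_request(server, local_db, remote_db_url),
        build_replication_request(server, remote_db_url, local_db),
    ]


# Hypothetical endpoints, for illustration only.
sync_requests = build_peer_sync(
    "http://localhost:5984",
    "notes",
    "http://peer.example.com:5984/notes",
)
for req in sync_requests:
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

Passing each request to `urllib.request.urlopen` would submit it to a running server. A node that was offline can run the same pair of requests once it reconnects to catch up with its replicas.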

Alex reports “Katz has since founded Couchio, a company based in downtown Oakland and tasked with the creation of office productivity software on top of CouchDB.” [Emphasis and URL added.] You can learn more and sign up for a free cloud instance or download CouchDB at the Couchio web site:

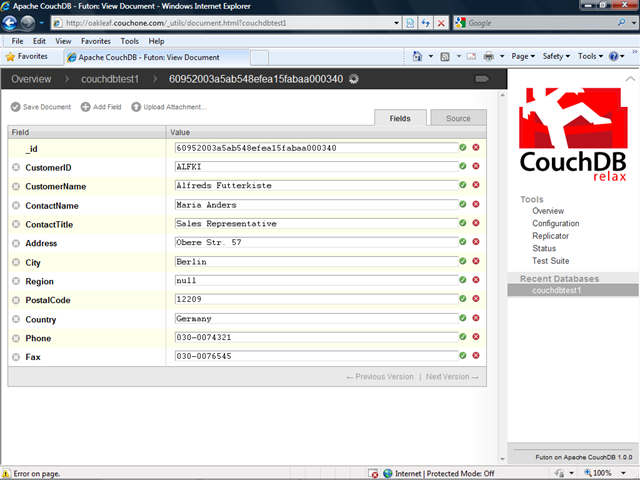

Here’s an example of entering data into a new database and document using the Futon management tool:
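The same two steps can also be done without Futon, through CouchDB's HTTP API: a PUT to `/{db}` creates a database, and a PUT to `/{db}/{docid}` with a JSON body creates a document. This is a minimal sketch that builds the requests without sending them; the database name `contacts`, the document ID `alice` and the document fields are hypothetical.

```python
import json
import urllib.request


def put_request(url, body=None):
    """Build a PUT request, with an optional JSON body."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    headers = {} if body is None else {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=data, headers=headers, method="PUT")


def build_create_steps(server, db_name, doc_id, doc):
    """Return the requests that create a database and then one document."""
    base = server.rstrip("/")
    return [
        put_request(f"{base}/{db_name}"),                 # create the database
        put_request(f"{base}/{db_name}/{doc_id}", doc),   # create the document
    ]


# Hypothetical names, for illustration only.
steps = build_create_steps(
    "http://localhost:5984",
    "contacts",
    "alice",
    {"name": "Alice", "city": "Oakland"},
)
for req in steps:
    print(req.get_method(), req.full_url)
```

Each request could be sent to a running server with `urllib.request.urlopen`.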

Matthew Weinberger passed on to the MSPMentor blog on 7/26/2010 the LATimes report that Google Apps Misses Los Angeles Migration Deadline:

This is a case where the headline says it all. A Google Apps rollout at the City of Los Angeles has missed its migration deadline, the Los Angeles Times reports. The roadblock? The Los Angeles Police Department, which is refusing to move off the old system to Google’s SaaS-based approach. Here’s why.

Let’s take a step back. When Google and Los Angeles finalized the high-profile deal back in December 2009, both sides said the deal aimed at helping the municipal government save on infrastructure costs. But MSPmentor noted that privacy concerns could hold the project back.

That turned out to be prophetic. May 2010 brought rumors that the LAPD had security and compliance concerns that were holding up the project. Google itself dispelled those rumors for us the next day, and migration appeared to be proceeding apace.

Fast forward to July 2010: The LA Times story says Google missed the June 30th deadline, leaving almost 20,000 city workers on an aging Novell legacy platform and forcing the city to pay licenses for both systems — at a cost that could reach as much as $400,000 over the next year. The cause? The Los Angeles Police Department and their dissatisfaction with the SaaS platform’s security.

“Google executive Jocelyn Ding said the company was committed to fulfilling its contract but admitted that it had missed ‘some details’ in the original requirements,” writes the LA Times.

Naturally, no one’s happy: the City of Los Angeles is left scratching its head as to how their great bargain didn’t encompass LAPD requirements, and Google’s committed themselves to picking up the tab on the legacy system until at least November, according to the report.

The City of Los Angeles engagement was supposed to be Google’s poster child for SaaS email replacing more traditional on-premises email systems from Microsoft, IBM Lotus and Novell. Google seems serious about the enterprise space, but we’re curious to see how other potential Google Apps customers react to the City of Los Angeles situation.

Google doesn’t appear to have commented on the matter publicly beyond the above quote, and the official Los Angeles migration project blog has been silent since June 23rd, 2010. Needless to say, MSPmentor is keeping its ears open for further developments.


It’s ironic that ebizQ reported on the same day that Google Announces Google Apps for Government and claims to have received FISMA certification for them:

This was posted today on the Google blog: Today we’re excited to announce a new edition of Google Apps. Designed with guidance from customers like the federal government, the City of Los Angeles and the City of Orlando, Google Apps for Government includes the same great Google applications that people know and love, with specific measures to address the policy and security needs of the public sector.