Windows Azure and Cloud Computing Posts for 3/22/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the January 4, 2010 commercial release in February 2010.

Azure Blob, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

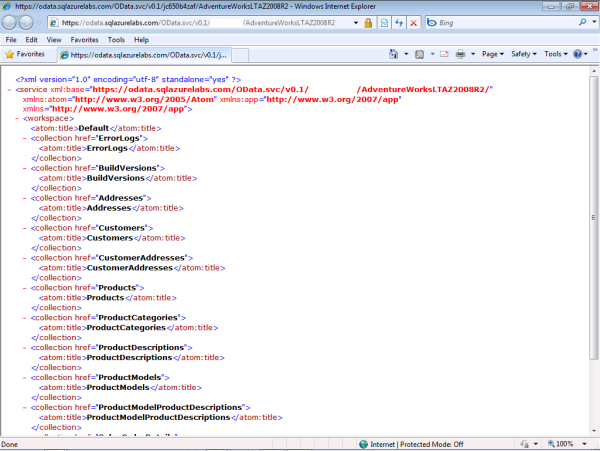

My Enabling and Using the OData Protocol with SQL Azure post of 3/23/2010:

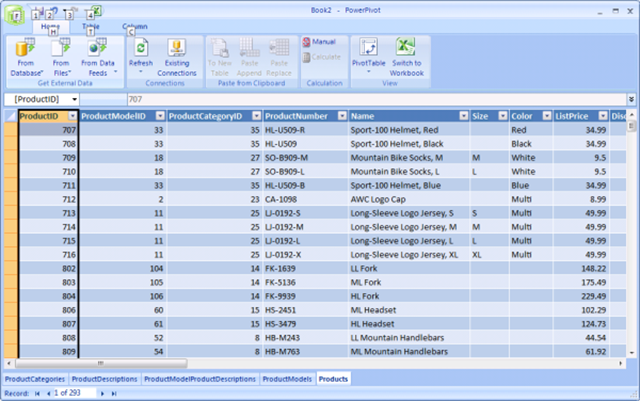

… shows you how to enable the OData protocol for specific SQL Azure instances and databases, query OData sources, and display formatted Atom 1.0 data from the tables in Internet Explorer 8 and Excel 2010 PowerPivot tables. A later post will describe using the OData protocol with SharePoint 2010 PowerPivot tables.

and continues with the instructions for munging OData content in Excel 2010 PowerPivot windows.
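As a rough illustration of what querying an OData source involves, the Python sketch below builds a query URL from OData system query options (`$top`, `$filter`, `$orderby`). The service root, server, database and table names are placeholders rather than the actual SQL Azure OData endpoint, and the `odata_query_url` helper is hypothetical, not taken from the post.

```python
from urllib.parse import quote, urlencode


def odata_query_url(service_root, entity_set, **options):
    """Return an OData query URL for one entity set.

    Keyword names map to OData system query options, e.g. top=5 -> $top=5.
    """
    base = f"{service_root.rstrip('/')}/{quote(entity_set)}"
    if not options:
        return base
    params = {f"${name}": value for name, value in options.items()}
    # Keep "$" literal in option names; percent-encode spaces and quotes.
    return f"{base}?{urlencode(params, safe='$', quote_via=quote)}"


# Placeholder service root and table name, for illustration only.
url = odata_query_url(
    "https://example.invalid/OData.svc/myserver/Northwind",
    "Customers",
    top=5,
    filter="Country eq 'USA'",
    orderby="CompanyName",
)
print(url)
```

Pasting a URL of this shape into a browser is what returns the Atom feed that the post goes on to format.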

The SQL Azure Team delivered a SQL Azure Connectivity Troubleshooting Guide on 3/22/2010:

This post was put together by one of our incredible support engineers, Abi Iyer, to help you to troubleshoot some of the common connectivity error messages that you would see while connecting to SQL Azure as listed below:

- A transport-level error has occurred when receiving results from the server. (Provider: TCP Provider, error: 0 - An existing connection was forcibly closed by the remote host.)

- System.Data.SqlClient.SqlException: Timeout expired. The timeout period elapsed prior to completion of the operation or the server is not responding. The statement has been terminated.

- An error has occurred while establishing a connection to the server. When connecting to SQL Server 2005, this failure may be caused by the fact that under the default settings SQL Server does not allow remote connections

- Error: Microsoft SQL Native Client: Unable to complete login process due to delay in opening server connection.

- A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond. …

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Eugenio Pace announces Windows Azure Guidance – First version of a-Expense in the cloud in this 3/23/2010 post:

Available for download here, you’ll find the first step in taking a-Expense to Windows Azure. Highlights of this release are:

- Use of SQL Azure as the backend store for application entities (e.g. expense reports)

- Uses Azure storage for user profile information (the “Reimbursement method” user preference)

- Application no longer queries AD for user attributes needed in the application (Cost Center and employee’s Manager, the expense default approver). These are received as claims in the security token issued by Adatum’s STS.

- Authentication is now claims based.

- Logging and tracing are now done through Windows Azure infrastructure.

- Deployment to Windows Azure is automated.

#1 is straightforward thanks to SQL Azure’s relatively high fidelity with SQL Server.

For #2, we used the providers included in the Windows Azure training kit (with a few modifications/simplifications). The main challenge here is to initially migrate the data from the “on premises” SQL Server to Azure storage.

#3 is relatively simple by using WIF. The main changes are in the UserRepository class where instead of calling the (Simulated)LDAPProvider, we simply inspect the claims collection in the current principal:

#4 requires “claims enabling” a-Expense. Look inside the web.config for all extra config sections added by WIF:

It also requires a valid “Issuer” of claims. We have included a “Simulated” Issuer that creates the token we need. You’ll find this as part of the solution: …

Eugenio continues with additional implementation details.
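The change described in #3 (reading user attributes from the claims on the current principal instead of querying a directory) can be sketched in a few lines. This is a language-neutral Python illustration, not the a-Expense code, which is a .NET application using WIF; the class names and claim type URIs below are invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical claim type URIs; the real a-Expense claim types are not given here.
COST_CENTER = "http://example.invalid/claims/costcenter"
MANAGER = "http://example.invalid/claims/manager"


@dataclass
class Principal:
    """Stand-in for the authenticated principal carrying issued claims."""
    name: str
    claims: list = field(default_factory=list)  # list of (claim_type, value)


@dataclass
class User:
    name: str
    cost_center: str
    manager: str


class UserRepository:
    """Builds a User from claims instead of calling a directory service."""

    def get_user(self, principal):
        claims = dict(principal.claims)
        missing = [t for t in (COST_CENTER, MANAGER) if t not in claims]
        if missing:
            raise KeyError(f"token is missing required claims: {missing}")
        return User(principal.name, claims[COST_CENTER], claims[MANAGER])


principal = Principal("mary", [(COST_CENTER, "31023"), (MANAGER, "john")])
user = UserRepository().get_user(principal)
print(user)
```

The point of the design is that the application no longer needs network access to the directory: everything it needs arrives in the token.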

Jason Follas continues his Windows Azure AppFabric series with Part III: Windows Azure platform AppFabric Access Control: Obtaining Tokens of 3/22/2010:

- Our distributed nightclub system has four major components: A Customer application coupled with some external identity management system, a Bartender web service, and ACS, which acts as our nightclub’s Bouncer.

- A Customer application ultimately represents a user that wishes to order a drink from the Bartender web service. The Bartender web service must not serve drinks to underage patrons, so it needs to know the user’s age (this is known as a “claim”). The nightclub has no interest in maintaining a comprehensive list of customers and their ages, so instead, a Bouncer ensures that the user’s claimed age comes from a trusted source (this is known as an “issuer”). If the claim’s source can be verified, the Bouncer will give the customer a token containing its own set of claims that the Bartender web service will recognize. In the end, the Bartender doesn’t have to concern itself with all of the possible issuers – it only needs to recognize a single token generated by the Bouncer (ACS). …

Jason continues with “Anatomy of a Token Request,” “Implementation,” “Where to Execute This Code,” and “From Here” topics:

This article demonstrated how to obtain a token from ACS. In the next article, I will show how to use this token in order to access a protected resource (such as our Bartender web service) and how to validate a token when a protected resource receives one. At some point, I’m also hoping to be able to demonstrate how ADFS v2.0 and WIF fits into the picture (one challenge at the moment is getting an Active Directory environment, either real or mocked, up and running). Stay tuned!
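To make the Bouncer/Bartender analogy concrete, here is a small self-contained Python simulation of the flow: a trusted issuer signs an age claim, the Bouncer verifies it and issues its own token, and the Bartender only checks the Bouncer's signature. It is loosely modeled on form-encoded name/value tokens signed with HMAC-SHA256, it does not call ACS, and the keys, claim names and function names are all made up for the example.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qsl, quote, urlencode

# Made-up shared secrets, for illustration only.
TRUSTED_ISSUERS = {"id-provider": b"issuer-secret"}  # issuers the Bouncer trusts
BOUNCER_KEY = b"bouncer-bartender-secret"            # shared by Bouncer and Bartender


def _sign(key, text):
    """Return a URL-safe, base64-encoded HMAC-SHA256 signature of text."""
    digest = hmac.new(key, text.encode("utf-8"), hashlib.sha256).digest()
    return quote(base64.b64encode(digest).decode("ascii"), safe="")


def seal(key, claims):
    """Form-encode the claims and append a signature as the last parameter."""
    body = urlencode(claims)
    return f"{body}&HMACSHA256={_sign(key, body)}"


def unseal(key, token):
    """Verify the trailing signature and return the claims as a dict."""
    body, _, signature = token.rpartition("&HMACSHA256=")
    if not body or not hmac.compare_digest(signature, _sign(key, body)):
        raise ValueError("signature check failed")
    return dict(parse_qsl(body))


def bouncer_issue_token(issuer, assertion):
    """Check that the claim comes from a trusted issuer, then issue a new token."""
    key = TRUSTED_ISSUERS.get(issuer)
    if key is None:
        raise PermissionError(f"untrusted issuer: {issuer}")
    claims = unseal(key, assertion)
    over_21 = int(claims["age"]) >= 21
    return seal(BOUNCER_KEY, {"Issuer": "bouncer", "over21": str(over_21).lower()})


def bartender_serves(token):
    """The Bartender trusts only tokens signed by the Bouncer."""
    claims = unseal(BOUNCER_KEY, token)
    return claims.get("Issuer") == "bouncer" and claims.get("over21") == "true"


adult = seal(TRUSTED_ISSUERS["id-provider"], {"name": "alice", "age": 30})
minor = seal(TRUSTED_ISSUERS["id-provider"], {"name": "bob", "age": 19})
print(bartender_serves(bouncer_issue_token("id-provider", adult)))
print(bartender_serves(bouncer_issue_token("id-provider", minor)))
```

Note that the Bartender never sees the issuer's key or the original age claim; it only needs the one secret it shares with the Bouncer.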

Jason’s earlier members of this series are:

- Part 1: Windows Azure platform AppFabric Access Control: Introduction (3/8/2010)

- Part 2: Windows Azure platform AppFabric Access Control: Service Namespace (3/17/2010)

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The “Geneva” team describes problems with Using the Windows Identity Foundation SDK with Visual Studio 2010 RC in this 3/23/2010 post:

There are some known issues with using the WIF SDK on VS 2010 RC that do not exist with VS 2008. When VS 2010 is released, we expect to refresh the SDK to resolve these problems, but in the meantime, we have some simple guidance for using the SDK with VS 2010. …

Jim Nakashima’s Fixing the Silverlight Design Time in a Windows Azure Cloud Service post of 3/23/2010 is a workaround for:

- Visual Studio 2008 (works fine on VS 2010)

- Windows Azure Tools for Microsoft Visual Studio February 2010 Release

- Silverlight 3 Tools for Visual Studio 2008

- Building a Silverlight application in a Windows Azure Cloud Service

This is the issue reported in this forum thread.

To reproduce the issue:

1. Create a new Windows Azure Cloud Service project. (File | New Project) …

Jim continues with the repro process.

Jim’s GAC Listing for Windows Azure Guest OS 1.1 offers a 227-member “list of the contents of the GAC for Windows Azure Guest OS 1.1 (Release 201001-01):”

Please see this post: http://blogs.msdn.com/jnak/archive/2010/03/21/viewing-gac-d-assemblies-in-the-windows-azure-os.aspx for a walkthrough of how this listing was obtained and how you can obtain the listing for any Windows Azure OS you choose.

Vijay Rajagopalan announces “we just released an updated version including many bug fixes and compatibility with the latest Windows Azure SDK (version 1.1)” in his Eclipse shines on Windows 7: Microsoft and Tasktop partnering to contribute code enhancement to Eclipse post of 3/22/2010:

… To close, I’d like to give you a quick update on other Eclipse related projects, which we are working on with Soyatec:

- Windows Azure Tools for Eclipse: we just released an updated version including many bug fixes and compatibility with the latest Windows Azure SDK (version 1.1).

- Eclipse Tools for Silverlight (eclipse4SL) for Mac and Windows: the next release is planned for this spring. Stay tuned!

David Chou wants you to Run Java with Jetty in Windows Azure, according to this 3/22/2010 post:

Jetty is a Java-based, open source Web Server which provides a HTTP server and Servlet container capable of serving static and dynamic content either from a standalone or embedded instantiations. Jetty is used by many popular projects such as the Apache Geronimo JavaEE compliant application server, Apache ActiveMQ, Apache Cocoon, Apache Hadoop, Apache Maven, BEA WebLogic Event Server, Eucalyptus, FioranoMQ Java Messaging Server, Google App Engine and Web Toolkit plug-in for Eclipse, Google Android, RedHat JBoss, Sonic MQ, Spring Framework, Sybase EAServer, Zimbra Desktop, etc. (just to name a few).

The Jetty project provides:

- Asynchronous HTTP Server

- Standard based Servlet Container

- Web Sockets server

- Asynchronous HTTP Client

- OSGi, JNDI, JMX, JASPI, AJP support …

David continues with an analysis of “Java Support in Windows Azure.”

Joannes Vermorel makes a case for special Windows Azure classroom pricing in his Thinking an academic package for Azure post of 3/22/2010:

This year is the 4th time that I am teaching Software Engineering and Distributed Computing at the ENS. The classroom project of 2010 is based on Windows Azure, like the one of 2009.

Today, I have been kindly asked by Microsoft folks to suggest an academic package for Windows Azure, to help those like me who want to get their students started with cloud computing in general, and Windows Azure in particular.

Thus, I decided to post here my early thoughts on that one. Keep in mind I have strictly no power whatsoever on Microsoft strategy. I am merely publishing here some thoughts in the hope of getting extra community feedback.

I believe that Windows Azure, with its strong emphasis on tooling experience, is a very well suited platform for introducing cloud computing to Computer Science students.

Unfortunately, getting an extra budget allocated for the cloud computing course in the Computer Science Department of your local university is typically complicated. Indeed, very few CS courses require a specific budget for experiments. Furthermore, the pay-as-you-go pricing of cloud computing goes against nearly every single budgeting rule that universities have in place - at least for France - but I would guess similar policies in other countries. Administrations tend to be wary of “elastic” budgeting, and IMHO, rightfully so. This is not a criticism, merely an observation.

In my short 2-years cloud teaching experience, a nice academic package sponsored by Microsoft has tremendously simplified my situation to deliver the “experimental” part of my cloud computing course.

Obviously, unlike software licenses, offering cloud resources costs very real money. In order to make the most of resources allocated to academics, I would suggest narrowing the offer down to the students who are the most likely to have an impact on the software industry in the next decade. …

Joannes continues with the details of his suggested academic pricing schedule.

Jim Nakashima explains Viewing GAC’d Assemblies in the Windows Azure OS in this 3/22/2010 post:

One of the main issues you can run into when deploying is that one of your dependent assemblies is not available in the cloud.

This will result in your cloud service never hitting the running state, instead it will cycle between the initializing, busy and stopping states.

(the other main reason is that a storage account, especially the one included in the default template, is pointing to development storage – see this post about running the app on the devfabric with devstorage turned off to weed that issue out)

To help you understand what assemblies are available in the cloud and which are not, this post goes through the steps of building a cloud service that will output the assemblies in the GAC – a view into the cloud, similar to my certificate viewing app. (Download the Source Code)

Here’s the final product showing the canonical example of how System.Web.Mvc is not a part of the .NET Framework 3.5 install and needs to be XCOPY deployed to Windows Azure as part of the service package:

Since the GAC APIs are all native, I figured the easiest way to do what I want and leverage a bunch of existing functionality is to simply include gacutil as part of my cloud service, run it and show the output in a web page.
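The approach described above (run `gacutil`, capture its listing, and inspect it) can be approximated outside a cloud service with a short Python sketch. This is not Jim's code, which is a .NET web role; the function names are hypothetical and the sample listing is a hand-written approximation of `gacutil /l` output rather than a capture from a real Guest OS.

```python
import subprocess


def list_gac(gacutil_path="gacutil.exe"):
    """Run `gacutil /l` and return its raw text output (Windows only)."""
    result = subprocess.run(
        [gacutil_path, "/l"], capture_output=True, text=True, check=True
    )
    return result.stdout


def parse_gac_listing(text):
    """Return {assembly_name: set_of_versions} from gacutil-style output."""
    assemblies = {}
    for line in text.splitlines():
        parts = [part.strip() for part in line.split(",")]
        fields = dict(p.split("=", 1) for p in parts[1:] if "=" in p)
        if "Version" not in fields:
            continue  # banner, blank, or summary line
        assemblies.setdefault(parts[0], set()).add(fields["Version"])
    return assemblies


# Illustrative sample only; a real listing has hundreds of entries.
SAMPLE = """\
The Global Assembly Cache contains the following assemblies:
  System.Web, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a, processorArchitecture=x86
  System.Core, Version=3.5.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089, processorArchitecture=MSIL

Number of items = 2
"""

gac = parse_gac_listing(SAMPLE)
for name in ("System.Web", "System.Web.Mvc"):
    status = "in GAC" if name in gac else "not in GAC, deploy with the package"
    print(f"{name}: {status}")
```

Comparing a project's references against such a listing before deployment is one way to catch the missing-assembly case that leaves a role cycling between states.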

Marius Oiaga delivers a third-party Introducing the Facebook Azure Toolkit post of 3/22/2010 that begins:

An open source project hosted on CodePlex promises to streamline the development of Facebook applications that also leverage Microsoft’s Cloud platform. The Facebook Azure Toolkit is currently available for download under a Microsoft Public License (Ms-PL), which is an open source license. Ahead of anything else, the toolkit is designed as a resource for developers that are looking to bridge their Facebook applications with Windows Azure. Its makers underlined the fact that the Toolkit should be used by devs that already know how to build Facebook apps, or are willing to learn on their own.

“The Facebook Azure toolkit provides the ability to rapidly develop Facebook applications that leverage Windows Azure to profit from all the benefits of the cloud with a solid framework based on best practices. Facebook applications hosted in Azure provide a flexible and scalable cloud computing platform for the smallest and largest of Facebook applications. Whether you have millions of users or just a few thousand, the Facebook Azure Toolkit helps you to build your app correctly so that if your app is virally successful, you won't have to architect it again. You will just need to increase the number of instances you are using, and then you are done scaling,” revealed Christian Klasen, Microsoft senior business development manager.

In order to access the project, devs will need to be running the Release Candidate (RC) development milestone of Visual Studio 2010. According to the Toolkit’s description, it brings to the table the Facebook Developers Toolkit, the Ninject 2.0 for Dependency Injection, ASP.NET MVC 2, Windows Azure Software Development Kit (February 2010), AutoMapper, Azure Toolkit - Simplified library for accessing Message Queues, Table Storage and SQL Server and automated build scripts for one-click deployment from TFS 2010 to Azure. …

<Return to section navigation list>

Windows Azure Infrastructure

R. “Ray” Wang claims “Confusion Continues With Cloud Computing And SaaS Definitions” in his Tuesday’s Tip: Understanding The Many Flavors of Cloud Computing and SaaS of 3/23/2010:

Coincidence or just brilliance must be in the air as three esteemed industry colleagues, Phil Wainewright, Michael Cote, and James Governor, have all decided to clarify definitions on SaaS and Cloud within a few days of each other. In fact, this couldn’t be more timely as SaaS and Cloud enter into mainstream discussion with next gen CIO’s evaluating their apps strategies. A few common misconceptions often include:

“That hosting thing is like SaaS”

“Cloud, SaaS, all the same, we don’t own anything”

“OnDemand is Cloud Computing”

“ASP, Hosting, SaaS seems all the same”

“It all costs the same so what does it matter to me?”

“Why should I care if it’s multi-tenant or not?”

“What’s this private cloud versus public cloud?”

Cloud Computing Represents The New Delivery Model For Internet Based IT services

Traditional and Cloud based delivery models share 4 key parts (see Figure 1):

Consumption – how users consume the apps and business processes

Creation – what’s required to build apps and business processes

Orchestration – how parts are integrated or pulled from an app server

Infrastructure – where the core guts such as servers, storage, and networks reside

As the über category, Cloud Computing comprises:

Business Services and Software-as-a-Service (SaaS) – The traditional apps layer in the cloud includes software as a service apps, business services, and business processes on the server side.

Development-as-a-Service (DaaS) – Development tools take shape in the cloud as shared community tools, web based dev tools, and mashup based services.

Platform-as-a-Service (PaaS) – Middleware manifests in the cloud with app platforms, database, integration, and process orchestration.

Infrastructure-as-a-Service (IaaS) – The physical world goes virtual with servers, networks, storage, and systems management in the cloud.

Figure 1. Traditional Delivery Compared To Cloud Based Delivery

Ray continues with a claim that “The Apps Layer In The Cloud Represents Many Flavors From Hosted To True SaaS.”

Brian Sommer analyzes The Expanding Gap Between On-Premise and SaaS Solutions in this 3/23/2010 post:

On-premise vendors that are trying to play in the SaaS world (and I use the word ‘trying’ on purpose) are moving at a glacial pace and falling ever further behind SaaS application software vendors.

Let’s look at the scorecard so far.

On-premise vendors, in general, have either ignored SaaS, been dismissive of SaaS or have been struggling to get SaaS versions of their older products ready for prime time. Specifically, on-premise vendors have been troubled with:

- Getting multi-tenancy to work with their products. Products that were designed to be one-off implementations at customers may need a complete re-think to work in the cloud for scores of different customers simultaneously. If multi-tenancy wasn’t designed into the products at the onset, a retro-fit is going to be costly (and possibly ugly).

- Getting a Platform as a Service (PaaS) integrated with their SaaS solution. On-premise vendors keep getting confused with their legacy SOA platforms and a PaaS. While both can do many of the same things, on-premise SOA platforms have not turned into commercial software development platforms like PaaS solutions like Force.com and NS-BOS. It’s time on-premise vendors realize that their SOA architectures are fine for on-premise products but insufficient and inappropriate for SaaS products. Just look at the multitude of applications that have already been built on PaaS solutions. Where are the FinancialForce.com and App Exchanges in the on-premise world? I don’t see them and I don’t see them coming either.

- Getting Integration as a Service (IaaS) incorporated into the solution set. The biggest innovations in getting application software implementation costs lowered (at all) in the last few years have come from third parties like Business Process Outsourcers and not from on-premise vendors. Granted, SAP has done some work here with some success, but on-premise vendors haven’t made this a focus area for decades. SaaS vendors have leveraged IaaS technologies from the start and the speed of these implementations is proof-positive of their success.

In the last few weeks, I’ve read a couple of interesting announcements that show the on-premise vendors are losing more ground to faster moving SaaS competitors. Let’s look at three of these announcements:

Joe McKendrick asserts “Call it what you want: a service is a service is a service” in his SOA vendors embark on 'cloudwashing' post of 3/22/2010:

Perhaps it’s a good thing we had SOA to noodle around with before cloud came along big time.

Dave Linthicum, an entrepreneur extraordinaire himself, has raised an interesting point about something vendors in the SOA space have been doing lately: Many are no longer just calling themselves “SOA” vendors. They are now also “cloud” vendors.

They are, in fact, engaged in something called “cloudwashing”: “renaming technology, strategies, and services to using the term ‘cloud.’” Dave observes that “almost all of the SOA technology players have ‘cloud washed’ their products and messaging to use ‘cloud.’” He also links to John Treadway, who originally coined the term to describe Salesforce.com’s move from “Software as a Service” to “cloud.”

Dave also makes the following observation:

“Indeed, almost every day I’m told that ‘We’re no longer a SOA company, we’re a cloud computing company.’ Which is not at all logical.”

Dave also adds a new term to the lexicon: “Cloud-efying,” or the process of moving SOA technology to a virtualized and multi-tenant platform. In the case of SOA vendors, many are providing an on-demand version of their software. …

Lori MacVittie asserts “In the short term, hybrid cloud is going to be the cloud computing model of choice” in her The Three Reasons Hybrid Clouds Will Dominate post of 3/22/2010:

Amidst all the disconnect at CloudConnect regarding standards and where “cloud” is going was an undercurrent of adoption of what most have come to refer to as a “hybrid cloud computing” model. This model essentially “extends” the data center into “the cloud” and takes advantage of less expensive compute resources on-demand. What’s interesting is that the use of this cheaper compute is the granularity of on-demand. The time interval for which resources are utilized is measured more in project timelines than in minutes or even hours. Organizations need additional compute for lab and quality assurance efforts, for certification testing, for production applications for which budget is limited. These are not snap decisions but rather methodically planned steps along the project management lifecycle. It is on-demand in the sense that it’s “when the organization needs it”, and in the sense that it’s certainly faster than the traditional compute resource acquisition process, which can take weeks or even months.

Also mentioned more than once by multiple panelists and speakers was the notion of separating workload such that corporate data remains in the local data center while presentation layers and GUIs move into the cloud computing environment for optimal use of available compute resources. This model works well and addresses issues with data security and privacy, a constant top concern in surveys and polls regarding inhibitors of cloud computing.

It’s not just the talk at the conference that makes such a conclusion probabilistic. An Evans Data developer survey last year indicated that more than 60 percent of developers would be focusing on hybrid cloud computing in 2010.

Results of the Evans Data Cloud Development Survey, released Jan. 12, show that 61 percent of the more than 400 developers polled said some portion of their organizations' IT resources "will move to the public cloud within the next year," Evans Data said. "However, over 87 percent [of the developers] say half or less than half of their resources will move ... As a result, the hybrid cloud is set to dominate the coming IT landscape."

There are three reasons why this model will become the de facto standard strategy for leveraging cloud computing, at least in the short term and probably for longer than some pundits (and providers) hope. …

Lori continues with additional arguments in favor of hybrid cloud deployment, but bear in mind that hybrid clouds have the potential to ring up more sales for F5 products than public clouds.

Matthew Casperson posits 7 Ways to Beat the Glut of Cloud APIs – Cloud Computing in this 3/18/2010 post:

Cloud computing is big right now, but the sheer number of options, and the lack of interoperability, can be an issue for developers. Vendor lock-in reduces the options available to those working on cloud computing initiatives, while the variability between APIs inevitably leads to many hours spent poring over documentation when comparing cloud service providers.

There are a number of projects that can reduce, or even eliminate, some of these problems by exposing the functionality of a number of cloud service providers through a consistent interface. Here is a list of 7 such projects.

- DeltaCloud is a Red Hat initiative that provides a REST API and user tools to manage EC2, RHEV-M, RackSpace and RimuHosting, with VMware ESX and more coming soon.

…

- OpenNebula is an open and flexible tool that fits into existing data center environments to build any type of Cloud deployment. OpenNebula 1.4 supports Xen, KVM and VMware virtualization platforms and on-demand access to Amazon EC2 and ElasticHosts Cloud providers. It also features new local and Cloud interfaces, such as libvirt, EC2 Query API and OGC OCCI API.

- Cloudloop is a new open source Java API and command-line management tool for cloud storage. Unfortunately the cloudloop web site was hacked recently, but you can find more information with this post on TheServerSide.

Just as with the growth of these cloud APIs and platforms, there are more libraries and abstraction initiatives all the time. Feel free to share any others we missed in the comments.

David Linthicum claims “Although few companies actually have private clouds yet, the basic principles for success are clear” in his Building private clouds: 3 dos and 3 don'ts article of 3/22/2010 for InfoWorld’s Cloud Computing blog:

The patterns of success are emerging around the use of private cloud computing within the enterprise, and it's time to begin sharing what's working and what's not working. Here are three each of my dos and don'ts [abbreviated].

- Do make sure to leverage SOA …

- Do consider performance …

- Do consider security and governance …

- Don't preselect private cloud software based on what's popular …

- Don't call it "elastic scalability" …

- Don't let the vendor drive your solution …

<Return to section navigation list>

Cloud Security and Governance

Steve Hanna and Jesus Molina claim “Here are some answers” to your Cloud Security Questions? in this 3/23/2010 post:

For companies considering a transition to cloud computing (CC), one of the major concerns is (or should be) security. If addressed properly while selecting a cloud computing provider or cloud provider (CP), security can actually improve for many companies. For many firms, a cloud computing provider can provide better security than their in-house facilities. This is because the CPs are devoting huge resources to making security a non-issue for customers and, in fact, a selling point versus other CPs. With billions of dollars of potential business at stake, CPs are going to do their best to secure their environment. However, there are many new risks with CPs that should concern potential users.

Before trusting a particular provider, potential customers must perform adequate due diligence to make sure that the CP has the proper controls in place to protect their data and applications so they can obtain the required security and reliability. Fortunately, the competitive environment in which CPs operate provides selection options and, in many cases, more control than customers had with their own IT organization. Savvy cloud shoppers can play one provider against another to their advantage - if they know what to look for.

Customers must start by determining their overall system requirements including security. Then they can go to CPs and query them to make sure the customer's requirements are met. Asking the right questions and knowing what to look for in answers is the key to getting the expected level of security. …

<Return to section navigation list>

Cloud Computing Events

Bernard Golden observes on page 2 of his Cloudnomics: The Economics of Cloud Computing post of 3/22/2010:

As the general value proposition of cloud computing has become accepted, there is an increased interest in cloud TCO. CIO.com's Bernard Golden discusses the economics of cloud computing and shares other notes from the CloudConnect conference.

CloudClub PaaS. As an add-on to the main conference, on Tuesday night a CloudClub meeting was held. CloudClub is a monthly event held in the Bay Area for people involved in cloud computing companies. This month's event focused on PaaS (Platform-as-a-Service). Several of the presenters asserted that, long-term, PaaS is how people will use cloud computing. The first presenter, Mitch Garnaat, showed a number of forum postings from AWS from people who it was clear assumed that AWS would offer transparent application scaling with no effort required from the application developer. Just to be clear, AWS provides the resources to scale an application, but expects the application to arrange for the new resources to join the application. Consequently, according to these presenters, people will turn to PaaS providers, which do arrange for transparent scaling for applications written to their framework. The flip side to depending upon the platform provider for scalability is, of course, lock-in. As to the question about naive users expecting IaaS vendors to provide more services than they really do, there's no doubt. We recently interacted with a company that thought their admin costs would fall through the floor because AWS would take complete application responsibility for only 8.5 cents per hour. They were disappointed when they found out that AWS explicitly abjures that responsibility. I'm not 100 percent convinced on the "inevitable PaaS" question, though; however, I think Microsoft offers a pretty interesting play in this regard and deserves some respect for what it's delivering. We'll see. …
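For a sense of scale, the 8.5 cents per hour quoted above can be turned into monthly and yearly figures. The short Python calculation below assumes a single instance running continuously, a 30-day month and a 365-day year; it covers raw compute time only, which is exactly the point of the anecdote, since administration and application management are not included in that rate.

```python
from decimal import Decimal

HOURLY_RATE = Decimal("0.085")  # USD per instance-hour, the figure quoted above


def compute_cost(hours, instances=1, rate=HOURLY_RATE):
    """Return the raw compute charge for the given instance-hours."""
    return rate * hours * instances


monthly = compute_cost(24 * 30)   # assumes a 30-day month
yearly = compute_cost(24 * 365)   # assumes a 365-day year
print(f"per month: ${monthly:.2f}, per year: ${yearly:.2f}")
```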

Eric Nelson announces a Free Windows Azure event next Monday in London (29th March) in this 3/23/2010 post:

I just heard that we still have spaces for this event happening next week (29th March 2010). Whilst the event is designed for start-ups, I’m sure nobody would notice if you snuck in :-) Just keep it to yourself ;-)

Register using invitation code: 79F2AB. Hope to see you there.

The agenda is looking pretty swish:

- 09:00 – 09:30 Registration

- 09:30 - 10:15 Keynote ‘I’ve looked at clouds from both sides now....’– John Taysom, Active Seed Investor

- 10:15 - 10:45 The Microsoft Vision for Cloud Computing – Steve Clayton, Director Software + Services, EMEA

- 10:45 - 11:00 Break

- 11:00 - 12:30 “Windows Azure in Real World” – hear from startups that have built their business around the Azure platform, moderated by Alistair Beagley, Azure UK Developer and Platform Lead

- 12:30 - 13:15 Lunch and networking

- 13:15 - 14:15 Breakout Tracks, moderated by our Azure Experts …

- 14:15 - 14:30 Session change over

- 14:30 - 15:30 Breakout Tracks, moderated by our Azure Experts …

- 15:30 - 16:00 Break & Session change over

- 16:00 - 17:00 Breakout Tracks, moderated by our Azure Experts …

- 17:00 - 18:00 Pitches and Judging

- 18:15 Wrap-up and close

- 18:15 - 20:00 Drinks & Networking

Bruno Terkaly reviewed the Meetup he hosted at Microsoft San Francisco in this 3/23/2010 post:

Hands On Lab

During my last Azure launch event I promised my audience that I would put together an informal training session on Azure at Microsoft San Francisco.

Last night I delivered on that promise.

Happy with the turnout

In a matter of a few weeks I managed to get 56 signups for the event. About 40 showed up which pleased me in terms of the ratio of sign ups and attendees.

Many of the attendees were extremely experienced developers. They included members of startups, scientists, founders of software companies, CTOs, and employees of Lawrence Livermore Labs. I was humbled by my audience.

You can read more about it here: http://www.meetup.com/Cloud-Computing-Developers-Group/. …

Ryan Dunn and Steve Marx present a 00:24:06 Cloud Cover Episode 5 - MIX Edition Channel9 video segment:

Join Ryan and Steve each week as they cover the Microsoft cloud with news, features, tips, and tricks. You can follow and interact with the show at @cloudcovershow

In this special MIX episode:

- Get the inside scoop on the Windows Azure Presentations at MIX

- Discover a tip on using cloud storage locally during development

Show Links:

- Lap around the Windows Azure Platform – Steve Marx

- Building and Deploying Windows Azure Based Applications with Microsoft Visual Studio 2010 – Jim Nakashima

- Building PHP Applications using the Windows Azure Platform – Craig Kitterman, Sumit Chawla

- Using Ruby on Rails to Build Windows Azure Applications – Sriram Krishnan

- Microsoft Project Code Name “Dallas”: Data for your apps – Moe Khosravy

- Using Storage in the Windows Azure Platform – Chris Auld

- Building Web Applications with Windows Azure Storage – Brad Calder

- Building Web Applications with Microsoft SQL Azure – David Robinson

- Connecting Your Applications in the Cloud with Windows Azure AppFabric – Clemens Vasters

- Microsoft Silverlight and Windows Azure: A Match Made for the Web – Matt Kerner

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Mike Kirkwood’s Enterprise Cloud Control: Q&A with Eucalyptus CTO Dr. Rich Wolski post of 3/23/2010 begins:

Eucalyptus is a software layer that forms private cloud patterns in the enterprise. Private clouds are bringing together the best of Linux, Amazon, and VMware in a practical way.

It could be argued that the cloud itself is a product of the open source spirit. So, with that in mind, we took a closer look at Eucalyptus and sat down with Dr. Rich Wolski, Chief Technology Officer of the Eucalyptus team, to figure out what the opportunity is and why it is gathering the attention of successful open source entrepreneurs, investors, and partners. …

James Hamilton explains one of the reasons he works at Amazon Web Services in his Using a Market Economy post of 3/23/2010:

Every so often, I come across a paper that just nails it and this one is pretty good. Using a Market Economy to Provision Resources across Planet-wide Clusters doesn’t fully investigate the space but it’s great to see progress on this important area and the paper is a strong step in the right direction.

I spend much of my time working on driving down infrastructure costs. There is lots of great work that can be done in datacenter infrastructure, networking, and server design. It’s both a fun and important area. But, an even bigger issue is utilization. As an industry, we can and are driving down the cost of computing and yet it remains true that most computing resources never get used. Utilization levels at large and small companies typically run in the 10 to 20% range. I occasionally hear reference to 30% but it’s hard to get data to support it. Most compute cycles go wasted. Most datacenter power doesn’t get useful work done. Most datacenter cooling is not spent supporting productive work. Utilization is a big problem. Driving down the cost of computing certainly helps but it doesn’t address the core issue: low utilization.

That’s one of the reasons I work at a cloud computing provider. When you have very large, very diverse workloads, wonderful things happen. Workload peaks are not highly correlated. For example, tax preparation software is busy around tax time. Retail software towards the end of the year. Social networking while folks in the region are awake. All these peaks and valleys overlay to produce a much flatter peak to trough curve. As the peak to trough ratio decreases, utilization skyrockets. You can only get these massively diverse workloads in public clouds and it’s one of the reasons why private clouds are a bit depressing (see Private Clouds are not the Future). Private clouds are so close to the right destination and yet that last turn was a wrong one and the potential gains won’t be achieved. I hate wasted work as much as I hate low utilization. [Emphasis added.] …
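The peak-to-trough argument above can be illustrated with a small Python sketch. The three demand profiles and all of their numbers are invented for illustration and are not taken from James Hamilton's post or the paper; the function names are likewise hypothetical. The sketch assumes capacity is provisioned to cover each workload's peak, so utilization is average load divided by peak load.

```python
# Illustrative only: the demand figures below are made-up assumptions,
# not measurements from any real datacenter.

def peak_to_trough(load):
    """Ratio of the busiest period to the quietest period."""
    return max(load) / min(load)


def utilization(load):
    """Average load as a fraction of capacity sized for the peak."""
    return sum(load) / (len(load) * max(load))


def combine(*loads):
    """Overlay several workloads period by period on shared capacity."""
    return [sum(values) for values in zip(*loads)]


# Hypothetical demand per period, in arbitrary capacity units.
tax_prep = [10, 2, 2, 2]   # busy around tax time
retail = [2, 2, 2, 10]     # busy toward the end of the year
social = [4, 8, 8, 4]      # busy while the region is awake

shared = combine(tax_prep, retail, social)

for name, load in [("tax_prep", tax_prep), ("retail", retail),
                   ("social", social), ("shared", shared)]:
    print(name, round(peak_to_trough(load), 2), round(utilization(load), 3))
```

With these invented numbers, the two spiky workloads each run at 40 percent utilization on their own, while the overlaid workload has a much lower peak-to-trough ratio and correspondingly higher utilization. Real workloads are only partly uncorrelated, so actual gains would be smaller than in this toy case.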

Colin Clark claims “If a resource can’t be tied to a necessary, sponsored, or revenue producing activity then it is cut” in his “Dynamic Cloud Resources and Accountability” post of 3/22/2010:

I reread this article from time to time just to make sure that I stay within some boundaries – 21 Experts Define Cloud Computing. Among the 21, there are a couple that I really like; I’m going to cite a few of them over the next few days, and tell you what I like and don’t like about them (Also – remember, the title reads “21 Experts…” – it didn’t say “21 Experts in Cloud Computing…” – details matter). This article was brought to my attention in a blog post, “Rumblings in the Cloud,” by Louis Lovas at Progress Apama.

Dynamic Cloud Resources – While You Wait (or “I’ll have an EC2 grid, monster that, please”)

“What is cloud computing all about? Amazon has coined the word “elasticity” which gives a good idea about the key features: you can scale your infrastructure on demand within minutes or even seconds, instead of days or weeks, thereby avoiding under-utilization (idle servers) and over-utilization (blue screen) of in-house resources. With monitoring and increasing automation of resource provisioning we might one day wake up in a world where we don’t have to care about scaling our Web applications because they can do it alone.”

Markus Klems

I like this quote for a couple of reasons – but there’s something missing here, and it’s missing in the rest of the quotes in the article as well. There’s no mention of accountability. If an organization is making use of cloud resources, where is the demand coming from? There are a couple of broad categories that this could fall into, 1) outsourced apps like email, project management, analytics, Google Apps, etc. (for a great list, see Bessemer’s Rules for the Cloud), 2) operational stuff – development, testing, storage, and 3) REVENUE producing activities. …

SunGard’s public relations department posted SunGard Availability Services announces enterprise-grade cloud computing platform on 3/22/2010:

SunGard Availability Services today announced expansion of its enterprise-grade cloud computing platform to deliver application availability and ultimately recovery in the cloud. Customer demand for the SunGard cloud solutions is strong.

As a pioneer and leader in IT operations and information availability management, SunGard is strongly positioned to deliver a highly available and resilient cloud computing solution for enterprise-grade requirements. Customers also benefit from access to a portfolio of managed hosting, storage and recovery services for physical and virtual environments.

SunGard cloud solutions in North America will take advantage of a Vblock™ Infrastructure Package, recently announced by the Virtual Computing Environment coalition, which was formed by Cisco and EMC together with VMware.

The SunGard cloud solutions are part of the company’s strategy to help customers simplify the way they leverage IT infrastructure to drive business agility and differentiation in a cost-effective manner. SunGard’s cloud platform will provide the foundation for delivering Infrastructure-, Platform- and Software-as-a-Service in an enterprise-grade, secure and highly available environment. SunGard will provide IT infrastructure on demand, which allows companies to grow or contract cloud resources based on business conditions while also addressing their need for cloud-based hot sites as low-cost recovery options.

Matt Barcus, chief technology officer of New Tech Network, a subsidiary of KnowledgeWorks Foundation, said, “We chose SunGard because its cloud platform is truly an enterprise-grade environment. New Tech needed a partner with a reputation for addressing availability and data protection, but at the same time could easily scale to meet our demanding growth. We currently have 40 high schools nationwide that rely on our applications daily, and we will double that number in the next two years. With SunGard, we have peace of mind that our applications are up and running on the latest cloud technology and managed by a skilled and experienced team.” …

<Return to section navigation list>