Windows Azure and Cloud Computing Posts for 3/19/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the January 4, 2010 commercial release and new features announced at MIX10 in March 2010.

Azure Blob, Table and Queue Services

Stefan Tilkov reports about RFC 5789: PATCH Method for HTTP in this 3/19/2010 post to the InnoQ blog:

After a long, long time, the HTTP PATCH verb has become an official standard: IETF RFC 5789. From the abstract:

- Several applications extending the Hypertext Transfer Protocol (HTTP) require a feature to do partial resource modification. The existing HTTP PUT method only allows a complete replacement of a document. This proposal adds a new HTTP method, PATCH, to modify an existing HTTP resource.

That's pretty great news, even though it will probably take some time before you can actually gain much of a benefit from it. Until now, there were two options of dealing with resource creation (and update, for that matter):

- Use a POST to create a new resource when you want the server to determine the URI of the new resource

- Use a PUT to do a full update of a resource (or create if it's not there already)

Sometimes, though, what you're looking for is a partial update. You have a bunch of different choices: You can design overlapping resources so that one of them reflects the part you're interested in, and do a PUT on that; or you can use POST, which is so unrestricted it can essentially mean anything.

With PATCH, you have a standardized protocol-level verb that expresses the intent of a partial update. That's nice, but its success depends on two factors:

- The availability of standardized patch formats that can be re-used independently of the application

- The support for the verb in terms of infrastructure, specifically intermediaries and programming toolkits

In any case, I will definitely start advocating its use for the purpose it's been intended to support, even if this means going with home-grown patch formats for some time: It's still better than POST, and using some sort of x-http-method-override-style workaround should work nicely if needed.

Kudos to James Snell for investing the time and energy to take this up.
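To make the distinction concrete, here is a minimal Python sketch of a partial update. It assumes a home-grown patch format in which each key in the patch replaces the matching key in the resource and a null value removes it. The format, the function names, and the example URL are illustrative assumptions and are not defined by RFC 5789. The X-HTTP-Method-Override header is the common tunneling convention the post alludes to; it works only if the server chooses to honor it.

```python
import json
import urllib.request


def apply_partial_update(resource: dict, patch: dict) -> dict:
    """Apply a home-grown patch (assumed format) and return a new resource.

    Keys present in `patch` overwrite the resource; a value of None deletes
    the key. Keys absent from `patch` are left untouched, which is the point
    of a partial update as opposed to a full PUT replacement.
    """
    updated = dict(resource)  # copy so the caller's resource is not mutated
    for key, value in patch.items():
        if value is None:
            updated.pop(key, None)
        else:
            updated[key] = value
    return updated


def build_patch_request(url: str, patch: dict, use_override: bool = False):
    """Build (but do not send) an HTTP request carrying the patch document.

    With use_override=True the request is tunneled through POST for
    intermediaries or toolkits that do not yet understand PATCH.
    """
    body = json.dumps(patch).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    method = "PATCH"
    if use_override:
        headers["X-HTTP-Method-Override"] = "PATCH"
        method = "POST"
    return urllib.request.Request(url, data=body, headers=headers, method=method)


if __name__ == "__main__":
    item = {"name": "Widget", "price": 10, "note": "old"}
    print(apply_partial_update(item, {"price": 12, "note": None}))
    request = build_patch_request("http://example.com/items/1", {"price": 12})
    print(request.get_method(), request.full_url)
```

The request is only constructed here, never sent, so the sketch runs without a server. In practice the patch media type would need to be agreed between client and server, which is exactly the missing standardization the post mentions.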

Martin Fowler climbs on the REST bandwagon with his Richardson Maturity Model: Steps toward the glory of REST article of 3/18/2010:

A model (developed by Leonard Richardson) that breaks down the principal elements of a REST approach into three steps. These introduce resources, http verbs, and hypermedia controls.

Recently I've been reading drafts of Rest In Practice: a book that a couple of my colleagues have been working on. Their aim is to explain how to use Restful web services to handle many of the integration problems that enterprises face. At the heart of the book is the notion that the web is an existence proof of a massively scalable distributed system that works really well, and we can take ideas from that to build integrated systems more easily.

Figure 1: Steps toward REST

To help explain the specific properties of a web-style system, the authors use a model of restful maturity that was developed by Leonard Richardson and explained at a QCon talk. The model is a nice way to think about using these techniques, so I thought I'd take a stab at my own explanation of it. (The protocol examples here are only illustrative, I didn't feel it was worthwhile to code and test them up, so there may be problems in the detail.) …

Martin continues with his traditional detailed analyses of architectural models.


SQL Azure Database (SADB, formerly SDS and SSDS)

Mary Jo Foley’s Microsoft to provide customers with more cloud storage post of 3/19/2010 reports:

Microsoft made a couple of somewhat under-the-radar storage announcements this past week.

At the Mix 10 conference, during one of the sessions, the SQL Azure team announced that existing SQL Azure customers will be given access to the SQL Azure 50 GB preview on a request basis. Microsoft isn’t yet sharing availability or pricing details for the 50 GB option, but a spokesperson said they’d share those details “in the coming months” as part of the next SQL Azure service update. (Thanks to OakLeaf Systems’ blogger Roger Jennings for the heads up on this one.)

On the cloud-hosted Exchange front, Microsoft also announced this week that it has increased the size of Exchange Online default mailboxes from 5 GB to 25 GB.

Thanks to Mary Jo for the link.

The WCF Data Services Team’s post of 3/19/2010 links to an explanation of Developing an OData consumer for the Windows Phone 7:

A couple of days back at Mix we announced the CTP release of the OData Client Library for Windows Phone 7 series.

Cool stuff undoubtedly. But how do you use it?

… Phani [RajuYN], a Data Services team member, shows you how on his blog.

Phani’s example uses the NetFlix OData service, but could equally well use an SQL Azure OData service.

David Robinson summarizes SQL Azure announcements at MIX in this 3/19/2010 post:

This was an incredible week here at MIX and I presented a session on Developing Web Applications with SQL Azure. During the session I tried to drive home the point that we value and act upon the feedback you provide to us. We also recognize that we need to be more transparent on what features we are working on and when they will be available.

With that in mind, I was happy to announce the following features / enhancements:

Support for MARS

In SU2 (April) we are adding support for Multiple Active Result Sets. This is a great feature available in SQL Server that allows you to execute multiple batches in a single connection.

50GB Databases

We heard the feedback and will be offering a new 50GB size option in SU3 (June). If you would like to become an early adopter of this new size option before SU3 is generally available, send an email to EngageSA@microsoft.com and it will auto-reply with instructions to fill out a survey. Fill the survey out to nominate your application that requires greater than 10GB of storage. More information can be found at Cihan’s blog.

Support for Spatial Data

One of the biggest requests we received was to support spatial data in SQL Azure and that feature will be available for you in SU3 (June). Within this feature is support for the Geography and Geometry types as well as query support in T-SQL. This is a significant feature and now opens the Windows Azure Platform to support spatial and location aware applications.

SQL Azure Labs

We are launching a new site called SQL Azure Labs. SQL Azure Labs provides a place where you can access incubations and early preview bits for products and enhancements to SQL Azure. The goal is to gather feedback to ensure we are providing the features you want to see in the product. All technologies on this site are for testing and are not ready for production use. Some of these features might not even make it into production – it’s all based upon your feedback. Also please note, since these features are actively being worked on, you should not use them against any production SQL Azure databases.

The first preview on the site is the OData Service for SQL Azure. This enables you to access your SQL Azure Databases as an OData feed by checking a checkbox. It also provides you the ability to secure this feed using the Access Control Services that are provided by Windows Azure Platform AppFabric. You also have the ability to access the feed via Anonymous access should you wish to do so. More details on this can be found at the Data Services Team blog.

Keep those great ideas coming and submit them at http://www.mygreatsqlazureidea.com

Steven Forte’s An easy way to set up an OData feed from your SQL Azure database post of 3/19/2010 begins:

Ever since the “new” SQL Azure went to beta, I have craved an automated way to set up an OData (Astoria) Service from my SQL Azure database. My perfect world would have been to have a checkbox next to each table in my database in the developer portal asking to “Restify” this table as a service. It always seemed kind of silly to have to build a web project, create an Entity Framework model of my SQL Azure database, build a WCF Data Services (OData) service on top of that, and then deploy it to a web host or Windows Azure. (This service seems overkill for Windows Azure.) In addition to all of that extra work, in theory it would not be the most efficient solution since I am introducing a new server to the mix.

At Mix this week and also on the OData team blog, there is an announcement as to how to do this very easily. You can go to the SQL Azure labs page and then click on the “OData Service for SQL Azure” tab and enter in your SQL Azure credentials and assign your security and you will be able to access your OData service via this method: https://odata.sqlazurelabs.com/OData.svc/v0.1/<serverName>/<databaseName> …

I went in and gave it a try. In about 15 seconds I had a working OData feed, no need to build a new web site, build an EDM, build an OData svc, and deploy, it just made it for me automatically. Saved me a lot of time and the hassle (and cost) of deploying a new web site somewhere. Also, since this is all Azure, I would argue that it is more efficient to run this from Microsoft’s servers than mine: fewer hops to the SQL Azure database. (At least that is my theory.) …

To really give this a test drive, I opened up Excel 2010 and used SQL Server PowerPivot. I chose to import from “Data Feeds” and entered in the address for my service. I then imported the Customers, Orders, and Order Details tables and built a simple Pivot Table.

This is a great new feature!

If you are doing any work with Data Services and SQL Azure today, you need to investigate this new feature. Enjoy!
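As a rough illustration of the address pattern Steven quotes, the following Python sketch assembles a feed URL from a server name and database name and optionally appends OData system query options. The helper name, the sample server and database names, and the use of a Customers entity set are assumptions for illustration. `$top` and `$filter` are standard OData query options, but the post does not say which options the labs preview supported.

```python
from urllib.parse import quote, urlencode

# Base address as quoted in the post (a 2010 labs preview endpoint).
BASE = "https://odata.sqlazurelabs.com/OData.svc/v0.1"


def odata_feed_url(server, database, entity_set=None, **options):
    """Build <BASE>/<serverName>/<databaseName>[/<entitySet>][?$option=value].

    Keyword arguments are turned into OData system query options by
    prefixing "$", so top=5 becomes "$top=5".
    """
    parts = [BASE, quote(server, safe=""), quote(database, safe="")]
    if entity_set:
        parts.append(quote(entity_set, safe=""))
    url = "/".join(parts)
    if options:
        query = urlencode(
            {"$" + name: value for name, value in options.items()},
            quote_via=quote,  # encode spaces as %20 rather than "+"
            safe="$",         # keep the "$" prefix readable
        )
        url = url + "?" + query
    return url


if __name__ == "__main__":
    # Hypothetical server and database names.
    print(odata_feed_url("myserver", "Northwind"))
    print(odata_feed_url("myserver", "Northwind", "Customers", top=5))
```

A URL built this way is the kind of address that could be pasted into the PowerPivot “Data Feeds” dialog described above, assuming the feed allows anonymous access or the client supplies the required credentials.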

Stephen O’Grady analyzes The Problem with Big Data in this 3/18/2010 post:

He who has the most data wins. Right?

Google certainly believes this. But how many businesses, really, are like Google?

Not too many. Or fewer than would constitute a healthy and profitable market segment, according to what I’m hearing from more and more of the Big Data practitioners.

Part of the problem, clearly, is definitional. What is Big to you might not be Big to me, and vice versa. Worse, the metrics used within the analytics space vary widely. Technologists are used to measuring data in storage: Facebook generates 24-25 terabytes of new data per day, etc. Business Intelligence practitioners, on the other hand, are more likely to talk in the number of rows. Or if they’re very geeky, the number of variables, time series and such.

Big doesn’t always mean big, in other words. But big is increasingly bad, from what we hear. At least from a marketing perspective. …

Stephen continues his analysis, which is peripherally related to the current NoSQL kerfuffle.


AppFabric: Access Control and Service Bus

Bruce Kyle’s Windows Azure AppFabric Goes Commercial April 9 post of 3/19/2010 provides a summary of the Azure AppFabric’s features and billing practices:

Windows Azure platform AppFabric will be commercially available on a paid and fully SLA-supported basis on April 9.

You can find our pricing for AppFabric here. You can also go to the pricing section of our FAQs for additional information as well as our pricing blog post.

For more information, see Announcing upcoming commercial availability of Windows Azure platform AppFabric.

About Windows Azure AppFabric

The Windows Azure platform AppFabric provides secure connectivity as a service to help developers bridge cloud, on-premises, and hosted deployments. You can use AppFabric Service Bus and AppFabric Access Control to build distributed and federated applications as well as services that work across network and organizational boundaries.

Service Bus

Service Bus helps to provide secure connectivity between loosely-coupled services and applications, enabling them to navigate firewalls or network boundaries and to use a variety of communication patterns.

You can use Service Bus to connect Windows Azure applications and SQL Azure databases with existing applications and databases. It is often used to bridge on and off-premises applications or to create composite applications.

Service Bus lets you expose apps and services through firewalls, NAT gateways, and other problematic network boundaries. So you could connect to an application behind a firewall or one where your customer does not even expose the application as an endpoint. You can use Service Bus to lower barriers to building composite applications by exposing endpoints easily, supporting multiple connection options and publish and subscribe for multicasting.

Service Bus provides a lightweight, developer-friendly programming model that supports standard protocols and extends similar standard bindings for Windows Communication Foundation (WCF) programmers.

It blocks malicious traffic and shields your services from intrusions and denial-of-service attacks.

See Windows Azure platform AppFabric on MSDN.

Access Control

Access Control helps you build federated authorization into your applications and services, without the complicated programming that is normally required to secure applications that extend beyond organizational boundaries. With its support for a simple declarative model of rules and claims, Access Control rules can easily and flexibly be configured to cover a variety of security needs and different identity-management infrastructures.

You can create user accounts that federate a customer's existing identity management system that uses Active Directory service, other directory systems, or any standards-based infrastructure. With Access Control, you exercise complete, customizable control over the level of access that each user and group has within your application. It applies the same level of security and control to Service Bus connections.

Identity is federated using access control through rule-based authorization that enables your applications to respond as if the user accounts were managed locally. As a developer you use a lightweight developer-friendly programming model based on the Microsoft .NET Framework and Windows Communication Foundation to build the access rules based on your domain knowledge. The flexible standards-based service supports multiple credentials and relying parties.

Bruce continues with links to Resources: Whitepapers, SDK & Toolkits, PDC09 Videos and the AppFabric Labs.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Eugenio Pace’s Windows Azure Guidance – A (simplistic) economic analysis of a-Expense migration post of 3/19/2010 analyzes Microsoft’s billings for Azure in this guidance example, including the first mention I’ve seen of $500/month for a 50 GB database:

A big motivation for considering hosting on Windows Azure is cost. Each month, Microsoft will send Adatum a bill for the Windows Azure resources used. This is a very fast feedback loop on how they are using the infrastructure. Did I say that money is a great motivator yet? (another favorite phrase :-) )

Small digression: I had a great conversation with my colleague Danny Cohen in Tel Aviv a few weeks ago. We talked (among a million other topics :-) ) about Windows Azure pricing and its influence in design. He told me a story about “feedback loops” and their influence in behavior that I liked very much. I’ve been using it pretty frequently. He wrote a very good summary here.

So, what are the things that Adatum would be billed for in the a-Expense application?

At this stage of a-Expense migration, there are 5 things that will generate billing events:

- In/Out bandwidth. This is web page traffic generated between users’ browsers and the a-Expense web site. ($0.10-$0.15/GB)

- Windows Azure Storage. In this case it will just be the Profile data. Later it will also be used for storing the receipt scans. ($0.15/GB)

- Transactions. Each interaction with the storage system is also billed. ($0.01/10K transactions)

- Compute. This is the time a-Expense web site nodes are up. (Small size role is $0.12/hour)

- SQL Storage. SQL Azure comes in 3 sizes: 1GB, 10GB and 50GB. ($10, $100, $500/month respectively).
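The five billing items above lend themselves to a quick back-of-the-envelope calculation. The Python sketch below uses only the rates listed, reading the bandwidth range as $0.10/GB inbound and $0.15/GB outbound (an assumption about how the range splits). The usage figures in the example are invented for illustration and are not Adatum's numbers from Eugenio's analysis.

```python
from decimal import Decimal

# Rates as listed in the post (March 2010).
RATES = {
    "bandwidth_in_per_gb": Decimal("0.10"),   # assumed lower end of the range
    "bandwidth_out_per_gb": Decimal("0.15"),  # assumed upper end of the range
    "storage_per_gb": Decimal("0.15"),
    "per_10k_transactions": Decimal("0.01"),
    "small_instance_per_hour": Decimal("0.12"),
}
SQL_AZURE_MONTHLY = {1: Decimal("10"), 10: Decimal("100"), 50: Decimal("500")}


def monthly_bill(gb_in, gb_out, storage_gb, transactions,
                 instances, hours, sql_size_gb):
    """Return a dict of line items plus a 'total' for one month of usage."""
    items = {
        "bandwidth": (Decimal(gb_in) * RATES["bandwidth_in_per_gb"]
                      + Decimal(gb_out) * RATES["bandwidth_out_per_gb"]),
        "storage": Decimal(storage_gb) * RATES["storage_per_gb"],
        "transactions": (Decimal(transactions) / Decimal(10_000)
                         * RATES["per_10k_transactions"]),
        # Compute is billed per instance for every hour it is deployed.
        "compute": (Decimal(instances) * Decimal(hours)
                    * RATES["small_instance_per_hour"]),
        "sql": SQL_AZURE_MONTHLY[sql_size_gb],
    }
    items["total"] = sum(items.values(), Decimal("0"))
    return items


if __name__ == "__main__":
    # Hypothetical usage: two small web role instances running a 30-day month.
    bill = monthly_bill(gb_in=10, gb_out=40, storage_gb=5,
                        transactions=1_000_000, instances=2,
                        hours=720, sql_size_gb=10)
    for name, amount in bill.items():
        print(f"{name:>12}: ${amount:.2f}")
```

With these made-up inputs, compute ($172.80) and the 10GB database ($100) dominate the $281.55 total, while bandwidth, storage and transactions together come to under $9.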

Eugenio continues with a detailed cost analysis.

Dimitri Sotkinov describes how his company’s QuestOnDemand service uses “Windows Azure as its underlying technology” in his IT Management as a Service: Discussion and Demo post of 3/19/2010:

Microsoft’s TechNet EDGE posted a video with quite detailed discussion of Systems Management as a Service concept, example of such a service (Quest OnDemand), how it uses Windows Azure as the underlying technology, the security model behind it, and so on. Obviously a demo is in there as well.

Check out the video here.

Steve Marx plugs the Windows Azure Firestarter Event: April 6th, 2010 in this 3/18/2010 post:

The Windows Azure Firestarter event here in Redmond, WA is coming up in just a few short weeks. You can attend in person or watch the live webcast by registering at http://www.msdnevents.com/firestarter/.

Will you be there? I’ll be presenting a platform overview at the beginning of the day. If you’re going to attend (virtually or in person), drop me a tweet and let me know what you’d like to see covered.

The Windows Azure Team’s Real World Windows Azure: Interview with Sarim Khan, Chief Executive Officer and Co-Founder of sharpcloud post of 3/18/2010 provides additional background to the sharpcloud Case Study reported in Windows Azure and Cloud Computing Posts for 3/18/2010+:

As part of the Real World Windows Azure series, we talked to Sarim Khan, CEO and Co-Founder of sharpcloud, about using the Windows Azure platform to run its visual business road-mapping solution. Here's what he had to say:

MSDN: What service does sharpcloud provide?

Khan: sharpcloud helps businesses better communicate their strategic plans. Our service applies highly visual and commonly used social-networking tools to the crucial task of developing long-term business road maps and strategy.

MSDN: What was the biggest challenge sharpcloud faced prior to implementing Windows Azure?

Khan: To support the global companies that we see as our prime market, we knew that we needed a cloud computing solution that would be easy to scale and deploy. We wanted to devote all of our resources to developing a compelling service, not managing server infrastructure. We initially started using Amazon Web Services, but that still required us to focus time on maintaining the Amazon cloud-based servers.

MSDN: Can you describe the solution you built with Windows Azure to help maximize your resources?

Khan: With the sharpcloud service, executives and other users can work within a Web browser to create a framework and real-time dialogue on their road maps. They can define attributes and properties, such as benefit, cost, and risk. They can also add "events" to the road map, such as technologies and trends. Once the road map is populated with events, users can explore it through a three-dimensional view, add information, and explore relationships between events. Because we were already creating an application based on the Microsoft .NET Framework and the Microsoft Silverlight browser plug-in, we only had to rewrite a modest amount of code to ensure that our service and storage mechanism would communicate properly with the Windows Azure application programming interfaces.

Figure 1. The sharpcloud application makes it possible for a virtual team to view events and the relationships among them in a three-dimensional road map and to post comments for other team members to review in real time.

tbtechnet chimes in on the sharpcloud project in his Sharpcloud Leverages Front Runner and Bizspark post of 3/18/2010:

http://www.microsoft.com/casestudies/Case_Study_Detail.aspx?CaseStudyID=4000006685

It was great working with these folk:

sharpcloud took advantage of the Front Runner for Windows Azure Platform. Front Runner is an early adopter program for Microsoft solution partners. It provides technical resources, such as application support for Windows Azure from Microsoft development experts by phone and e-mail, as well as access to Windows Azure technical resources in one, central place. Once an application is certified as compatible with Windows Azure, the program provides additional marketing benefits. These benefits include a promotional toolkit, including a stamp and news release to use on marketing materials, a discount on Ready-to-Go campaign costs, and visibility on a Windows Azure Web site.

sharpcloud also joined the Microsoft BizSpark program for software startup companies. BizSpark unites startups with the resources—including software, support, and visibility—that they need to succeed. The program includes access to Microsoft development tools, platform technologies, and production and hosting licenses.

“The key for us has been the software and support available without licensing cost,” says Khan. “As a small startup, we have to maximize our investment, and these programs have certainly made our development budget go much further than it would have gone otherwise. We’ve also had good support from Microsoft with technologies including Windows 7 and Windows Server 2008 R2. And it’s stimulating to get a window into what other startups are doing with these technologies.”

Thuzi’s Facebook Azure Toolkit v0.9 Beta on CodePlex as of 3/12/2010 carries the following introduction:

Welcome to the Facebook Azure Toolkit. This toolkit was built by Thuzi in collaboration with Microsoft to give the community a good starter kit for getting Facebook apps up and running in Windows Azure. Facebook apps hosted in Azure provide a flexible and scalable cloud computing platform for the smallest and largest of Facebook applications. Whether you have millions of users or just a few thousand, the Facebook Azure Toolkit helps you to build your app correctly so that if your app is virally successful, you won't have to architect it again. You will just need to increase the number of instances you are using, then you are done scaling. :)

DISCLAIMER: This toolkit does not demonstrate how to create a Facebook application. It is assumed that you already know how to do that or are willing to learn how to do that. There are plenty of links below on how to do this. Here is one link, also listed below, that helps explain how to set up an app, and there are plenty of samples in the Facebook Developers Toolkit.

NOTE: You must use Visual Studio 2010 RC to open the project.

What does this Tool[k]it include?

- Facebook Developers Toolkit Link

- Ninject 2.0 for Dependency Injection Link

- Asp.Net MVC 2 Link

- Windows Azure Software Development Kit (February 2010) Link

- AutoMapper Link

- Azure Toolkit - Simplified library for accessing Message Queues, Table Storage and Sql Server

- Automated build scripts for one-click deployment from TFS 2010 to Azure …

Thuzi continues with How do I get started?, Getting Setup and Running Locally, and Setting up Deployment in TFS 2010 topics.


Windows Azure Infrastructure

David Linthicum asserts “Once it got past the vendor hype, the Cloud Connect event revealed the three key issues that need to be addressed” in The cloud's three key issues come into focus post of 3/19/2010 for InfoWorld’s Cloud Computing blog:

I'm writing this blog on the way back from Cloud Connect held this week in Santa Clara. It was a good show, all in all, and there was a who's-who in the world of cloud computing. I've really never seen anything like the hype around cloud computing, possibly because you can pretty much "cloudwash" anything, from disk storage to social networking. Thus, traditional software vendors are scrambling to move to the cloud, at least from a messaging perspective, to remain relevant. If I was going to name a theme of the conference, it would be "Ready or not, we're in the cloud." …

But beyond the vendor hype, it was clear at the conference that several issues are emerging, even if the solutions remain unclear:

- Common definitions …

- Standards …

- Security …

David expands on the three issues in his post.

Phil Wainwright asks Is SaaS the same as cloud? in this 3/19/2010 post to the Enterprise Irregulars blog:

From the customer’s perspective, it’s all the same. If it’s provided over the Internet on a pay-for-usage basis, it’s a cloud service. Within the industry, we argue about definitions more than is good for us. Customers look in from the outside and see a much simpler array of choices.

Why is this important? It matters to how we market and support cloud services (of whatever ilk). Yesterday EuroCloud UK (disclosure: of which I’m chair) had a member meeting, hosted at SAP UK headquarters, that covered various aspects of the transition to SaaS for ISVs. From the title, you’d imagine it would have little content of relevance to raw cloud providers at the infrastructure-as-a-service layer. (One of our challenges in the early days of EuroCloud, whose founders are more from the SaaS side of things, is to make sure we bring the infrastructure players on board with us). But in fact, much of the discussion covered topics of equal interest at any level of the as-a-service stack: How to work with partners? How to compensate sales teams? What sort of contract to offer customers? How to reconcile paying for resources on a pay-per-use basis with a per-seat licence fee? What instrumentation and reporting of service levels should the provider’s infrastructure include?

And then came the customer presentation, by Symbian Foundation’s head of IT, Ian McDonald. He was there as a customer of SAP’s Business ByDesign SaaS offering, whose team were hosting the meeting. But it soon became clear that his organization’s voracious consumption of cloud services runs the gamut from high-level applications like ByDesign and Google Apps through to Amazon Web Services, Jungle Disk storage and file sharing (stored on either Amazon or Rackspace), even Skype. Symbian’s developers still build their own website infrastructure using open-source platforms but that too is hosted in the cloud. The imperative for Symbian, as a not-for-profit consortium, is to stay flexible and minimize costs. An important part of that is having the capacity to scale rapidly if needed but without having to pay up-front for that capacity. …

Phil continues his argument with takeaways from other EuroCloud UK sessions.

Lori MacVittie claims “Talking about standards apparently brings out some very strong feelings in a whole lot of people” in her Now is the conference of our discontent … post of 3/19/2010:

From “it’s too early” to “we need standards now” to “meh, standards will evolve where they are necessary”, some of the discussions at CloudConnect this week were tinged with a bit of hostility toward, well, standards in general and the folks trying to define them. In some cases the hostility was directed toward the fact that we don’t have any standards yet.

[William Vambenepe has a post on the subject, having been one of the folks hostility was directed toward during one session.]

Lee Badger, Computer Scientist at NIST, during a panel on “The Standards Real Users Need Now” offered a stark reminder that standards take time. He pointed out the 32 months it took to define and agree on consensus regarding the ASCII standard and the more than ten years it took to complete POSIX. Then Lee reminded us that “cloud” is more like POSIX than ASCII. Do we have ten years? Ten years ago we couldn’t imagine that we’d be here with Web 2.0 and Cloud Computing, so should we expect that in ten years we’ll still be worried about cloud computing?

Probably not.

The problem isn’t that people don’t agree standards are a necessary thing, the problem appears to be agreeing on what needs to be standardized and when and, in some cases, who should have input into those standards. There are at least three different constituents interested in standards, and they are all interested in standards for different reasons which of course leads to different views on what should be standardized.

Lori continues with “WHAT are we STANDARDIZING?” and “CLOUDS cannot be BLACK BOXES” topics.

Neil MacKenzie’s Service Runtime in Windows Azure post of 3/18/2010 analyzes Windows Azure’s architecture:

Roles and Instances

Windows Azure implements a Platform as a Service model through the concept of roles. There are two types of role: a web role deployed with IIS; and a worker role which is similar to a windows service. Azure implements horizontal scaling of a service through the deployment of multiple instances of roles. Each instance of a role is allocated exclusive use of a VM selected from one of several sizes from a small instance with 1 core to an extra-large instance with 8 cores. Memory and local disk space also increase in going from a small instance to an extra-large instance.

All inbound network traffic to a role passes through a stateless load balancer which uses an unspecified algorithm to distribute inbound calls to the role among instances of the role. Individual instances do not have public IP addresses and are not directly addressable from the Internet. Instances are able to connect to other instances in the service using TCP and HTTP.

Azure provides two deployment slots: staging for testing in a live environment; and production for the production service. There is no real difference between the two slots.

It is important to remember that Azure charges for every deployed instance of every role in both production and staging slots regardless of the status of the instance. This means that it is necessary to delete an instance to avoid being charged for it.

Fault Domains and Upgrade Domains

There are two ways to upgrade an Azure service: in-place upgrade and Virtual IP (VIP) swap. An in-place upgrade replaces the contents of either the production or staging slot with a new Azure application package and configuration file. A VIP swap literally swaps the virtual IP addresses associated with roles in the production and staging slots. Note that it is not possible to do an in-place upgrade where the new application package has a modified Service Definition file. Instead, any existing service in one of the slots must be deleted before the new version is uploaded. A VIP swap does support modifications to the Service Definition file.

The Windows Azure SLA comes into force only when a service uses at least two instances per role. Azure uses fault domains and upgrade domains to facilitate adherence to the SLA.

When Azure instances are deployed, the Azure fabric spreads them among different fault domains which means they are deployed so that a single hardware failure does not bring down all the instances. For example, multiple instances from one role are not deployed to the same physical server. The Azure fabric completely controls the allocation of instances to fault domains but an Azure service can view the fault domain for each of its instances through the RoleInstance.FaultDomain property.

Similarly, the Azure fabric spreads deployed instances among several upgrade domains. The Azure fabric implements an in-place upgrade by bringing down all the services in a single upgrade domain, upgrading them, and then restarting them before moving on to the next upgrade domain. The number of upgrade domains is configurable through the upgradeDomainCount attribute of the ServiceDefinition root element in the Service Definition file. The default number of upgrade domains is 5, but this number should be scaled with the number of instances. The Azure fabric completely controls the allocation of instances to upgrade domains, modulo the number of upgrade domains, but an Azure service can view the upgrade domain for each of its instances through the RoleInstance.UpdateDomain property. (Shame about the use of upgrade in one place and update in another.) …
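The upgrade-domain walk described above can be pictured with a small Python sketch. It is an illustration only, not the Azure fabric's code or any part of the Azure API: the function names are hypothetical, and the round-robin rule (instance index modulo the number of upgrade domains) is an assumption drawn from the phrase "modulo the number of upgrade domains". The default of 5 comes from the text.

```python
from collections import defaultdict


def assign_upgrade_domains(instance_count, upgrade_domain_count=5):
    """Map each instance index to an upgrade domain.

    Assumption: round-robin placement, i.e. index modulo the domain count.
    The real fabric controls this allocation and may behave differently.
    """
    return {i: i % upgrade_domain_count for i in range(instance_count)}


def rolling_upgrade_order(assignment):
    """Return the batches of instances taken down together, one per domain."""
    domains = defaultdict(list)
    for instance, domain in assignment.items():
        domains[domain].append(instance)
    return [domains[d] for d in sorted(domains)]


def min_available_fraction(assignment):
    """Smallest fraction of instances still running while one domain is down."""
    total = len(assignment)
    largest_batch = max(len(batch) for batch in rolling_upgrade_order(assignment))
    return (total - largest_batch) / total


if __name__ == "__main__":
    assignment = assign_upgrade_domains(instance_count=7)
    for domain, batch in enumerate(rolling_upgrade_order(assignment)):
        print(f"upgrade domain {domain}: instances {batch}")
    print(f"worst-case availability: {min_available_fraction(assignment):.0%}")
```

Under these assumptions, a role with only two instances drops to half capacity while each upgrade domain is being upgraded, which may help explain why the number of upgrade domains is worth scaling with the number of instances.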

Neil continues with explanations of Service Definition and Service Configuration, RoleEntryPoint, Role, RoleEnvironment, RoleInstance, RoleInstanceEndpoint and LocalResource.

<Return to section navigation list>

Cloud Security and Governance

Joseph Trigliari’s Cloud computing and payment processing security: Not mutually exclusive after all? post of 3/19/2010 to Pivotal Payments: Canadian Merchant Industry News reports:

With cloud computing becoming a more and more popular and attractive IT model for organizations, one serious concern that has arisen is what the implications are for PCI compliance and overall payment processing security.

Many payment processing industry experts maintain that cloud computing and PCI compliance are mutually exclusive, at least now while there are no set guidelines or requirements governing cloud computing security.

However, PCI expert Walt Conway thinks PCI compliance may be possible in the cloud.

"When you look at the cloud, keep your security expectations realistic," he wrote in an article for StorefrontBacktalk.com. "Don't expect 100 percent security. You don't have 100 percent security anywhere, so don't expect it in the cloud. What you want is the same, hopefully very high, level of security you have now or maybe a little higher."

Some things that organisations moving into the cloud must consider, he writes, are the security of the cloud provider, the new scope of PCI compliance, and the notification and data availability procedures should any client sharing the organisation's cloud experience a breach or subpoena of their data.

The question of PCI compliance in the cloud is only going to become an even larger concern as cloud computing grows in popularity - a recent survey from Mimecast, for example, found that 70 percent of IT decision makers surveyed who already use cloud services plan to increase their cloud investments in the near future.

This is a much more optimistic view of PCI compliance with cloud computing than I’ve read previously.

<Return to section navigation list>

Cloud Computing Events

Sebastian added the first four slide decks for the San Francisco Cloud Computing Club’s 3/16/2010 meetup colocated with the Cloud Connect Conference in Santa Clara, CA:

- PaaS seen by Ezra: Ezra's presentation of PaaS at EngineYard

- Makara: Tobias' presentation of Makara at Cloud Connect

- Appirio: Appirio's presentation at Cloud Club (at Cloud Connect)

- Cloud club – Heroku: Oren's excellent presentation on the need for PaaS

Updated 3/20/2010 for Cloud club – Heroku.

Liz MacMillan reports Microsoft’s Bill Zack to Present at Cloud Expo East on 3/19/2010:

You are interested in cloud computing, but where do you start? How are vendors defining Cloud Computing? What do you need to know to figure out which applications make sense in the cloud? And is any of this real today?

In his session at the 5th International Cloud Expo, Bill Zack, an Architect Evangelist with Microsoft, will explore a set of five patterns that you can use for moving to the cloud, together with working samples on Windows Azure, Google AppEngine, and Amazon EC2. He will provide the tools and knowledge to help you more clearly understand moving your organization to the cloud. …

Bill Zack is an Architect Evangelist with Microsoft.

Roger Struckhoff claims “Microsoft Architect Evangelist Bill Zack Will Tell All at Cloud Expo” in his Patterns? In Cloud Computing? post of 3/19/2010:

"You are interested in cloud computing, but where do you start?"

This question was posed by Microsoft Architect Evangelist Bill Zack, who will present a session on the topic of Patterns in Cloud Computing at Cloud Expo.

People often see sheep or little doggies or Freudian/Rorschachian images in physical clouds, but fortunately, the patterns in Cloud Computing are less fanciful and more discrete. That said, there remains a lot of confusion about basic definitions within the nascent Cloud Computing industry, no doubt because Cloud is not a new technology, but rather, a new way of putting things together and delivering computing services. Zack asks, "How are vendors defining Cloud Computing? What do you need to know to figure out which applications make sense in the cloud? And is any of this real today?"

His answer to the last question would be "yes," as his session is "based on real-world customer engagements," he notes. Specifically, "the session explores a set of five patterns that you can use for moving to the cloud, together with working samples on Windows Azure, Google AppEngine, and Amazon EC2. Avoiding the general product pitches, this session provides the tools and knowledge to help you more clearly understand moving your organization to the cloud."

William Vambenepe’s “Freeing SaaS from Cloud”: slides and notes from Cloud Connect keynote post of 3/19/2010 begins:

I got invited to give a short keynote presentation during the Cloud Connect conference this week at the Santa Clara Convention Center (thanks Shlomo and Alistair). Here are the slides (as PPT and PDF). They are visual support for my bad jokes rather than a medium for the actual message. So here is an annotated version.

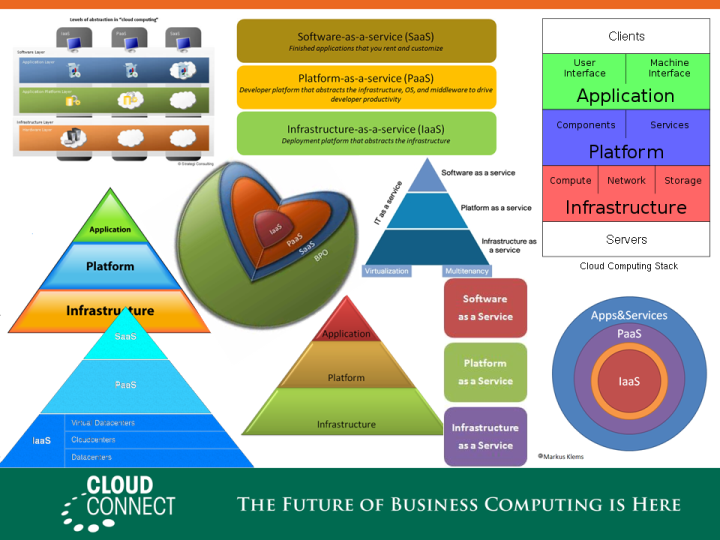

I used this first slide (a compilation of representations of the 3-layer Cloud stack) to poke some fun at this ubiquitous model of the Cloud architecture. Like all models, it’s neither true nor false. It’s just more or less useful to tackle a given task. While this 3-layer stack can be relevant in the context of discussing economic aspects of Cloud Computing (e.g. Opex vs. Capex in an on-demand world), it is useless and even misleading in the context of some more actionable topics for SaaS: chiefly, how you deliver such services, how you consume them and how you manage them.

In those contexts, you shouldn’t let yourself get too distracted by the “aaS” aspect of SaaS and focus on what it really is.

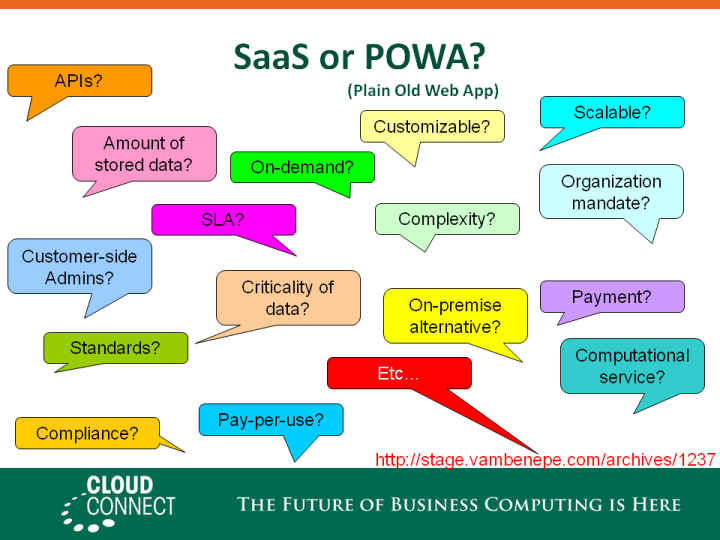

Which is… a web application (by which I include both HTML access for humans and programmatic access via APIs.). To illustrate this point, I summarized the content of this blog entry. No need to repeat it here. The bottom line is that any distinction between SaaS and POWA (Plain Old Web Applications) is at worst arbitrary and at best concerned with the business relationship between the provider and the consumer rather than technical aspects of the application. …

William, who’s an Oracle Corp. architect, continues with an interesting analogy involving a slide with a guillotine. I had the pleasure of sitting next to him at the San Francisco Cloud Computing Club meet-up on 3/16/2010 at the Cloud Connect Conference in Santa Clara, CA.

Update 3/20/2010: Sam Johnston asserts in a 3/20/2010 tweet:

Observation: @vambenepe's exclusion of SaaS from the #cloud stack also effectively excludes Google from #cloud: http://is.gd/aQqfM

William replies in this 3/20/2010 tweet:

Not excluding SaaS from #Cloud. Just pointing out that providing/consuming/managing SaaS is 90% app-centric (not Cloud-specific) & 10% Cloud

The Voices for Innovation blog’s DC-Area Microsoft Hosted Codeathon, April 9-11 post of 3/19/2010 announces:

If you or your company is in the Washington, DC, area -- or will be passing through on April 9-11 -- please consider coming to a Microsoft-hosted codeathon at the Microsoft offices in Chevy Chase, MD. Organized in conjunction with the League of Technical Voters, the codeathon will focus on making government documents more accessible and citable. You can learn more about the event and sign up for a project at http://dccodeathon.com.

The codeathon will especially benefit from the participation of developers with skills in the following areas:

1) ASP.NET, HTTP/REST, JavaScript, microformats

2) Windows Azure (PHP and other OSS technologies on Azure a plus)

3) SharePoint 2010/2007

4) Open source technologies, e.g., PHP, Python, R-on-R

In addition, if you would be interested in getting started on a project before the codeathon, email us at info@voicesforinnovation.org, and we'll put you in touch with the right person at Microsoft. [Emphasis added.]

Clay Ryder casts a jaundiced eye on cloud marketing projections and analyses at the Cloud Connect Conference in his Some Thoughts on the Cloud Connect Conference post of 3/18/2010 to the IT-Analysis blog:

I ventured out to the Cloud Connect conference and expo at the Santa Clara Convention Center this morning. Unlike most trips to industry events where I take part in the transportation cloud, usually of the rail nature, today I was firmly self-reliant with a set of four tires on the parking lot known as CA-237. Part of my reasoning was to get out of the office on a sunny day, but more important was my intrigue about this market segment known more or less as Cloud Computing. Readers know that I have a heavy dose of skepticism about the marketing fluff related to Clouds, or the often ill-defined opportunity that purports to be the next greatest thing in IT. With few exceptions, vendors and the industry as a whole have historically done a poor job of defining this market opportunity. Is Cloud a market segment? Is Cloud a delivery model? Is it both? So far, this morning's keynotes have only reinforced my skepticism, which is unfortunate. For all of its technical promise, the continued lack of industry definition clarity remains deeply troubling.

By mid-morning, we had heard from a Deutsche Bank Securities analyst, a market researcher, a couple of systems vendors, a startup pro, and some ancillary folks. The analyst talked about equipment, vague notions of markets, and then hardware sales he projected were related to cloud sales. While his sales projection of $20 billion is non-trivial, it utterly lacked any definition by which to differentiate these sales from plain old enterprise hardware sales. Just what is the uniquely Cloud stuff that accounts for this expenditure? From this presenter, it seemed that Cloud meant nothing more than enterprise IT with virtualization of servers and network switches. This conveniently left out perhaps the fastest growing segment of IT, i.e. storage. This Cloud discussion was underwhelming at best; an example of a 2002 mindset fixated on server virtualization with lip service to linking those servers together. This doesn't sound like a game changer to me and begs the question of why VCs would plow money into such an ill-defined opportunity.

Clay continues giving equally low marks to other presenters. He concludes:

Ultimately, the right answer may be to stop looking for the Cloud market altogether. Perhaps Cloud is really just an intelligent delivery model that addresses the state of art in IT. Maybe Cloud is a process, not a product. As such, things would make a whole lot more sense than the confusing overlap of jargon and techno-obfuscation that so many undertake in the name of the Cloud. This would be a welcome improvement not only in nomenclature, but perhaps in market clarity, which would then help drive market adoption. Money tends to follow well defined paths to ROI. Why should Clouds be any different?

<Return to section navigation list>

Other Cloud Computing Platforms and Services

John Treadway asserts VMware Should Run a Cloud or Stop Charging for the Hypervisor (or both) in this 3/19/2010 post to the CloudBzz blog. It begins:

I had a number of conversations this past week at CloudConnect in Santa Clara regarding the relative offerings of Microsoft and VMware in the cloud market. Microsoft is going the vertically integrated route by offering their own Windows Azure cloud with a variety of interesting and innovative features. VMware, in contrast, is focused on building out their vCloud network of service providers that would use VMware virtualization in their clouds. VMware wants to get by with a little help from their friends.

The problem is that few service providers are really VMware’s friend in the long run. Sure, some enterprise-oriented providers will provide VMware capabilities to their customers, but it is highly likely that they will quickly offer support for other hypervisors (Xen, Hyper-V, KVM). The primary reason for this is cost. VMware charges too much for the hypervisor, making it hard to be price-competitive vs. non-VMware clouds. You might expect to see service providers move to a tiered pricing model where the incremental cost for VMware might be passed onto the end-customers, which will incentivize migration to the cheaper solutions. If they want to continue this channel approach but stop enterprises from migrating their apps to Xen, perhaps VMware needs to give away the hypervisor – or at least drop the price to a level that it is easy to absorb and still maintain profitability ($1/month per VM – billed by the hour at $0.0014 per hour plus some modest annual support fee would be ideal).

Think about it… If every enterprise-oriented cloud provider lost their incentive to go to Xen, VMware would win. Being the default hypervisor for all of these clouds would provide even more incentive for enterprise customers to continue to adopt VMware for internal deployments (which is where VMware makes all of their money). Further, if they offered something truly differentiated (no, not vMotion or DRS), then they could charge a premium. …
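The $1/month and $0.0014 per hour figures quoted above can be checked against each other with a few lines of Python. This is a minimal sketch that assumes an average month of 730 hours (365 × 24 / 12); the helper names and the 100-VM example are illustrative and do not come from the post.

```python
HOURS_PER_YEAR = 365 * 24
HOURS_PER_MONTH = HOURS_PER_YEAR / 12  # 730 hours in an average month (assumption)


def hourly_rate(monthly_price):
    """Convert a flat monthly price per VM into an equivalent hourly rate."""
    return monthly_price / HOURS_PER_MONTH


def monthly_cost(rate_per_hour, vm_count=1):
    """Cost of running vm_count VMs around the clock for an average month."""
    return rate_per_hour * HOURS_PER_MONTH * vm_count


if __name__ == "__main__":
    rate = hourly_rate(1.00)
    print(f"hourly rate for $1/month: ${rate:.4f}")
    print(f"100 VMs at $0.0014/hour: ${monthly_cost(0.0014, 100):.2f} per month")
```

The rounded hourly rate is slightly above an exact $1/month, so a VM that runs all month would be billed a little over a dollar, before the annual support fee the post mentions.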

CloudTweaks explains Why Open Source and Operations Matter in Cloud Computing on 3/19/2010:

Earlier this week, IBM announced a cloud computing program offering development and test services for companies and governments. That doesn’t sound like much, yet on closer inspection it’s a flagstone in the march toward a comprehensive cloud offering at Big Blue. It also demonstrates how operational efficiency is a competitive weapon in our service economy. Let me explain.

As the IT industry shifts from a product-based economy to a service-based economy, operational competency is a competitive weapon. Contrast this with the past where companies could rely on closed APIs, vendor lock-in or the reliance on vast resources to build business and keep out the competition. Today, anyone with a good idea can connect to a cloud provider and build a software business overnight, without massive investment dollars. Instead of forcing people to pay for a CD with your software on it, you deliver a service. In that type of environment where service is king, operational efficiency is crucial. It’s the company with the best execution and operational excellence that prospers. Yes, it’s leveled the playing field, yet ironically the cloud providers themselves are the best examples of operational excellence being the competitive advantage of the 21st century. …

Alex Williams’ The Oracle Effect: Sun's Best and Brightest Move On to New Places post of 3/18/2010 begins:

What is the effect of the Oracle acquisition of Sun Microsystems on cloud computing? Well, there have been quite a few if you look at where Sun's best and brightest have moved on to in the past few months.

Tim Bray is the latest Sun star to move on. You may know Bray as the co-founder of XML. Eve Maler is also a co-founder of XML. She had worked with Bray for many years until her departure from Sun last Spring to join PayPal. Eve, as many of you may know, is one of the leaders in developing identity standards and initiatives.

Perhaps the clearest example is evident at Rackspace where five developers from Sun were recently hired to work on Drizzle, a heavy duty system for high scaling applications in the cloud:

“When it's ready, Drizzle will be a modular system that's aware of the infrastructure around it. It does, and will run well in hardware rich multi-core environments with design focused on maximum concurrency and performance. No attempt will be made to support 32-bit systems, obscure data types, language encodings or collations. The full power of C++ will be leveraged, and the system internals will be simple and easy to maintain. The system and its protocol are designed to be both scalable and high performance.” …