Windows Azure and Cloud Computing Posts for 3/1/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Update 3/4/2010: Added link to Jan Algermissens’ "Classification of HTTP-based APIs" study to the Azure Blob, Table and Queue Services section at the suggestion of Mike Amundsen (see his comment to this post.)

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the January 4, 2010 commercial release in February 2010.

Azure Blob, Table and Queue Services

Lori MacVittie claims REST API Developers Between a Rock and a Hard Place in this 3/2/2010 post:

A recent blog on EBPML.ORG entitled “REST 2010 - Where are We?” very aggressively stated: “REST is just a "NO WS-*" movement.” The arguments presented are definitely interesting but the most compelling point made is in the way that REST APIs are constructed, namely that unlike the “ideal” REST API described where HTTP methods are used to define action (verb) and the path the resource (noun), practical implementations of REST are using a strange combination of both actions (verbs) and resources (nouns) in URIs.

What this does is simulate very closely SOA services, in which the endpoint is a service (resource) upon which an action (method) is invoked. In the case of SOAP the action is declared either in the HTTP header (old skool SOAPaction) or as part of the SOAP payload. So the argument that most REST APIs, in practice, are really little more than a NO WS-* API is fairly accurate.

In “REST != HTTP” Ted Neward very convincingly argues that the problem isn’t that most “REST” APIs aren’t REST (based on the definition and constraints set down by Fielding in the first place) but that folks are calling them REST despite the fact that they aren’t. That’s not necessarily the developer’s fault and, in the cases pointed out, it’s quite possible that the decision to call the API a RESTful API was made by someone other than the developer.

The fact is that there are artificial environmental constraints that force RESTful developers into diverging from the “one true REST path” that leads to a more services-based and less structured implementation than perhaps many would like. …
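To make the verb-versus-noun contrast in the excerpt concrete, here is a minimal Python sketch. The `orders` routes and the `ACTION_PREFIXES` list are hypothetical illustrations, not taken from any API discussed in these posts, and the check is a deliberately crude heuristic.

```python
# Hypothetical routes for an imaginary "orders" API (not from any real service).
# Resource style: the HTTP method is the verb, the path names the resource.
RESOURCE_STYLE = [
    ("GET", "/orders/42"),
    ("PUT", "/orders/42"),
    ("DELETE", "/orders/42"),
]

# Action style: the verb leaks into the URI, much like a SOAP operation name.
ACTION_STYLE = [
    ("GET", "/getOrder?id=42"),
    ("POST", "/updateOrder?id=42"),
    ("POST", "/deleteOrder?id=42"),
]

# Assumed list of verb prefixes; a crude heuristic for illustration only.
ACTION_PREFIXES = ("get", "create", "update", "delete", "add", "remove")


def has_verb_in_path(path: str) -> bool:
    """Return True if any path segment starts with a known action verb."""
    segments = path.split("?", 1)[0].strip("/").split("/")
    return any(seg.lower().startswith(ACTION_PREFIXES) for seg in segments)


def verbs_in_uris(routes):
    """Return the routes whose URI carries an action verb."""
    return [(method, path) for method, path in routes if has_verb_in_path(path)]


if __name__ == "__main__":
    print("resource style flagged:", verbs_in_uris(RESOURCE_STYLE))
    print("action style flagged:", verbs_in_uris(ACTION_STYLE))
```

In the resource style nothing is flagged, because the action lives entirely in the HTTP method; in the action style every route is flagged, which is the pattern the excerpt compares to invoking a method on a SOA service endpoint.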

Lori continues with an analysis of “problems developers face” when attempting to adhere to strict REST standards and concludes:

… The solution is, ultimately, just to not call such APIs REST. Nor RESTful, nor even REST-like. In the same way that we shouldn’t be calling every web-based application “cloud computing” we shouldn’t be calling APIs “REST” when they really aren’t. The argument that what we’re calling today “REST APIs” are really “NO WS-* APIs” is fairly accurate. But to call them simply “web services” would certainly confuse them with their SOAPy counterparts and cause the other side of the fence to rebel just as fiercely.

So until someone comes up with a better name that sticks, we may have to fall back on the tried and true “formerly known as” formula. I propose the “Formerly Unintentionally Counterfactually Known as REST” API.

It still has REST in the name, so marketing should be happy with that, right?

Update 3/4/2010: Jan Algermissens’ Classification of HTTP-based APIs study offers a pair of tables that document REST interface constraints:

Classifies HTTP-based APIs. “The classification achieves an explicit differentiation between the various kinds of uses of HTTP and provides a foundation to analyse and describe the system properties induced.”

Describes the “effect of removing a certain interface constraint the following table provides an overview of the impact upon selected system properties. Removing constraints can reduce start-up cost which in some scenarios might be of more concern than long-term maintenance- or evolution cost. The right hand side columns of the table place the impact of removing constraints into an overall context of start up-, maintenance- and evolution cost.”

Thanks to Mike Amundsen for the pointer (see comments to this post).

William Vambenepe asserts Two versions of a protocol is one too many in this 3/1/2010 post:

There is always a temptation, when facing a hard design decision in the process of creating an interface or a protocol, to produce two (or more) versions. It’s sometimes a good idea, as a way to explore where each one takes you so you can make a more informed choice. But we know how this invariably ends up. Documents get published that arguably should not. It’s even harder in a standard working group, where someone was asked (or at least encouraged) by the group to create each of the alternative specifications. Canning one is at best socially awkward (despite the appearances, not everyone in standards is a psychopath or a sadist) and often politically impossible.

And yet, it has to be done. Compare the alternatives, then pick one and commit. Don’t confuse being accommodating with being weak.

The typical example these days is of course SOAP versus REST: the temptation is to support both rather than make a choice. This applies to standards and to proprietary interfaces. When a standard does this, it hurts rather than promotes interoperability. Vendors have a bit more of an excuse when they offer a choice (“the customer is always right”) but in reality it forces customers to play Russian roulette whether they want it or not, because one of the alternatives will eventually be left behind (either discarded or maintained but not improved). If you balance the small immediate customer benefit of using the interface style they are most used to with the risk of redoing the integration down the road, the value proposition of offering several options crumbles.

[Pedantic disclaimer: I use the term "REST" in this post the way it is often (incorrectly) used, to mean pretty much anything that uses HTTP without a SOAP wrapper. The technical issues are a topic for other posts.]

CMDBf

CMDBf [Configuration Management Database Federation] v1 is a DMTF [Distributed Management Task Force] standard. It is a SOAP-based protocol. For v2, it has been suggested that there should be a REST version. I don’t know what the CMDBf group (in which I participate) will end up doing but I’ve made my position clear: I could go either way (remain with SOAP or dump it) but I do not want to have two versions of the protocol (one SOAP, one REST). If we think we’re better off with a REST version, then let’s make v2 REST-only. Supporting both mechanisms in v2 would be stupid. They would address the same use cases and only serve to provide political ass-coverage. There is no functional need for both. The argument that we need to keep supporting SOAP for the benefit of those who implemented v1 doesn’t fly. As an implementer, nobody is saying that you need to turn off your v1 services the second you launch the v2 version. …

William continues with arguments against dual REST and SOAP API implementations, including Amazon’s S3 API.

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

Guy Barrette asks SQL Azure – is master billable? in this 3/2/2010 post:

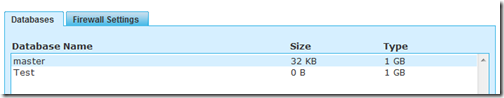

In SQL Azure, when you create a “server”, a master database is automatically created and you’ll see it listed in the databases list. Here’s a screenshot after I created a new database called Test.

I was surprised that master is listed like a standard 1GB database and I feared that it might be billable. I could not find any information so this morning, after a day of usage, I went into my billings.

Looks like only my Test database was counted as billable.

I came to the same conclusion when I checked over a longer duration, as noted in my Determining Your Azure Services Platform Usage Charges post of 2/11/2010.

Guy is a Microsoft Regional Director for Montreal, Canada.

<Return to section navigation list>

AppFabric: Access Control, Service Bus and Workflow

Vittorio Bertocci announces on 3/2/2010 Microsoft’s U-Prove Community Technical Preview release at the RSA Conference:

Conventional wisdom suggests that if you want to increase security, you need to sacrifice some privacy: if you took a flight in the last decade you know exactly what I mean. We are so used to that tradeoff that you consider it one of the many Heisenberg principles governing computer science.

But what if this status quo could be broken? What if you could have security AND privacy?

Enter the U-Prove technology, a groundbreaking innovation that allows you to do just that. Introduced by cryptographer Dr. Stefan Brands in 2004, it took the industry by storm (1, 2). In 2008 Microsoft acquired the technology and hired Stefan & his crew (Christian Paquin, Greg Thompson) in the identity and access division. They have been busy ever since integrating U-Prove into the Identity Metasystem: today we can finally give the world a first glance at their work.

Today, March 2, 2010, at the RSA Security Conference, Microsoft is taking a first step to make the U-Prove technology available to the public and interested parties:

- First, we are opening up the U-Prove intellectual property with a cryptographic specification under the Microsoft Open Specification Promise (OSP). Two open source software toolkits are being made available-- C# and Java editions-- so the broadest audience of commercial and open source software developers can access the technology.

- Second, we are releasing a public Community Technology Preview (CTP), which integrates the U-Prove technology with the Microsoft identity platform technologies (Active Directory Federation Services 2.0, Windows Identity Foundation, and Windows CardSpace v2). A second specification (under the OSP) is available for integrating U-Prove into open source identity selectors.

As is customary by now, the IdElement is providing extensive coverage of the U-Prove CTP [with these Channel9 videos]:

- Announcing Microsoft’s U-Prove Community Technical Preview: Stefan describes U-Prove, some typical scenarios where traditional technologies fall short while U-Prove provides a solution, and a summary of what we are releasing.

- U-Prove CTP: a Developers’ Perspective: Christian and Greg explore the CTP, clarifying U-Prove’s role in the Identity Metasystem and describing how ADFSv2, WIF and CardSpace have been extended in the CTP for accommodating U-Prove’s functionality.

- Deep Dive into U-Prove Cryptographic protocols: A feast for cryptographers and mathematicians! In this video Stefan describes in detail the cryptography behind U-Prove’s algorithm. Not for the faint of heart!

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Eric Nelson shares links for Getting PhP and Ruby working on Windows Azure and SQL Azure in this 3/2/2010 post:

When Windows Azure was first released in 2008 the only programming languages you could use were .NET languages such as Visual Basic and C# and the applications you built had to run in Partial Trust (Similar to ASP.NET Medium Trust).

In the interim we have made a number of changes: applications can now run in full trust, native code is supported (applications no longer have to be managed code such as C#) and FastCGI applications are supported. This means:

- Windows Azure supports the Internet Information Server (IIS) 7.0 FastCGI module, so that you can host web roles that require an interpreter, or that are written in native code.

- You can host web roles and worker roles written in managed code under Windows Azure full trust. A role running under full trust can call .NET libraries requiring full trust, read the local server registry, and call native code libraries.

- You can develop a service in native code and test and publish this service to Windows Azure. A role running native code can interact with the Windows Azure runtime by calling functions in the Microsoft Service Hosting Runtime Library.

As a result it is now possible to build applications for Windows Azure using languages such as Java, PhP and Ruby. A couple of UK examples have popped up in the last few days.

PhP on Windows Azure: Last Saturday at the Open Space Coding Day on Azure, Martin Beeby and another developer sat down to get PhP running on Azure. It turned out to be surprisingly straightforward to do.

- Walkthrough of getting PhP working

- Check out the application (if it is still live) http://php.cloudapp.net/

Ruby on Rails on Windows Azure and SQL Azure: Last week Nick Hill in Microsoft UK Consulting Services posted on getting Ruby on Rails running on Azure after a customer engagement.

- Walkthrough of getting Ruby on Rails working

- Check out the application (if it is still live) http://rubysqlazure.cloudapp.net/

The Windows Azure Team announced Updated Release of Windows Azure Service Management Cmdlets Now Available on 3/1/2010:

An updated release of the Windows Azure Service Management (WASM) Cmdlets for PowerShell is now available. These cmdlets enable developers to effectively automate and manage all services in Windows Azure such as:

- Deploy new Hosted Services

- Upgrade your Services

- Remove your Hosted Services

- Manage your Storage accounts

- Manage your Certificates

- Configure your Diagnostics

- Transfer your Diagnostics Information

Built to meet the demand for an automation API that would fit into the standard toolset for IT professionals and based on PowerShell so they can also be used as the basis for very complicated deployment and automation scripts, these cmdlets make it simple to script out deployments, upgrades, and scaling of Windows Azure applications as well as set and manage diagnostics configurations.

To learn more about these cmdlets, as well as see a few examples of how to use them for a variety of common tasks, read the blog post, "WASM Cmdlets Updated" by Ryan Dunn, technical evangelist for Windows Azure. You can also find more examples and documentation on these cmdlets by typing ‘Get-Help <cmdlet> -full' from the PowerShell cmd prompt.

Every call to the Service Management API requires an X509 certificate and subscription ID for the account. To get started, you will need to upload a valid certificate to the portal and have it installed locally to your workstation. If you are unfamiliar with how to do this, you can follow the procedure outlined in "Exercise 2 - Using PowerShell to Manage Windows Azure Applications" on the Windows Azure Channel9 Learning Center.
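As a rough illustration of the certificate and subscription ID requirement, the Python sketch below prepares a request to list hosted services and, separately, shows how a client certificate could be attached. The endpoint layout and the `x-ms-version` value are assumptions based on the Service Management REST API as documented around the time of this post; the subscription ID and certificate file paths are placeholders, and the function names are my own.

```python
import ssl
import urllib.request

# Assumed values: endpoint host and API version as documented around early 2010.
MANAGEMENT_HOST = "https://management.core.windows.net"
API_VERSION = "2009-10-01"


def build_list_hosted_services_request(subscription_id: str) -> urllib.request.Request:
    """Prepare (but do not send) a GET request that lists hosted services."""
    url = f"{MANAGEMENT_HOST}/{subscription_id}/services/hostedservices"
    request = urllib.request.Request(url, method="GET")
    # The version header tells the service which API contract the caller expects.
    request.add_header("x-ms-version", API_VERSION)
    return request


def build_client_cert_context(cert_file: str, key_file: str) -> ssl.SSLContext:
    """Create a TLS context that presents the locally installed certificate."""
    context = ssl.create_default_context()
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


def list_hosted_services(subscription_id: str, cert_file: str, key_file: str) -> bytes:
    """Send the request with the client certificate and return the raw response."""
    request = build_list_hosted_services_request(subscription_id)
    context = build_client_cert_context(cert_file, key_file)
    with urllib.request.urlopen(request, context=context) as response:
        return response.read()
```

The certificate presented here must match one uploaded to the portal for the same subscription; the cmdlets handle this pairing for you, which is much of their appeal.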

Simon Davies answers the How does IP address allocation work for Compute Services in Windows Azure? question in this 3/1/2010 post:

Recently I have seen a lot of questions asking how Windows Azure allocates IP addresses to services. At this time Windows Azure does not offer the capability to fix an IP address for your service; however, there are some scenarios where this can be a requirement, e.g. some external services require IP addresses of the caller to be pre-configured before allowing traffic to flow (SQL Azure does this). The information in this post is designed to help you understand how IP addresses are allocated in Windows Azure and what you can do to ensure that they do not change.

Each compute service that is created in Windows Azure has two deployment slots, one for production and one for staging; each of these slots has a DNS name and associated IP address. The DNS name associated with the production slot is assigned at the time that the service is created and is fixed for the lifetime of that service; the DNS name for the staging slot is dynamically generated each time a service is deployed.

Each time a service instance is deployed to one of these slots the DNS name associated with it has an IP address assigned to it from a pool of available addresses. The service slot will then keep that IP address until the service deployment is deleted. Upgrading your application, altering the configuration or swapping the production and staging slots will not cause the IP address to change.

The same IP address is used for both inbound and outbound traffic.
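Because the address only changes when a deployment is deleted, a small check can warn you when a firewall rule elsewhere (such as one in SQL Azure) needs updating. The following Python sketch compares the address a service's DNS name currently resolves to against the last address you recorded. The hostname `myservice.cloudapp.net` and the recorded address are hypothetical placeholders, and the function names are my own.

```python
import socket


def resolve_ip(hostname: str) -> str:
    """Resolve a DNS name to its current IPv4 address."""
    return socket.gethostbyname(hostname)


def ip_changed(hostname: str, last_known_ip: str, resolver=resolve_ip) -> bool:
    """Return True if the hostname no longer resolves to the recorded address.

    The resolver is injectable so the check can be exercised without a network.
    """
    return resolver(hostname) != last_known_ip


if __name__ == "__main__":
    # Hypothetical service name and previously recorded address.
    name, recorded = "myservice.cloudapp.net", "203.0.113.10"
    try:
        if ip_changed(name, recorded):
            print(f"{name} has a new address; update dependent firewall rules")
        else:
            print(f"{name} still resolves to {recorded}")
    except socket.gaierror as error:
        print(f"could not resolve {name}: {error}")
```

Since the same address is used for inbound and outbound traffic, the address the DNS name resolves to is also the one external services will see as the caller.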

“Soma” Somasegar’s New Offers for Visual Studio 2010 post of 3/1/2010 describes new free Azure benefits with VS 2010 purchases:

… Today, we’re also unveiling an offer for customers who purchase Visual Studio Professional at retail. To help these developers fully realize the power and benefits of a MSDN subscription, I am announcing MSDN Essentials, a one-year trial MSDN subscription that will be included with every retail copy of Visual Studio Professional sold.

MSDN Essentials subscribers will have access to three of the latest Microsoft platforms: Windows 7 Ultimate, Windows Server 2008 R2 Enterprise, and SQL Server 2008 Datacenter R2 for development and test use, as well as 20 hours of Windows Azure. Subscribers will also have access to MSDN’s Online Concierge, Priority Support in MSDN Forums, and will be included in special offers from partners. …

If that “20 hours of Windows Azure” is a one-time only event, big deal (worth $2.40). 20 hours per month (or week) for a year would be a more impressive offer, but still not enough to take the bite out of an individual developer’s budget for running a full-time Azure site.
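The comparison is easy to quantify. The Python sketch below assumes a flat rate of $0.12 per compute hour, which is simply the rate implied by valuing 20 hours at $2.40; it covers a single instance and ignores storage and bandwidth charges. Amounts are kept in whole cents to avoid floating-point rounding.

```python
# Assumed rate: 12 cents per compute hour, implied by $2.40 for 20 hours.
RATE_CENTS_PER_HOUR = 12
HOURS_PER_YEAR = 24 * 365


def compute_cost_cents(hours: int) -> int:
    """Cost in cents of running one instance for the given number of hours."""
    return hours * RATE_CENTS_PER_HOUR


def format_dollars(cents: int) -> str:
    """Format a whole number of cents as a dollar amount."""
    return f"${cents // 100}.{cents % 100:02d}"


if __name__ == "__main__":
    scenarios = {
        "one-time 20 hours": 20,
        "20 hours per month for a year": 20 * 12,
        "20 hours per week for a year": 20 * 52,
        "one instance running full time for a year": HOURS_PER_YEAR,
    }
    for label, hours in scenarios.items():
        print(f"{label}: {format_dollars(compute_cost_cents(hours))}")
```

Under these assumptions even the weekly allowance covers only a fraction of the 8,760 hours a single always-on instance consumes in a year.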

Microsoft’s new, Web-based Microsoft Case Studies search tool turns up 67 case studies when using the search term “Azure” on 3/2/2010.

DotNetSolutions explains Windows Azure Diagnostics – Why the Trace.WriteLine method only sends Verbose messages in this 2/26/2010 post:

Logging diagnostic information plays a key part for any application. The .Net framework provides a number of Diagnostic features, which can be used to provide logging functionality in your application.

However, in Windows Azure, the diagnostic API available in early CTPs was very limited. In the November release of Windows Azure, a new feature for Windows Azure Diagnostics was launched. I will not delve into details of how to use the Azure diagnostic API. You can read about this here and also here.

In this article, I will be focusing only on a recent challenge we came across while implementing the Windows Azure Diagnostics API for ScrumWall (http://scrumwall.cloudapp.net). ScrumWall uses a factory pattern to determine which concrete log writer to use. It had both a trace listener logger and a custom Windows Azure Table storage logger. The trace listeners implementation could be configured in web.config.

<Return to section navigation list>

Windows Azure Infrastructure

Bill Claybrook continues the public-versus-private cloud battle with his Cloud vs. in-house: Where to run that app? article of 3/1/2010 for ComputerWorld. Prefaced by “Options include public clouds and external private clouds. Here's how to choose wisely,” the article continues:

One of the biggest decisions IT managers have to make is how and where to run data center applications. Fortunately, there are multiple choices that lower costs and increase business agility, including server virtualization, internal clouds, public clouds and external private clouds.

Many IT organizations are taking advantage of these options. Server virtualization is currently being used by more than 70% of enterprises to reduce costs, and cloud computing is being used or planned for use by more than 10% of corporations, according to Antonio Piraino, research director at Tier1 Research.

It can be confusing and difficult to determine which cloud environment to use (see sidebar below for descriptions of the most popular types of clouds). There are few, if any, guidelines, and each company will almost certainly have a unique discussion about its choices because each will have varying requirements and different views of what cloud computing means.

To take advantage of the new opportunities afforded by cloud computing, IT organizations have to learn the differences between server virtualization and various types of clouds, and understand the risks associated with using each execution environment in terms of the characteristics of various applications. …

Bill Zack reviews Hanu Kommalapati’s Azure Platform for Enterprises article for MSDN Magazine’s March 2010 issue in a brief 3/1/2010 post to the Innovation Showcase blog:

This MSDN magazine article focuses on the use of Windows Azure in the Enterprise, starting with the architecture of a cloud application in general and the Windows Azure Platform in particular.

First the author reviews the (somewhat) accepted cloud taxonomy terms such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software + Services (S+S).

Then he puts the Windows Azure Platform into context as a powerful PaaS offering that is heading towards adding IaaS Features.

Following that he goes on to show how it also interfaces nicely with a customer’s on premise Windows Server Platform infrastructure in a hybrid Software Plus Services model. Read more here.

Eugenio Pace’s SLA Quiz post of 3/1/2010 demonstrates how to calculate the uptime of “two-and-a-half nines” and other fractional SLAs:

A few days ago I met with Steve Marx, of Azure fame, and he mentioned how people often misunderstand SLA percentages. So I posted this quiz on Twitter:

The answer is: 99.99684% (or close)

Why?

- One 9 = 99.9% = 100 – 1/10 = 1 – 1/(10^1)

- Two 9’s = 99.99% = 100 – 1/100 = 1 – 1/(10^2)

- Three 9’s = 99.999% = 100 – 1/1000 = 1 – 1/(10^3)

So, [t]wo and half 9’s = 100 – 1/(10^2.5) = 99.99684%

Daz Wilkins gets an “approved” :-)
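The arithmetic is easy to check in a few lines of Python. The first function follows the formula as quoted above (percent = 100 − 1/10^n). The second uses the more common convention, in which n nines means an availability fraction of 1 − 10^(−n), so that two nines is 99% and three nines is 99.9%. The function names are my own, and this is a sketch for checking the numbers rather than a statement of how any SLA is actually defined.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def uptime_percent_as_quoted(nines: float) -> float:
    """Uptime in percent using the quoted formula: 100 - 1/(10^n)."""
    return 100 - 1 / (10 ** nines)


def uptime_percent_conventional(nines: float) -> float:
    """Uptime in percent where n nines means a fraction of 1 - 10^(-n)."""
    return 100 * (1 - 10 ** (-nines))


def downtime_minutes_per_year(uptime_percent: float) -> float:
    """Minutes of allowed downtime per 365-day year for a given uptime."""
    return (100 - uptime_percent) / 100 * MINUTES_PER_YEAR


if __name__ == "__main__":
    for label, formula in (
        ("as quoted", uptime_percent_as_quoted),
        ("conventional", uptime_percent_conventional),
    ):
        uptime = formula(2.5)
        minutes = downtime_minutes_per_year(uptime)
        print(f"{label}: {uptime:.5f}% uptime, {minutes:.1f} minutes down per year")
```

With the quoted formula, two and a half nines comes out at roughly 99.99684%; under the conventional reading it is roughly 99.684%. Either way the point of the quiz stands: the exponent, not the count of digits, determines the allowed downtime.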

Lori MacVittie asserts “Ultimately a highly-scalable, high-performance architecture will rely on choosing the right form factor in the right places at the right time” as a preface to her Square Infrastructure Pegs Don’t Fit in Round Network Holes post of 3/1/2010:

Scale is not just about servers, and for corporate data centers and cloud computing providers looking to realize the benefits of rapid elasticity and on-demand provisioning scale simply must be one of the foundational premises upon which a dynamic data center is built. And that includes the infrastructure.

This isn’t the first time I’ve touched upon this subject, but it’s a concept that needs to be reiterated – especially with so many pundits and analysts looking for the next big virtualization wave to crash onto the infrastructure beachhead. I’m here to tell you, though, that the devil is in the details. Virtual network appliances (VNAs) are certainly one means to achieving elastic scalability of infrastructure services but just as scale is not just about servers it’s also not just about form factor. It’s about the right form factor in the right place and, ultimately, at the right time.

First consider the scalability needs of aggregation points within an architecture. These are points at which high volumes of requests/traffic are essentially directed at one infrastructure solution, i.e. a Load balancer for application traffic, a router for network traffic. At these points in your architecture it would almost certainly be a bad idea to introduce a virtual network appliance because such solutions are much more constrained in terms of capacity than their hardware counterparts. This is due to inherent limitations on the virtualization platform, the network interfaces, and the operating system (if it is in the mix).

Where a single hardware load balancer can sustain millions of TCP connections, a virtual version of the same solution is unlikely to reach half of that capacity. The differences between hardware and software solutions are minimal, but among those differences are the way in which the different implementations interact with the network and available bandwidth. As we discussed in another post on a similar subject, scalability also becomes problematic because deploying multiple, cloned instances of any solution as a means to achieve higher capacity limits requires the use of virtualization, a la load balancing.

These high-volume, high-capacity strategic points of control are simply not good candidates for virtualization as a means to achieve scalability. There are two options for scaling these hardware solutions – use an extensible chassis based solution that can accommodate expansion of capacity through acquisition of additional “blades” or take advantage of the fact that most are equipped with specialized “clustering” that allows multiple devices to work in parallel while still ensuring reliability through fault-tolerance mechanisms. …

<Return to section navigation list>

Cloud Security and Governance

Jonathan Penn covers RSA president Art Coviello’s keynote address in Live at the RSA Conference: an industry view of 3/2/2010:

Just saw the keynote by Art Coviello, President, RSA. The focus was on securing the cloud. His main points were:

- Migration of IT to the cloud is inevitable. I totally agree with this, and see security merely as a temporary hurdle.

- That adoption of virtualization and cloud has implications on the relationship between IT Security and IT Ops, as the former focuses on governance and the latter on quality of service.

Both of these observations are spot on. The Keynote wasn’t a place to announce how to secure the cloud, or what RSA is doing here to further the effort, but this is obviously where RSA (and VMware and EMC) are in a great position to develop leadership.

Jon also analyzes Cisco’s Secure Borderless Network strategy.

K. Scott Morrison reviews the Cloud Security Alliance’s paper in his The Seven Deadly Sins: The Cloud Security Alliance Identifies Top Cloud Security Threats post of 3/1/2010 from the RSA Conference:

Today marks the beginning of RSA conference in San Francisco, and the Cloud Security Alliance (CSA) has been quick out of the gate with the release of its Top Threats to Cloud Computing Report. This peer-reviewed paper characterizes the top seven threats to cloud computing, offering examples and remediation steps.

The seven threats identified by the CSA are:

- Abuse and Nefarious Use of Cloud Computing

- Insecure Application Programming Interfaces

- Malicious Insiders

- Shared Technology Vulnerabilities

- Data Loss/Leakage

- Account, Service, and Traffic Hijacking

- Unknown Risk Profile

Some of these will certainly sound familiar, but the point is to highlight threats that may be amplified in the cloud, as well as those that are unique to the cloud environment.

Scott concludes:

… The latest survey results, and the threats paper itself, are available from the CSA web site. Bear in mind that this is evolving work. The working group intends to update the list regularly, so if you would like to make a contribution to the cloud community, please do get involved. And remember: CSA membership is free to individuals; all you need to give us is your time and expertise.

James Urquhart’s The cloud cannot ignore geopolitics post of 3/1/2010 to C|Net News’ Wisdom of Clouds blog begins:

Cloud computing is an operations model, not a technology: that critical fact underpins so many of the challenges and advantages that cloud computing models place upon distributed applications.

It is the reason that so many existing application architecture concepts work in the cloud, and it is the reason that so many developers are forced to address aspects of those architectures that they could skimp on or even ignore in the self-contained bubble of traditional IT.

One of the most fascinating aspects of cloud's architectural forcing function is the way it forces developers to acknowledge the difference between two often conflicting "realities" that applications must live in; that of the physical world we humans live in each day, and that of the computing world, consisting of electronics, wires, and its own set of rules, captured in software form. …

(Graphic credit: cc Doug/Picasa)

James concludes:

… In cloud computing, "virtual" geography and "physical" geography are both extremely important, and it's up to humans to keep the two aligned. Because this is complex and prone to error, it may be one of the great business opportunities to come out of the disruption that cloud computing is wreaking on IT practices.

Brenda Michelson comments on James Urquhart’s article in her James Urquhart: It’s not (just) the data destination, it’s also the journey post of 3/2/2010:

Per usual, James Urquhart published a thought-provoking post on cloud computing and geopolitics. Recently, as part of my 100-day cloud watch, I’ve pointed out the importance of cloud computing environment location in respect to data residence. In his post, James goes further, or perhaps better stated, starts earlier, raising awareness of the paths that data travels to reach its destination:

“How would an application operator deploy applications at a minimum "distance" from users in a network sense, without finding themselves passing data through a country that would jeopardize the safety of that data? Again, the path your data takes between two physical locations may not be the path you expected.

You are already seeing some examples of how the governments and corporations are trying to mold the Internet and "the cloud" to fit into human geopolitical realities. Countries like China, Iran, Pakistan, and others have demonstrated their willingness to control the Internet transoms over their nation’s borders, and to apply technology to controlling the "border traffic" at those crossing points.”

In his post, James makes some great observations on computing versus world boundaries, and poses a challenge for cloud computing, networking, business and political leaders:

“What’s missing, however, is any form of formal infrastructure within the Internet/Intercloud itself to "automate" mapping the human world to the computer world. Is this even possible, I often wonder. Can we (or, more to the point, should we) try to "codify" the laws and regulations of the world into digital form, allowing computer networks and applications to self-regulate?

What would the political fallout of such a system be?

In cloud computing, "virtual" geography and "physical" geography are both extremely important, and it’s up to humans to keep the two aligned. Because this is complex and prone to error, it may be one of the great business opportunities to come out of the disruption that cloud computing is wreaking on IT practices.”

Read his post. Remember it’s not just the destination, but also the journey that counts.

See SearchCloudComputing.com’s RSA has its head in the cloud on opening day of 3/1/2010 in the Cloud Computing Events section.

Cloud Computing Events

Geva Perry describes Migration to the Cloud: CloudConnect Panel in this 3/2/2010 post:

The excellent CloudConnect conference is coming up in Santa Clara, California, March 15-18. There is a lot of excitement around this event, as the content seems excellent and many people are coming into the Bay Area for this event.

As a matter of fact, the San Francisco Cloud Computing Club is also having a meetup around the event on Tuesday, March 16 at 6:30 pm with many "clouderati" expected to participate. Should be fun.

Back to CloudConnect: on March 16 at 1:30 PM, I'll be participating in a panel moderated by Randy Bias (@randybias), which is part of the Cloud Computing Migration track at the conference. Below are the official details, and Randy provides additional background on the CloudScaling blog.

Moving to Clouds: It's Not All or Nothing - Panel

You're ready to embrace cloud computing. You can move your IT to on-demand public clouds, and reap the benefits of elasticity; or you can create on-premise clouds that make you more agile and efficient while keeping control of data. The only thing you can't afford to do is keep using old-fashioned IT. But when it's time to adopt a cloud strategy, the options can be daunting. Do you need a public cloud? Private? Both? Should you use a platform or an infrastructure cloud? Which applications are candidates for cloud deployment?

In this opening session, you'll learn the differences between public and private clouds, and the factors you need to consider when planning a migration to cloud-based IT.

- Moderator Randy Bias, Founder, Cloudscaling

- Speaker Ellen Rubin, VP of Products, CloudSwitch

- Speaker Geva Perry, Author, Thinking Out Cloud

- Speaker Victoria Livschitz, CEO, GridDynamics

- Speaker Tom Gregory, CTO, Presidio Health

I’m planning to be at the conference and the San Francisco Cloud Computing Club meetup.

Jeremy Geelan reports Cloud Computing Bootcamp Returns to New York City April 20, 2010 in this 3/1/2010 post:

No one can properly understand anything related to enterprise-level Cloud Computing without having first gained a reasonable understanding of the very basics.

SYS-CON's pioneering Cloud Computing Bootcamp is designed with that in mind. It is a one-day, fully immersive deep-dive into the Cloud, in which the sessions during the day seek to deal with real problems and look at alternative solutions.

The ever-popular Bootcamp, which is now held regularly around the world, is being held next in conjunction with the 5th Cloud Expo in New York, NY (April 20, 2010). It is led by software industry entrepreneur and Bootcamp Instructor Alan Williamson.

Attending delegates will leave the Bootcamp with resources, ideas and examples they can apply immediately.

The SearchCloudComputing.com staff claims RSA has its head in the cloud on opening day in this 3/1/2010 post:

The giant paranoia-for-profit fest known as the RSA Conference kicked off today in San Francisco, and cloud computing is drawing heavy interest. The Cloud Security Alliance's five-hour session was reportedly turning people away at the door for the keynote.

The CSA announced a peer-reviewed paper, entitled "Top Threats to Cloud Computing," that outlines major challenges to providing and using cloud computing. Its choice for number one was the "abuse and nefarious use" of cloud to provide spammers and bot herders access to massive pools of computing firepower. Number two was insecurity at the technical level for cloud providers who didn't mind their APIs, and number three was "malicious insiders."

"This threat is amplified for consumers of cloud services," it said.

The report cited the lack of transparency and highly automated use of clouds as a reason to fear a provider's employees, much as one might fear the janitor at the gym; only with cloud computing, you're not encouraged to bring your own lock. Fortunately, or perhaps worryingly, "No public examples are available at this time." …

Other Cloud Computing Platforms and Services

David Linthicum asserts “2010 will clearly be the year of cloud consolidation, so you need to be careful where and how you place your cloud computing bets” in his Cloud provider roulette: Will yours still be around? post of 3/2/2010 to InfoWorld’s Cloud Computing blog:

I was not surprised to see that CA has bought cloud infrastructure provider 3Tera -- I believe that 2010 will be the year of cloud consolidation, and we've only just begun.

This is CA's third major acquisition that targets cloud computing, including Cassatt last summer and Oblicore earlier this year. CA has long been a company that purchases its way into a space, and apparently cloud computing will be no different than other emerging areas that CA has entered through acquisition.

3Tera offers both public and private cloud infrastructures, available in a choice of cloud service or on-premise software. With this purchase, CA now has a true public cloud in its portfolio, and that's a very different model than its core enterprise software business.

The larger issue here is that we're entering into a buying frenzy around cloud computing technology, both public and private. I suspect we'll see this accelerate as stock prices continue to rebound, and thus management feels much better about entering into riskier deals. …

Dave concludes with this advice:

… If you are evaluating cloud computing technology today, you have to consider that your choice could be bought up this year or next. That means you need to make sure your legal agreements are rock-solid and spell out what happens if your provider is acquired. In other words, you need to make sure that having a different cloud landlord won't affect your business. Good luck all.

John Treadway adds to David Linthicum’s post with prescriptive advice for use of open-source cloud systems in his Protecting Yourself from Cloud Provider & Vendor Roulette post of 3/1/2010:

David Linthicum wrote a piece today in InfoWorld regarding the coming wave of cloud vendor consolidation. After CA’s acquisition of 3Tera, it’s natural to ask how you can protect yourself from having your strategic vendor acquired by a larger, less focused entity. Face it, the people building these startups are mostly hoping to have the kind of success that 3Tera had — a reported $100m payday. A lot of people are concerned with what CA will do with AppLogic – their general history with young technologies is not particularly promising. If you are building a cloud, AppLogic is the heart of your system. If CA screws it up (and I’m not saying they will, but if they do…), you’re pretty much hosed.

As I wrote in November, we will start seeing both consolidation and market exits in the cloud provider space in the not too distant future. So, whether you are building a cloud, or using someone else’s cloud, you need to have a plan to mitigate the all-too-real risks of your vendor going away or having a change of control event (e.g. being acquired) that results in a degraded capability.

If you’re building a cloud (private or public), the primary way you can protect yourself is by selecting a vendor with an open source model. If the commercial entity fails, you can still count on the community to move the product forward – or you can step in and become the commercial sponsor. If the vendor gets acquired and the new owner takes the project in a direction you don’t want, you can “fork” the project (see Drizzle and MariaDB as forks of the MySQL project owned by Oracle as a result of the Sun acquisition). Or, you can start with a community-sponsored project like OpenNebula that has a very open license (Apache). It is highly unlikely that OpenNebula will go away anytime soon, and due to the licensing model there is no chance that a vendor will get deep control of the project.

John Rymer claims Oracle Likes Cloud Computing After All in this 3/1/2010 post to the Forrester Research blog for Application Development and Program Management Professionals:

Larry Ellison angrily dismisses suggestions that Oracle’s business will be harmed by the rise of cloud computing. Many misinterpret Ellison’s remarks to mean he (and by extension Oracle) thinks cloud computing is a dumb idea that Oracle won’t pursue. We are now learning that Oracle does, in fact, intend to pursue cloud computing. But we're also learning that Oracle's strategy is more limited than those of IBM and Microsoft, its large-vendor competitors.

I attended the San Francisco Oracle Cloud Computing Forum on February 25, 2010 -- one of 51 such events Oracle is running worldwide. Oracle described a strategy that is pragmatic for Oracle, but not well-articulated and missing key pieces.

PRAGMATIC: Oracle’s approach to cloud computing has three major parts:

- If you want an internal, or private, cloud, Oracle will sell you the hardware and/or middleware to build it.

- If you want to use Oracle’s software on a third-party cloud, Oracle supports Amazon Web Services and Rackspace Cloud today, and will support other clouds in the future.

- If you want to rent rather than own Oracle’s business applications, Oracle will provide those apps under a hosted subscription model.

This strategy seeks to adapt Oracle’s current business models and products to the concepts of cloud computing. To Oracle, internal clouds are a new data-center architecture, public clouds are a new sales channel, and cloud-based applications (SaaS) are new business terms. There’s no new operating system (a la Microsoft Windows Azure), no new development model (a la Salesforce’s Force.com), no commitment to provide public cloud platform services (a la IBM and Microsoft).

Oracle described a two-step process to move from today’s typical architectures to internal clouds that makes sense (see figure) -- and starts with adoption of Oracle's "grid" products. This progression makes sense, but is not as easy as Oracle makes it sound. To be fair, most vendors understate the difficulty of transition from today's data centers to internal clouds.

John continues with an analysis of the Oracle Private PaaS offering.

Eric Beeler writes “The idea of the private cloud provides a lot of promise, but the technology is not cheap. Here's what vendors can actually offer today -- and how much it will cost” as a preface to his Building a Private Cloud article for Redmond Magazine of 3/1/2010:

… Vendors are defining the private cloud, so every vendor has a different take on the meaning of this new approach to computing resources. The big reasons to move toward a private cloud include automation and utilization. Companies have been moving data centers toward virtualization to save money by increasing utilization of the computers they already own. The private cloud elevates this concept not only by virtualizing servers, but also by turning virtual machines (VMs) into a pool of resources that can be provisioned on demand with minimal manual intervention.

In addition, it allows for a type of on-demand response to needs by moving resources to where they're needed quickly. Some vendors take that model and extend it to public cloud resources and address the typical multi-tenant model to try to satisfy security, usability and performance concerns unique to enterprise IT. Whatever the definition, what an organization is trying to do is harness better utilization of computing power and ease administration requirements with better control over the environment.

Eric continues with vendor assessments of Amazon, IBM, Microsoft, and VMware private-cloud offerings.

Jon Brodkin claims “CloudSwitch moves applications to cloud service” in his Cloud computing start-up creates secure tunnel between data center and Amazon cloud post of 3/1/2010 to NetworkWorld’s Cloud Computing community:

A start-up that moves VMware-based applications to the Amazon cloud and creates a secure tunnel between a customer's data center and the cloud service is launching a public beta trial Monday.

CloudSwitch, recently out of stealth mode, moves existing applications from internal data centers to the cloud without rewriting the app or requiring changes in management tools.

The concept is not a new one. Amazon's Virtual Private Cloud and the vendor CohesiveFT both tackle the goal of bridging the internal data center with public cloud services in a secure manner.

But CloudSwitch is unique in offering a service that takes care of all the networking, isolation, management, security and storage concerns related to moving an existing application to a cloud, says The 451 Group analyst William Fellows. Still, CloudSwitch has yet to demonstrate a real-world example of its technology in action, so there are still questions about the mechanism of deployment and user experience, he says.

"There are still some question marks around the operation of a CloudSwitch environment," Fellows says. "A lot of details are still quite closely held." …