Windows Azure and Cloud Computing Posts for 1/13/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

• Update 1/15/2010: Tim Anderson quotes Steve Ballmer on HP/Microsoft agreement: “… I think of it as the private cloud version of Windows Azure. …” (me, too) and Carl Brooks goes into more technical detail about HPMSFT private clouds. Click here to read more and see my Private Azure Clouds Float the $250 Million HP/Microsoft Agreement of 1/13/2010.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts, Databases, and DataHubs*”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the November CTP in January 2010.

* Content for managing DataHubs will be added as Microsoft releases more details on data synchronization services for SQL Azure and Windows Azure.

Off-Topic: OakLeaf Blog Joins Technorati’s “Top 100 InfoTech” List on 10/24/2009.

Azure Blob, Table and Queue Services

Joannes Vermorel promises a future Object/Cloud Mapper (O/CM) in his Table Storage gotcha[s] in Azure post of 1/14/2010:

Table Storage is a powerful component of the Windows Azure Storage. Yet, I feel that there is quite a significant friction working directly against the Table Storage, and it really calls for more high level patterns.

Recently, I have been toying more with v1.0 of the Azure tools released in November 2009, and I would like to share a couple of gotchas with the community, hoping they will save you a couple of hours.

- Gotcha 1: no REST level .NET library is provided …

- Gotcha 2: constraints on Table names are specific …

- Gotcha 3: table client performs no client-side validation …

- Gotcha 4: no batching by default …

- Gotcha [5]: paging takes a lot of plumbing …

- Gotcha [6]: random access to many entities at once takes even more plumbing …

- Gotcha [7]: table client supports limited tweaks through events …
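Because the table client performs no client-side validation (Gotchas 2 and 3), a bad table name costs a round trip to the service before you find out. Here's a minimal Python sketch of a local check. It assumes the naming rules as I understand them from Microsoft's Table service documentation: 3 to 63 characters, letters and digits only, starting with a letter, with "tables" reserved. The function name is hypothetical; it isn't part of the StorageClient library or Lokad.Cloud.

```python
import re

# Assumed Table service naming rules: 3-63 chars, alphanumeric, first char a letter.
_TABLE_NAME = re.compile(r"[A-Za-z][A-Za-z0-9]{2,62}")

# Assumed reserved name; comparison is case-insensitive because table names are.
_RESERVED = {"tables"}


def is_valid_table_name(name: str) -> bool:
    """Return True if `name` passes the assumed naming rules (hypothetical helper)."""
    if not _TABLE_NAME.fullmatch(name):
        return False
    return name.lower() not in _RESERVED


for candidate in ["Customers", "1Customers", "my_table", "Tables"]:
    print(candidate, is_valid_table_name(candidate))
```

Keeping the check as a pure boolean function makes it easy to call before every table creation, rather than relying on the service's error response.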

Stay tuned for an O/C mapper to be included in Lokad.Cloud for Table Storage. I am still figuring out how to deal with overflowing entities.
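Much of the plumbing behind Gotcha 4 is bookkeeping: as I understand the documented limits, an entity group transaction accepts at most 100 entities, all sharing one PartitionKey, with a 4 MB payload cap. The following hypothetical Python helper, an illustration rather than anything from Lokad.Cloud, groups entities by partition and splits each group into chunks of 100 or fewer. It doesn't check payload size or send anything to the service.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Assumed limit: at most 100 entities per entity group transaction.
MAX_BATCH = 100


def plan_batches(entities: Iterable[dict]) -> List[Tuple[str, List[dict]]]:
    """Group entities by PartitionKey, then split each group into batches of <= 100."""
    by_partition: Dict[str, List[dict]] = defaultdict(list)
    for entity in entities:
        by_partition[entity["PartitionKey"]].append(entity)

    batches: List[Tuple[str, List[dict]]] = []
    for partition_key, group in by_partition.items():
        for start in range(0, len(group), MAX_BATCH):
            batches.append((partition_key, group[start:start + MAX_BATCH]))
    return batches


rows = [{"PartitionKey": "A", "RowKey": str(i)} for i in range(250)]
rows += [{"PartitionKey": "B", "RowKey": str(i)} for i in range(10)]
for pk, batch in plan_batches(rows):
    print(pk, len(batch))
```

Each (partition, batch) pair would then map to one transaction request; 250 entities in partition "A" become three batches of 100, 100 and 50.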

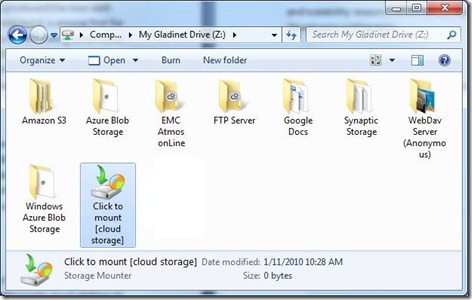

Jerry Huang touts Gladinet’s “ubiquitous client” as the front-end to multiple cloud-based storage services, including Azure blobs, that “turns the Windows Explorer into a Storage Portal” in his Deliver Cloud Storage To Your Desktop post of 1/12/2010:

… Use Cases

The speed of the Internet determines the use case. For example, if everyone were still using dial-up networking, nobody would be talking about cloud storage.

Use Case I - Direct Random Access

Broadband is the norm nowadays, with speeds ranging from about 100 KB/s to 800 KB/s. A response time of < 5 s is required for good usability for direct access. This means direct random access to cloud files of 500 KB to 4 MB in size will be very usable. As a simple experiment, a directory listing of the My Documents folder on my PC reveals 237 files with 46 MB total. The files I use most often average about 200 KB each.

Use Case II - Online Backup

At current broadband speeds (slower than an 802.11b wireless network), a bigger use case is online backup, which is write once and seldom read. At this stage, cloud storage can't replace either network attached storage or the local hard drive because of the speed, but it is perfect for backing up stuff.

Use Case III - File Server with Cloud Backup

An interesting twist is to combine the Use Case I and Use Case II by moving the access point from user's desktop to a file server. Users can do direct random access on a network server while the network server is backed up by online storage. As a second product coming out of Gladinet, the Cloud Gateway fits this use case. …
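Jerry's Use Case I numbers hang together: at a given throughput, the largest file that meets a 5-second response target is simply throughput × 5 s. A quick Python check, ignoring network latency and protocol overhead and treating 1 MB as 1,000 KB (both simplifying assumptions):

```python
# Figures from the quoted post; KB and MB use decimal units for simplicity.
TARGET_SECONDS = 5.0


def max_file_kb(throughput_kb_per_s: float, target_s: float = TARGET_SECONDS) -> float:
    """Largest file (KB) that downloads within the target response time."""
    return throughput_kb_per_s * target_s


def avg_file_kb(total_mb: float, file_count: int) -> float:
    """Average file size in KB for a folder of `file_count` files totaling `total_mb`."""
    return total_mb * 1000 / file_count


for rate in (100, 800):
    print(f"{rate} KB/s -> up to {max_file_kb(rate):.0f} KB in {TARGET_SECONDS:.0f} s")
print(f"My Documents average: {avg_file_kb(46, 237):.0f} KB per file")
```

The 100 KB/s and 800 KB/s endpoints yield the 500 KB and 4 MB bounds, and the My Documents sample averages about 194 KB per file, close to the quoted 200 KB.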

See Mike Pontacoloni’s Early Azure adopter sees financial benefits of cloud computing report in the Live Windows Azure Apps, Tools and Test Harnesses section.

Shivprasad Koirala describes 9 simple steps to run your first Azure Table Program in this 1/10/2010 post to The Code Project:

Azure provides four kinds of data storage: blobs, tables, queues and SQL Azure. In this section we will see how to insert a simple customer record with code and name properties into Azure tables.

If you are a complete beginner, you can download my two basic Azure videos, which explain what Azure is all about: Azure FAQ Part 1: Video1; Azure FAQ Part 2: Video2.

Please feel free to download my free 500 question and answer eBook, which covers .NET, ASP.NET, SQL Server, WCF, WPF, WWF, Silverlight, and Azure [at] http://tinyurl.com/4nvp9t. …

We will create a simple customer entity with customer code and customer name properties, add it to Azure tables, and display it in a web role application.
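Shivprasad's walkthrough uses C# and Microsoft's StorageClient library. Purely as a language-neutral illustration of the data model, the Python sketch below models the same customer entity: every Table storage entity carries a PartitionKey and a RowKey that together form its unique key. The single "Customers" partition, using the customer code as the RowKey, and the InMemoryTable class are my assumptions; InMemoryTable is a hypothetical stand-in for a real table, not an Azure API.

```python
from dataclasses import asdict, dataclass


@dataclass
class CustomerEntity:
    """Hypothetical analogue of the article's customer entity."""
    CustomerCode: str
    CustomerName: str
    PartitionKey: str = "Customers"  # assumption: one partition for the demo

    @property
    def RowKey(self) -> str:
        # Assumption: the customer code is unique, so it doubles as the RowKey.
        return self.CustomerCode

    def to_properties(self) -> dict:
        props = asdict(self)
        props["RowKey"] = self.RowKey
        return props


class InMemoryTable:
    """Stand-in for a table, keyed by (PartitionKey, RowKey)."""

    def __init__(self) -> None:
        self._rows = {}

    def insert(self, entity: CustomerEntity) -> bool:
        """Store the entity; return False if the key exists (the real service rejects it)."""
        key = (entity.PartitionKey, entity.RowKey)
        if key in self._rows:
            return False
        self._rows[key] = entity.to_properties()
        return True

    def query(self, partition_key: str) -> list:
        """Return all stored property dicts in one partition."""
        return [row for (pk, _), row in self._rows.items() if pk == partition_key]


table = InMemoryTable()
table.insert(CustomerEntity("C001", "Shiv"))
print(table.query("Customers"))
```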

SQL Azure Database (SADB, formerly SDS and SSDS)

My SSMS 2008 R2 11/2009 CTP Has Scripting Problems with SQL Azure Selected as the Target Data Base Engine Type post of 1/13/2010 describes the multitude of errors I encountered while attempting to use SQL Server Management Studio 2008 R2’s Script Generation wizard to upload the schema and data of an on-premises AdventureWorks2008LT database to my live SQL Azure instance.

My post of the same date to the SQL Azure forum asks Has Anyone Else Seen These Bugs in SQL Server 2008 R2's Script Generation for SQL Azure?

AppFabric: Access Control, Service Bus and Workflow

See Eugenio Pace’s ADFS / WIF on Amazon EC2 post in the Other Cloud Computing Platforms and Services section.

Live Windows Azure Apps, Tools and Test Harnesses

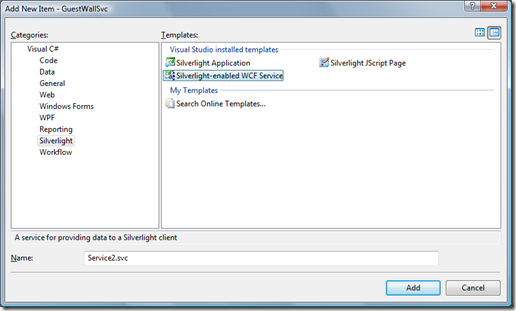

Colinizer offers a Quick Tip for Hosting Services for Silverlight on Windows Azure in this 1/14/2010 post:

The Silverlight and Azure Tools for Visual Studio 2008 SP1 both provide convenient means to get going with these respective technologies.

Windows Azure is a good place to host services that your Silverlight application may call, as well as the web application that contains the Silverlight application itself. However, if you’ve tried to get this to work, then you may have encountered an issue.

Let’s say you’ve added a Web Role to your Azure application to host the Silverlight application, and you want to add a Silverlight-enabled WCF Service to the website. You may have tried using the Add New Item dialog to select the “Silverlight-enabled WCF Service” like this…

Unfortunately (for reasons I may go into in the future), the Azure platform isn’t going to correctly publish this WCF service in the web role. Your Silverlight app may talk to the service just fine in your development environment, but not when you deploy to Azure proper.

The easiest workaround for this is to create a WCF Role instead of a Web Role, and then add your website files and Silverlight application to that. The WCF Role exposes a service that is compatible with Silverlight on Azure.

If you check out my Guest Wall application (including source code), you’ll see this in action as well as the code.

Microsoft’s Public Sector DPE Team announced City of Edmonton, Canada uses Windows Azure/Microsoft Open Government Data Initiative for publishing government data on 1/13/2010:

The City of Edmonton today launched http://data.edmonton.ca - an Open Data catalogue that increases the accessibility of public data managed by the City. As part of an initiative to improve the accessibility, transparency, and accountability of City government, this catalogue provides access to City managed data sets in machine-readable formats. This site uses the Microsoft Open Government Data Initiative (OGDI) code that is available at http://ogdi.codeplex.com. OGDI is an open source data publishing solution designed to run on Windows Azure (and uses Bing maps).

This solution serves as an example of what every government agency can do with Azure and OGDI. The important thing to note is that this is a repeatable solution that is available for download at CodePlex and fits an exponentially growing need for government agencies that need to publish data to satisfy Open Government and transparency initiatives. Access http://www.opengovdi.com to learn more about OGDI.

tbtechnet asserts 300 Developers Can’t Be Wrong: Free Technical Support for Windows Azure in this 1/13/2010 post to the Windows Azure Platform, Web Hosting and Web Services blog:

Sometimes I feel like I’m over promoting this or maybe shouting at the wind. I can’t resist though as it seems like such a great deal.

Get FREE technical support while developing your Azure applications. Click here to become a Front Runner for Windows Azure Platform.

When you join the Front Runner program, you’ll get access to one-on-one technical support from our developer experts by phone or e-mail. Then, once you tell us that your application is compatible, you’ll get a range of marketing benefits to help you let your customers know that you’re a Front Runner.

So, join now. 300 software development companies already have. Don’t miss out.

Mike Pontacoloni reports Early Azure adopter sees financial benefits of cloud computing in this 1/12/2010 post to SearchWinDevelopment:

When Advanced Telemetry, an energy consumption monitoring and management provider, realized they could not accommodate their rapid growth with on-site infrastructure, they looked at cloud computing options to expand their computing resources. …

Advanced Telemetry first looked at Google BigTable to meet its needs, but eventually chose Microsoft Azure. "All Google BigTable offered was offloading our data storage requirement, which was our primary need initially," said Naylor. "We became aware of Azure and started to give the table storage a good look. To my surprise, the framework Microsoft was developing offered many more benefits."

For Naylor, those benefits include the building blocks for new cloud applications. Prior to migrating to Azure, Advanced Telemetry developed most applications from scratch. "There's all kinds of data being queued, services that run, background services that run—all things we had to essentially design and develop on our own," said Naylor. "In the Azure framework, you get replacements for these things." …

John Moore’s Analysis: MediConnect Acquires PHR Vendor, PassportMD post of 1/12/2010 to the Chilmark Research blog announces:

Yesterday, MediConnect announced that it had acquired Florida-based PHR vendor, PassportMD. The acquisition is a good move by MediConnect as it will allow them to extend beyond just collecting records on behalf of consumers (it offers such a service on Google Health), but now provide consumers with a solution to present such records in an easy to view and understand format within the framework of the PassportMD PHR. Terms of the deal were not disclosed and PassportMD operations will be moved to MediConnect’s headquarters in Utah.

John continues with his analysis of the acquisition.

PassportMD uses Microsoft HealthVault as a secure storage service. I wrote about my problems with PassportMD in Logins to PassportMD Personal Health Records Account No Longer Fail with Expired Certificate on 10/11/2009 and earlier posts.

Windows Azure Infrastructure

Brenda Michelson’s 100-day Cloud Watch: Surveying the Cloud Computing Surveys post of 1/14/2010 begins her series:

As I mentioned on Tuesday, I’m dedicating 100-days worth of research sessions to explore “enterprise cloud computing considerations”. My first topic is adoption trends:

- What are organizations doing, or planning to do, with cloud computing?

- What types of cloud computing environments are being used, considered and/or ignored?

- What industries are leading and lagging adoption?

- What use cases are being fulfilled, in part or in full, by cloud computing offerings?

- What are the major drivers and expectations?

To get started, I’m surveying the cloud computing surveys. Certainly, I expect to see security noted as a big concern, and operating expense versus capital outlay as a driver. In fact, I’ll cover each of those points in later days, as business risk and economic considerations, respectively. What I’m looking for in my survey of the surveys are observations, trends and even predictions, beyond the headlines.

Brenda continues with a list of the surveys/papers she’ll use “[Written hours later.]”

Jon Brodkin asserts “Shift to cloud will have broad impacts on hardware market and IT staffing” in his 20% of businesses will get rid of all IT assets as they move to cloud, Gartner predicts post of 1/14/2010 to NetworkWorld:

Cloud computing will become so pervasive that by 2012, one out of five businesses will own no IT assets at all, the analyst firm Gartner is predicting.

The shift toward cloud services hosted outside the enterprise's firewall will necessitate a major shift in the IT hardware markets, and shrink IT staff, Gartner said.

"The need for computing hardware, either in a data center or on an employee's desk, will not go away," Gartner said. "However, if the ownership of hardware shifts to third parties, then there will be major shifts throughout every facet of the IT hardware industry. For example, enterprise IT budgets will either be shrunk or reallocated to more-strategic projects; enterprise IT staff will either be reduced or reskilled to meet new requirements, and/or hardware distribution will have to change radically to meet the requirements of the new IT hardware buying points."

If Gartner is correct, the shift will have serious implications for IT professionals, but presumably many new jobs would be created in order to build the next wave of cloud services.

But it's not just cloud computing that is driving a movement toward "decreased IT hardware assets," in Gartner's words. Virtualization and employees running personal desktops and laptops on corporate networks are also reducing the need for company-owned hardware. …

My SLAs for Microsoft Public Clouds Go Private with an NDA post of 1/14/2010 describes Microsoft’s recent branding of Service Level Agreements for

- Windows Azure Compute

- Windows Azure Storage

- SQL Azure

- Windows Azure platform AppFabric Service Bus & Access Control

as “confidential information” and concludes:

The only motive I can attribute to Microsoft’s desire to seal the SLA terms is an attempt to prevent comparative reviews of SLAs for public cloud computing offerings. The NDA requirement will undoubtedly be an EPIC #FAIL and an embarrassment to the newly minted Server & Cloud Division.

I won’t be downloading the SLA documents until Microsoft removes the NDA requirement.

Microsoft’s Public Relations Team announced a New HP and Microsoft Agreement to Simplify Technology Environments in an IT Infrastructure Spotlight of 1/13/2010.

HP and Microsoft Corp. today announced an agreement to invest $250 million over the next three years to significantly simplify technology environments for businesses of all sizes. This agreement represents the industry’s most comprehensive technology stack integration to date — from infrastructure to application — and is intended to substantially improve the customer experience for developing, deploying and managing IT environments.

The press release features video segments by Microsoft and HP execs, links to related articles and blog posts, and featured tweets. The designated Twitter hashtag for the announcement is #HPMSFT.

• When I first heard about the HPMSFT agreement, I posted the following tweet:

Sounds to me like #HPMSFT will deliver the long-denied but recently-promised #Azure private cloud. MSFT's early excuse was non-std. hardware [8:45 AM Jan 13th from web].

My Private Azure Clouds Float the $250 Million HP/Microsoft Agreement of 1/13/2010 post of 1/15/2010 includes analysis of the agreement’s cloud computing aspects by Tim Anderson and Carl Brooks, as well as other private cloud proponents and naysayers.

Jim Nakashima describes Windows Azure - Resolving "The Path is too long after being fully qualified" Error Message in this 1/14/2010 post:

When you run a cloud service on the development fabric, the development fabric uses a temporary folder to store a number of files including local storage locations, cached binaries, configuration, diagnostics information and cached compiled web site content.

By default this location is: C:\Users\<username>\AppData\Local\dftmp

For the most part you won’t really care about this temporary folder; the Windows Azure Tools will periodically clean up the folder so it doesn’t get out of hand. …

There are some cases where the length of the path can cause problems.

If the combination of your username, cloud service project name, role name and assembly name gets long enough, you can run into assembly or file loading issues at runtime. In that case, you’ll get the following error message when you hit F5:

“The path is too long after being fully qualified. Make sure the full path is less than 260 characters and the directory name is less than 248 characters.”

For example, in my test, the path to one of the assemblies in my cloud service was:

C:\Users\jnak\AppData\Local\dftmp\s0\deployment(4)\res\deployment(4).CloudServiceabcdefghijklmnopqrstuvwxyzabcdefghijklmnopqr.WebRole1.0\AspNetTemp\aspNetTemp\root\aff90b31\aa373305\assembly\dl3\971d7b9b\0064bc6f_307dca01\Microsoft.WindowsAzure.Diagnostics.DLL

which exceeds the 260 character path limit.
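You can check a path against both limits in the error message before you hit F5. The Python sketch below measures Jim's example path, then the same path rebased onto a shorter C:\A state directory. The rebasing assumes the folders below dftmp keep the same names after the move, which may not hold exactly (the deployment counter, for example, can differ); `path_fits` is a hypothetical helper, not part of the Windows Azure Tools.

```python
import ntpath

# Limits quoted in the error message.
MAX_PATH_CHARS = 260  # full path must be shorter than this
MAX_DIR_CHARS = 248   # directory portion must be shorter than this


def path_fits(path: str) -> bool:
    """Return True if a Windows path satisfies both limits from the error message."""
    directory = ntpath.dirname(path)
    return len(path) < MAX_PATH_CHARS and len(directory) < MAX_DIR_CHARS


example = (r"C:\Users\jnak\AppData\Local\dftmp\s0\deployment(4)\res"
           r"\deployment(4).CloudServiceabcdefghijklmnopqrstuvwxyzabcdefghijklmnopqr.WebRole1.0"
           r"\AspNetTemp\aspNetTemp\root\aff90b31\aa373305\assembly\dl3\971d7b9b"
           r"\0064bc6f_307dca01\Microsoft.WindowsAzure.Diagnostics.DLL")

# Assumption: moving the state directory only replaces this default prefix.
default_root = r"C:\Users\jnak\AppData\Local\dftmp"
shorter = r"C:\A" + example[len(default_root):]

print(len(example), path_fits(example))
print(len(shorter), path_fits(shorter))
```

Trimming the 33-character default prefix to 4 characters saves 29 characters, which is enough to bring this particular path under the limit.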

If you aren’t married to your project and assembly names, you could give them shorter names.

The other workaround is to change the location of the development fabric temporary folder to be a shorter path.

You can do this by setting the _CSRUN_STATE_DIRECTORY environment variable to a shorter path, say “C:\A” for example.

Make sure that you close Visual Studio and shut down the development fabric by using the “csrun /devfabric:shutdown” command I mentioned above or by clicking “exit” on the Windows Azure tray icon.

After making this change, my sample above was able to run without problem.

I’ve encountered this problem occasionally and simply shortened the project and assembly names. However, Jim’s approach is easier.

Jackson Shaw asks Is Azure priced too high? and concludes “Microsoft’s pricing is out-of-whack” in this 1/14/2010 post:

A colleague at the office mentioned that over the holidays he helped build a custom application for a small business in his town and they made a startling discovery about Azure versus Google pricing. I did track down a blog post by Danny Tuppeny where this was talked about in more detail:

As a .NET developer, I was quite excited to hear about Windows Azure. It sounded like a less painful version of Amazon's EC2, supporting .NET (less painful in terms of server management!). When I saw the pricing, it didn't look too bad either. That was, until I realized that their "compute hour" referred to an hour of your app running, not an hour of actual CPU time. Wow. This changes things. To keep a single web role running, you're looking at $0.12/hour = $2.88/day = $20.16/week = $86.40/month. Anyone that's bought hosting for a small site/app recently will know that this is not particularly cheap! …
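Danny's arithmetic checks out. The point he makes is that compute is billed per running instance-hour, not per CPU-hour, so an idle role costs the same as a busy one. A quick Python check using the $0.12 rate he quotes and a 30-day month (my assumption); the `instances` parameter is illustrative:

```python
from decimal import Decimal

# Rate quoted in the post: $0.12 per compute (instance) hour.
RATE_PER_HOUR = Decimal("0.12")


def instance_cost(hours: int, instances: int = 1) -> Decimal:
    """Cost of keeping `instances` role instances running for `hours` wall-clock hours."""
    return RATE_PER_HOUR * hours * instances


for label, hours in [("day", 24), ("week", 24 * 7), ("30-day month", 24 * 30)]:
    print(f"per {label}: ${instance_cost(hours)}")
```

Using Decimal rather than float keeps the dollar amounts exact ($2.88, $20.16 and $86.40), and every additional instance multiplies the bill accordingly.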

Jeffrey Schwartz contributes an analysis of the agreement for Redmond Channel Partner in his Microsoft and HP Ink Cloud, Virtualization Pact article of 1/13/2010:

… "If you discard all the hyperbole, this is simply another announcement of bundled solutions and an initiative by two major players attempting to cover major holes in their offerings," said Richard Ptak, managing partner of IT advisory firm Ptak, Noel & Associates, in an e-mail.

Ballmer said the end goal of the pact is to co-develop and deliver cloud-based application and system architectures. "This is entirely cloud motivated," he said.

For Microsoft, the deal underscores its quest to offer Windows Azure and SQL Azure as private cloud offerings. The public versions of Azure went live this month but many larger enterprises are awaiting private and hybrid cloud implementations.

Early deliverables are not expected to be groundbreaking. Bob Muglia, president of Microsoft's server and tools business, said on the call that engineers from both companies are already working to integrate Microsoft's System Center Management platform and Hyper-V virtualization technology into HP's ProLiant Servers and Insight Manager management software. …

… While HP recently launched its own cloud initiative, [Redmonk analyst Michael] Coté said it doesn't have the cloud ecosystem that Microsoft has. Meanwhile, Microsoft can gain from HP's enterprise hardware, storage, networking and systems management expertise.

Key to the agreement will be the ability to marry both companies' systems management offerings, said Forrester Research analyst Glenn O'Donnell. "The fact that they're joining forces on that software isn't in and of itself unique. Both HP and Microsoft have built technology partnerships for their systems management software with other vendors," O'Donnell said. "What I think is notable with this is they are really tying this into turnkey packages of applications, where the systems and the management software that orchestrates everything happen under the covers." …

Mary Jo Foley chimes in with Microsoft, HP to unveil 'solutions built on new infrastructure-to-application model', which she started writing before the teleconference. Mary Jo provides the details of the agreement in an update at the end of her post.

James Urquhart helps with Understanding Infrastructure 2.0 in this 1/13/2010 post to C|Net News’ The Wisdom of Clouds blog:

In an interview this week, Greg Ness, a senior director at network automation vendor Infoblox, outlines the problems lurking in today's network architectures and processes in the face of dynamic distributed computing models like cloud computing and data center virtualization.

The interview focuses on the concepts behind Infrastructure 2.0, and how vendors and enterprises are working together to address the many opportunities and challenges they present.

Take a look at the core TCP/IP and Ethernet networks that we all use today, and how enterprise IT manages those services. Not long ago, I wrote an article that described how most corporations relied heavily on manual labor to manage everything from IP addresses and domain names to routing and switching configuration. At the time, I cited a survey that indicated that a full 63 percent of enterprises were still using spreadsheets to manage IP addresses. …

Eric Nelson’s Step by Step sign up for the MSDN Subscriber offer for the Windows Azure Platform post of 1/13/2010 is similar to my How to Create and Activate a New Windows Azure and SQL Azure Account or Upgrade an Existing Account post of 1/7/2010. However, Eric suggests:

Don’t rush to activate your Azure benefit if you don’t plan to start using Azure just yet!

because of the eight-month duration of the initial MSDN Premium benefit. The Azure Platform Introductory Special expires on 7/31/2010, about seven months after the Windows Azure Platform’s originally scheduled 1/1/2010 release to the Web. I expect the same to occur with the MSDN offer, regardless of when you sign up.

Eric’s Step by Step sign up for the 25 hour free Windows Azure Platform Introductory Special post of the same date covers the lesser offer.

Bill Lodin and the msdev.com Team updated the Virtual Lab: Windows Azure virtual lab on 1/6/2010 to reflect recent API changes:

Update: If you are returning to continue the Windows Azure virtual lab and you started it before January 6, 2010, please note that the lab has been updated to reflect recent API changes. The exercises as shown here reflect the latest SDK (November 2009).

In this virtual lab, you will create a fully functional Windows Azure application from scratch. In the process, you will become familiar with several important components of the Windows Azure architecture including Web Roles, Worker Roles, and Windows Azure storage. You will also learn how to integrate a Windows Azure application with Windows Live ID authentication, and you’ll learn how to store relational data in the cloud using SQL Azure.

Cloud Security and Governance

Mamoon Yunus asserts “IaaS vendors and Enterprise consumers share responsibilities for enabling Cloud Security” in his Strategies for Securing Enterprise-to-Cloud Communication analysis of 1/14/2010:

The Cloud Security Alliance (CSA) published Version 2.1 of its Guidance for Critical Areas of Focus in Cloud Computing with a significant and comprehensive set of recommendations that enterprises should incorporate within their security best practices if they are to use cloud computing in a meaningful way.

The Guidance provides broad recommendations for operational security concerns including application security, encryption & key management, and identity & access management. In this article, we will consider security implications of REST- and SOAP-based communication between consumers and, specifically, Infrastructure as a Service (IaaS) providers.

Cloud Application Security

Cloud application security requires looking at classic application security models and extending these models out to dynamic and multi-tenant architectures. While planning for cloud-based application security, DMZ-resident application security should be used as the starting point. DMZ-based security enforcement models should be extended to incorporate secure enterprise-to-cloud traffic management and operational control.

IaaS cloud providers design their management APIs to accommodate consumers with varying skill sets. Popular IaaS providers (Amazon EC2, Rackspace, Opsource, GoGrid) publish RESTful APIs to keep a low threshold of difficulty for a broad set of consumers that lack sophisticated SOAP-based communication stacks but can easily consume XML/JSON using RESTful interaction. Amazon EC2, however, also provides a sophisticated WSDL for SOAP-based interaction.

Although cloud providers have a greater responsibility of implementing extensive application security provisions while accommodating consumers of varying technical skills, cloud consumers also have to share the burden of risk mitigation by ensuring that their API calls into the cloud providers are secure and clean. The potential for a cascading effect in a shared, multi-tenant environment is high: a single poorly formed SOAP- or REST-based API request can cause a Denial of Service (DoS) attack potentially shutting down access to the cloud management APIs for many. …

Greg Shipley’s Navigating the Storm: Governance, Risk and Compliance in the Cloud Analytical Report of 12/3/2009 for InformationWeek (requires site registration) carries this abstract:

Cloud computing can save money, but it also provides IT groups with extra potential layers of abstraction, extremely complex interdependency models—and an unsettling level of uncertainty about where data goes, how it gets there and how protected it will be over time. As we discuss in this InformationWeek Analytics report, prudent IT teams will approach the decision with a focus on evaluating the true performance, cost and risk implications of embracing the cloud model to achieve a specific goal. In this report, we provide a guide to clarifying the cloud governance, risk management and compliance picture, along with analysis of how the nearly 550 business technology professionals who responded to our survey perceive cloud computing risks.

Greg Shipley is the chief technology officer for Chicago-based information security consultancy Neohapsis.

Cloud Computing Events

MSDN Events presents Take Your Applications Sky High with Cloud Computing and the Windows Azure Platform in Phoenix, AZ on 2/23/2010 1:00 PM to 5:00 PM MT:

Join your local MSDN Events team as we take a deep dive into cloud computing and the Windows Azure Platform. We’ll start with a developer-focused overview of this new platform and the cloud computing services that can be used either together or independently to build highly scalable applications. As the day unfolds, we’ll explore data storage, SQL Azure, and the basics of deployment with Windows Azure. Register today for these free, live sessions in your local area.

- SESSION 1: Overview of Cloud Computing and Windows Azure

- SESSION 2: Survey of Windows Azure Platform Storage Options

- SESSION 3: Going Live with your Azure Solution

Event ID: 1032438178 Register online or by phone.

Eric Nelson announces Windows Azure Accelerated Training Workshop March 30th in London:

I was just “reviewing” a Windows Azure Platform developer video from Adrian Jakeman of QA and spotted at the end that they had various Azure courses up and running or in development.

Given the video was excellent, Adrian is a top chap and QA consistently deliver great sessions, I felt I should point folks at:

Windows Azure Platform – Accelerated Training Workshop, March 30th in London, 2 days

This course is aimed at software developers with at least 6 months' practical experience using Visual Studio 2008 and C#, and who are familiar with Virtual PC 2007 or Windows Virtual PC. At the end of the workshop delegates will:

- Gain an understanding of the nature and purpose of the Windows Azure Platform

- Understand the role played by the following components:

  - Windows Azure

  - SQL Azure

  - .NET Services

Outline

- Module 1: Windows Azure Platform overview

- Module 2: Introduction to Windows Azure

- Module 3: Building services using Windows Azure

- Module 4: Windows Azure storage

- Module 5: Building applications using SQL Azure

- Module 6: Introduction to .NET Services

- Module 7: Building applications using the .NET Service Bus

Two other courses are “in development.”

Other Cloud Computing Platforms and Services

Bill McColl asserts “Your next steps are to Go Cloud and Go Parallel” in his Got Excel? Go Cloud in 2010 post of 1/14/2010:

At the recent Hadoop World conference, Doug Cutting, Hadoop Project Founder, remarked that "The Dream" was to provide non-programmers with the power of parallel cloud computing tools such as MapReduce and Hadoop, via simple, easy-to-use spreadsheet-like interfaces.

With Cloudcel, the non-programmers of the world (and the programmers too!) can "live that dream" today.

Not only can you develop and launch massively parallel MapReduce/Hadoop-style cloud computations, simply and seamlessly from within the standard Excel interface, you can also go way beyond tools such as Elastic MapReduce and Hadoop, developing and launching live, continuous, realtime stream processing applications in the cloud, from that same Excel interface.

So if you already have Excel, and also have growing volumes of data you need to handle, your next steps in 2010 are to Go Cloud and Go Parallel.

You'll be surprised how easy it is to get started, and amazed by how powerful it is, as soon as you begin exploiting the elastic scalability of the cloud.

Amazon Web Services’ AWS Newsletter - January 2010 of 1/14/2010 announces:

- Amazon CloudFront Streaming Now Available …

- Announcing Amazon EC2 Spot Instances …

- AWS Management Console Adds Support for Elastic Load Balancing …

- Amazon Virtual Private Cloud Enters Unlimited Beta …

- Job Opportunities at Amazon Web Services …

Rich Miller reports Amazon EC2 scalability issues in his Amazon: We Don’t Have Cloud Capacity Issues post of 1/14/2010 to Data Center Knowledge:

One of the key selling points for cloud computing is scalability: the ability to handle traffic spikes smoothly without the expense and hassle of adding more dedicated servers. But this week some users of Amazon EC2 are reporting that their apps on the cloud computing service are having problems scaling efficiently, and suggesting that this uneven performance could be due to capacity problems in Amazon’s data centers.

A chart from CloudKick looking at latency for resources running on Amazon EC2.

The reports emerge as rival services focus on Amazon’s performance in the battle for cloud computing mindshare and customers.

Amazon says that if customers are experiencing performance problems, it isn’t because EC2 is overloaded. “We do not have over-capacity issues,” said Amazon spokesperson Kay Kinton. “When customers report a problem they are having, we take it very seriously. Sometimes this means working with customers to tweak their configurations or it could mean making modifications in our services to assure maximum performance.” …

Eugenio Pace’s ADFS / WIF on Amazon EC2 post of 1/12/2010 begins:

Steve Riley’s post on growing interest from Amazon customers in identity federation is consistent with what I hear from our own customers.

As part of our project, we actually tested the two scenarios he describes on EC2:

“I imagine most scenarios involve applications on Amazon EC2 instances obtaining tokens from an ADFS server located inside your corporate network. This makes sense when your users are in your own domains and the applications running on Amazon EC2 are yours.”

This would be exactly Scenario #1 in the guide, hosting the a-Expense sample on EC2.

The guide covers a similar deployment on Windows Azure.

Larry Dignan asks Salesforce raises $500 million; Time to go shopping? in this 1/11/2010 post to ZDNet’s Between the Lines blog:

Salesforce.com doesn’t need the cash, but on Monday said it would float a $500 million convertible bond offering.

The convertible bonds are due in 2015, and Salesforce.com will enter into hedge transactions to minimize future dilution for shareholders.

Now Salesforce.com may just be taking advantage of low rates, but whenever a company with cash floats debt the natural question is: What for?

The official line (statement): Salesforce.com will use the proceeds for “general corporate purposes, including funding possible investments in, or acquisitions of, complementary businesses, joint ventures, services or technologies, working capital and capital expenditures.”

The unofficial line: Expect Salesforce.com to go shopping. As of Oct. 31, Salesforce.com had $1.07 billion in total cash and marketable securities.

Jason Ouellette offers An Introduction to Force.com, subtitled “An infrastructure for building business apps, delivered to you as a service,” in this 1/8/2010 article for Dr. Dobb’s Journal:

… Force.com is different from other PaaS solutions in its focus on business applications. Force.com is a part of Salesforce.com, which started as a SaaS Customer Relationship Management (CRM) vendor. But Force.com is unrelated to CRM. It provides the infrastructure commonly needed for any business application, customizable for the unique requirements of each business through a combination of code and configuration. This infrastructure is delivered to you as a service on the Internet.

Companies like Amazon, Microsoft, and Google provide PaaS products. In this article I introduce the mainstream PaaS products and include brief descriptions of their functionality. Consult the Web sites of each company for further information. …